Apache Atlas Metadata Management: Building and Deploying in Embedded Mode, with a Record of the Errors Encountered

2022-06-23 03:52:00 【韧小钊】

Atlas

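Overview

Atlas is a scalable and extensible set of core foundational governance services that lets enterprises meet their compliance requirements within Hadoop effectively and efficiently, and that integrates with the wider enterprise data ecosystem.

Apache Atlas provides open metadata management and governance capabilities that organizations can use to build a catalog of their data assets, classify and govern those assets, and give data scientists, analysts, and data governance teams a way to collaborate around them.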

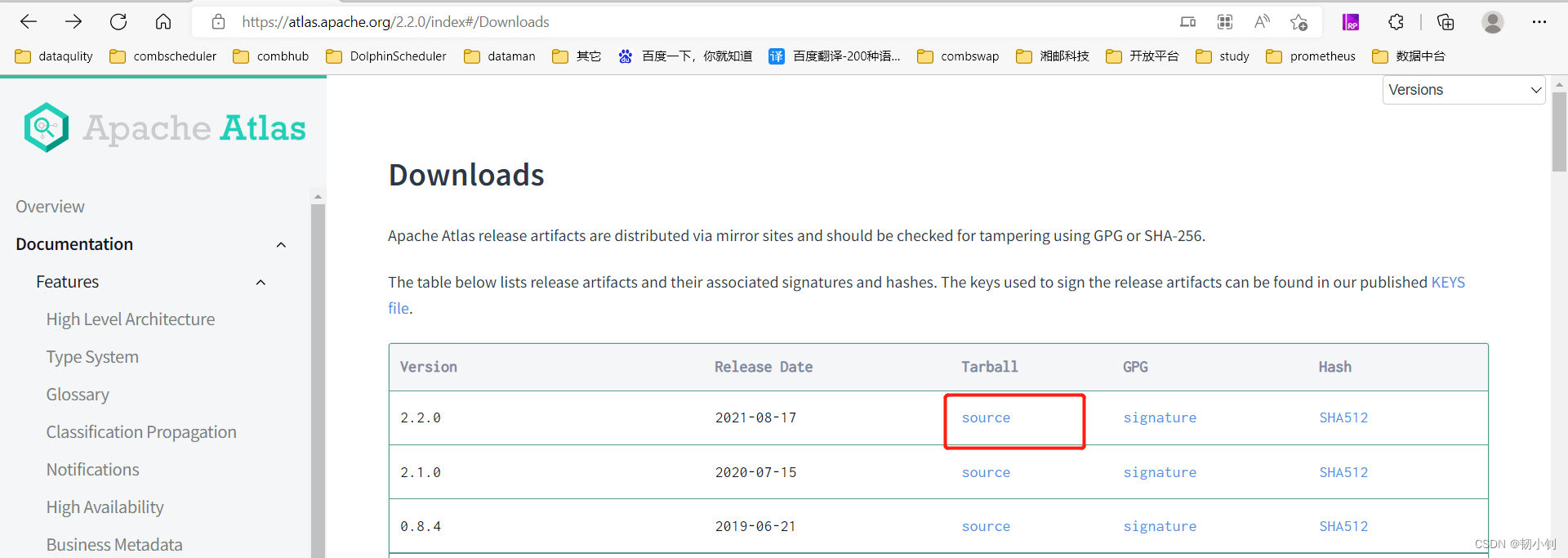

Download

Unpack, import, and build

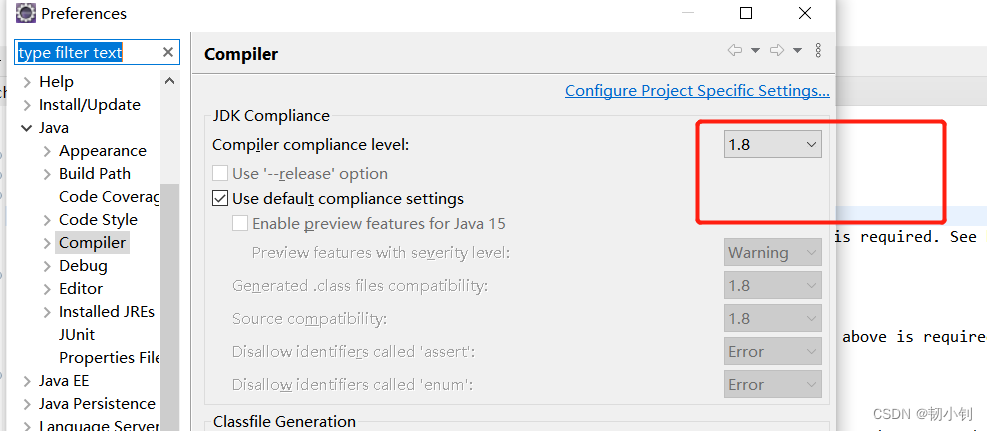

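- Build from Eclipse

Build command (Maven goals):

clean install -Prelease -Pdist,embedded-hbase-solr -Dcheckstyle.skip=true -Dmaven.test.skip=true -Dmaven.javadoc.skip=true

Note: for an embedded deployment, Atlas must be built with the embedded-hbase-solr profile. Without it the embedded components are not packaged, startup in embedded mode fails, and the verification request returns HTTP 503.

- Build finished

- Build output files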

Deployment

Create the deployment user

Run the following as root:

useradd atlas -d /home/atlas

passwd atlas

Changing password for user atlas.

New password:

BAD PASSWORD: The password is shorter than 8 characters

Retype new password:

passwd: all authentication tokens updated successfully.

echo 'atlas ALL=(ALL) NOPASSWD: ALL' >> /etc/sudoers

su - atlas

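Installation

Upload the server package to the server

- Unpack

tar xf apache-atlas-2.2.0-server.tar.gz

Edit the configuration files

An embedded deployment needs no configuration changes; everything is generated automatically, and Atlas can be started right after unpacking.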

atlas-env.sh

Point Atlas at the HBase configuration directory:

# rxz 20220527 add

export HBASE_CONF_DIR=/home/atlas/apache-atlas-2.2.0/conf/hbase

Starting and stopping Atlas

- Set the environment variables

export MANAGE_LOCAL_HBASE=true

export MANAGE_LOCAL_SOLR=true

- Start

bin/atlas_start.py

- Stop

bin/atlas_stop.py

atlas-application.properties

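This file configures the addresses of the components Atlas connects to.

Login verification

- Verify from the command line

curl -u admin:admin http://localhost:21000/api/atlas/admin/version

- Verify in a browser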

http://192.168.56.10:21000/

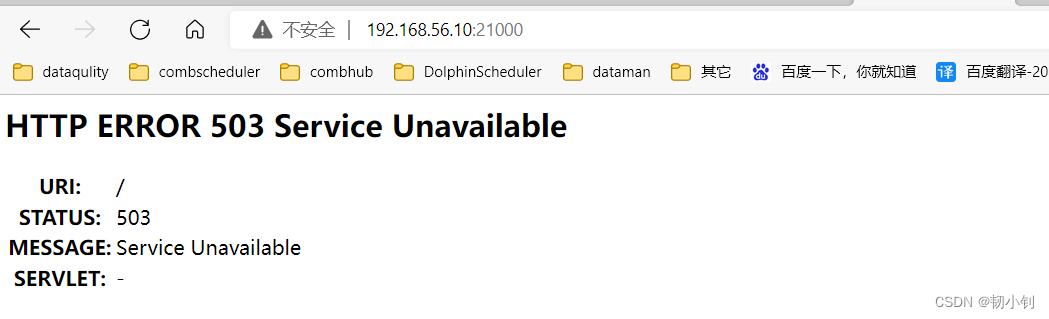
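Atlas can take a while to come up after bin/atlas_start.py returns, so a single curl right after startup may report a failure that is only temporary. The following is a minimal sketch of a polling helper built on the curl check above. The function name wait_for_atlas and the retry counts are assumptions made for illustration; the endpoint and the admin:admin credentials are the ones used in this post.

```shell
# Hypothetical helper: poll the Atlas version endpoint until it answers
# HTTP 200 or the attempts run out.
# Arguments: base URL, user:password, max attempts, seconds between attempts.
wait_for_atlas() {
    base_url="$1"
    credentials="$2"
    attempts="${3:-30}"
    delay="${4:-10}"
    i=1
    while [ "$i" -le "$attempts" ]; do
        # -w '%{http_code}' prints only the status code (000 if no connection)
        code=$(curl -s -o /dev/null -w '%{http_code}' -u "$credentials" \
            "$base_url/api/atlas/admin/version")
        if [ "$code" = "200" ]; then
            echo "Atlas is up after $i attempt(s)"
            return 0
        fi
        echo "attempt $i: HTTP $code, retrying in ${delay}s"
        sleep "$delay"
        i=$((i + 1))
    done
    echo "Atlas did not answer 200 after $attempts attempts" >&2
    return 1
}

# Usage:
#   wait_for_atlas http://localhost:21000 admin:admin
```

A persistent 503 after all attempts, as in the next section, points to a startup failure rather than a slow start, and the logs are the place to look.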

HTTP ERROR 503

Check the logs

- atlas.20220527-141555.err

cat atlas.20220527-141555.err

Exception in thread "main" org.apache.atlas.exception.AtlasBaseException: EmbeddedServer.Start: failed!

at org.apache.atlas.web.service.EmbeddedServer.start(EmbeddedServer.java:116)

at org.apache.atlas.Atlas.main(Atlas.java:133)

Caused by: java.lang.NullPointerException

at org.apache.atlas.util.BeanUtil.getBean(BeanUtil.java:36)

at org.apache.atlas.web.service.EmbeddedServer.auditServerStatus(EmbeddedServer.java:129)

at org.apache.atlas.web.service.EmbeddedServer.start(EmbeddedServer.java:112)

... 1 more

- application.log

tail -50f application.log

at org.eclipse.jetty.webapp.WebAppContext.startWebapp(WebAppContext.java:1445)

at org.eclipse.jetty.webapp.WebAppContext.startContext(WebAppContext.java:1409)

at org.eclipse.jetty.server.handler.ContextHandler.doStart(ContextHandler.java:855)

at org.eclipse.jetty.servlet.ServletContextHandler.doStart(ServletContextHandler.java:275)

at org.eclipse.jetty.webapp.WebAppContext.doStart(WebAppContext.java:524)

at org.eclipse.jetty.util.component.AbstractLifeCycle.start(AbstractLifeCycle.java:72)

at org.eclipse.jetty.util.component.ContainerLifeCycle.start(ContainerLifeCycle.java:169)

at org.eclipse.jetty.server.Server.start(Server.java:408)

at org.eclipse.jetty.util.component.ContainerLifeCycle.doStart(ContainerLifeCycle.java:110)

at org.eclipse.jetty.server.handler.AbstractHandler.doStart(AbstractHandler.java:97)

at org.eclipse.jetty.server.Server.doStart(Server.java:372)

at org.eclipse.jetty.util.component.AbstractLifeCycle.start(AbstractLifeCycle.java:72)

at org.apache.atlas.web.service.EmbeddedServer.start(EmbeddedServer.java:110)

at org.apache.atlas.Atlas.main(Atlas.java:133)

Caused by: java.lang.reflect.InvocationTargetException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.janusgraph.util.system.ConfigurationUtil.instantiate(ConfigurationUtil.java:58)

... 97 more

Caused by: org.janusgraph.diskstorage.PermanentBackendException: Permanent failure in storage backend

at org.janusgraph.diskstorage.hbase2.HBaseStoreManager.<init>(HBaseStoreManager.java:316)

... 102 more

Caused by: java.io.IOException: java.lang.reflect.UndeclaredThrowableException

at org.apache.hadoop.hbase.client.ConnectionFactory.createConnection(ConnectionFactory.java:232)

at org.apache.hadoop.hbase.client.ConnectionFactory.createConnection(ConnectionFactory.java:128)

at org.janusgraph.diskstorage.hbase2.HBaseCompat2_0.createConnection(HBaseCompat2_0.java:46)

at org.janusgraph.diskstorage.hbase2.HBaseStoreManager.<init>(HBaseStoreManager.java:314)

... 102 more

Caused by: java.lang.reflect.UndeclaredThrowableException

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1861)

at org.apache.hadoop.hbase.security.User$SecureHadoopUser.runAs(User.java:347)

at org.apache.hadoop.hbase.client.ConnectionFactory.createConnection(ConnectionFactory.java:228)

... 105 more

Caused by: java.lang.reflect.InvocationTargetException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.hbase.client.ConnectionFactory.lambda$createConnection$0(ConnectionFactory.java:230)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1845)

... 107 more

Caused by: java.lang.NullPointerException

at org.apache.hadoop.hbase.client.ConnectionImplementation.close(ConnectionImplementation.java:2040)

at org.apache.hadoop.hbase.client.ConnectionImplementation.<init>(ConnectionImplementation.java:330)

... 115 more

2022-05-27 14:17:29,005 INFO - [main:] ~ Max retries exceeded. (AtlasGraphProvider:121)

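From the logs it looked as if the failure came from HBase not being installed. But the official documentation says embedded startup is supported without installing any extra components. The actual cause turned out to be that the build had not been run with the embedded profile.

Rebuild Atlas, start it, and verify

- Build

clean install -Prelease -Pdist,embedded-hbase-solr -Dcheckstyle.skip=true -Dmaven.test.skip=true -Dmaven.javadoc.skip=true

-P activates Maven profiles by profile id; multiple ids are separated by commas.

-D sets a property; the xxx.skip=true properties used here skip the corresponding build step (Checkstyle, tests, Javadoc).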
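To make the flag syntax concrete, here is a small sketch that assembles the Linux build command used later in this post from a comma-separated profile list. The function name atlas_mvn_cmd is made up for illustration; it only prints the command and does not run Maven.

```shell
# Hypothetical helper: print the Maven command for a given profile list.
# -P takes one or more profile ids separated by commas;
# -DskipTests sets a property that skips running the tests.
atlas_mvn_cmd() {
    profiles="$1"    # e.g. "dist,embedded-hbase-solr"
    echo "mvn clean -DskipTests package -P${profiles}"
}

atlas_mvn_cmd "dist,embedded-hbase-solr"
atlas_mvn_cmd "dist,berkeley-solr"
```

Note that -DskipTests still compiles the tests, whereas -Dmaven.test.skip=true, used in the Eclipse command, skips compiling them as well.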

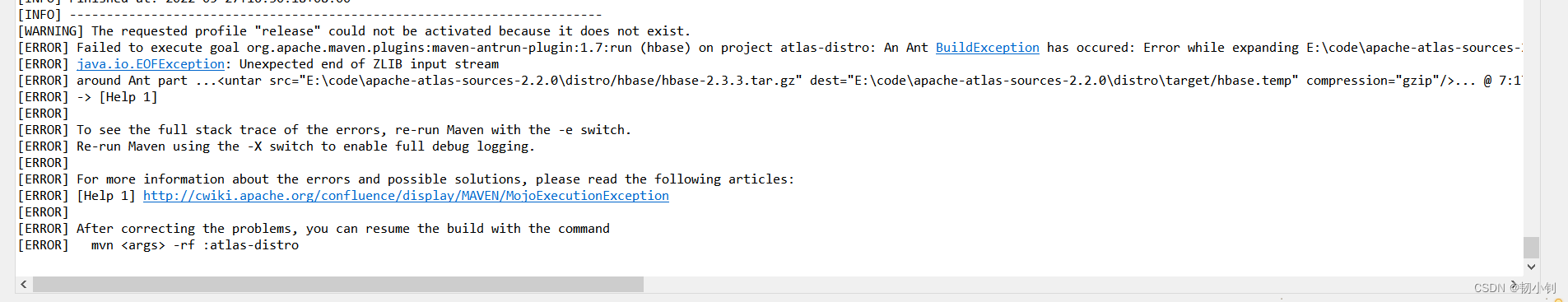

Build error: Unexpected end of ZLIB input stream (caused by a resource download timing out)

[WARNING] The requested profile "release" could not be activated because it does not exist.

[ERROR] Failed to execute goal org.apache.maven.plugins:maven-antrun-plugin:1.7:run (hbase) on project atlas-distro: An Ant BuildException has occured: Error while expanding E:\code\apache-atlas-sources-2.2.0\distro\hbase\hbase-2.3.3.tar.gz

[ERROR] java.io.EOFException: Unexpected end of ZLIB input stream

[ERROR] around Ant part ...<untar src="E:\code\apache-atlas-sources-2.2.0\distro/hbase/hbase-2.3.3.tar.gz" dest="E:\code\apache-atlas-sources-2.2.0\distro\target/hbase.temp" compression="gzip"/>... @ 7:170 in E:\code\apache-atlas-sources-2.2.0\distro\target\antrun\build-Download HBase.xml

[ERROR] -> [Help 1]

[ERROR]

[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.

[ERROR] Re-run Maven using the -X switch to enable full debug logging.

[ERROR]

[ERROR] For more information about the errors and possible solutions, please read the following articles:

[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/MojoExecutionException

[ERROR]

[ERROR] After correcting the problems, you can resume the build with the command

[ERROR] mvn <args> -rf :atlas-distro

At first I put this down to no JDK being specified for the build:

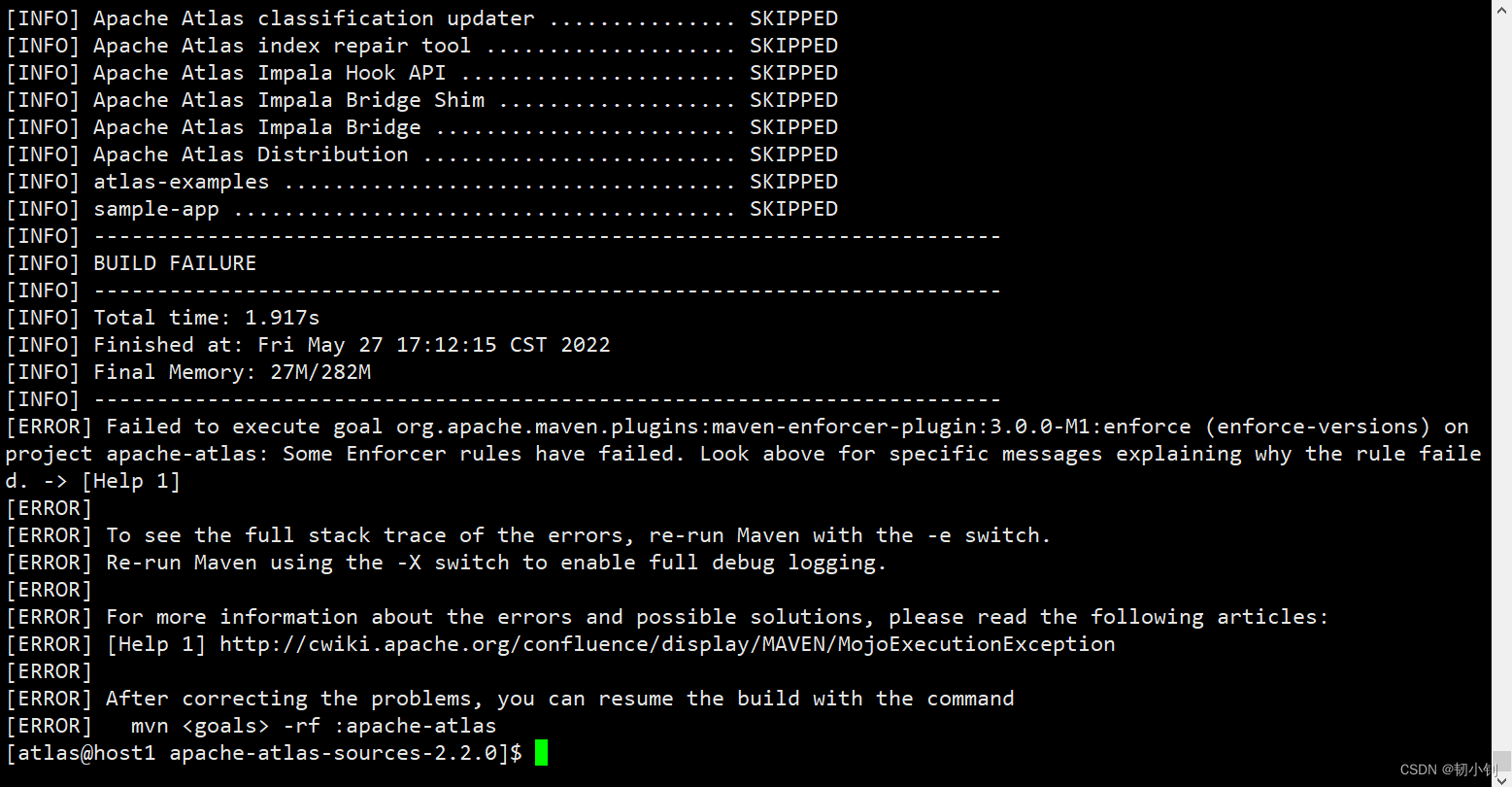

Build error: enforce (enforce-versions) on project apache-atlas (the Maven or JDK version does not meet the requirements)

[WARNING] The requested profile "release" could not be activated because it does not exist.

[ERROR] Failed to execute goal org.apache.maven.plugins:maven-enforcer-plugin:3.0.0-M1:enforce (enforce-versions) on project apache-atlas: Some Enforcer rules have failed. Look above for specific messages explaining why the rule failed. -> [Help 1]

[ERROR]

[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.

[ERROR] Re-run Maven using the -X switch to enable full debug logging.

[ERROR]

[ERROR] For more information about the errors and possible solutions, please read the following articles:

[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/MojoExecutionException

[ERROR]

[ERROR] After correcting the problems, you can resume the build with the command

[ERROR] mvn <args> -rf :apache-atlas

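A JDK was now specified, but the version installed on this machine still did not meet the requirement.

The specified JDK version was too old. (So how did the build get further earlier, when no JDK was specified? A default?)

- Install jdk1.8.151 (free download)

- As on Linux, the HBase download timed out; with only dist left in -P, the build succeeds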

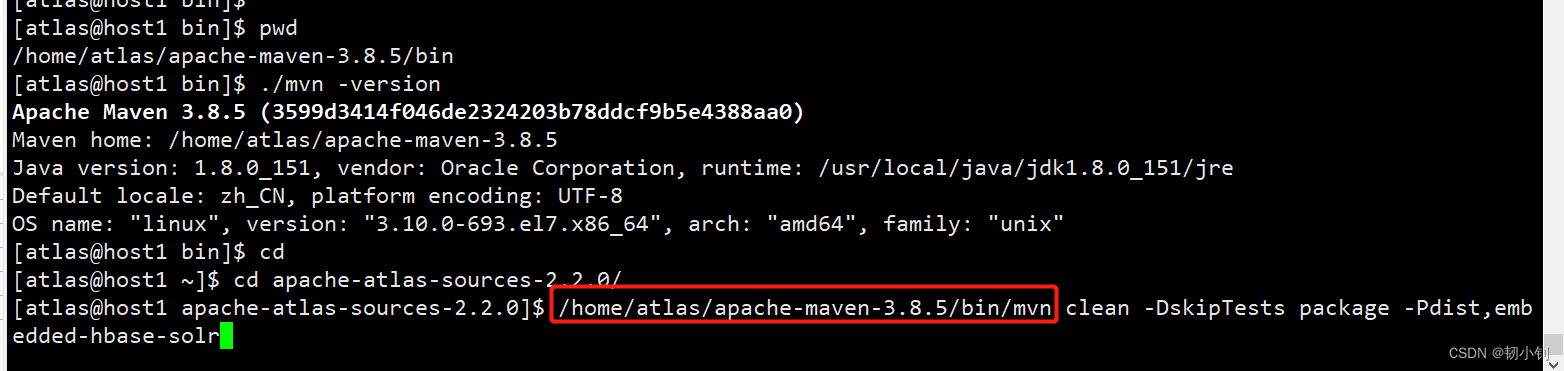

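Building on Linux

- The JDK installed on the virtual machine meets the requirement, so try building on Linux

mvn clean -DskipTests package -Pdist,embedded-hbase-solr

- Still fails, this time because the Maven version is too old

- So install Maven. At least that download is faster than the JDK one; the Oracle site would not even open

- Maven works straight after unpacking, so try the build again

/home/atlas/apache-maven-3.8.5/bin/mvn clean -DskipTests package -Pdist,embedded-hbase-solr

A long wait. Hopefully it finishes before 11 tonight, since I plan to go hiking tomorrow.

Stopped the build and uploaded the files from my local machine instead

- It failed; delete the offending jar, switch the mirror, and build again

Clean up

Switch the mirror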

<mirrors>

<mirror>

<id>alimaven</id>

<name>aliyun maven</name>

<url>http://maven.aliyun.com/nexus/content/groups/public/</url>

<mirrorOf>central</mirrorOf>

</mirror>

</mirrors>

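- Building... By now the 64-bit Windows jdk1.8.151 download had finished, so both builds ran in parallel

The download timed out and failed. It is just too slow...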

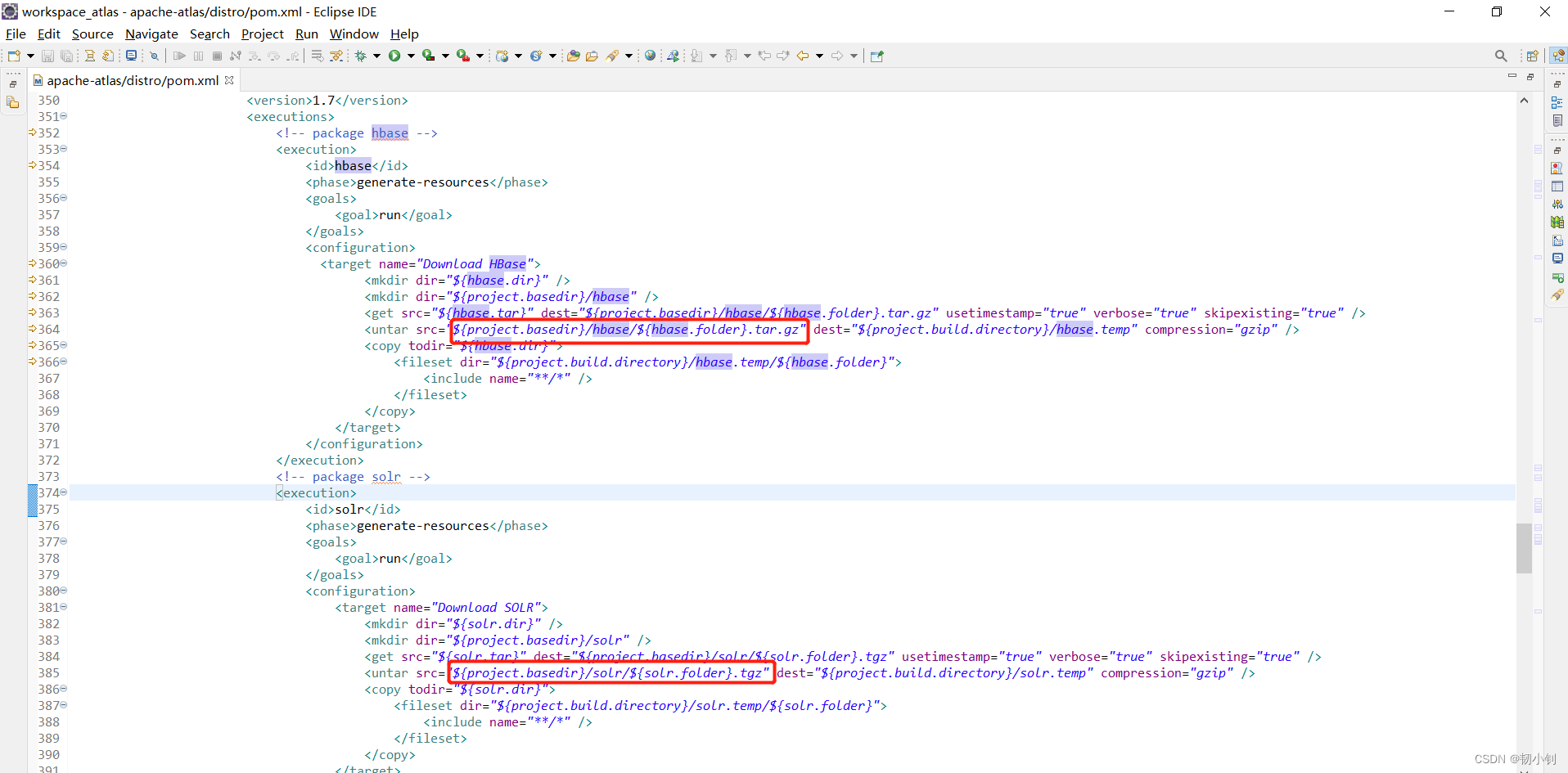

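Fixing the embedded build that keeps failing

Cause of the failure

The build downloads resource archives; when a download fails or times out, the build fails. In Eclipse this shows up as the stream error.

Manual download

Download the required tar archives offline first ("embedded"? Ha, it still has to download them).

Damn, it is past 1 a.m. again. To be continued next time.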

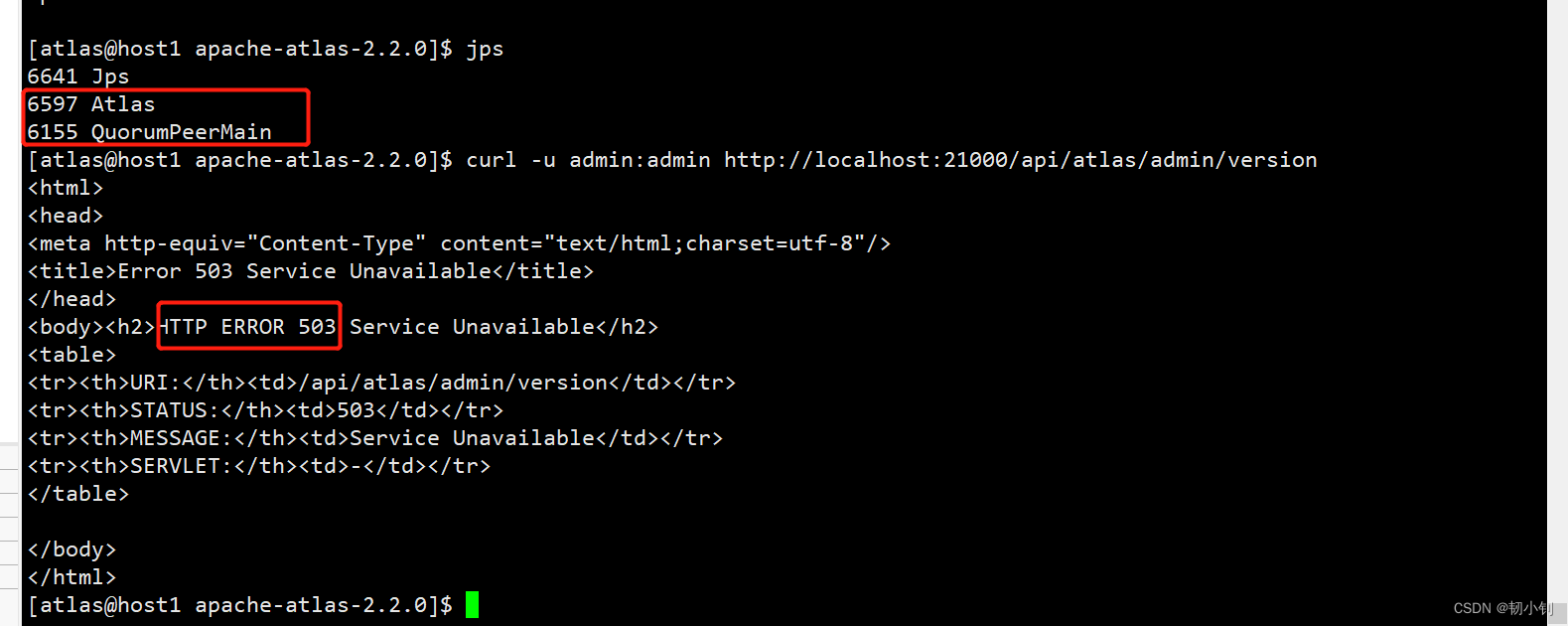

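Got up at 7 a.m. to carry on. The build succeeded and the application log showed no errors, but verification still returned 503. The err log suggested that ZooKeeper was not registered, so I added the ZooKeeper profile to the build. After starting Atlas there was an extra ZooKeeper process, but the error and the 503 remained. I left for the hike at 10 and came back at 4:30 p.m. to a blue screen. Once the machine was back up, all of this morning's installation notes were gone. So do I redo everything from this morning? It had not even worked! OK, rant over, back to it 🤯🤯🤯

Manual replacement

Upload the downloaded archives to the corresponding directory and rename them (or make a copy) to the file names that pom.xml expects.

project.basedir is the directory of the module in question; for distro that is /home/atlas/apache-atlas-sources-2.2.0/distro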
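Since the "Unexpected end of ZLIB input stream" error comes from a truncated archive, it helps to test an archive before handing it to the build. The sketch below uses gzip -t, which reads the whole compressed stream and fails if it ends early. The function name verify_tarball is hypothetical, and the example path in the usage comment is an assumption that combines the distro directory above with the hbase/hbase-2.3.3.tar.gz name from the build log.

```shell
# Hypothetical helper: check that a .tar.gz is a complete gzip stream.
# gzip -t decompresses to nowhere and returns non-zero on a truncated file.
verify_tarball() {
    archive="$1"
    if gzip -t "$archive" 2>/dev/null; then
        echo "OK: $archive"
    else
        echo "CORRUPT: $archive (delete it and download again)"
        return 1
    fi
}

# Usage (assumed path):
#   verify_tarball /home/atlas/apache-atlas-sources-2.2.0/distro/hbase/hbase-2.3.3.tar.gz
```

A leftover partial download in that directory is worth deleting before placing the manually downloaded copy, otherwise the build may try to unpack the broken file again.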

Build again

- Build command

/home/atlas/apache-maven-3.8.5/bin/mvn clean -DskipTests package -Pdist,embedded-hbase-solr

- During the build, the step that kept timing out is now skipped

- Build result: success at last

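Embedded startup

export MANAGE_LOCAL_HBASE=true

export MANAGE_LOCAL_SOLR=true

bin/atlas_start.py

Verification succeeds

- curl

curl -u admin:admin http://localhost:21000/api/atlas/admin/version

- Log in (admin/admin)

It worked, inexplicably, but I'll take it

Here is why it is inexplicable: I trusted the blog editor's autosave too much and lost the morning's notes, so I was simply repeating the morning's steps, and halfway through it just worked... What is that supposed to mean, pity for my efforts? WTF! I feel insulted... OK, since it works, I still need to find an explanation!!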

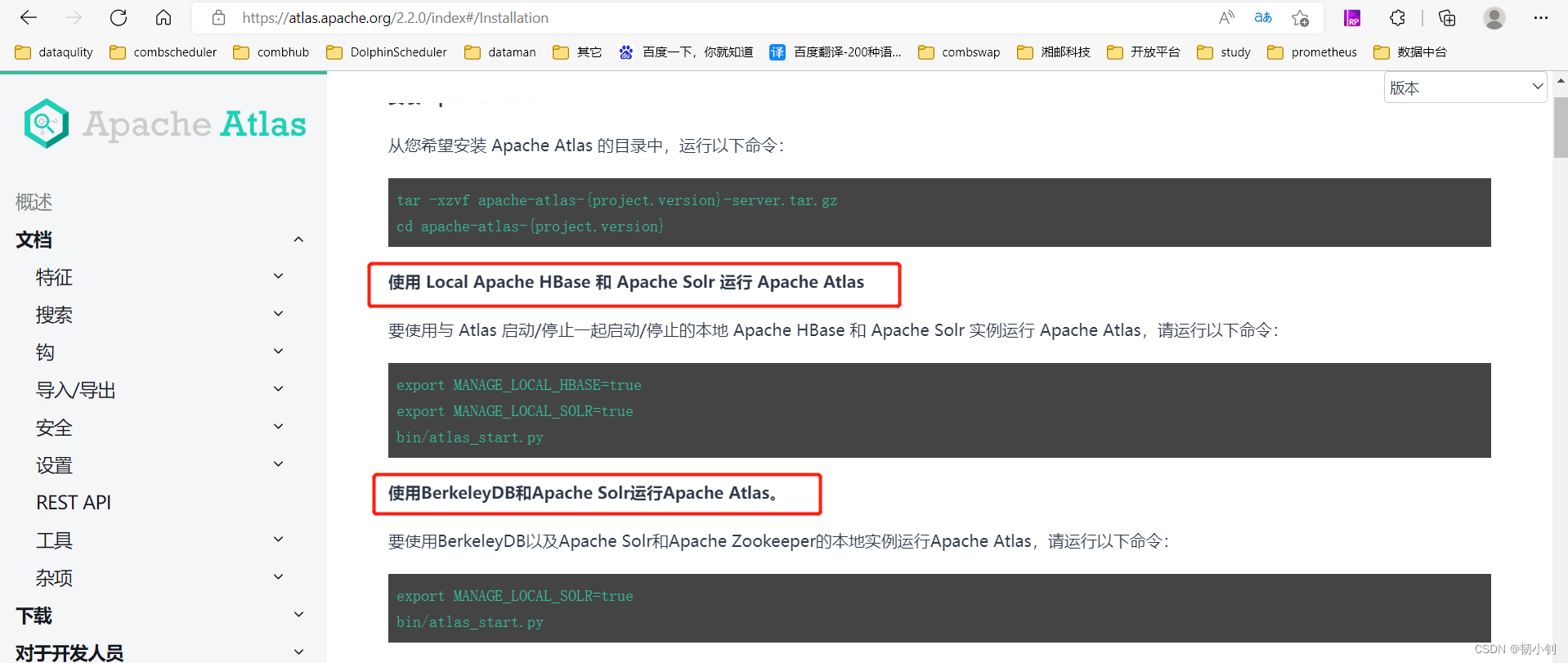

查看官网,通过本地组件去启动,两种方式:

内嵌式启动方式一:hbase+solr

- 使用 Local Apache HBase 和 Apache Solr 运行 Apache Atlas

export MANAGE_LOCAL_HBASE=true

export MANAGE_LOCAL_SOLR=true

bin/atlas_start.py

编译需要指定的profile:

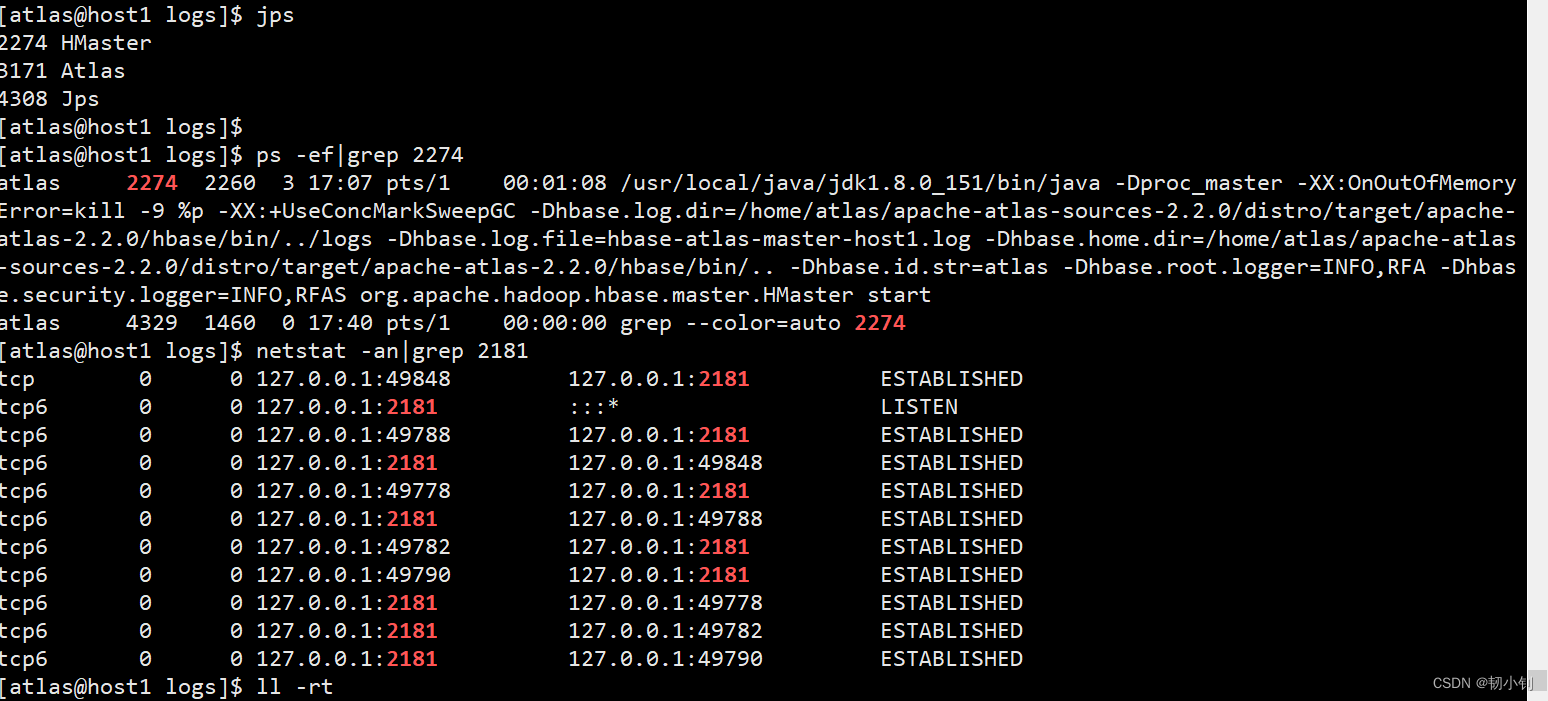

-Pdist,embedded-hbase-solr启动成功后显示的进程有Atlas和HMaster(上午测试的时候只有Atlas进程)

虽然没有zookeeper进程,但是2181端口是有的,是巧合还是内置呢?
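Whether something is listening on port 2181 can be checked from the shell without extra tools. This is a minimal sketch that relies on bash's /dev/tcp pseudo-device, so it assumes bash rather than plain sh; the function name port_open is made up for illustration.

```shell
# Hypothetical helper (bash only): succeed if a TCP connection to
# host:port can be opened, fail otherwise.
port_open() {
    host="$1"
    port="$2"
    # Opening /dev/tcp/HOST/PORT makes bash attempt a TCP connect;
    # the subshell keeps the descriptor from leaking into the caller.
    (: < "/dev/tcp/$host/$port") 2>/dev/null
}

# Usage:
#   if port_open localhost 2181; then echo "2181 is open"; else echo "2181 is closed"; fi
```

This only shows that the port accepts connections, not which process owns it.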

Application log (in the morning's test application.log showed no errors either, but it stopped just above the red line):

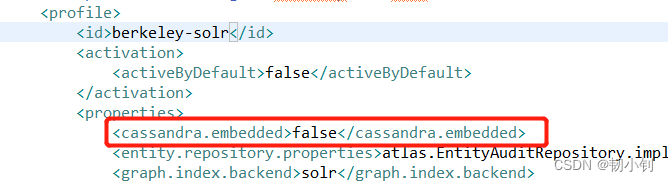

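Embedded startup mode 2: BerkeleyDB, Solr + ZooKeeper

- Run Apache Atlas with BerkeleyDB and Apache Solr

export MANAGE_LOCAL_SOLR=true

bin/atlas_start.py

- Profile required at build time:

-Pdist,embedded-cassandra-solr (my first assumption, which turned out to be wrong)

-Pdist,berkeley-solr (the right one, found by trial and error)

In the morning, when verification failed, the err log said that a ZooKeeper node did not exist. So I tried building with -Pdist,embedded-hbase-solr,embedded-cassandra-solr. The effect was that an extra QuorumPeerMain process appeared after starting Atlas, but verification still failed and the error in the err log did not change. Judging by the official documentation the extra profile should not be needed anyway, since the two profiles correspond to two different startup modes. But that is what experimenting is: taking detours.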
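The pairing of build profile and startup variables for the two embedded modes can be summarized in a small lookup. This is only a sketch of what this post describes; the function name atlas_embedded_env is hypothetical and not part of Atlas.

```shell
# Hypothetical helper: print the export lines that go with a build profile,
# as described in this post for the two embedded startup modes.
atlas_embedded_env() {
    case "$1" in
        embedded-hbase-solr)
            # Mode 1: local HBase + local Solr
            echo "export MANAGE_LOCAL_HBASE=true"
            echo "export MANAGE_LOCAL_SOLR=true"
            ;;
        berkeley-solr)
            # Mode 2: BerkeleyDB + local Solr
            echo "export MANAGE_LOCAL_SOLR=true"
            ;;
        *)
            echo "unknown profile: $1" >&2
            return 1
            ;;
    esac
}

# Usage, from the Atlas install directory:
#   eval "$(atlas_embedded_env berkeley-solr)" && bin/atlas_start.py
```

The point of keeping the two together is that the package must have been built with the profile that matches the variables set at startup.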

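Build

The ZooKeeper package is small, so the build can download it directly; there is no need to fetch it by hand, drop it into the right directory, and rename it. One last try. Having come this far, I would feel bad not trying every option (serves me right if I lose hair over it)!

- Build

Stop the processes, empty the target directory, and run the build

- Build progress (the ZooKeeper archive is already there, proof of sorts of the morning's test)

- Build result

Start

export MANAGE_LOCAL_SOLR=true

bin/atlas_start.py

Verification fails

- HTTP ERROR 503

- Same as embedded mode 1 this morning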

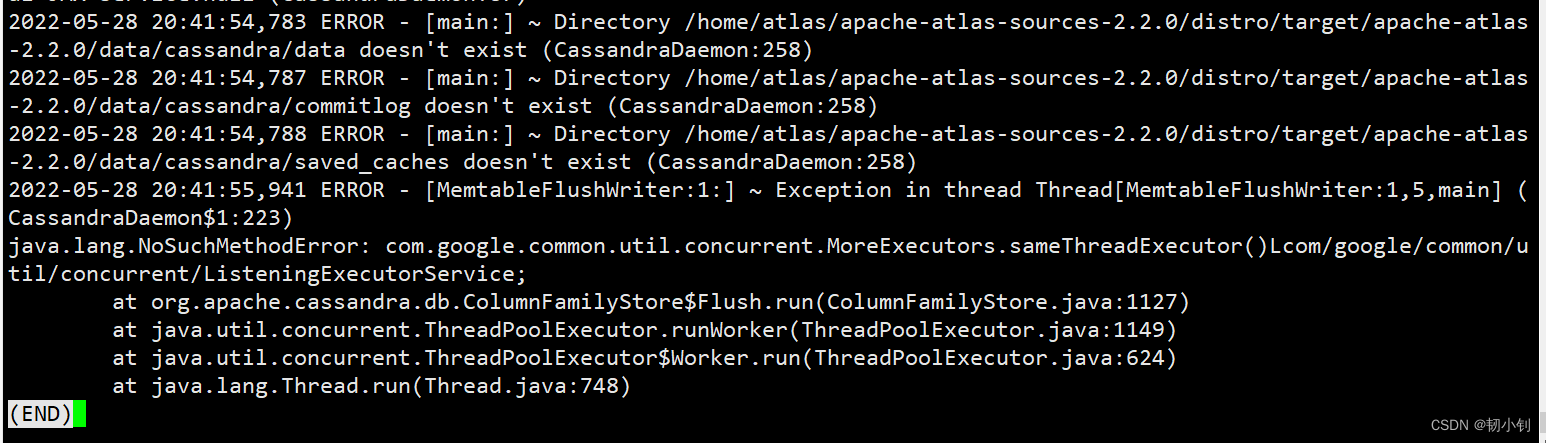

Error:

The application log stops and goes no further:

- Check the out log:

java.lang.NoSuchMethodError: com.google.common.base.Preconditions.checkArgument

Replacing guava-19.0.jar with guava-25.1-jre.jar resolves this particular error
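A NoSuchMethodError on a Guava class usually means that an older Guava jar is being loaded than the calling code was compiled against. Before swapping jars it helps to see which Guava versions are present in the installation. A minimal sketch, with a made-up function name and the install path from this post in the usage comment:

```shell
# Hypothetical helper: list every Guava jar under a directory tree so that
# mismatched versions (for example 19.0 and 25.1-jre) are easy to spot.
list_guava_jars() {
    find "$1" -type f -name 'guava-*.jar' | sort
}

# Usage:
#   list_guava_jars /home/atlas/apache-atlas-2.2.0
```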

Rebuild with berkeley-solr added

This feels like grasping at straws: judging by pom.xml, berkeley-solr and embedded-cassandra-solr are mutually exclusive. One last try; I don't have that much hair left to spare.

- Build (run from the apache-atlas-sources-2.2.0 directory)

/home/atlas/apache-maven-3.8.5/bin/mvn clean -DskipTests package -Pdist,berkeley-solr,embedded-cassandra-solr

- Mutually exclusive or not, the build result is unaffected

- The last attempt (run from the apache-atlas-2.2.0 install directory)

cp solr/server/solr-webapp/webapp/WEB-INF/lib/guava-25.1-jre.jar server/webapp/atlas/WEB-INF/lib/

cp server/webapp/atlas/WEB-INF/lib/curator-client-4.3.0.jar solr/server/solr-webapp/webapp/WEB-INF/lib/

mv server/webapp/atlas/WEB-INF/lib/guava-19.0.jar /tmp/

mv solr/server/solr-webapp/webapp/WEB-INF/lib/curator-client-2.13.0.jar /tmp/

Still a class-not-found error; after swapping the jars, a different class is reported:

Removing the jar in question makes no difference; the same error appears:

- The log says a directory does not exist, although it does. A permissions problem? Try starting with sudo. Really the last time (failed...)

- One last build, without the embedded... profile (the official site says BerkeleyDB, Solr + ZooKeeper; was I off track by fixating on the keyword "embedded"?)

/home/atlas/apache-maven-3.8.5/bin/mvn clean -DskipTests package -Pdist,berkeley-solr

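A pile of errors... Let's leave it at that. Cherish life and stay away from, well, what exactly? I won't even write a summary. I work on metadata management at my job and wondered whether there was something to borrow here, and it turned into this mess... Besides, Atlas feels like it is made for the Hadoop ecosystem, while the metadata management I work on targets relational databases... That's it, I'm tired...

Two days later I found that the build above (-Pdist,berkeley-solr) is correct: Atlas starts and I can log in!!!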

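Embedded startup mode 2, round two

Picking up where the previous part left off: embedded startup mode 2 (BerkeleyDB, Solr + ZooKeeper) had never been verified successfully. Today I try again to see whether it can be made to work.

Log analysis

Part of the error log from the last attempt in the previous part: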

2022-05-28 21:28:54,433 WARN - [main:] ~ JanusGraphException: Could not execute operation due to backend exception (AtlasJanusGraphDatabase:184)

2022-05-28 21:28:54,433 WARN - [main:] ~ Failed to obtain graph instance on attempt 3 of 3 (AtlasGraphProvider:118)

java.lang.RuntimeException: org.janusgraph.core.JanusGraphException: Could not execute operation due to backend exception

at org.apache.atlas.repository.graphdb.janus.AtlasJanusGraphDatabase.initJanusGraph(AtlasJanusGraphDatabase.java:190)

at org.apache.atlas.repository.graphdb.janus.AtlasJanusGraphDatabase.getGraphInstance(AtlasJanusGraphDatabase.java:169)

at org.apache.atlas.repository.graphdb.janus.AtlasJanusGraphDatabase.getGraph(AtlasJanusGraphDatabase.java:278)

at org.apache.atlas.repository.graph.AtlasGraphProvider.getGraphInstance(AtlasGraphProvider.java:52)

at org.apache.atlas.repository.graph.AtlasGraphProvider.retry(AtlasGraphProvider.java:114)

at org.apache.atlas.repository.graph.AtlasGraphProvider.get(AtlasGraphProvider.java:102)

at org.apache.atlas.repository.graph.AtlasGraphProvider$$EnhancerBySpringCGLIB$$865b0924.CGLIB$get$1(<generated>)

at org.apache.atlas.repository.graph.AtlasGraphProvider$$EnhancerBySpringCGLIB$$865b0924$$FastClassBySpringCGLIB$$595a0c30.invoke(<generated>)

at org.springframework.cglib.proxy.MethodProxy.invokeSuper(MethodProxy.java:228)

at org.springframework.context.annotation.ConfigurationClassEnhancer$BeanMethodInterceptor.intercept(ConfigurationClassEnhancer.java:358)

at org.apache.atlas.repository.graph.AtlasGraphProvider$$EnhancerBySpringCGLIB$$865b0924.get(<generated>)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.springframework.beans.factory.support.SimpleInstantiationStrategy.instantiate(SimpleInstantiationStrategy.java:162)

at org.springframework.beans.factory.support.ConstructorResolver.instantiateUsingFactoryMethod(ConstructorResolver.java:588)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.instantiateUsingFactoryMethod(AbstractAutowireCapableBeanFactory.java:1176)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.createBeanInstance(AbstractAutowireCapableBeanFactory.java:1071)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.doCreateBean(AbstractAutowireCapableBeanFactory.java:511)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.createBean(AbstractAutowireCapableBeanFactory.java:481)

at org.springframework.beans.factory.support.AbstractBeanFactory$1.getObject(AbstractBeanFactory.java:312)

at org.springframework.beans.factory.support.DefaultSingletonBeanRegistry.getSingleton(DefaultSingletonBeanRegistry.java:230)

at org.springframework.beans.factory.support.AbstractBeanFactory.doGetBean(AbstractBeanFactory.java:308)

at org.springframework.beans.factory.support.AbstractBeanFactory.getBean(AbstractBeanFactory.java:202)

at org.springframework.beans.factory.config.DependencyDescriptor.resolveCandidate(DependencyDescriptor.java:211)

at org.springframework.beans.factory.support.DefaultListableBeanFactory.doResolveDependency(DefaultListableBeanFactory.java:1134)

at org.springframework.beans.factory.support.DefaultListableBeanFactory.resolveDependency(DefaultListableBeanFactory.java:1062)

at org.springframework.beans.factory.support.ConstructorResolver.resolveAutowiredArgument(ConstructorResolver.java:835)

at org.springframework.beans.factory.support.ConstructorResolver.createArgumentArray(ConstructorResolver.java:741)

at org.springframework.beans.factory.support.ConstructorResolver.autowireConstructor(ConstructorResolver.java:189)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.autowireConstructor(AbstractAutowireCapableBeanFactory.java:1196)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.createBeanInstance(AbstractAutowireCapableBeanFactory.java:1098)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.doCreateBean(AbstractAutowireCapableBeanFactory.java:511)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.createBean(AbstractAutowireCapableBeanFactory.java:481)

at org.springframework.beans.factory.support.AbstractBeanFactory$1.getObject(AbstractBeanFactory.java:312)

at org.springframework.beans.factory.support.DefaultSingletonBeanRegistry.getSingleton(DefaultSingletonBeanRegistry.java:230)

at org.springframework.beans.factory.support.AbstractBeanFactory.doGetBean(AbstractBeanFactory.java:308)

at org.springframework.beans.factory.support.AbstractBeanFactory.getBean(AbstractBeanFactory.java:202)

at org.springframework.beans.factory.config.DependencyDescriptor.resolveCandidate(DependencyDescriptor.java:211)

at org.springframework.beans.factory.support.DefaultListableBeanFactory.doResolveDependency(DefaultListableBeanFactory.java:1134)

at org.springframework.beans.factory.support.DefaultListableBeanFactory.resolveDependency(DefaultListableBeanFactory.java:1062)

at org.springframework.beans.factory.support.ConstructorResolver.resolveAutowiredArgument(ConstructorResolver.java:835)

at org.springframework.beans.factory.support.ConstructorResolver.createArgumentArray(ConstructorResolver.java:741)

at org.springframework.beans.factory.support.ConstructorResolver.autowireConstructor(ConstructorResolver.java:189)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.autowireConstructor(AbstractAutowireCapableBeanFactory.java:1196)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.createBeanInstance(AbstractAutowireCapableBeanFactory.java:1098)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.doCreateBean(AbstractAutowireCapableBeanFactory.java:511)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.createBean(AbstractAutowireCapableBeanFactory.java:481)

at org.springframework.beans.factory.support.AbstractBeanFactory$1.getObject(AbstractBeanFactory.java:312)

at org.springframework.beans.factory.support.DefaultSingletonBeanRegistry.getSingleton(DefaultSingletonBeanRegistry.java:230)

at org.springframework.beans.factory.support.AbstractBeanFactory.doGetBean(AbstractBeanFactory.java:308)

at org.springframework.beans.factory.support.AbstractBeanFactory.getBean(AbstractBeanFactory.java:202)

at org.springframework.aop.framework.autoproxy.BeanFactoryAdvisorRetrievalHelper.findAdvisorBeans(BeanFactoryAdvisorRetrievalHelper.java:89)

at org.springframework.aop.framework.autoproxy.AbstractAdvisorAutoProxyCreator.findCandidateAdvisors(AbstractAdvisorAutoProxyCreator.java:102)

at org.springframework.aop.aspectj.annotation.AnnotationAwareAspectJAutoProxyCreator.findCandidateAdvisors(AnnotationAwareAspectJAutoProxyCreator.java:88)

at org.springframework.aop.aspectj.autoproxy.AspectJAwareAdvisorAutoProxyCreator.shouldSkip(AspectJAwareAdvisorAutoProxyCreator.java:103)

at org.springframework.aop.framework.autoproxy.AbstractAutoProxyCreator.postProcessBeforeInstantiation(AbstractAutoProxyCreator.java:245)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.applyBeanPostProcessorsBeforeInstantiation(AbstractAutowireCapableBeanFactory.java:1041)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.resolveBeforeInstantiation(AbstractAutowireCapableBeanFactory.java:1015)

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.createBean(AbstractAutowireCapableBeanFactory.java:471)

at org.springframework.beans.factory.support.AbstractBeanFactory$1.getObject(AbstractBeanFactory.java:312)

at org.springframework.beans.factory.support.DefaultSingletonBeanRegistry.getSingleton(DefaultSingletonBeanRegistry.java:230)

at org.springframework.beans.factory.support.AbstractBeanFactory.doGetBean(AbstractBeanFactory.java:308)

at org.springframework.beans.factory.support.AbstractBeanFactory.getBean(AbstractBeanFactory.java:197)

at org.springframework.beans.factory.support.DefaultListableBeanFactory.preInstantiateSingletons(DefaultListableBeanFactory.java:756)

at org.springframework.context.support.AbstractApplicationContext.finishBeanFactoryInitialization(AbstractApplicationContext.java:867)

at org.springframework.context.support.AbstractApplicationContext.refresh(AbstractApplicationContext.java:542)

at org.springframework.web.context.ContextLoader.configureAndRefreshWebApplicationContext(ContextLoader.java:443)

at org.springframework.web.context.ContextLoader.initWebApplicationContext(ContextLoader.java:325)

at org.springframework.web.context.ContextLoaderListener.contextInitialized(ContextLoaderListener.java:107)

at org.apache.atlas.web.setup.KerberosAwareListener.contextInitialized(KerberosAwareListener.java:31)

at org.eclipse.jetty.server.handler.ContextHandler.callContextInitialized(ContextHandler.java:1013)

at org.eclipse.jetty.servlet.ServletContextHandler.callContextInitialized(ServletContextHandler.java:553)

at org.eclipse.jetty.server.handler.ContextHandler.contextInitialized(ContextHandler.java:942)

at org.eclipse.jetty.servlet.ServletHandler.initialize(ServletHandler.java:782)

at org.eclipse.jetty.servlet.ServletContextHandler.startContext(ServletContextHandler.java:360)

at org.eclipse.jetty.webapp.WebAppContext.startWebapp(WebAppContext.java:1445)

at org.eclipse.jetty.webapp.WebAppContext.startContext(WebAppContext.java:1409)

at org.eclipse.jetty.server.handler.ContextHandler.doStart(ContextHandler.java:855)

at org.eclipse.jetty.servlet.ServletContextHandler.doStart(ServletContextHandler.java:275)

at org.eclipse.jetty.webapp.WebAppContext.doStart(WebAppContext.java:524)

at org.eclipse.jetty.util.component.AbstractLifeCycle.start(AbstractLifeCycle.java:72)

at org.eclipse.jetty.util.component.ContainerLifeCycle.start(ContainerLifeCycle.java:169)

at org.eclipse.jetty.server.Server.start(Server.java:408)

at org.eclipse.jetty.util.component.ContainerLifeCycle.doStart(ContainerLifeCycle.java:110)

at org.eclipse.jetty.server.handler.AbstractHandler.doStart(AbstractHandler.java:97)

at org.eclipse.jetty.server.Server.doStart(Server.java:372)

at org.eclipse.jetty.util.component.AbstractLifeCycle.start(AbstractLifeCycle.java:72)

at org.apache.atlas.web.service.EmbeddedServer.start(EmbeddedServer.java:110)

at org.apache.atlas.Atlas.main(Atlas.java:133)

Caused by: org.janusgraph.core.JanusGraphException: Could not execute operation due to backend exception

at org.janusgraph.diskstorage.util.BackendOperation.execute(BackendOperation.java:56)

at org.janusgraph.diskstorage.util.BackendOperation.execute(BackendOperation.java:158)

at org.janusgraph.diskstorage.configuration.backend.KCVSConfiguration.set(KCVSConfiguration.java:146)

at org.janusgraph.diskstorage.configuration.backend.KCVSConfiguration.set(KCVSConfiguration.java:123)

at org.janusgraph.diskstorage.configuration.ModifiableConfiguration.set(ModifiableConfiguration.java:43)

at org.janusgraph.diskstorage.configuration.ModifiableConfiguration.setAll(ModifiableConfiguration.java:50)

at org.janusgraph.diskstorage.configuration.builder.ReadConfigurationBuilder.buildGlobalConfiguration(ReadConfigurationBuilder.java:72)

at org.janusgraph.graphdb.configuration.builder.GraphDatabaseConfigurationBuilder.build(GraphDatabaseConfigurationBuilder.java:53)

at org.janusgraph.core.JanusGraphFactory.open(JanusGraphFactory.java:161)

at org.janusgraph.core.JanusGraphFactory.open(JanusGraphFactory.java:132)

at org.janusgraph.core.JanusGraphFactory.open(JanusGraphFactory.java:112)

at org.apache.atlas.repository.graphdb.janus.AtlasJanusGraphDatabase.initJanusGraph(AtlasJanusGraphDatabase.java:182)

... 90 more

Suppressed: org.janusgraph.core.JanusGraphException: Could not close configuration store

at org.janusgraph.diskstorage.configuration.backend.KCVSConfiguration.close(KCVSConfiguration.java:228)

at org.janusgraph.diskstorage.configuration.builder.ReadConfigurationBuilder.buildGlobalConfiguration(ReadConfigurationBuilder.java:94)

... 95 more

Caused by: org.janusgraph.diskstorage.PermanentBackendException: Could not close BerkeleyJE database

at org.janusgraph.diskstorage.berkeleyje.BerkeleyJEStoreManager.close(BerkeleyJEStoreManager.java:249)

at org.janusgraph.diskstorage.keycolumnvalue.keyvalue.OrderedKeyValueStoreManagerAdapter.close(OrderedKeyValueStoreManagerAdapter.java:73)

at org.janusgraph.diskstorage.configuration.backend.builder.KCVSConfigurationBuilder$1.close(KCVSConfigurationBuilder.java:46)

at org.janusgraph.diskstorage.configuration.backend.KCVSConfiguration.close(KCVSConfiguration.java:225)

... 96 more

Caused by: com.sleepycat.je.DiskLimitException: (JE 18.3.12) Disk usage is not within je.maxDisk or je.freeDisk limits and write operations are prohibited: maxDiskLimit=0 freeDiskLimit=5,368,709,120 adjustedMaxDiskLimit=0 maxDiskOverage=0 freeDiskShortage=178,274,304 diskFreeSpace=5,190,434,816 availableLogSize=-178,274,304 totalLogSize=45,149 activeLogSize=45,149 reservedLogSize=0 protectedLogSize=0 protectedLogSizeMap={}

at com.sleepycat.je.dbi.EnvironmentImpl.checkDiskLimitViolation(EnvironmentImpl.java:2733)

at com.sleepycat.je.recovery.Checkpointer.doCheckpoint(Checkpointer.java:724)

at com.sleepycat.je.dbi.EnvironmentImpl.invokeCheckpoint(EnvironmentImpl.java:2321)

at com.sleepycat.je.dbi.EnvironmentImpl.doClose(EnvironmentImpl.java:1972)

at com.sleepycat.je.dbi.DbEnvPool.closeEnvironment(DbEnvPool.java:342)

at com.sleepycat.je.dbi.EnvironmentImpl.close(EnvironmentImpl.java:1866)

at com.sleepycat.je.Environment.close(Environment.java:444)

at org.janusgraph.diskstorage.berkeleyje.BerkeleyJEStoreManager.close(BerkeleyJEStoreManager.java:247)

... 99 more

Caused by: org.janusgraph.diskstorage.PermanentBackendException: Permanent failure in storage backend

at org.janusgraph.diskstorage.berkeleyje.BerkeleyJETx.commit(BerkeleyJETx.java:112)

at org.janusgraph.diskstorage.util.BackendOperation.execute(BackendOperation.java:153)

at org.janusgraph.diskstorage.util.BackendOperation$1.call(BackendOperation.java:161)

at org.janusgraph.diskstorage.util.BackendOperation.executeDirect(BackendOperation.java:68)

at org.janusgraph.diskstorage.util.BackendOperation.execute(BackendOperation.java:54)

... 101 more

Caused by: com.sleepycat.je.DiskLimitException: (JE 18.3.12) Transaction 21 must be aborted, caused by: com.sleepycat.je.DiskLimitException: (JE 18.3.12) Disk usage is not within je.maxDisk or je.freeDisk limits and write operations are prohibited: maxDiskLimit=0 freeDiskLimit=5,368,709,120 adjustedMaxDiskLimit=0 maxDiskOverage=0 freeDiskShortage=178,274,304 diskFreeSpace=5,190,434,816 availableLogSize=-178,274,304 totalLogSize=45,149 activeLogSize=45,149 reservedLogSize=0 protectedLogSize=0 protectedLogSizeMap={}

at com.sleepycat.je.DiskLimitException.wrapSelf(DiskLimitException.java:61)

at com.sleepycat.je.txn.Txn.checkState(Txn.java:1951)

at com.sleepycat.je.txn.Txn.commit(Txn.java:725)

at com.sleepycat.je.txn.Txn.commit(Txn.java:631)

at com.sleepycat.je.Transaction.commit(Transaction.java:337)

at org.janusgraph.diskstorage.berkeleyje.BerkeleyJETx.commit(BerkeleyJETx.java:109)

... 105 more

Caused by: com.sleepycat.je.DiskLimitException: (JE 18.3.12) Disk usage is not within je.maxDisk or je.freeDisk limits and write operations are prohibited: maxDiskLimit=0 freeDiskLimit=5,368,709,120 adjustedMaxDiskLimit=0 maxDiskOverage=0 freeDiskShortage=178,274,304 diskFreeSpace=5,190,434,816 availableLogSize=-178,274,304 totalLogSize=45,149 activeLogSize=45,149 reservedLogSize=0 protectedLogSize=0 protectedLogSizeMap={}

at com.sleepycat.je.Cursor.checkUpdatesAllowed(Cursor.java:5407)

at com.sleepycat.je.Cursor.checkUpdatesAllowed(Cursor.java:5384)

at com.sleepycat.je.Cursor.putInternal(Cursor.java:2439)

at com.sleepycat.je.Cursor.putInternal(Cursor.java:841)

at com.sleepycat.je.Database.put(Database.java:1635)

at org.janusgraph.diskstorage.berkeleyje.BerkeleyJEKeyValueStore.insert(BerkeleyJEKeyValueStore.java:229)

at org.janusgraph.diskstorage.berkeleyje.BerkeleyJEKeyValueStore.insert(BerkeleyJEKeyValueStore.java:213)

at org.janusgraph.diskstorage.keycolumnvalue.keyvalue.OrderedKeyValueStoreAdapter.mutate(OrderedKeyValueStoreAdapter.java:99)

at org.janusgraph.diskstorage.configuration.backend.KCVSConfiguration$2.call(KCVSConfiguration.java:151)

at org.janusgraph.diskstorage.configuration.backend.KCVSConfiguration$2.call(KCVSConfiguration.java:146)

at org.janusgraph.diskstorage.util.BackendOperation.execute(BackendOperation.java:147)

... 104 more

2022-05-28 21:28:54,435 INFO - [main:] ~ Max retries exceeded. (AtlasGraphProvider:121)

2022-05-28 21:49:37,789 INFO - [shutdown-hook-0:] ~ ==> Shutdown of Atlas (Atlas$1:63)

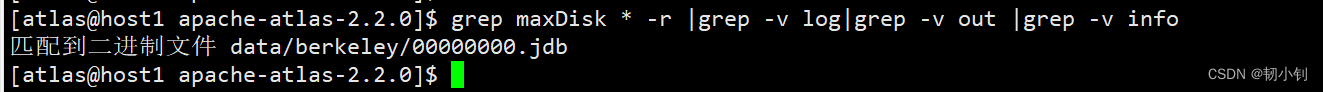

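Caused by insufficient disk space?

There are 5 GB free, and that is still not enough? The log shows freeDiskLimit=5,368,709,120 bytes against diskFreeSpace=5,190,434,816, so freeDiskShortage=178,274,304 means about 170 MB more free space is needed.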
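The numbers in the DiskLimitException can be reproduced with a little arithmetic, which also makes it easy to check a machine before starting Atlas with BerkeleyDB. The sketch below takes the 5 GiB limit from the freeDiskLimit value in the log; the function names are made up for illustration.

```shell
# freeDiskLimit from the log: 5 GiB = 5,368,709,120 bytes.
JE_FREE_DISK_LIMIT=5368709120

# Hypothetical helper: print how many more bytes must be free before the
# limit is satisfied (0 means there is already enough room).
je_free_disk_shortage() {
    free_bytes="$1"
    shortage=$((JE_FREE_DISK_LIMIT - free_bytes))
    if [ "$shortage" -lt 0 ]; then
        shortage=0
    fi
    echo "$shortage"
}

# Hypothetical helper: free bytes on the file system holding a directory
# (POSIX df output in 1 KiB blocks, converted to bytes in the shell).
free_bytes_for() {
    kb=$(df -Pk "$1" | awk 'NR==2 {print $4}')
    echo $((kb * 1024))
}

je_free_disk_shortage 5190434816   # diskFreeSpace from the log; prints 178274304
```

To check the real data directory, something like je_free_disk_shortage "$(free_bytes_for /home/atlas)" would do, assuming the Atlas data directory lives on that file system.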

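Can a configuration file be changed to disable the check or lower the limit? (No luck)

- Search for a related setting

- atlas-application.properties (no disk-related setting found)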

atlas.graph.storage.backend=berkeleyje

atlas.graph.storage.hbase.table=apache_atlas_janus

atlas.graph.storage.directory=${sys:atlas.home}/data/berkeley

atlas.graph.storage.lock.clean-expired=true

atlas.graph.storage.lock.expiry-time=500

atlas.graph.storage.lock.wait-time=300

atlas.EntityAuditRepository.impl=org.apache.atlas.repository.audit.NoopEntityAuditRepository

atlas.graph.index.search.backend=solr

atlas.graph.index.search.solr.mode=cloud

atlas.graph.index.search.solr.zookeeper-url=localhost:2181

atlas.graph.index.search.solr.zookeeper-connect-timeout=60000

atlas.graph.index.search.solr.zookeeper-session-timeout=60000

atlas.graph.index.search.solr.wait-searcher=false

atlas.graph.index.search.max-result-set-size=150

atlas.notification.embedded=true

atlas.kafka.data=${sys:atlas.home}/data/kafka

atlas.kafka.zookeeper.connect=localhost:9026

atlas.kafka.bootstrap.servers=localhost:9027

atlas.kafka.zookeeper.session.timeout.ms=400

atlas.kafka.zookeeper.connection.timeout.ms=200

atlas.kafka.zookeeper.sync.time.ms=20

atlas.kafka.auto.commit.interval.ms=1000

atlas.kafka.hook.group.id=atlas

atlas.kafka.enable.auto.commit=false

atlas.kafka.auto.offset.reset=earliest

atlas.kafka.session.timeout.ms=30000

atlas.kafka.offsets.topic.replication.factor=1

atlas.kafka.poll.timeout.ms=1000

atlas.notification.create.topics=true

atlas.notification.replicas=1

atlas.notification.topics=ATLAS_HOOK,ATLAS_ENTITIES

atlas.notification.log.failed.messages=true

atlas.notification.consumer.retry.interval=500

atlas.notification.hook.retry.interval=1000

atlas.enableTLS=false

atlas.authentication.method.kerberos=false

atlas.authentication.method.file=true

atlas.authentication.method.ldap.type=none

atlas.authentication.method.file.filename=${sys:atlas.home}/conf/users-credentials.properties

atlas.rest.address=http://localhost:21000

atlas.audit.hbase.tablename=apache_atlas_entity_audit

atlas.audit.zookeeper.session.timeout.ms=1000

atlas.audit.hbase.zookeeper.quorum=localhost:2181

atlas.server.ha.enabled=false

atlas.authorizer.impl=simple

atlas.authorizer.simple.authz.policy.file=atlas-simple-authz-policy.json

atlas.rest-csrf.enabled=true

atlas.rest-csrf.browser-useragents-regex=^Mozilla.*,^Opera.*,^Chrome.*

atlas.rest-csrf.methods-to-ignore=GET,OPTIONS,HEAD,TRACE

atlas.rest-csrf.custom-header=X-XSRF-HEADER

atlas.metric.query.cache.ttlInSecs=900

atlas.search.gremlin.enable=false

atlas.ui.default.version=v1


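Delete unneeded files to free up space (this fixed it)

- Start

- Verify

Verification is the same as above

The UI looks no different; you cannot tell from it which mode Atlas was started in

Summary

- This is the charm of technical work: persistence. Problems do get solved, and they can be tackled in stages. A few days ago I tried for a very long time and gave up when I saw the errors in the log. It is fine to put a problem down for a while, let your head rest, and pick it up again later. Today it took only ten-odd minutes to read the log, find the cause, and fix it.

- Of course, how to actually use Atlas, and whether it can be integrated into my own project, is still a long road. Deployment is only the first step of a long march. Keep going!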
