DolphinScheduler 2.0.5 task test (Spark task) error: Container exited with a non-zero exit code 1

2022-06-23 05:03:00 【Zhun Xiaozhao】

Container exited with a non-zero exit code 1

Yesterday, while testing the HDFS-related features of DolphinScheduler (part 3), I ran into a problem with the Spark task and could not solve it. I took another look today, and this time the cause was clear almost at a glance.

Configuring the virtual machine's domain name for local browser access

Every time I open one of the pages, I have to replace the virtual machine's domain name host1 with its actual IP address before the browser can load it, which is tedious.

Configuration method

- Edit the local machine's C:\Windows\System32\drivers\etc\hosts file so that its entries match the addresses in the virtual machine's /etc/hosts
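One way to confirm that the two hosts files agree is to compare them programmatically. The following is only a minimal sketch: the helper names `parse_hosts` and `missing_or_different` are hypothetical, and the sample contents assume that host1 maps to 192.168.56.10 (the address that appears in the later error message), which the original does not state explicitly.

```python
def parse_hosts(text):
    """Parse hosts-file text into a {hostname: ip} mapping."""
    mapping = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            mapping[name] = ip
    return mapping


def missing_or_different(vm_hosts, local_hosts):
    """Return VM entries that are absent or mapped differently on the local machine."""
    return {name: ip for name, ip in vm_hosts.items() if local_hosts.get(name) != ip}


# Sample contents (assumed for illustration, not copied from the real files).
vm = parse_hosts("127.0.0.1 localhost\n192.168.56.10 host1  # VM\n")
local = parse_hosts("127.0.0.1 localhost\n")
print(missing_or_different(vm, local))
```

Any entry printed here is one that would still need to be added to the Windows hosts file.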

Verify login

Check the log

Check the output log

stderr (no useful information)

stdout (finally, a clue)

The relevant log:

/home/dolphinscheduler/app/hadoop-2.7.3/data/tmp/nm-local-dir/usercache/dolphinscheduler/appcache/application_1655121288928_0003/container_1655121288928_0003_01_000001/pyspark.zip/pyspark/sql/context.py:77: FutureWarning: Deprecated in 3.0.0. Use SparkSession.builder.getOrCreate() instead.

/home/dolphinscheduler/app/hadoop-2.7.3/data/tmp/nm-local-dir/usercache/dolphinscheduler/appcache/application_1655121288928_0003/container_1655121288928_0003_01_000001/pyspark.zip/pyspark/sql/dataframe.py:138: FutureWarning: Deprecated in 2.0, use createOrReplaceTempView instead.

Traceback (most recent call last):

File "/home/dolphinscheduler/app/hadoop-2.7.3/data/tmp/nm-local-dir/usercache/dolphinscheduler/appcache/application_1655121288928_0003/container_1655121288928_0003_01_000001/sparktasktest.py", line 42, in <module>

df_result.coalesce(1).write.json(sys.argv[2])

File "/home/dolphinscheduler/app/hadoop-2.7.3/data/tmp/nm-local-dir/usercache/dolphinscheduler/appcache/application_1655121288928_0003/container_1655121288928_0003_01_000001/pyspark.zip/pyspark/sql/readwriter.py", line 846, in json

File "/home/dolphinscheduler/app/hadoop-2.7.3/data/tmp/nm-local-dir/usercache/dolphinscheduler/appcache/application_1655121288928_0003/container_1655121288928_0003_01_000001/py4j-0.10.9.3-src.zip/py4j/java_gateway.py", line 1321, in __call__

File "/home/dolphinscheduler/app/hadoop-2.7.3/data/tmp/nm-local-dir/usercache/dolphinscheduler/appcache/application_1655121288928_0003/container_1655121288928_0003_01_000001/pyspark.zip/pyspark/sql/utils.py", line 111, in deco

File "/home/dolphinscheduler/app/hadoop-2.7.3/data/tmp/nm-local-dir/usercache/dolphinscheduler/appcache/application_1655121288928_0003/container_1655121288928_0003_01_000001/py4j-0.10.9.3-src.zip/py4j/protocol.py", line 326, in get_return_value

py4j.protocol.Py4JJavaError: An error occurred while calling o56.json.

: java.io.IOException: Incomplete HDFS URI, no host: hdfs:///test/softresult

at org.apache.hadoop.hdfs.DistributedFileSystem.initialize(DistributedFileSystem.java:143)

at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:2669)

at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:94)

at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:2703)

at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2685)

at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:373)

at org.apache.hadoop.fs.Path.getFileSystem(Path.java:295)

at org.apache.spark.sql.execution.datasources.DataSource.planForWritingFileFormat(DataSource.scala:461)

at org.apache.spark.sql.execution.datasources.DataSource.planForWriting(DataSource.scala:556)

at org.apache.spark.sql.DataFrameWriter.saveToV1Source(DataFrameWriter.scala:382)

at org.apache.spark.sql.DataFrameWriter.saveInternal(DataFrameWriter.scala:355)

at org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:239)

at org.apache.spark.sql.DataFrameWriter.json(DataFrameWriter.scala:763)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:244)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:282)

at py4j.commands.AbstractCommand.invokeMethod(AbstractCommand.java:132)

at py4j.commands.CallCommand.execute(CallCommand.java:79)

at py4j.ClientServerConnection.waitForCommands(ClientServerConnection.java:182)

at py4j.ClientServerConnection.run(ClientServerConnection.java:106)

at java.lang.Thread.run(Thread.java:748)

Environment problems

ModuleNotFoundError: No module named 'py4j'

The script ran successfully last night, but now running it on its own reports that the module does not exist.

Reinstalling pyspark

This time I installed it online. Last night it was installed offline, and the downloaded package may have been faulty.

sudo /usr/local/python3/bin/pip3 install pyspark

- Running the script again no longer reports a missing module

- Manual pyspark installation step: unzip the installation package and run sudo python3 setup.py install
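Before rerunning the whole job, it can help to check which interpreter is in use and whether it can actually locate the modules. This is a small sketch using only the standard library; the function name `missing_modules` is hypothetical.

```python
import importlib.util
import sys


def missing_modules(names):
    """Return the top-level module names the current interpreter cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# Show which Python is running, then which of the required modules are absent.
print("interpreter:", sys.executable)
print("missing:", missing_modules(["pyspark", "py4j"]))
```

If the interpreter printed here is not the one that pip3 installed into, the module can appear to be missing even after a successful install.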

Verifying again with spark-submit

The error remains:

Error: Incomplete HDFS URI, no host: hdfs:///test/softresult
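The message is about the shape of the URI itself: hdfs:///test/softresult has a scheme and a path but no host between the second and third slash. As far as I understand, Hadoop only fills in the missing host from its configured default file system, so the URI fails when that configuration is not loaded. The sketch below uses Python's standard urllib.parse to show the difference; `hdfs_authority` is a hypothetical helper name.

```python
from urllib.parse import urlparse


def hdfs_authority(uri):
    """Return the host[:port] part of a URI, or '' when it is missing."""
    return urlparse(uri).netloc


# The failing URI has an empty authority; the fully qualified one does not.
print(repr(hdfs_authority("hdfs:///test/softresult")))
print(repr(hdfs_authority("hdfs://192.168.56.10:8020/test/softresult")))
```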

Posts online suggest that the Hadoop configuration files may not have been picked up. On inspection, the configuration really did have a problem: the address configured for HADOOP_HOME.

- Simply configure HADOOP_HOME correctly (in conf/spark-env.sh)

export JAVA_HOME=/usr/local/java/jdk1.8.0_151

export HADOOP_HOME=/home/dolphinscheduler/app/hadoop-2.7.3

export HADOOP_CONF_DIR=/home/dolphinscheduler/app/hadoop-2.7.3/etc/hadoop

export PYSPARK_PYTHON=/usr/local/bin/python3
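With HADOOP_CONF_DIR set, the default file system is normally read from the fs.defaultFS property in core-site.xml in that directory. The following sketch shows how to check what that property contains. The XML sample is an assumption for illustration: its address is taken from the later error message, not from the author's actual file, and `read_property` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET


def read_property(site_xml, name):
    """Return the value of a named property from Hadoop site XML text, or None."""
    root = ET.fromstring(site_xml)
    for prop in root.iter("property"):
        if prop.findtext("name") == name:
            return prop.findtext("value")
    return None


# Assumed sample content of core-site.xml (illustrative only).
sample = """<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://192.168.56.10:8020</value>
  </property>
</configuration>"""

print(read_property(sample, "fs.defaultFS"))
```

For the real file, the same loop can be run over the root returned by ET.parse on the core-site.xml path inside HADOOP_CONF_DIR.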

Error: path hdfs://192.168.56.10:8020/test/softresult already exists.

The original problem appears to be solved. When the earlier error said no host: hdfs:///test/softresult, I suspected the directory did not exist and created it manually, which is why the job now reports that the directory already exists. I specified a new output directory and ran it again.

SUCCEEDED

- The job succeeded

- Checking the results, it is still not quite right: the data was not written, so it must be a problem in the program itself (is studying Python the next step? The more I study, the more ignorant I feel)
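On the "already exists" error above: Spark's DataFrameWriter refuses to write to an existing path by default, so each run needs either a new directory or an explicit save mode such as write.mode("overwrite"). One possible way to avoid picking a new directory by hand is to add a timestamp suffix to the output path before passing it to the script. This is only a sketch; `fresh_output_path` is a hypothetical helper and not part of the original sparktasktest.py.

```python
from datetime import datetime


def fresh_output_path(base, now=None):
    """Append a timestamp suffix so that each run writes to a new directory."""
    now = now or datetime.now()
    return f"{base.rstrip('/')}_{now.strftime('%Y%m%d_%H%M%S')}"


# A fixed timestamp is used here so that the result is reproducible.
print(fresh_output_path("hdfs://192.168.56.10:8020/test/softresult",
                        datetime(2022, 6, 23, 5, 3, 0)))
```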

边栏推荐

- Examples of corpus data processing cases (part of speech encoding, part of speech restoration)

- go学习记录二(Window)

- Halcon knowledge: binocular_ Discrimination knowledge

- 数据科学家是不是特有前途的职业?

- Three operation directions of integral mall

- Abnova actn4 purified rabbit polyclonal antibody instructions

- VGg Chinese herbal medicine identification

- What are the types of independent station chat robots? How to quickly create your own free chat robot? It only takes 3 seconds!

- Abnova acid phosphatase (wheat germ) instructions

- mysql json

猜你喜欢

Abnova fluorescent dye 555-c3 maleimide scheme

Cloud native database is in full swing, and the future can be expected

【论文阅读】Semi-Supervised Learning with Ladder Networks

Laravel中使用 Editor.md 上传图片如何处理?

PaddlePaddle模型服务化部署,重新启动pipeline后出现报错,trt报错

Current relay hdl-a/1-110vdc-1

Actual combat | multiple intranet penetration through Viper

What are the types of independent station chat robots? How to quickly create your own free chat robot? It only takes 3 seconds!

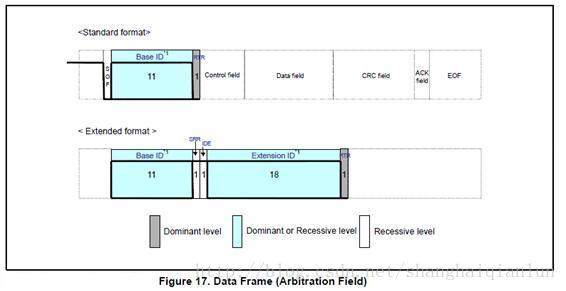

Can bus Basics

怎样利用数据讲一个精彩故事?

随机推荐

const理解之二

电流继电器JDL-1002A

欢迎使用CSDN-markdown编辑器

go学习记录二(Window)

OGNL Object-Graph Navigation Language

Summary of switched reluctance motor suspension drive ir2128

Bootstrap drive, top switching power supply and Optocoupler

Less than a year after development, I dared to ask for 20k in the interview, but I didn't even want to give 8K after the interview~

Abnova PSMA bead solution

Dsp7 environment

The paddepaddle model is deployed in a service-oriented manner. After restarting the pipeline, an error is reported, and the TRT error is reported

左值与右值

20000 words + 20 pictures | details of nine data types and application scenarios of redis

free( )的一个理解(《C Primer Plus》的一个错误)

Talk about the composite pattern in C #

Thinkphp6 solving jump problems

如何更好地组织最小 WEB API 代码结构

Dpr-34v/v two position relay

An understanding of free() (an error in C Primer Plus)

Li Kou today's question 513 Find the value in the lower left corner of the tree