Definition of information entropy

2022-07-25 11:26:00 【fatfatmomo】

Let me explain, from a very intuitive point of view, why information entropy is defined the way it is.

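First of all, suppose there is a random variable $x$, and we ask how much information we receive when we observe one specific value of it. Intuitively, this is the degree of surprise. Learning that an unlikely value occurred tells us more than learning that a likely one occurred, so the information content $h(x)$ should grow as the probability $p(x)$ shrinks.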
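To make the idea concrete, here is a minimal Python sketch, assuming the standard self-information measure $h(x) = -\log_2 p(x)$ in bits. The function name `self_information` is hypothetical. It computes the equivalent form $\log_2(1/p)$, which avoids printing a negative zero for a certain event.

```python
import math


def self_information(p: float) -> float:
    """Information content, in bits, of observing an outcome of probability p.

    Assumes the standard definition h = -log2(p) for 0 < p <= 1; it is computed
    as log2(1/p), which is mathematically identical but returns +0.0 for p = 1.
    """
    if not 0.0 < p <= 1.0:
        raise ValueError("p must lie in (0, 1]")
    return math.log2(1.0 / p)


# A certain event carries no information; each halving of p adds one bit.
for p in (1.0, 0.5, 0.25, 0.125):
    print(f"p = {p}: {self_information(p)} bits")
```

The loop prints 0.0, 1.0, 2.0 and 3.0 bits: the rarer the outcome, the more information observing it carries.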

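Second, suppose two random variables $x$ and $y$ are independent. The information gained from observing both should equal the sum of the information from observing each, $h(x,y) = h(x) + h(y)$, while their joint probability is the product $p(x,y) = p(x)\,p(y)$. Because the logarithm is what turns a product into a sum, $h(x)$ must be a logarithm of $p(x)$; with base 2 this gives $h(x) = -\log_2 p(x)$, measured in bits.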
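For two independent random variables $x$ and $y$, where $p(x,y) = p(x)\,p(y)$, the definition $h(x) = -\log_2 p(x)$ makes information add up, as this short derivation shows:

$$
\begin{aligned}
h(x,y) &= -\log_2 p(x,y) \\
       &= -\log_2\bigl(p(x)\,p(y)\bigr) \\
       &= -\log_2 p(x) - \log_2 p(y) \\
       &= h(x) + h(y).
\end{aligned}
$$

For example, a fair coin flip ($p = 1/2$) carries 1 bit and a fair four-sided die roll ($p = 1/4$) carries 2 bits; observing both together carries $-\log_2(1/8) = 3$ bits.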

The minus sign here only guarantees that the information content (and therefore the entropy) is positive or zero: since $0 < p(x) \le 1$, $\log_2 p(x)$ is never positive.

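Last, we use entropy to evaluate the random variable $x$ as a whole. Entropy is the average information we expect to receive from one observation, that is, the expectation of $h(x)$ under $p(x)$, which gives $H[x] = -\sum_x p(x)\log_2 p(x)$.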
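A minimal Python sketch of this definition, assuming a discrete distribution given as a list of probabilities that sum to 1. The helper name `entropy` is hypothetical; outcomes with $p(x) = 0$ are skipped, following the usual convention $0 \log_2 0 = 0$.

```python
import math
from typing import Sequence


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy in bits: H = -sum p * log2(p) over outcomes with p > 0.

    Each term is computed as p * log2(1/p), which equals -p * log2(p).
    """
    if any(p < 0 for p in probs) or not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must be non-negative and sum to 1")
    # Terms with p == 0 contribute nothing (convention 0 * log2(0) = 0).
    return sum(p * math.log2(1.0 / p) for p in probs if p > 0)


print(entropy([0.5, 0.5]))   # fair coin: 1.0
print(entropy([0.25] * 4))   # fair four-sided die: 2.0
print(entropy([0.9, 0.1]))   # biased coin: about 0.469
print(entropy([1.0, 0.0]))   # certain outcome: 0.0
```

Entropy is largest when all outcomes are equally likely and drops to zero when one outcome is certain, which matches reading it as the average surprise of the random variable.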

边栏推荐

- Learn NLP with Transformer (Chapter 1)

- 【flask高级】结合源码解决flask经典报错:Working outside of application context

- 让运动自然发生,FITURE打造全新生活方式

- Code representation learning: introduction to codebert and other related models

- 苹果美国宣布符合销售免税假期的各州产品清单细节

- Learn NLP with Transformer (Chapter 5)

- Tree dynamic programming

- Database design - Simplified dictionary table [easy to understand]

- DNS分离解析的实现方法详解

- Ue4.26 source code version black screen problem of client operation when learning Wan independent server

猜你喜欢

复习背诵整理版

SQL注入 Less17(报错注入+子查询)

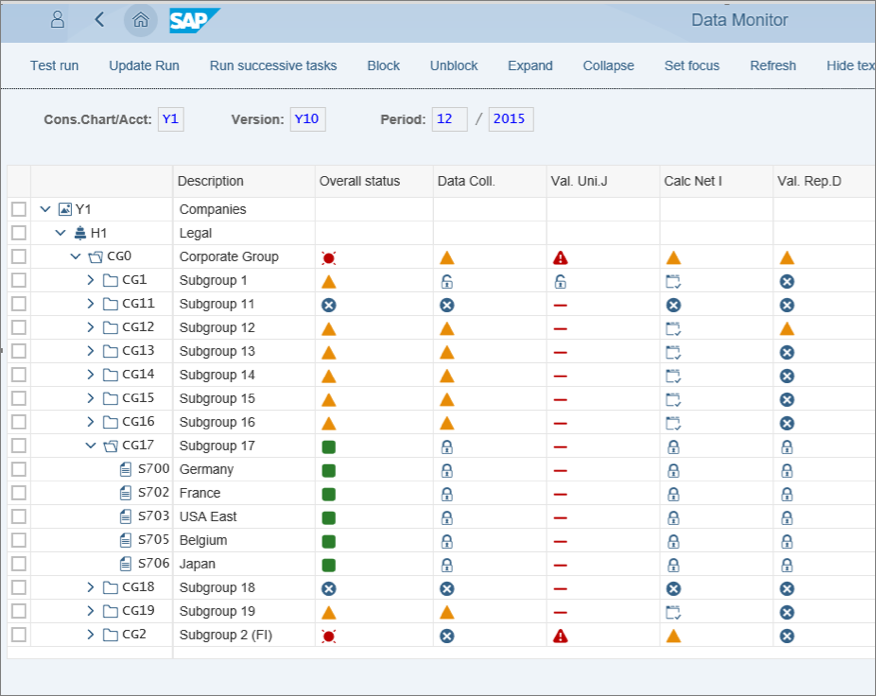

From the perspective of open source, analyze the architecture design of SAP classic ERP that will not change in 30 years

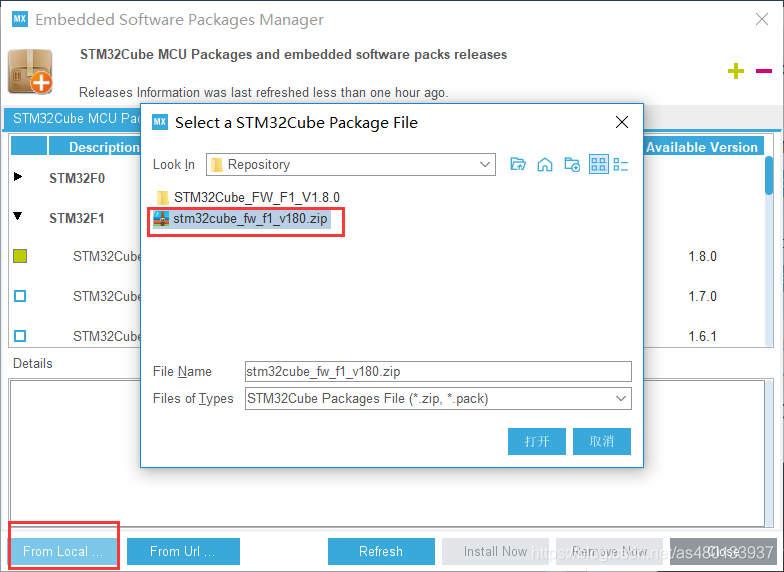

机智云物联网平台 STM32 ESP8266-01S 简单无线控灯

100W!

Stm32cubemx learning record -- installation, configuration and use

Some usages of beautifulsoup

Nowcodertop12-16 - continuous updating

Let sports happen naturally, and fire creates a new lifestyle

Reinforcement Learning 强化学习(三)

随机推荐

The University of Gottingen proposed clipseg: a model that can perform three segmentation tasks simultaneously using text and image prompts

C# Newtonsoft.Json 高级用法

Hcip experiment (03)

Google Earth engine -- Statistics on the frequency of land classification year by year

Learn NLP with Transformer (Chapter 3)

Compressed list ziplist of redis

Web mobile terminal: touchmove realizes local scrolling

BeautifulSoup的一些用法

一篇看懂:IDEA 使用scala 编写wordcount程序 并生成jar包 实测

Software Testing Technology: cross platform mobile UI automated testing (Part 1)

shell-第四天作业

Implementation of recommendation system collaborative filtering in spark

SQL语言(四)

只知道预制体是用来生成物体的?看我如何使用Unity生成UI预制体

HCIP(13)

企业实践开源的动机

Esp8266 uses drv8833 drive board to drive N20 motor

Learn NLP with Transformer (Chapter 1)

SQL注入 Less17(报错注入+子查询)

Learn NLP with Transformer (Chapter 8)

In summary:

$$h(x) = -\log_2 p(x), \qquad H[x] = -\sum_x p(x)\log_2 p(x)$$