
Some Uses of BeautifulSoup

2022-07-25 10:24:00 【Icy Hunter】

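Preface

XPath is very handy. However, pages sometimes differ in structure while the content we want always sits in the same kind of tag. In that case BeautifulSoup can be simpler.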
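Here is a minimal sketch of that idea. It assumes two made-up HTML snippets, and the `price` class is hypothetical. It also uses Python's built-in `html.parser` instead of lxml, so it needs no extra dependency. The lookup is keyed on the tag name and class rather than on a path through the document, so the same call works for both layouts.

```python
from bs4 import BeautifulSoup

# Two hypothetical pages: different layouts, but the wanted value sits in the same tag/class
page_a = '<div><span class="price">9.99</span></div>'
page_b = '<table><tr><td><p><span class="price">19.99</span></p></td></tr></table>'


def extract_prices(html):
    """Return the text of every <span class="price">, wherever it is nested."""
    soup = BeautifulSoup(html, "html.parser")
    # find_all searches all descendants, so the surrounding structure does not matter
    return [span.get_text(strip=True) for span in soup.find_all("span", class_="price")]


print(extract_prices(page_a))  # ['9.99']
print(extract_prices(page_b))  # ['19.99']
```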

prettify()

prettify() turns the parsed HTML into a neatly indented, readable string:

```python
import requests
from bs4 import BeautifulSoup

# Request headers copied from a Chrome browser session
headers = {
    'authority': 'www.amazon.com',
    'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',
    'accept-language': 'zh-CN,zh;q=0.9',
    'device-memory': '8',
    'downlink': '1.4',
    'dpr': '1.25',
    'ect': '3g',
    'referer': 'https://www.amazon.com/s?k=case&crid=1Q6U78YWZGRPO&sprefix=ca%2Caps%2C761&ref=nb_sb_noss_2',
    'rtt': '300',
    'sec-ch-device-memory': '8',
    'sec-ch-dpr': '1.25',
    'sec-ch-ua': '".Not/A)Brand";v="99", "Google Chrome";v="103", "Chromium";v="103"',
    'sec-ch-ua-mobile': '?0',
    'sec-ch-ua-platform': '"Windows"',
    'sec-ch-viewport-width': '1229',
    'sec-fetch-dest': 'document',
    'sec-fetch-mode': 'navigate',
    'sec-fetch-site': 'same-origin',
    'sec-fetch-user': '?1',
    'upgrade-insecure-requests': '1',
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.0.0 Safari/537.36',
    'viewport-width': '1229',
}

response = requests.get('https://www.baidu.com/', headers=headers)
soup = BeautifulSoup(response.text, features='lxml')

print(type(soup.prettify()))  # <class 'str'>
print(soup.prettify())
```

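prettify() returns a str. The indented layout is produced purely by inserting newline characters and leading spaces into the serialized markup.

Stripping those characters with replace() does not reliably turn it back. Deleting every space also deletes the spaces between a tag name and its attributes, so `<a href=...>` becomes `<ahref=...>`. It also deletes the spaces inside the text itself. To get the markup without pretty-printing, serialize the parsed tree directly:

```python
s = str(soup)  # the same parse tree, serialized without the added newlines and indentation
print(s)
```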
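Here is a small offline check of what prettify() does to the markup. It assumes a made-up inline snippet (not taken from the page) and the built-in `html.parser`. The check shows that prettify() yields a str and that stripping all whitespace from it mangles the tag and the text. It also shows that str(soup) gives back the unprettified markup.

```python
from bs4 import BeautifulSoup

# Hypothetical snippet; attributes are written in alphabetical order so the
# round-trip comparison does not depend on how the formatter orders them
html = '<p><a class="y" href="x">hi there</a></p>'
soup = BeautifulSoup(html, "html.parser")

pretty = soup.prettify()                            # str with newlines and indentation added
naive = pretty.replace("\n", "").replace(" ", "")   # strip every newline and space
compact = str(soup)                                 # serialize the tree without pretty-printing

print(type(pretty))  # <class 'str'>
print(naive)         # attribute spacing and the space in the text are gone
print(compact)       # <p><a class="y" href="x">hi there</a></p>
```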

find_all()

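find_all() returns every tag that matches a given tag name and attributes (here, div tags with a particular class):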
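Before the live example, here is a minimal offline sketch of the common call forms. It assumes a made-up list snippet and `html.parser`. find_all() returns every match, find() returns only the first match or None, and `limit` caps the number of results.

```python
from bs4 import BeautifulSoup

# Made-up snippet for illustration
html = """
<ul>
  <li class="item">first</li>
  <li class="item hot">second</li>
  <li>third</li>
</ul>
"""
soup = BeautifulSoup(html, "html.parser")

all_li = soup.find_all("li")                # every <li> tag
items = soup.find_all("li", class_="item")  # <li> tags whose class list includes "item"
first = soup.find("li")                     # first matching tag only
missing = soup.find("table")                # no match -> None
limited = soup.find_all("li", limit=2)      # stop after two matches

print(len(all_li))                          # 3
print([li.get_text() for li in items])      # ['first', 'second']
print(first.get_text())                     # first
print(missing)                              # None
print(len(limited))                         # 2
```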

```python
import requests
from bs4 import BeautifulSoup

# Request headers copied from a Chrome browser session
headers = {
    'authority': 'www.amazon.com',
    'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',
    'accept-language': 'zh-CN,zh;q=0.9',
    'device-memory': '8',
    'downlink': '1.4',
    'dpr': '1.25',
    'ect': '3g',
    'referer': 'https://www.amazon.com/s?k=case&crid=1Q6U78YWZGRPO&sprefix=ca%2Caps%2C761&ref=nb_sb_noss_2',
    'rtt': '300',
    'sec-ch-device-memory': '8',
    'sec-ch-dpr': '1.25',
    'sec-ch-ua': '".Not/A)Brand";v="99", "Google Chrome";v="103", "Chromium";v="103"',
    'sec-ch-ua-mobile': '?0',
    'sec-ch-ua-platform': '"Windows"',
    'sec-ch-viewport-width': '1229',
    'sec-fetch-dest': 'document',
    'sec-fetch-mode': 'navigate',
    'sec-fetch-site': 'same-origin',
    'sec-fetch-user': '?1',
    'upgrade-insecure-requests': '1',
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.0.0 Safari/537.36',
    'viewport-width': '1229',
}

response = requests.get('https://www.baidu.com/', headers=headers)
soup = BeautifulSoup(response.text, features='lxml')

# All divs that have s-isindex-wrap among their classes
div = soup.find_all("div", class_="s-isindex-wrap")
for d in div:
    print(d)
```

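The code above finds the div tags whose class attribute contains s-isindex-wrap. Any div that has this class among its classes is returned. What if I want only the divs whose class is exactly s-isindex-wrap and nothing else? I haven't worked out which parameter to set yet; suggestions are welcome.

The output (excerpt) was as expected.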
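One way to get the exact match asked about above is sketched here on a made-up snippet with `html.parser`. `class_` matches when any one of the tag's classes equals the value. An exact match therefore needs one of two approaches. The first is a filter function that compares the whole class list. The second is a CSS attribute selector through select(), which compares the full class attribute string. The helper name `has_only_class` is hypothetical.

```python
from bs4 import BeautifulSoup

# Made-up snippet: one div with only the class, one with an extra class, one unrelated
html = """
<div class="s-isindex-wrap">only</div>
<div class="s-isindex-wrap extra">two classes</div>
<div class="other">unrelated</div>
"""
soup = BeautifulSoup(html, "html.parser")

# class_ matches if ANY of the tag's classes equals the value
contains = soup.find_all("div", class_="s-isindex-wrap")


def has_only_class(tag):
    """Hypothetical filter: a div whose class list is exactly ['s-isindex-wrap']."""
    return tag.name == "div" and tag.get("class") == ["s-isindex-wrap"]


exact = soup.find_all(has_only_class)

# CSS attribute selector: [class="..."] compares the whole attribute value
exact_css = soup.select('div[class="s-isindex-wrap"]')

print([d.get_text() for d in contains])   # ['only', 'two classes']
print([d.get_text() for d in exact])      # ['only']
print([d.get_text() for d in exact_css])  # ['only']
```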

tag.get, tag.string

Read an attribute value with tag.get() or a tag's text with tag.string:

```python
import requests
from bs4 import BeautifulSoup

# Request headers copied from a Chrome browser session
headers = {
    'authority': 'www.amazon.com',
    'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',
    'accept-language': 'zh-CN,zh;q=0.9',
    'device-memory': '8',
    'downlink': '1.4',
    'dpr': '1.25',
    'ect': '3g',
    'referer': 'https://www.amazon.com/s?k=case&crid=1Q6U78YWZGRPO&sprefix=ca%2Caps%2C761&ref=nb_sb_noss_2',
    'rtt': '300',
    'sec-ch-device-memory': '8',
    'sec-ch-dpr': '1.25',
    'sec-ch-ua': '".Not/A)Brand";v="99", "Google Chrome";v="103", "Chromium";v="103"',
    'sec-ch-ua-mobile': '?0',
    'sec-ch-ua-platform': '"Windows"',
    'sec-ch-viewport-width': '1229',
    'sec-fetch-dest': 'document',
    'sec-fetch-mode': 'navigate',
    'sec-fetch-site': 'same-origin',
    'sec-fetch-user': '?1',
    'upgrade-insecure-requests': '1',
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.0.0 Safari/537.36',
    'viewport-width': '1229',
}

response = requests.get('https://www.baidu.com/', headers=headers)
soup = BeautifulSoup(response.text, features='lxml')

div = soup.find_all("div", class_="s-isindex-wrap")
for d in div:
    # d is already a Tag, so find_all can be called on it directly; prettifying it
    # and parsing it again is unnecessary and only adds whitespace to the text
    dd = d.find_all("div", class_="s-top-left-new")  # divs with the s-top-left-new class
    for ddd in dd:  # iterate over those divs
        a_all = ddd.find_all("a")  # all <a> tags inside
        for a in a_all:  # iterate over the links
            href = a.get("href")  # value of href, or None if the attribute is missing
            string = a.string  # None if the tag has no text or more than one child
            if string is None:
                print("string is None")
            else:
                string = string.strip()  # trim surrounding whitespace
            print(href)
            print(string)
```
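Here is a minimal offline sketch of how these accessors differ. It assumes a made-up snippet and `html.parser`. tag.get() returns None for a missing attribute, whereas `tag["href"]` would raise KeyError instead. tag.string is None when a tag has more than one child. get_text() collects all descendant text, and here it joins the pieces with a space.

```python
from bs4 import BeautifulSoup

# Made-up snippet: a normal link, a link without href, and a link with mixed children
html = '<div><a href="/news">News</a><a>No link</a><a href="/map"><b>Map</b> page</a></div>'
soup = BeautifulSoup(html, "html.parser")

rows = []
for a in soup.find_all("a"):
    href = a.get("href")                # None when the attribute is missing
    string = a.string                   # None when the tag has more than one child
    text = a.get_text(" ", strip=True)  # all descendant text, stripped and space-joined
    rows.append((href, None if string is None else str(string), text))

for row in rows:
    print(row)
# ('/news', 'News', 'News')
# (None, 'No link', 'No link')
# ('/map', None, 'Map page')
```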

边栏推荐

- Learn NLP with Transformer (Chapter 6)

- MySQL advanced statement (I) (there is always someone who will make your life no longer bad)

- HCIA experiment (06)

- How to optimize the performance when the interface traffic increases suddenly?

- Learn NLP with Transformer (Chapter 1)

- 学习路之PHP--Phpstudy 提示 Mysqld.Exe: Error While Setting Value ‘NO_ENGINE_SUBSTITUTION 错误的解决办法

- mysql主从复制与读写分离

- 一文读懂小程序的生命周期和路由跳转

- HCIP(13)

- Flask framework -- flask caching

猜你喜欢

SQL语言(六)

Use three.js to realize the cool cyberpunk style 3D digital earth large screen

JS bidirectional linked list 02

ESP32C3基于Arduino框架下的 ESP32 RainMaker开发示例教程

Learn NLP with Transformer (Chapter 7)

HCIP(12)

HCIA实验(07)综合实验

AI系统前沿动态第43期:OneFlow v0.8.0正式发布;GPU发现人脑连接;AI博士生在线众筹研究主题

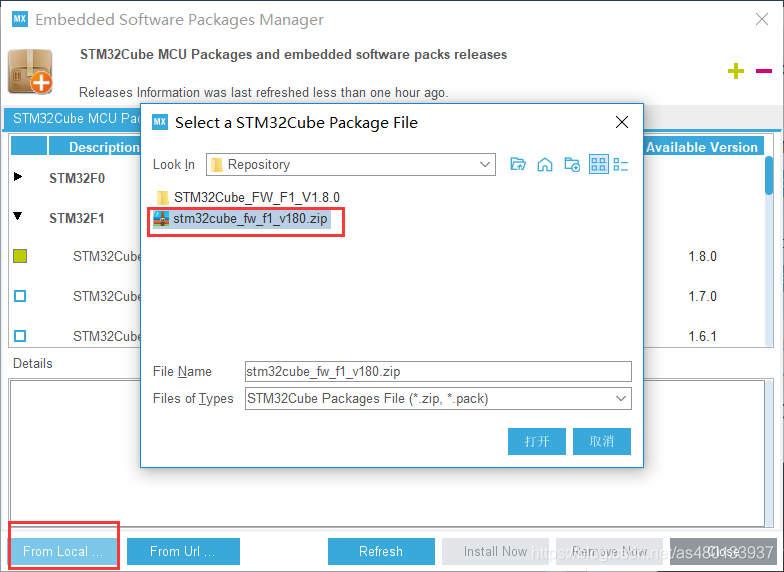

STM32CubeMX学习记录--安装配置与使用

一个 DirectShow 播放问题的排查记录

随机推荐

mysql高级语句(一)(总有一个人的出现,让你的生活不再继续糟糕)

[flask advanced] solve the classic error reporting of flask by combining the source code: working outside of application context

Learn NLP with Transformer (Chapter 3)

HCIP(13)

HCIA experiment (09)

Flask框架——消息闪现

Flask框架——Flask-WTF表单:文件上传、验证码

SQL语言(四)

2021 CEC written examination summary

Hcip experiment (04)

Motivation of enterprises to practice open source

HCIP(11)

JS bidirectional linked list 02

Disabled and readonly and focus issues

接口流量突增,如何做好性能调优?

Reinforcement Learning 强化学习(三)

Gan, why '𠮷 𠮷'.Length== 3 ??

HCIA experiment (06)

Esp32c3 based on the example tutorial of esp32 Rainmaker development under Arduino framework

ESP32C3基于Arduino框架下的 ESP32 RainMaker开发示例教程