In this year's college entrance examination in English, CMU scored a high 134 with reStructured Pre-training, significantly surpassing GPT-3

2022-06-23 14:16:00 【Zhiyuan community】

The reStructured Pre-training (RST) proposed in this paper not only performs strongly on a variety of NLP tasks, but also turns in a satisfactory result on the English section of the college entrance examination.

For biological neural networks (such as the human brain), humans receive education (that is, knowledge) from a very young age, so that they can extract specific data to cope with a complex and changing life. For external device storage, people usually structure data according to a certain schema (for example, tables) and then use a specialized language (for example, SQL) to efficiently retrieve the required information from the database. For storage based on artificial neural networks, researchers use self-supervised learning to store data from large corpora (that is, pre-training), and the network is then used for various downstream tasks (for example, sentiment classification).

边栏推荐

- quartus调用&设计D触发器——仿真&时序波验证

- Face registration, unlock, respond, catch all

- MIT 6.031 reading5: version control learning experience

- When pandas met SQL, a powerful tool library was born

- [Course preview] AI meter industry solution based on propeller and openvino | industrial meter reading and character detection

- ASP. Net C pharmacy management information system (including thesis) graduation project [demonstration video]

- Is flush a stock? Is it safe to open an account online now?

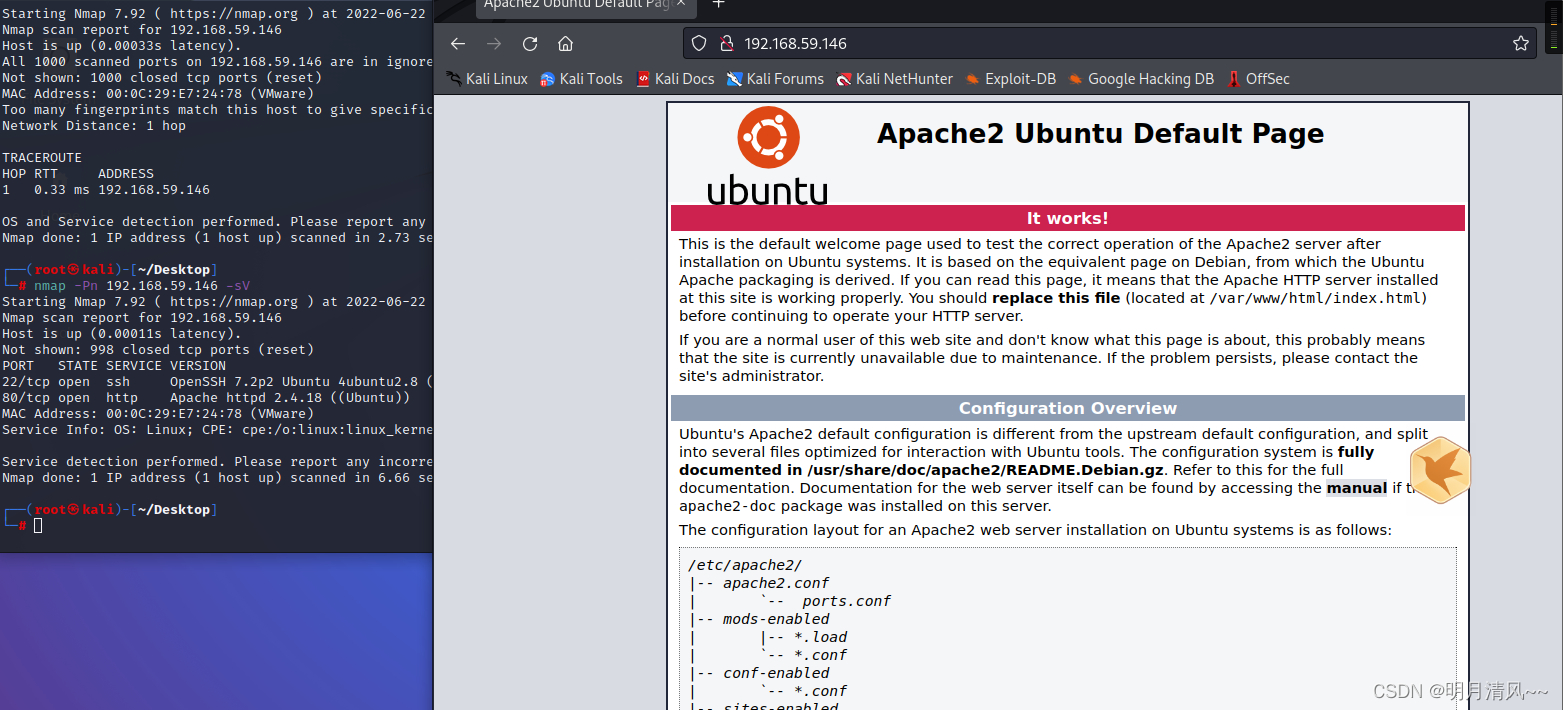

- kali使用

- Tencent cloud tdsql-c heavy upgrade, leading the cloud native database market in terms of performance

- Deci 和英特尔如何在 MLPerf 上实现高达 16.8 倍的吞吐量提升和 +1.74% 的准确性提升

猜你喜欢

Simplify deployment with openvino model server and tensorflow serving

vulnhub靶机Os-hackNos-1

Building Intel devcloud

Intelligent digital signage solution

微信小程序之input前加图标

【深入理解TcaplusDB技术】如何实现Tmonitor单机安装

![[in depth understanding of tcapulusdb technology] how to realize single machine installation of tmonitor](/img/6d/8b1ac734cd95fb29e576aa3eee1b33.png)

[in depth understanding of tcapulusdb technology] how to realize single machine installation of tmonitor

Best practices for auto plug-ins and automatic batch processing in openvinotm 2022.1

微信小程序之获取php后台数据库转化的json

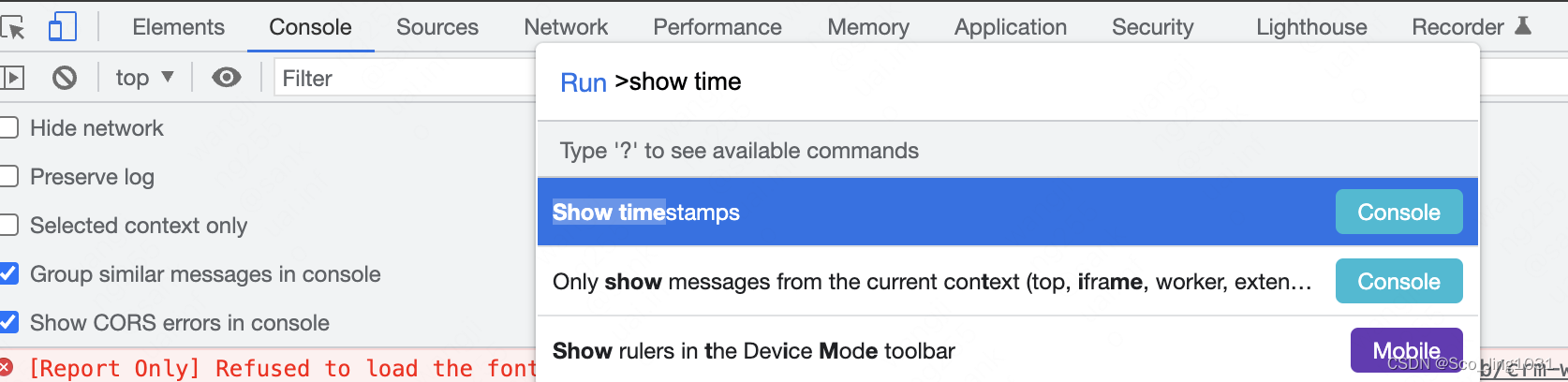

如何打开/关闭chrome控制台调试时的时间戳?

随机推荐

白皮书丨英特尔携手知名RISC-V工具提供商Ashling,着力扩展多平台RISC-V支持

同花顺是股票用的么?现在网上开户安全么?

When pandas met SQL, a powerful tool library was born

Short talk about community: how to build a game community?

Quartus call & Design d Trigger - simulation & time sequence Wave Verification

Learning experiences on how to design reusable software from three aspects: class, API and framework

Crmeb second open SMS function tutorial

Test article

[digital signal processing] linear time invariant system LTI (judge whether a system is a "non time variant" system | case 3)

分布式数据库使用逻辑卷管理存储之扩容

首个大众可用PyTorch版AlphaFold2复现,哥大开源OpenFold,star量破千

Shutter clip clipping component

SQLserver2008r2安装dts组件不成功

Detailed description of Modelsim installation steps

Cause analysis and intelligent solution of information system row lock waiting

Strengthen the sense of responsibility and bottom line thinking to build a "safety dike" for flood fighting and rescue

Flex attribute of wechat applet

Linear regression analysis of parent-child height data set

WPF (c) new open source control library: newbeecoder UI waiting animation

构建英特尔 DevCloud