
Simplify Deployment with OpenVINO Model Server and TensorFlow Serving

2022-06-23 13:36:00 [Intel Edge Computing Community]

Author: Milosz Zeglarski

Translator: Li Yiwei

Introduction

In this blog post, you will learn how to use the gRPC API of OpenVINO Model Server to run inference on JPEG images. Model servers play an important role in moving models smoothly from development into production. They serve models over network endpoints and expose an API for interacting with them. Once a model is being served, we need a set of functions to call that API from our application.

OpenVINO Model Server and TensorFlow Serving share the same frontend API, which means we can use the same code to interact with both. For Python developers, the typical starting point is the tensorflow-serving-api pip package. Unfortunately, because this package pulls in TensorFlow as a dependency, it takes up a lot of space.

The road to a lightweight client

Because tensorflow-serving-api and its dependencies take up about 1.3 GB, we decided to create a lightweight client that contains only the functionality needed to make API calls. In the latest release of OpenVINO Model Server, we introduced a preview of a Python client library: ovmsclient. This new package, together with all of its dependencies, is smaller than 100 MB, which makes it 13 times smaller than tensorflow-serving-api.

Python environment comparison. Note the difference in the number of packages installed with each client.
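If you want to check footprint figures like these on your own machine, the sketch below approximates the on-disk size of an installed package plus its transitive dependencies using only the standard library's importlib.metadata. It is an illustration under simplifying assumptions, not the method used to produce the numbers above: the helper names footprint_bytes, requirement_name, and normalize are hypothetical, requirement strings are parsed with a simple regular expression instead of a full PEP 508 parser, and dependencies that are only pulled in through extras are skipped.

```python
import re
from importlib import metadata


def requirement_name(req: str) -> str:
    """Return the bare project name from a requirement string,
    e.g. 'grpcio (>=1.0); python_version >= "3.6"' -> 'grpcio'."""
    match = re.match(r"\s*([A-Za-z0-9][A-Za-z0-9._-]*)", req)
    return match.group(1) if match else ""


def normalize(name: str) -> str:
    """PEP 503 name normalization so 'Foo_Bar' and 'foo-bar' compare equal."""
    return re.sub(r"[-_.]+", "-", name).lower()


def footprint_bytes(dist_name: str) -> int:
    """Approximate on-disk size, in bytes, of an installed distribution plus
    everything it requires transitively. Distributions that are not installed
    are skipped, so an uninstalled name yields 0."""
    seen, stack, total = set(), [dist_name], 0
    while stack:
        name = stack.pop()
        key = normalize(name)
        if key in seen:
            continue  # each distribution is counted once, even if shared
        seen.add(key)
        try:
            dist = metadata.distribution(name)
        except metadata.PackageNotFoundError:
            continue  # e.g. a platform-specific dependency not installed here
        for path in dist.files or []:
            located = path.locate()
            if located.is_file():
                total += located.stat().st_size
        for req in dist.requires or []:
            if "extra==" in req.replace(" ", ""):
                continue  # optional dependency, only installed with an extra
            dep = requirement_name(req)
            if dep:
                stack.append(dep)
    return total
```

For example, in a fresh virtual environment where only ovmsclient is installed, footprint_bytes("ovmsclient") / 1e6 gives a size in MB that can be compared with footprint_bytes("tensorflow-serving-api") / 1e6 measured in a separate environment. Expect the exact values to vary with platform and package versions.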

Besides its larger binary size, importing tensorflow-serving-api in an application also consumes more memory. Below are the results of running top on two Python scripts that perform the same operation:

Memory usage comparison: one script uses tensorflow-serving-api, the other uses ovmsclient.

| Package | VIRT [KB] | RES [KB] | SHR [KB] |
|---|---|---|---|
| tensorflow-serving-api | 4543760 | 293516 | 155708 |
| ovmsclient | 3500296 | 52008 | 23040 |

Note the difference in the RES [KB] column, which shows the amount of physical memory used by the task: 293516 KB for tensorflow-serving-api versus 52008 KB for ovmsclient, roughly 5.6 times less.
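To reproduce a comparison like the one in the table without watching top, one option is to import each package in a fresh interpreter and read that process's resource usage. The sketch below is an approximate stand-in for the top measurement, under stated assumptions: it reports the peak resident set size (ru_maxrss) rather than the current RES value, it only works on Unix-like systems where the resource module exists, and the unit is kilobytes on Linux but bytes on macOS. The function name peak_rss_after_import is hypothetical.

```python
import subprocess
import sys

# Child-process script: import the module named on the command line, then print
# the peak resident set size of that process.
_PROBE = (
    "import importlib, resource, sys\n"
    "importlib.import_module(sys.argv[1])\n"
    "print(resource.getrusage(resource.RUSAGE_SELF).ru_maxrss)\n"
)


def peak_rss_after_import(module: str) -> int:
    """Import `module` in a fresh interpreter and return its peak RSS.

    Units follow ru_maxrss: kilobytes on Linux, bytes on macOS.
    Raises subprocess.CalledProcessError if the import fails.
    """
    result = subprocess.run(
        [sys.executable, "-c", _PROBE, module],
        capture_output=True,
        text=True,
        check=True,
    )
    return int(result.stdout.strip())
```

Calling peak_rss_after_import("ovmsclient") and peak_rss_after_import("tensorflow_serving.apis.predict_pb2") in environments where each package is installed should give numbers comparable to the RES column; subtracting peak_rss_after_import("sys") removes the baseline of an otherwise empty interpreter.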

Importing a package that takes up a lot of space also increases initialization time, so a lightweight client helps here as well. The new Python client package is also easier to use than tensorflow-serving-api, because it provides utilities for end-to-end interaction with the model server. With ovmsclient, application developers do not need to know the details of the server API. The package provides convenience functions for every stage of the interaction, from setting up the connection and making API calls to unpacking the results into a standard format. Previously, developers needed to know which services accept which types of requests, and how to prepare requests and process responses manually.
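The initialization cost mentioned above can be checked in a similar way. This sketch times a single import in fresh interpreter processes with time.perf_counter and keeps the fastest run to reduce noise; import_seconds is a hypothetical helper name. For a per-module breakdown, CPython's built-in python -X importtime option is an alternative.

```python
import subprocess
import sys

# Child-process script: time one import of the module named on the command line.
# Only the target import falls between the two perf_counter() calls.
_TIMER = (
    "import importlib, sys, time\n"
    "start = time.perf_counter()\n"
    "importlib.import_module(sys.argv[1])\n"
    "print(time.perf_counter() - start)\n"
)


def import_seconds(module: str, runs: int = 3) -> float:
    """Return the fastest of `runs` import timings, each in a new interpreter."""
    timings = []
    for _ in range(runs):
        result = subprocess.run(
            [sys.executable, "-c", _TIMER, module],
            capture_output=True,
            text=True,
            check=True,
        )
        timings.append(float(result.stdout.strip()))
    return min(timings)
```

For example, compare import_seconds("ovmsclient") with import_seconds("tensorflow_serving.apis.predict_pb2"). The very first run after installation may be slower because the operating system has not cached the package files yet.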

Let's use ovmsclient

To run predictions with the ResNet-50 image classification model, deploy OpenVINO Model Server with that model. You can do this with the following command:

docker run -d --rm -p 9000:9000 openvino/model_server:latest \
--model_name resnet --model_path gs://ovms-public-eu/resnet50-binary \
--layout NHWC:NCHW --port 9000

This command starts the server with a ResNet-50 model downloaded from a public bucket on Google Cloud Storage. The --layout NHWC:NCHW option lets the server accept input in NHWC layout while the model itself uses NCHW. Once the model server is listening for gRPC calls on port 9000, you can start interacting with it. Next, install the ovmsclient package with pip:

pip3 install ovmsclient

Before running the client, download the image to classify and the ImageNet label file used to interpret the prediction results:

wget https://raw.githubusercontent.com/openvinotoolkit/model_server/main/demos/common/static/images/zebra...
wget https://raw.githubusercontent.com/openvinotoolkit/model_server/main/demos/common/python/classes.py

Zebra image used for prediction
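Because the container is started in the background with -d, it can take a moment before the server accepts connections. A small helper like the one below, a sketch using only the standard socket module, can poll the port before you create the client. A successful TCP connect only shows that something is accepting connections on the port (with Docker port publishing this may happen before the server inside the container is ready), so it does not prove the model has loaded; ovmsclient's get_model_status method is the better check for that. wait_for_port is a hypothetical name.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0) -> bool:
    """Poll until a TCP connection to host:port succeeds or `timeout` expires.

    Returns True as soon as a connection is accepted, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # Open and immediately close a connection; we only care that it succeeds.
            with socket.create_connection((host, port), timeout=1.0):
                return True
        except OSError:  # refused, unreachable, or timed out
            if time.monotonic() >= deadline:
                return False
            time.sleep(0.5)
```

For example, wait_for_port("localhost", 9000) returns True once the published port accepts connections, or False after 30 seconds.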

Step 1: Create a gRPC connection to the server

Now you can open the Python interpreter and create a gRPC connection to the model server.

$ python3
Python 3.6.9 (default, Jul 17 2020, 12:50:27)
[GCC 8.4.0] on linux
Type "help", "copyright", "credits" or "license" for more information.
>>> from ovmsclient import make_grpc_client
>>> client = make_grpc_client("localhost:9000")

Step 2: Request model metadata

The client object has three methods: get_model_status, get_model_metadata, and predict. To create a valid inference request, you need to know the model inputs, so let's request the model metadata:

>>> client.get_model_metadata(model_name="resnet")
{'model_version': 1, 'inputs': {'0': {'shape': [1, 224, 224, 3], 'dtype': 'DT_FLOAT'}}, 'outputs': {'1463': {'shape': [1, 1000], 'dtype': 'DT_FLOAT'}}}

Step 3: Send the JPEG image to the server

The model metadata shows that the model has one input and one output. The input is named "0" and expects data of shape (1, 224, 224, 3) with a floating-point data type. You now have all the information needed to run inference. Let's use the zebra image downloaded earlier. In this example, we use the binary input feature of the model server: it only requires loading the JPEG file and sending its encoded bytes in the prediction request. With binary input, no preprocessing is needed.
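When this step is scripted rather than typed into the interpreter, it can help to take the input name from the metadata instead of hard-coding "0", and to fail early if the file is not actually a JPEG (for example, if a download saved an HTML error page instead). The sketch below does both; input_name and build_binary_inputs are hypothetical helpers, and the JPEG check only looks at the leading FF D8 FF bytes, which is a quick sanity check rather than full validation. The dictionary it returns has the same form as the inputs argument passed to client.predict in the session that follows.

```python
# A JPEG file starts with the SOI marker FF D8, followed by another marker (FF ..).
JPEG_MAGIC = b"\xff\xd8\xff"


def input_name(metadata: dict) -> str:
    """Return the name of the model's only input, e.g. '0' for the ResNet-50
    model in this post. Raises ValueError if there is not exactly one input."""
    names = list(metadata["inputs"])
    if len(names) != 1:
        raise ValueError(f"expected exactly one input, got {names}")
    return names[0]


def build_binary_inputs(metadata: dict, path: str) -> dict:
    """Read a JPEG file and map its raw (still encoded) bytes to the model's
    input name, ready to pass as client.predict(inputs=...)."""
    with open(path, "rb") as f:
        data = f.read()
    if not data.startswith(JPEG_MAGIC):
        raise ValueError(f"{path} does not look like a JPEG file")
    return {input_name(metadata): data}
```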

>>> with open("zebra.jpeg", "rb") as f:
...     img = f.read()
...
>>> output = client.predict(inputs={"0": img}, model_name="resnet")
>>> type(output)
<class 'numpy.ndarray'>
>>> output.shape
(1, 1000)

Step 4: Map the output to ImageNet classes

The prediction succeeds and returns a numpy ndarray of shape (1, 1000), which matches the "outputs" section of the model metadata. The next step is to interpret the model output and extract the classification result.

In the (1, 1000) output, the first dimension is the batch size (the number of images processed), and the second holds the probability that the image belongs to each ImageNet class. To get the classification result, take the index of the maximum value along the second dimension. Then use the imagenet_classes dictionary from the classes.py file downloaded earlier to map that index to a class name and view the result.

>>> import numpy as np
>>> from classes import imagenet_classes
>>> result_index = np.argmax(output[0])
>>> imagenet_classes[result_index]
'zebra'

Conclusion

The new ovmsclient package is smaller than tensorflow-serving-api, consumes less memory, and is easier to use. In this blog post, we learned how to get model metadata and how to run prediction on a binary-encoded JPEG image through the gRPC interface of OpenVINO Model Server.
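The interactive steps can be collected into a single function. The sketch below assumes only what the session above shows: a client object with a predict(inputs=..., model_name=...) method returning a (batch, classes) array, and a label lookup indexed by class number, such as imagenet_classes. The function name classify_jpeg and its k parameter are hypothetical; returning the top k (label, score) pairs instead of a single label is an optional extension, and the ranking is the same whether the scores are probabilities or unnormalized logits. Passing the client in as a parameter also makes the function easy to test with a stub instead of a running server.

```python
def classify_jpeg(client, path, labels, model_name="resnet", input_name="0", k=1):
    """Send the raw bytes of a JPEG file to the model server and return the
    k best (label, score) pairs for the first image in the batch.

    `client` is assumed to behave like the object returned by
    ovmsclient.make_grpc_client(); `labels` maps class index -> name.
    """
    with open(path, "rb") as f:
        img = f.read()  # binary input: no decoding or resizing on the client side
    output = client.predict(inputs={input_name: img}, model_name=model_name)
    scores = output[0]  # scores for the first (and only) image in the batch
    # Sort class indices by score, highest first, and keep the top k.
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return [(labels[i], float(scores[i])) for i in ranked[:k]]
```

With the server from this post running, classify_jpeg(make_grpc_client("localhost:9000"), "zebra.jpeg", imagenet_classes) should return a one-element list whose label is 'zebra', matching the interactive result.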

More detailed examples, including running prediction with NumPy arrays, checking model status, and using the REST API, are available on GitHub: https://github.com/openvinotoolkit/model_server/tree/main/client/python/ovmsclient/samples

To learn more about ovmsclient features, see the API documentation:

https://github.com/openvinotoolkit/model_server/blob/main/client/python/ovmsclient/lib/docs/README.m...

This is the first version of the client library. It will evolve over time, but it can already run predictions against both OpenVINO Model Server and TensorFlow Serving with just a minimal Python package. Have questions or suggestions? Please open an issue on GitHub.

边栏推荐

- 你管这破玩意儿叫 MQ?

- 火绒安全与英特尔vPro平台合作 共筑软硬件协同安全新格局

- Architecture design methods in technical practice

- Homekit supports the matter protocol. What does this imply?

- .Net怎么使用日志框架NLog

- First exposure! The only Alibaba cloud native security panorama behind the highest level in the whole domain

- MySQL使用ReplicationConnection導致的連接失效分析與解决

- [Yunzhou said live room] - digital security special session will be officially launched tomorrow afternoon

- [website architecture] the unique skill of 10-year database design, practical design steps and specifications

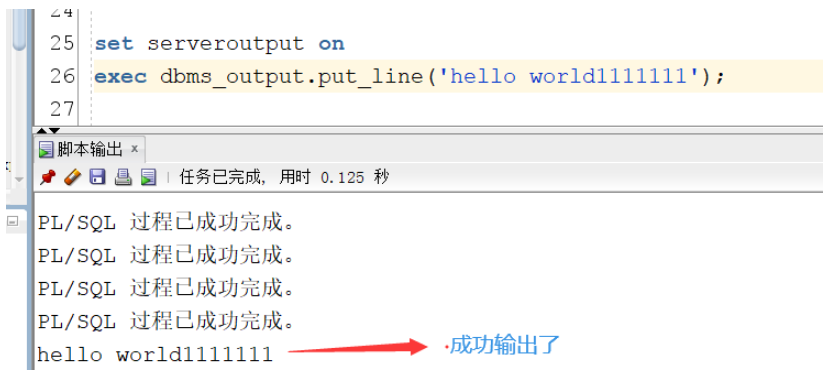

- Oracle中dbms_output.put_line怎么使用

猜你喜欢

Homekit and NFC support: smart Ting smart door lock SL1 only costs 149 yuan

通过 OpenVINO Model Server和 TensorFlow Serving简化部署

64 channel PCM telephone optical transceiver 64 channel telephone +2-channel 100M Ethernet telephone optical transceiver 64 channel telephone PCM voice optical transceiver

Oracle中dbms_output.put_line怎么使用

AAIG看全球6月刊(上)发布|AI人格真的觉醒了吗?NLP哪个细分方向最具社会价值?Get新观点新启发~

Go写文件的权限 WriteFile(filename, data, 0644)?

实战监听Eureka client的缓存更新

Common usage of OS (picture example)

kubernetes日志监控系统架构详解

逆向调试入门-了解PE结构文件

随机推荐

Groovy map operation

Hanyuan high tech USB3.0 optical transceiver USB industrial touch screen optical transceiver USB3.0 optical fiber extender USB3.0 optical fiber transmitter

从类、API、框架三个层面学习如何设计可复用软件的学习心得

PHP handwriting a perfect daemon

AAIG看全球6月刊(上)发布|AI人格真的觉醒了吗?NLP哪个细分方向最具社会价值?Get新观点新启发~

DBMS in Oracle_ output. put_ How to use line

实战 | 如何制作一个SLAM轨迹真值获取装置?

华三交换机配置SSH远程登录

Online text entity extraction capability helps applications analyze massive text data

使用OpenVINOTM预处理API进一步提升YOLOv5推理性能

32-way telephone +2-way Gigabit Ethernet 32-way PCM telephone optical transceiver supports FXO port FXS voice telephone to optical fiber

quartus调用&设计D触发器——仿真&时序波验证

怎么手写vite插件

What is the principle of live CDN in the process of building the source code of live streaming apps with goods?

Homekit and NFC support: smart Ting smart door lock SL1 only costs 149 yuan

R language uses matchit package for propensity matching analysis (set the matching method as nearest, match the control group and case group with the closest propensity score, 1:1 ratio), and use matc

2-optical-2-electric cascaded optical fiber transceiver Gigabit 2-optical-2-electric optical fiber transceiver Mini embedded industrial mine intrinsic safety optical fiber transceiver

Runtime application self-protection (rasp): self-cultivation of application security

OS的常见用法(图片示例)

HomeKit支持matter协议,这背后将寓意着什么?