First publicly available PyTorch reproduction of AlphaFold2: Columbia University open-sources OpenFold, which has passed 1,000 stars

2022-06-24 20:17:00 【Opencv school】

Source: the Almost Human official account, reprinted with authorization.

AlphaFold2 was the brightest star of AI for Science in 2021. Now it has been reproduced in PyTorch and open-sourced on GitHub. The reproduction is comparable in performance to the original AlphaFold2, and its compute and storage requirements are friendlier to the general public.

Recently, Mohammed AlQuraishi, an assistant professor of systems biology at Columbia University, announced on Twitter that his group has trained a new model called OpenFold, a trainable PyTorch reproduction of AlphaFold2. AlQuraishi also said that it is the first publicly available reproduction of AlphaFold2.

AlphaFold2 can routinely predict protein structures with atomic accuracy. Technically, it is built on multiple sequence alignments and deep learning, and it incorporates physical and biological knowledge of protein structure to improve its predictions. It achieved the outstanding result of predicting two-thirds of protein structures and was published in Nature. More surprisingly, the DeepMind team not only open-sourced the model but also released AlphaFold2's predictions as a free and open dataset.

However, open source does not mean usable, let alone easy to use. In practice, the AlphaFold2 software stack is very difficult to deploy: the hardware requirements are high, the datasets take a long time to download, and they occupy a lot of disk space. Any one of these is enough to put off ordinary developers. The open-source community has therefore been working hard on a usable version of AlphaFold2.

OpenFold, implemented by Professor Mohammed AlQuraishi and colleagues at Columbia University, took about 100,000 A100 hours to train in total, but about 90% of the accuracy was reached within roughly 3,000 hours.
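To put the training figures quoted above in proportion, the short calculation below works out what share of the total compute was needed to reach about 90% of the accuracy. The two hour counts come from the article. The `wall_clock_days` helper and the 8-GPU node size are hypothetical, added only to convert GPU hours into elapsed time, and assume perfect scaling.

```python
# Approximate figures quoted in the article.
total_a100_hours = 100_000
hours_to_90_percent = 3_000

# Share of the total budget spent before ~90% of the accuracy is reached.
fraction_of_budget = hours_to_90_percent / total_a100_hours
remaining_hours = total_a100_hours - hours_to_90_percent


def wall_clock_days(gpu_hours: float, num_gpus: int) -> float:
    """Convert GPU hours to elapsed days, assuming perfect scaling (hypothetical helper)."""
    return gpu_hours / num_gpus / 24


# Hypothetical node size, used only for illustration.
example_gpus = 8
days_to_90_percent = wall_clock_days(hours_to_90_percent, example_gpus)

print(f"Share of compute to reach ~90% of the accuracy: {fraction_of_budget:.0%}")
print(f"A100 hours spent on the remaining gain: {remaining_hours:,}")
print(f"Elapsed days on {example_gpus} GPUs (ideal scaling): {days_to_90_percent:.1f}")
```

Under these numbers, the bulk of the training budget goes into the last part of the accuracy, which is why a partially trained model may already be useful for experimentation.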

OpenFold's accuracy is comparable to that of the original AlphaFold2 and even slightly better, possibly because OpenFold's training set is slightly larger.

OpenFold's main advantage is significantly faster inference: for shorter protein sequences, it can run up to twice as fast as AlphaFold2. In addition, thanks to custom CUDA kernels, OpenFold can run inference on longer protein sequences with less memory.

Introduction to OpenFold

OpenFold reproduces nearly all features of the original open-source inference code (v2.0.1). The exception is model ensembling, which is being phased out and performed poorly in DeepMind's own ablation tests.

With or without DeepSpeed, OpenFold can be trained in full precision or in bfloat16. To match AlphaFold2's original performance, the team trained OpenFold from scratch and has publicly released the model weights and training data. The training data includes approximately 400,000 MSAs and PDB70 template files. OpenFold also supports protein inference with AlphaFold's official parameters.
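For readers unfamiliar with bfloat16, the sketch below illustrates the format itself using only the Python standard library. A bfloat16 value is the top 16 bits of an IEEE float32, so it keeps float32's exponent range but only about 8 bits of precision. This is not OpenFold code. The function names are made up for the illustration, and NaN inputs are deliberately not handled.

```python
import struct


def to_bfloat16_bits(x: float) -> int:
    """Return the 16-bit bfloat16 pattern for x (round to nearest even).

    Illustrative helper; NaN inputs are not handled.
    """
    # Reinterpret the float32 encoding of x as an unsigned 32-bit integer.
    (bits32,) = struct.unpack("<I", struct.pack("<f", x))
    # Add a bias so that dropping the low 16 bits rounds to nearest, ties to even.
    rounding_bias = 0x7FFF + ((bits32 >> 16) & 1)
    return ((bits32 + rounding_bias) >> 16) & 0xFFFF


def from_bfloat16_bits(bits16: int) -> float:
    """Expand a bfloat16 bit pattern back to a Python float."""
    (value,) = struct.unpack("<f", struct.pack("<I", (bits16 & 0xFFFF) << 16))
    return value


def round_trip(x: float) -> float:
    """Value of x after being stored as bfloat16."""
    return from_bfloat16_bits(to_bfloat16_bits(x))


for sample in (1.0, 3.14159, 1e30):
    stored = round_trip(sample)
    rel_err = abs(stored - sample) / abs(sample)
    print(f"{sample!r} -> {stored!r} (relative error {rel_err:.2e})")
```

The large value survives with a small relative error because the exponent field is as wide as float32's. The price is the coarse mantissa, which is why reduced-precision training is typically offered alongside a full-precision option.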

Compared with other implementations, OpenFold has the following advantages:

- Short-sequence inference: faster GPU inference on chains of fewer than 1,500 amino acid residues;

- Long-sequence inference: with its implementation of low-memory attention, OpenFold can run inference on very long chains. It can predict the structures of sequences with more than 4,000 residues on a single A100, and even longer ones with CPU offloading;

- Memory-efficient training and inference: custom CUDA attention kernels, modified from FastFold's kernels, use 4x and 5x less GPU memory than the equivalent FastFold and stock PyTorch implementations, respectively;

- Efficient alignment scripts: using either the original AlphaFold HHblits/JackHMMER pipeline or ColabFold with MMseqs2, the team has generated millions of alignments.
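The low-memory attention mentioned in the list above can be illustrated with a small, pure-Python sketch. It shows one common way to cut attention memory: process the keys and values in chunks and keep only a running maximum, a running softmax denominator, and a running weighted sum, so the full score matrix is never stored. This is an assumption-laden illustration of the general idea, not OpenFold's PyTorch or CUDA code. The function names and the `chunk_size` parameter are hypothetical.

```python
import math


def _score(q, k):
    """Scaled dot product between one query and one key."""
    return sum(a * b for a, b in zip(q, k)) / math.sqrt(len(k))


def naive_attention(queries, keys, values):
    """Reference version: builds the full row of scores for each query."""
    dv = len(values[0])
    out = []
    for q in queries:
        scores = [_score(q, k) for k in keys]
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        out.append([sum(w * v[j] for w, v in zip(weights, values)) / total
                    for j in range(dv)])
    return out


def chunked_attention(queries, keys, values, chunk_size=2):
    """Same result, but only `chunk_size` scores exist at any one time."""
    dv = len(values[0])
    out = []
    for q in queries:
        running_max = -math.inf   # largest score seen so far
        running_sum = 0.0         # softmax denominator, relative to running_max
        acc = [0.0] * dv          # weighted sum of values, relative to running_max
        for start in range(0, len(keys), chunk_size):
            k_chunk = keys[start:start + chunk_size]
            v_chunk = values[start:start + chunk_size]
            scores = [_score(q, k) for k in k_chunk]
            new_max = max(running_max, max(scores))
            # Rescale earlier partial results to the new maximum.
            scale = math.exp(running_max - new_max)
            running_sum *= scale
            acc = [a * scale for a in acc]
            for s, v in zip(scores, v_chunk):
                w = math.exp(s - new_max)
                running_sum += w
                for j in range(dv):
                    acc[j] += w * v[j]
            running_max = new_max
        out.append([a / running_sum for a in acc])
    return out


# Tiny example: 2 queries, 5 keys of dimension 2, values of dimension 3.
example_q = [[1.0, 0.0], [0.5, -0.5]]
example_k = [[0.1, 0.2], [0.9, -0.3], [-0.4, 0.8], [0.0, 0.0], [2.0, 1.0]]
example_v = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0],
             [1.0, 1.0, 1.0], [-1.0, 2.0, 0.5]]

full = naive_attention(example_q, example_k, example_v)
chunked = chunked_attention(example_q, example_k, example_v, chunk_size=2)
max_diff = max(abs(a - b) for ra, rb in zip(full, chunked) for a, b in zip(ra, rb))
print(f"Largest difference between the two versions: {max_diff:.2e}")
```

The trade-off is extra arithmetic for the rescaling step in exchange for peak memory that depends on the chunk size rather than on the full sequence length, which is what makes very long chains feasible on a single device.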

Installation and usage on Linux

The development team provides a script that installs Miniconda locally, creates a conda virtual environment, installs all Python dependencies, and downloads useful resources, including two sets of model parameters.

Run the following command:

scripts/install_third_party_dependencies.sh

To activate the environment, run:

source scripts/activate_conda_env.sh

To deactivate it, run:

source scripts/deactivate_conda_env.sh

With the environment active, compile OpenFold's CUDA kernels:

python3 setup.py install

To install HH-suite under /usr/bin, run the following as root (the leading # is a root prompt, not part of the command):

# scripts/install_hh_suite.sh

To download the databases used to train OpenFold and AlphaFold, run:

bash scripts/download_data.sh data/

To run inference on one or more sequences using a set of DeepMind's pretrained parameters, you can run the following:

python3 run_pretrained_openfold.py \
    fasta_dir \
    data/pdb_mmcif/mmcif_files/ \
    --uniref90_database_path data/uniref90/uniref90.fasta \
    --mgnify_database_path data/mgnify/mgy_clusters_2018_12.fa \
    --pdb70_database_path data/pdb70/pdb70 \
    --uniclust30_database_path data/uniclust30/uniclust30_2018_08/uniclust30_2018_08 \
    --output_dir ./ \
    --bfd_database_path data/bfd/bfd_metaclust_clu_complete_id30_c90_final_seq.sorted_opt \
    --model_device "cuda:0" \
    --jackhmmer_binary_path lib/conda/envs/openfold_venv/bin/jackhmmer \
    --hhblits_binary_path lib/conda/envs/openfold_venv/bin/hhblits \
    --hhsearch_binary_path lib/conda/envs/openfold_venv/bin/hhsearch \
    --kalign_binary_path lib/conda/envs/openfold_venv/bin/kalign \
    --config_preset "model_1_ptm" \
    --openfold_checkpoint_path openfold/resources/openfold_params/finetuning_2_ptm.pt

For more details, see GitHub: https://github.com/aqlaboratory/openfold
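Because the inference command has many long arguments, it may be convenient to assemble it programmatically, for example when running several FASTA directories in a batch. The sketch below only builds and prints the argument list and does not run anything. It uses exactly the flags and paths shown in the article. The `build_inference_command` function and its parameter names are hypothetical and are not part of OpenFold.

```python
import shlex


def build_inference_command(fasta_dir, mmcif_dir, databases, binaries,
                            output_dir, device, config_preset, checkpoint_path):
    """Assemble the argument list for run_pretrained_openfold.py.

    Hypothetical helper: it only mirrors the flags shown in the article.
    """
    argv = ["python3", "run_pretrained_openfold.py", fasta_dir, mmcif_dir]
    for name, path in databases.items():
        argv += [f"--{name}_database_path", path]
    argv += ["--output_dir", output_dir, "--model_device", device]
    for name, path in binaries.items():
        argv += [f"--{name}_binary_path", path]
    argv += ["--config_preset", config_preset,
             "--openfold_checkpoint_path", checkpoint_path]
    return argv


# Paths copied from the command in the article.
databases = {
    "uniref90": "data/uniref90/uniref90.fasta",
    "mgnify": "data/mgnify/mgy_clusters_2018_12.fa",
    "pdb70": "data/pdb70/pdb70",
    "uniclust30": "data/uniclust30/uniclust30_2018_08/uniclust30_2018_08",
    "bfd": "data/bfd/bfd_metaclust_clu_complete_id30_c90_final_seq.sorted_opt",
}
env_bin = "lib/conda/envs/openfold_venv/bin"
binaries = {tool: f"{env_bin}/{tool}"
            for tool in ("jackhmmer", "hhblits", "hhsearch", "kalign")}

command = build_inference_command(
    fasta_dir="fasta_dir",
    mmcif_dir="data/pdb_mmcif/mmcif_files/",
    databases=databases,
    binaries=binaries,
    output_dir="./",
    device="cuda:0",
    config_preset="model_1_ptm",
    checkpoint_path="openfold/resources/openfold_params/finetuning_2_ptm.pt",
)

# Print a shell-safe, copy-pasteable version of the command.
print(shlex.join(command))
```

Keeping the arguments as a list rather than one long string avoids quoting mistakes, and the list could be passed to `subprocess.run` once the environment and databases are in place.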

Reference link :

https://cloud.tencent.com/developer/article/1861192

https://twitter.com/MoAlQuraishi

边栏推荐

- Programmers spend most of their time not writing code, but...

- 【云驻共创】ModelBox隔空作画 绘制你的专属画作

- SQL export CSV data, unlimited number of entries

- If the programmer tells the truth during the interview

- Some small requirements for SQL Engine for domestic database manufacturers

- Install the custom module into the system and use find in the independent project_ Package found

- Win7 10 tips for installing Office2010 five solutions for installing MSXML components

- Php OSS file read and write file, workerman Generate Temporary file and Output Browser Download

- Bytebase加入阿里云PolarDB开源数据库社区

- 京东一面:Redis 如何实现库存扣减操作?如何防止商品被超卖?

猜你喜欢

Making startup U disk -- Chinese cabbage U disk startup disk making tool V5.1

Two solutions to the problem of 0xv0000225 unable to start the computer

Digital twin industry case: Digital Smart port

LCD12864 (ST7565P) Chinese character display (STM32F103)

Teach you how to view the number of connected people on WiFi in detail how to view the number of connected people on WiFi

年轻人捧红的做饭生意经:博主忙卖课带货,机构月入百万

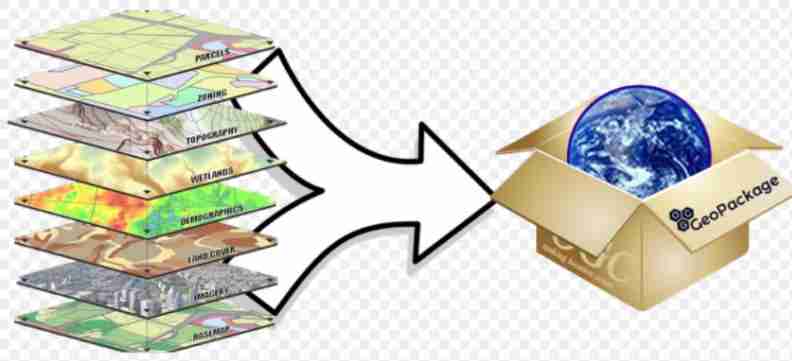

What other data besides SHP data

Zadig + cave Iast: let safety dissolve in continuous delivery

两位湖南老乡,联手干出一个百亿IPO

【Go語言刷題篇】Go從0到入門4:切片的高級用法、初級複習與Map入門學習

随机推荐

Saltstack state state file configuration instance

2022年最新四川建筑八大员(电气施工员)模拟题库及答案

Bytebase 加入阿裏雲 PolarDB 開源數據庫社區

What about the Golden Angel of thunder one? Golden Angel mission details

RF_DC系统时钟设置GEN1/GEN2

首个大众可用PyTorch版AlphaFold2复现,哥大开源OpenFold,star量破千

RF_ DC system clock setting gen1/gen2

用手机摄像头就能捕捉指纹?!准确度堪比签字画押,专家:你们在加剧歧视

【CANN文档速递04期】揭秘昇腾CANN算子开发

应用实践 | 海量数据,秒级分析!Flink+Doris 构建实时数仓方案

视频平台如何将旧数据库导入到新数据库?

Stackoverflow 年度报告 2022:开发者最喜爱的数据库是什么?

Data backup and recovery of PgSQL

【CANN文档速递06期】初识TBE DSL算子开发

SQL export CSV data, unlimited number of entries

Docker installing MySQL

IP address to integer

Hutool reads large excel (over 10m) files

Landcover100, planned land cover website

JVM tuning