
[Machine Translation] - Calculating BLEU Scores

2022-06-26 02:44:00 【Muasci】

Preface

Recently I have been stuck trying to reproduce the results of a published paper. Specifically, I used the script released with that work, which relies on fairseq-generate to evaluate the results, and found that my scores were completely inconsistent with those reported in the paper.

First, in preprocessing, as described in my earlier post "Notes on training a multilingual machine translation model", I tokenized with the Moses tokenizer, lowercased with the Moses lowercasing script, and finally learned and applied BPE subwords with subword-nmt (learn-bpe and apply-bpe). Admittedly, lowercasing makes model performance comparisons less meaningful (a tip from a senior labmate). Alternatively, a tool such as sentencepiece can learn BPE directly on raw text without a separate tokenization step, but this post does not cover that option.

How do others do it?

The main reference for this section is "Computing and reporting BLEU scores".

Consider the following setup: the machine translation training and test data are all tokenized, truecased, and segmented into BPE subwords. In post-processing, even when the reference (ref) and the model output (hyp) go through exactly the same operations, different choices of operations produce very different BLEU scores, as shown in the figure below:

The main findings are as follows :

- [Row 1 vs. Row 2] BLEU computed at the BPE level is higher. Explanation: Row 1 applies no post-processing at all, i.e., both hyp and ref are tokenized, truecased BPE subwords. Because the units are finer-grained, a word that is wrong at the word level is broken into subwords, some of which may still count as "correct" matches.

- [Row 3 vs. Row 4] Skipping SacreBLEU's standard tokenization gives a higher BLEU score. Explanation: Row 3 does not detokenize and also disables SacreBLEU's standard tokenization; Row 4 first detokenizes ("tokenization" in Row 4 should be read as detokenization) and then applies SacreBLEU's standard tokenization (the default is the 13a tokenizer). Row 3 is generally called tokenized BLEU (wrong) and Row 4 detokenized BLEU (right). I do not yet understand why the two differ so much, though.

- [Row 5] Ignoring case, i.e., lowercasing everything, gives a higher BLEU score.

- [Row 6] This should also count as tokenized BLEU: detokenization is done first, but when BLEU is computed the text is tokenized again with some third-party tokenizer instead of SacreBLEU's own, and this choice also affects the final score.
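To make rows 1/2 and row 5 concrete, here is a minimal corpus BLEU written from the standard definition (clipped n-gram precision up to 4-grams, geometric mean, brevity penalty). It is a sketch, not SacreBLEU: it has no smoothing and splits on whitespace only, so whatever tokenization the strings already carry is exactly what gets scored. The sentences and the "@@ " segmentations are made up for illustration; a real subword-nmt model may split these words differently.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count all n-grams (as tuples) in a list of tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(hyps, refs, max_n=4):
    """Corpus BLEU, one reference per sentence, uniform weights, no smoothing.

    hyps and refs are lists of strings. They are split on whitespace only,
    so the score depends entirely on how the strings were tokenized before.
    """
    matches, totals = [0] * max_n, [0] * max_n
    hyp_len = ref_len = 0
    for hyp_str, ref_str in zip(hyps, refs):
        hyp, ref = hyp_str.split(), ref_str.split()
        hyp_len += len(hyp)
        ref_len += len(ref)
        for n in range(1, max_n + 1):
            h, r = ngrams(hyp, n), ngrams(ref, n)
            # Clipped counts: a hypothesis n-gram is credited at most as
            # many times as it occurs in the reference.
            matches[n - 1] += sum(min(c, r[g]) for g, c in h.items())
            totals[n - 1] += max(len(hyp) - n + 1, 0)
    if min(matches) == 0:
        return 0.0  # some n-gram order has no match, so the geometric mean is 0
    log_prec = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    # Brevity penalty: penalize hypotheses that are shorter than the references.
    bp = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return 100 * bp * math.exp(log_prec)


# Word level: each hypothesis gets exactly one inflection wrong.
refs_word = ["we were walking home after the long meeting",
             "they saw the longest queue at the station"]
hyps_word = ["we were walked home after the long meeting",
             "they saw the longer queue at the station"]

# The same sentences under a hypothetical BPE segmentation with "@@ " markers.
refs_bpe = ["we were walk@@ ing home after the long meeting",
            "they saw the long@@ est queue at the station"]
hyps_bpe = ["we were walk@@ ed home after the long meeting",
            "they saw the long@@ er queue at the station"]

word = corpus_bleu(hyps_word, refs_word)
bpe = corpus_bleu(hyps_bpe, refs_bpe)
print(f"word-level BLEU: {word:.1f}")  # 55.3
print(f"BPE-level BLEU:  {bpe:.1f}")   # 59.7

# Row 5: a casing error stops counting once both sides are lowercased.
hyp, ref = "The cat sat on the mat", "the cat sat on the mat"
print(f"cased:      {corpus_bleu([hyp], [ref]):.1f}")                  # 76.0
print(f"lowercased: {corpus_bleu([hyp.lower()], [ref.lower()]):.1f}")  # 100.0
```

On this toy data the BPE-level score is higher because the shared prefixes walk@@ and long@@ still earn unigram and longer n-gram matches even though the full words are wrong. This only illustrates the mechanism behind row 1 vs. row 2; it does not reproduce the article's numbers.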

Summary:

- Use SacreBLEU!

- Before computing BLEU, fully undo the preprocessing (undo BPE, undo truecasing, detokenize, etc.)!

My mistake

From the previous section it is clear that my preprocessing is not the main problem (lowercasing merely inflates the scores somewhat). My main problem was that when running fairseq-generate, I did not pass any of the --bpe, --bpe-codes, --tokenizer, --scoring, or --post-process arguments; in other words, I did no post-processing at all. Each argument does the following:

- bpe: undoes BPE through the call bpe.decode(x). With --bpe subword_nmt, this amounts to:

(x + " ").replace(self.bpe_symbol, "").rstrip(), where self.bpe_symbol is "@@ ".

- tokenizer: detokenizes through the call tokenizer.decode(x). With --tokenizer moses, this amounts to:

MosesDetokenizer.detokenize(inp.split())

- scoring: creates the BLEU scorer through scorer = scoring.build_scorer(cfg.scoring, tgt_dict). If scoring='bleu' (the default), the score is accumulated with scorer.add(target_tokens, hypo_tokens). Here target_str/hypo_str have first gone through some post-processing (possibly undoing BPE and detokenizing, or possibly nothing at all, in which case all the tokenized BPE subwords of the sentence are simply joined into one string with " " as the separator); fairseq then tokenizes them again with its own simple tokenizer (fairseq/fairseq/tokenizer.py) to obtain target_tokens/hypo_tokens, and BLEU is computed on those. If instead scoring='sacrebleu', the call is scorer.add_string(target_str, detok_hypo_str). In this case, if all the required post-processing has been done, target_str is plain original text and so is detok_hypo_str; SacreBLEU's standard tokenization is then applied when BLEU is computed, which makes the scores far more comparable.

- post-process: this parameter duplicates the bpe parameter.
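The first part of this chain can be approximated in a few lines of standard-library Python. remove_bpe copies the subword_nmt expression quoted above; tokenize_line is my re-creation of the whitespace tokenization that, as far as I can tell, fairseq/tokenizer.py applies before the 'bleu' scorer counts n-grams, so treat it as an approximation rather than fairseq's own code. Moses detokenization is left out because it needs the third-party sacremoses package.

```python
import re

# subword-nmt marks every non-final subword with "@@"; fairseq strips "@@ " (with the space).
BPE_SYMBOL = "@@ "
SPACE_NORMALIZER = re.compile(r"\s+")


def remove_bpe(x, bpe_symbol=BPE_SYMBOL):
    """Undo subword-nmt BPE, e.g. 'walk@@ ed' -> 'walked'."""
    # The appended space lets a dangling '@@' at the very end be stripped too.
    return (x + " ").replace(bpe_symbol, "").rstrip()


def tokenize_line(line):
    """Collapse whitespace runs and split on spaces (approximation of fairseq's tokenizer)."""
    line = SPACE_NORMALIZER.sub(" ", line)
    return line.strip().split()


hyp = "we were walk@@ ed home ."

# No --bpe: the default 'bleu' scorer would count n-grams over subword pieces.
print(tokenize_line(hyp))              # ['we', 'were', 'walk@@', 'ed', 'home', '.']
# --bpe subword_nmt: BPE is undone first, so n-grams are counted over words.
print(tokenize_line(remove_bpe(hyp)))  # ['we', 'were', 'walked', 'home', '.']
```

Without --bpe, pieces like walk@@ and ed are scored as separate tokens, which corresponds to the BPE-level BLEU discussed above.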

The (probably) correct approach

CUDA_VISIBLE_DEVICES=5 fairseq-generate ../../data/iwslt14/data-bin --path checkpoints/iwslt14/baseline/evaluate_bleu/checkpoint_best.pt --task translation_multi_simple_epoch --source-lang ar --target-lang en --encoder-langtok "src" --decoder-langtok --bpe subword_nmt --tokenizer moses --scoring sacrebleu --bpe-codes /home/syxu/data/iwslt14/code --lang-pairs "ar-en,de-en,en-ar,en-de,en-es,en-fa,en-he,en-it,en-nl,en-pl,es-en,fa-en,he-en,it-en,nl-en,pl-en" --quiet

The key change is passing four arguments: --bpe, --bpe-codes, --tokenizer, and --scoring. The results are as follows:

TBC

Alternatively, you can ignore the BLEU score reported by fairseq-generate, write the hypotheses and references to hyp.txt and ref.txt, and then score them with the sacrebleu tool.
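One way to produce those two files, sketched under assumptions: run the same fairseq-generate command without --quiet and save its output (gen.out is a file name chosen here for illustration). As far as I know, fairseq-generate then prints, for each sentence, a T-<id> line with the reference and a D-<id> line with the score and the detokenized hypothesis, and the sentences are not necessarily in id order. The hypothetical script below (split_gen.py) keeps only those two kinds of lines, sorts them by id, and writes hyp.txt and ref.txt. It assumes --bpe and --tokenizer were passed, so both sides are already fully post-processed.

```python
import sys


def split_generate_output(lines):
    """Return (hyps, refs) from fairseq-generate output, ordered by sentence id.

    Uses T-<id>\t<reference> and D-<id>\t<score>\t<hypothesis> lines;
    every other line (S-, H-, P-, logging) is ignored.
    """
    refs, hyps = {}, {}
    for line in lines:
        fields = line.rstrip("\n").split("\t")
        tag, _, idx = fields[0].partition("-")
        if not idx.isdigit():
            continue  # not a per-sentence line
        if tag == "T" and len(fields) >= 2:
            refs[int(idx)] = fields[1]
        elif tag == "D" and len(fields) >= 3:
            # keep only the first (best) hypothesis if --nbest > 1
            hyps.setdefault(int(idx), fields[2])
    ids = sorted(refs)
    return [hyps[i] for i in ids], [refs[i] for i in ids]


def write_lines(path, lines):
    """Write one sentence per line, UTF-8."""
    with open(path, "w", encoding="utf-8") as f:
        f.writelines(line + "\n" for line in lines)


if __name__ == "__main__" and len(sys.argv) == 2:
    # usage: python split_gen.py gen.out
    with open(sys.argv[1], encoding="utf-8") as f:
        hyps, refs = split_generate_output(f)
    write_lines("hyp.txt", hyps)
    write_lines("ref.txt", refs)
```

The two files can then be scored with the sacrebleu command-line tool, e.g. sacrebleu ref.txt -i hyp.txt -m bleu (sacrebleu 2.x syntax), which applies the 13a tokenizer by default.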

TBC

Open questions

- Why do tokenized and detokenized BLEU differ so much? My senior labmate suggested it may depend on the language: for some scripts (the Latin alphabet was mentioned as an example), tokenized BLEU means segmenting words with separators specific to the Latin alphabet, which may split the text into individual characters.

- What is fairseq-generate's --sacrebleu option for? It seems to be useless.

Reference material

TBC

边栏推荐

- 使用 AnnotationDbi 转换 R 中的基因名称

- WPF window centering & change trigger mechanism

- MySQL must master 4 languages!

- Google 推荐在 MVVM 架构中使用 Kotlin Flow

- Is it safe to trade stocks with a compass? How does the compass trade stocks? Do you need to open an account

- R 语言降维的 PCA 与自动编码器

- DF reports an error stale file handle

- Share some remote office experience in Intranet operation | community essay solicitation

- MySQL doit maîtriser 4 langues!

- How to check and cancel subscription auto renewal on iPhone or iPad

猜你喜欢

UTONMOS:以数字藏品助力华夏文化传承和数字科技发展

How to solve the problem that the iPhone 13 lock screen cannot receive the wechat notification prompt?

Install development cross process communication

![[机器翻译]—BLEU值的计算](/img/c3/8f98db33eb0ab5a016621d21d971e4.png)

[机器翻译]—BLEU值的计算

如何在 R 中的绘图中添加回归方程

@Query difficult and miscellaneous diseases

Ardiuno smart mosquito racket

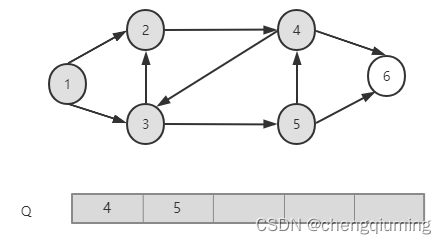

图的广度优先遍历

Digital commodity DGE -- the dark horse of wealth in digital economy

How do I take a screenshot of the iPad? 7 ways to take quick screenshots of iPad

随机推荐

vulhub复现一 activemq

Redis6.0新特性——ACL(权限控制列表)实现限制用户可执行命令和KEY

A few simple ways for programmers to exercise their waist

Share some remote office experience in Intranet operation | community essay solicitation

Largeur d'abord traversée basée sur la matrice de contiguïté

记一个RestControll和Controller 引起的折磨BUG

【机器学习】基于多元时间序列对高考预测分析案例

df报错Stale file handle

[machine learning] case study of college entrance examination prediction based on multiple time series

. Net7 miniapi (special part):preview5 optimizes JWT verification (Part 2)

.NET7之MiniAPI(特别篇) :Preview5优化了JWT验证(下)

WPF window centering & change trigger mechanism

MySQL必须掌握4种语言!

How do I fix the iPhone green screen problem? Try these solutions

饼图变形记,肝了3000字,收藏就是学会!

Fresh graduates talk about their graduation stories

Multi surveyor Gongshu Xiao sir_ The solution of using websocket error reporting under working directory

OpenAPI 3.0 规范-食用指南

Which SMS plug-in is easy to use? The universal form needs to be tested by sending SMS

Deep understanding of distributed cache design