Crawler framework

2022-06-23 08:03:00 【Mysterious world】

You go online every day. Would you like to collect useful data while you are there? If so, here is one way to do it: a web crawler.

What is a crawler?

- Crawler: a program that automatically collects information from the Internet.

- It can start from a single URL and follow links to every URL reachable from it, extracting the valuable data we need from each page along the way.

- The pipeline is: discover the target data automatically, download it automatically, parse it automatically, and store it automatically.

So what is the value of crawler technology?

- Put with some exaggeration: "the Internet's data, at my disposal"!

- Internet data can be put to better use a second time, a third time, or any number of times.

Simple crawler framework

Crawler scheduler

URL manager

Web downloader (for example: urllib2)

Web parser (for example: BeautifulSoup)

Data storage

How it works

1. First, the scheduler asks the URL manager: is there a URL waiting to be crawled?

2. The URL manager answers the scheduler: yes or no.

3. If there is, the scheduler asks the URL manager for one URL to crawl.

4. Next, the scheduler tells the downloader: download the content of this URL and hand it back to me.

5. Next, the scheduler tells the parser: parse the content of this URL and return the result to me.

6. Finally, the scheduler tells the data storage: keep the data I am giving you.
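The six steps above can be sketched as a single loop. This is only a minimal illustration written for Python 3: the function name `crawl`, its parameters, the `max_pages` limit, and the toy `site` dictionary are all hypothetical, and the first-in-first-out queue is an assumption rather than the article's own choice.

```python
from collections import deque


def crawl(root_url, download, parse, store, max_pages=10):
    """Run the six-step loop; download, parse and store are plug-in callables."""
    pending = deque([root_url])   # URLs waiting to be crawled
    seen = {root_url}             # every URL ever queued, to avoid repeats
    crawled = 0
    while pending and crawled < max_pages:   # steps 1-2: anything left to crawl?
        url = pending.popleft()              # step 3: take one URL
        content = download(url)              # step 4: fetch its content
        if content is None:
            continue
        new_urls, data = parse(url, content)  # step 5: extract links and data
        store(data)                           # step 6: keep the data
        crawled += 1
        for new_url in new_urls:
            if new_url not in seen:
                seen.add(new_url)
                pending.append(new_url)
    return crawled


# Toy stand-ins (hypothetical) so the loop runs without a network connection:
# each "page" is just the list of pages it links to.
site = {"a": ["b", "c"], "b": ["a"], "c": []}
records = []
count = crawl(
    "a",
    download=lambda url: site.get(url),
    parse=lambda url, links: (links, {"url": url}),
    store=records.append,
)
print(count, [record["url"] for record in records])
```

Passing the downloader, parser, and storage in as callables keeps the scheduler independent of how each component is implemented.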

Python simple crawler framework program (partial)

Crawler scheduler

Principle

- A traditional crawler starts from the URLs of one or several initial web pages and fetches them. While crawling, it keeps extracting new URLs from the current page and putting them in the queue until one of the system's stop conditions is met.

- A focused crawler has a more complex workflow. It uses a web page analysis algorithm to filter out links that are irrelevant to the topic, keeps the useful ones, and puts them in the queue of URLs waiting to be crawled. It then picks the next URL from the queue according to a search strategy and repeats the process until one of the system's stop conditions is reached.

- In addition, every crawled page is stored by the system, analyzed, filtered, and indexed for later query and retrieval. For a focused crawler, the results of this analysis may also feed back into and guide later crawling.
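The link filtering done by a focused crawler can be illustrated with a very small relevance check. The article does not say which analysis algorithm is used, so the keyword match below is purely an assumption, and `is_relevant`, the sample links, and the keywords are hypothetical.

```python
def is_relevant(anchor_text, url, keywords):
    """Return True if any topic keyword appears in the link text or the URL."""
    haystack = (anchor_text + " " + url).lower()
    return any(keyword.lower() in haystack for keyword in keywords)


# Hypothetical links found on a page: (anchor text, URL).
found_links = [
    ("Python tutorial", "http://example.com/python"),
    ("Contact us", "http://example.com/contact"),
    ("Web crawler basics", "http://example.com/crawler"),
]
topic_keywords = ["python", "crawler"]

# Only the relevant links go into the queue of URLs waiting to be crawled.
to_crawl = [url for text, url in found_links
            if is_relevant(text, url, topic_keywords)]
print(to_crawl)
```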

URL manager

- Add a single URL

- Check whether the collection still holds a new, uncrawled URL

- Get a new URL

- Add a batch of URLs
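The four operations listed above could look roughly like this. It is a sketch under assumptions, not the article's code: the class name, the method names, and the choice of two sets (one for uncrawled URLs, one for crawled URLs) are all hypothetical.

```python
class UrlManager:
    """Tracks which URLs are waiting to be crawled and which are done."""

    def __init__(self):
        self.new_urls = set()   # discovered but not yet crawled
        self.old_urls = set()   # already handed out for crawling

    def add_new_url(self, url):
        """Add a single URL unless it is empty or already known."""
        if not url:
            return
        if url not in self.new_urls and url not in self.old_urls:
            self.new_urls.add(url)

    def add_new_urls(self, urls):
        """Add a batch of URLs."""
        for url in urls or []:
            self.add_new_url(url)

    def has_new_url(self):
        """Return True while at least one uncrawled URL remains."""
        return len(self.new_urls) > 0

    def get_new_url(self):
        """Hand out one uncrawled URL and mark it as crawled."""
        url = self.new_urls.pop()   # set.pop() returns an arbitrary element
        self.old_urls.add(url)
        return url


manager = UrlManager()
manager.add_new_url("http://example.com/a")
manager.add_new_urls(["http://example.com/a", "http://example.com/b"])
first = manager.get_new_url()
manager.add_new_url(first)   # ignored: this URL was already handed out
```

Using sets makes the duplicate check cheap, at the cost of not controlling the order in which URLs come back out.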

Web downloader (urllib2)
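A downloader can be very small. `urllib2` exists only in Python 2; the sketch below assumes Python 3, where the same functionality lives in `urllib.request`. The function name `download` and the `timeout` default are hypothetical.

```python
import urllib.request


def download(url, timeout=10):
    """Return the raw bytes of the page at `url`, or None if it cannot be used."""
    if url is None:
        return None
    with urllib.request.urlopen(url, timeout=timeout) as response:
        # getcode() is None for non-HTTP schemes such as data: or file:.
        status = response.getcode()
        if status not in (None, 200):
            return None
        return response.read()


# A data: URL is handled locally by urllib, so this runs without a network.
body = download("data:text/plain,hello")
print(body)
```

For real pages the result is bytes, which still have to be decoded or passed to a parser that detects the encoding.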

### Fetching strategy

Depth-first traversal strategy

- The crawler starts from the start page and follows one link after another along a single path. When that path is exhausted, it moves on to the next start page and continues following links.

Breadth-first traversal strategy

- The basic idea is to append the links found in a newly downloaded page directly to the end of the queue of URLs waiting to be crawled. In other words, the crawler first fetches every page linked from the start page, then picks one of those pages and fetches every page linked from it, and so on.
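The difference between the two traversal orders comes down to which end of the waiting list the next URL is taken from. The sketch below runs both on a small, hypothetical link graph (`LINKS`); the function name `crawl_order` is also an assumption.

```python
from collections import deque

# Hypothetical link graph: each page maps to the pages it links to.
LINKS = {
    "A": ["B", "C"],
    "B": ["D", "E"],
    "C": ["F"],
    "D": [], "E": [], "F": [],
}


def crawl_order(start, breadth_first):
    """Return the order in which pages are visited."""
    frontier = deque([start])
    seen = {start}
    order = []
    while frontier:
        # Breadth-first takes the oldest URL, depth-first the newest.
        page = frontier.popleft() if breadth_first else frontier.pop()
        order.append(page)
        links = LINKS[page]
        # Reverse for depth-first so the first link on a page is followed first.
        for link in (links if breadth_first else reversed(links)):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return order


print(crawl_order("A", breadth_first=True))   # ['A', 'B', 'C', 'D', 'E', 'F']
print(crawl_order("A", breadth_first=False))  # ['A', 'B', 'D', 'E', 'C', 'F']
```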

Backlink count strategy

- The backlink count is the number of links from other web pages that point to a given page. It indicates how strongly a page's content is recommended by others. For this reason, search engine crawlers often use this measure to judge the importance of pages and so decide the order in which to fetch them.
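As a rough illustration, backlink counts can be tallied from the links seen so far and used to order the queue. The link data (`OUTLINKS`), the queue (`FRONTIER`), and the function name are hypothetical.

```python
from collections import Counter

# Hypothetical links found on already-downloaded pages: source -> targets.
OUTLINKS = {
    "home": ["news", "about", "blog"],
    "news": ["blog", "about"],
    "about": ["blog"],
}
FRONTIER = ["about", "blog", "contact"]   # URLs waiting to be crawled


def backlink_counts(outlinks):
    """Count how many distinct pages link to each target."""
    counts = Counter()
    for source, targets in outlinks.items():
        for target in set(targets):   # count each source page only once
            if target != source:      # ignore links from a page to itself
                counts[target] += 1
    return counts


counts = backlink_counts(OUTLINKS)
# Most-linked pages first; sorted() is stable, so ties keep their queue order.
ordered = sorted(FRONTIER, key=lambda url: -counts[url])
print(ordered)   # ['blog', 'about', 'contact']
```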

Partial PageRank strategy

- This strategy borrows the idea of the PageRank algorithm: the pages already downloaded, together with the URLs in the to-crawl queue, form a set of pages. A PageRank value is computed for every page in the set, the URLs in the to-crawl queue are then sorted by PageRank value, and pages are fetched in that order.
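A minimal sketch of this idea follows. It uses a plain power-iteration PageRank; the damping factor 0.85, the iteration count, the handling of pages without known out-links, and all sample data are assumptions made for illustration, not details taken from the article.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Power-iteration PageRank over a dict {page: [pages it links to]}."""
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Pages with no known out-links spread their rank evenly over all pages.
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new_rank = {p: (1 - damping) / n + damping * dangling / n for p in pages}
        for page, targets in links.items():
            for target in targets:
                new_rank[target] += damping * rank[page] / len(targets)
        rank = new_rank
    return rank


# Hypothetical out-links of the downloaded pages A, B and D.
downloaded = {"A": ["C", "E"], "B": ["C", "E"], "D": ["C", "F"]}
frontier = ["F", "E", "C"]   # URLs waiting to be crawled

rank = pagerank(downloaded)
ordered = sorted(frontier, key=lambda url: -rank[url])
print(ordered)   # ['C', 'E', 'F']
```

Frontier pages have not been downloaded yet, so their out-links are unknown; treating them as pages without out-links is one simple way to keep the ranks summing to one.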

OPIC strategy

- This algorithm also scores the importance of pages. Before it starts, every page is given the same initial cash. When a page P is downloaded, P's cash is divided among all the links extracted from P, and P's own cash is reset to zero. The pages in the to-crawl URL queue are sorted by the amount of cash they hold.
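The cash bookkeeping can be sketched in a few lines. The equal split among the extracted links, the function name `opic_step`, and the sample pages are assumptions for illustration.

```python
def opic_step(cash, page, outlinks):
    """Download `page`: split its cash among its out-links, then empty it."""
    if outlinks:
        share = cash[page] / len(outlinks)
        for target in outlinks:
            cash[target] = cash.get(target, 0.0) + share
    cash[page] = 0.0


# Hypothetical pages, each starting with the same cash.
cash = {"P": 1.0, "Q": 1.0, "R": 1.0, "S": 1.0}

opic_step(cash, "P", ["Q", "R"])   # P is downloaded and links to Q and R
opic_step(cash, "Q", ["R"])        # Q is downloaded and links to R

frontier = ["S", "R"]              # pages still waiting to be crawled
ordered = sorted(frontier, key=lambda url: -cash[url])
print(cash)      # {'P': 0.0, 'Q': 0.0, 'R': 3.0, 'S': 1.0}
print(ordered)   # ['R', 'S']
```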

Site priority strategy

- All pages in the to-crawl URL queue are grouped by the website they belong to, and websites with many pages waiting to be downloaded are fetched first. This strategy is therefore also called the large-site-first strategy.
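One possible way to express this ordering is to count pending URLs per host and sort the queue by that count. The sample URLs and the function name `large_site_first` are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical queue of URLs waiting to be crawled.
frontier = [
    "http://small.example/a",
    "http://big.example/1",
    "http://big.example/2",
    "http://mid.example/x",
    "http://big.example/3",
    "http://mid.example/y",
]


def large_site_first(urls):
    """Order URLs so that sites with the most pending pages come first."""
    pending_per_site = Counter(urlparse(url).netloc for url in urls)
    # sorted() is stable, so URLs of the same site keep their original order.
    return sorted(urls, key=lambda url: -pending_per_site[urlparse(url).netloc])


ordered = large_site_first(frontier)
print(ordered[0], ordered[-1])
```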

This example uses the depth-first traversal strategy.

Web parser (BeautifulSoup)

A question to consider: how should web pages or data be analyzed and filtered?

- This example extracts data from nodes selected by tag name and class

```python
# `soup` is the BeautifulSoup object for the downloaded page; `res_data` is a dict.

# Title node: <dd class="lemmaWgt-lemmaTitle-title"><h1>Python</h1>
title_node = soup.find('dd', class_="lemmaWgt-lemmaTitle-title").find("h1")
res_data['title'] = title_node.get_text()

# Summary node: <div class="lemma-summary" label-module="lemmaSummary">
summary_node = soup.find("div", class_="lemma-summary")
res_data['summary'] = summary_node.get_text()
```
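Besides the title and summary, a parser in this kind of framework also has to hand newly found links back to the scheduler. The sketch below shows one way to collect them. It deliberately uses the standard-library `html.parser` instead of BeautifulSoup so that it runs without extra packages; the class name, function name, and sample HTML are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the absolute URL of every <a href="..."> on a page."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links against the page they came from.
                self.links.append(urljoin(self.page_url, value))


def extract_links(page_url, html):
    """Return all link targets found in `html`, as absolute URLs."""
    extractor = LinkExtractor(page_url)
    extractor.feed(html)
    return extractor.links


sample = '<p><a href="/item/Python">Python</a> <a href="http://example.org/x">x</a></p>'
links = extract_links("http://example.com/item/start", sample)
print(links)
```

With BeautifulSoup the same links could be gathered with `soup.find_all('a')` and the `href` of each node.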

Data storage

- This is mainly a container for the data records downloaded from web pages, and it provides the source for building an index. Medium and large database products include Oracle, SQL Server, and so on.

- In this example we simply write the records to an HTML file

```python
def output_html(self):
    # Python 2 code: unicode fields are encoded to UTF-8 bytes before writing.
    fout = open('output.html', 'w')
    fout.write("<html><meta charset=\"utf-8\" />")
    fout.write("<body>")
    fout.write("<table>")
    # One table row per collected record: URL, title, summary.
    for data in self.datas:
        fout.write("<tr>")
        fout.write("<td>%s</td>" % data['url'])
        fout.write("<td>%s</td>" % data['title'].encode('utf-8'))
        fout.write("<td>%s</td>" % data['summary'].encode('utf-8'))
        fout.write("</tr>")
    fout.write("</table>")
    fout.write("</body>")
    fout.write("</html>")
    fout.close()
```

Trial run summary

- This is only a simple crawler. To use it in practice, you also need to deal with login, CAPTCHAs, Ajax, server-side restrictions, and similar problems. Those will be covered next time.

The following is a picture of the trial results

边栏推荐

猜你喜欢

Commonly used bypass methods for SQL injection -ctf

Check the file through the port

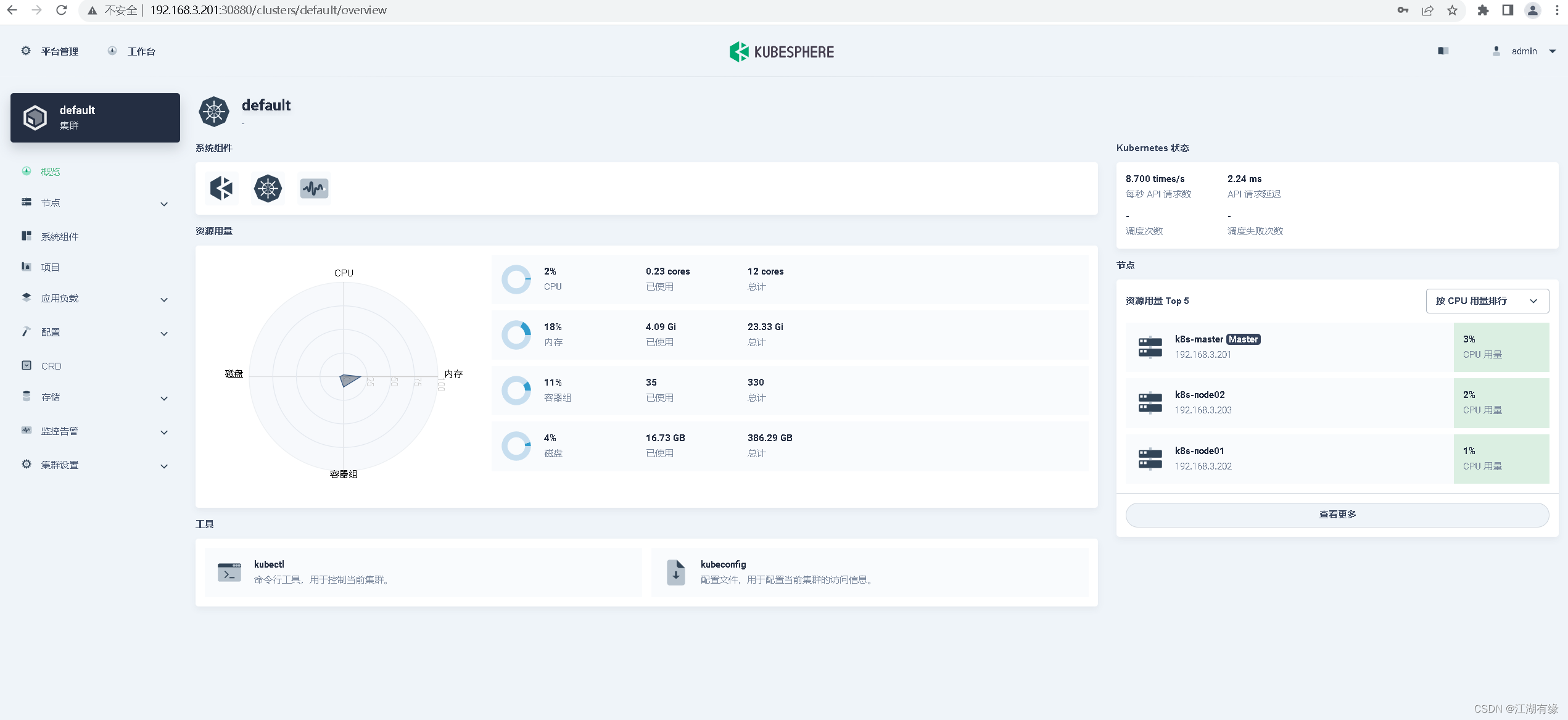

Deploy kubersphere in kubernetes

Acwing第 56 场周赛【完结】

Interview questions of a company in a certain month of a certain year (1)

AVL树的实现

记一次高校学生账户的“从无到有”

【Try to Hack】ip地址

看了5本书,我总结出财富自由的这些理论

Test APK exception control nettraffic attacker development

随机推荐

vtk.js鼠标左键滑动改变窗位和窗宽

YGG Spain subdao Ola GG officially established

Hackers use new PowerShell backdoors in log4j attacks

Commonly used bypass methods for SQL injection -ctf

Imperva- method of finding regular match timeout

C# richTextBox控制最大行数

MFC radio button grouping

生产环境服务器环境搭建+项目发布流程

INT 104_ LEC 06

PHP file contains -ctf

The sandbox has reached a cooperation with football player to bring popular football cartoons and animation into the metauniverse

Socket programming (multithreading)

11 string function

【Try to Hack】ip地址

黄蓉真的存在吗?

QT reading XML files using qdomdocument

Start appium

[kubernetes] download address of the latest version of each major version of kubernetes

Sequence table Curriculum

QT irregular shape antialiasing