
GPU Computing

2022-06-25 08:24:00 【Happy little yard farmer】


1. The difference between GPU and CPU

The design goals differ: the CPU is built for low latency, the GPU for high throughput.

- CPU: handles many different data types, and its logic-heavy workloads introduce a large number of branch jumps and interrupts.

- GPU: processes large volumes of data that are highly uniform in type and independent of one another, in a clean computing environment that does not need to be interrupted.

What kinds of programs are suited to running on a GPU?

- Compute-intensive programs

- Programs that are easy to parallelize

2. Interpreting the main GPU parameters

- Memory size: the larger the model or the training batch, the more video memory is needed.

- FLOPS: floating-point operations per second (also called peak speed), i.e. the number of floating-point operations executed each second. It is used to estimate computer performance, especially in scientific computing, which relies heavily on floating-point arithmetic.

- Video memory bandwidth: described here as the number of bits that can be transferred in one clock cycle. Strictly speaking that quantity is the memory bus width, and bandwidth is the bus width multiplied by the effective memory data rate. The wider the bus, the more data can be transferred at once.
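As a rough illustration of the three parameters above, the following sketch does some back-of-the-envelope arithmetic. The function names and the card figures (2,000 cores, 1.5 GHz, 256-bit bus, 14 Gbit/s per pin) are hypothetical and chosen only to give round numbers. The default of 2 floating-point operations per core per cycle assumes one fused multiply-add per cycle.

```python
def param_memory_bytes(num_params, bytes_per_param=4):
    """Memory needed just to store the weights (4 bytes each for float32)."""
    return num_params * bytes_per_param


def peak_flops(num_cores, clock_hz, flops_per_core_per_cycle=2):
    """Theoretical peak: cores x clock x operations per core per cycle."""
    return num_cores * clock_hz * flops_per_core_per_cycle


def memory_bandwidth_bytes_per_s(bus_width_bits, data_rate_bits_per_s_per_pin):
    """Bandwidth = bus width (in bytes) x effective data rate per pin."""
    return bus_width_bits * data_rate_bits_per_s_per_pin // 8


# Hypothetical model and card, for illustration only.
weights = param_memory_bytes(100_000_000)                  # 100M float32 parameters
flops = peak_flops(2_000, 1_500_000_000)                   # 2,000 cores at 1.5 GHz
bandwidth = memory_bandwidth_bytes_per_s(256, 14_000_000_000)

print(f"weights:   {weights / 1e6:.0f} MB")
print(f"peak:      {flops / 1e12:.1f} TFLOPS")
print(f"bandwidth: {bandwidth / 1e9:.0f} GB/s")
```

The weight figure is only a lower bound on training memory: gradients, optimizer state, and activations come on top of it, and activations grow with the batch size, which is why larger batches need more video memory.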

3. How to use the GPU in PyTorch

- Move the model to CUDA

- Move the data to CUDA

- Move the output off CUDA (back to the CPU), then convert it to NumPy
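The three steps above can be sketched as follows. This is a minimal example, not the author's own code: the tiny `nn.Linear` model and the random input are placeholders, and the fallback to the CPU is an added assumption so that the snippet also runs on a machine without a GPU.

```python
import torch
from torch import nn

# Pick the first GPU if one is available, otherwise fall back to the CPU.
dev = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# 1. Move the model to the device (placeholder model for illustration).
model = nn.Linear(4, 2).to(dev)

# 2. Move the data to the same device.
x = torch.randn(8, 4).to(dev)

# Run inference without tracking gradients.
with torch.no_grad():
    y = model(x)

# 3. Bring the output back to the CPU, then convert it to a NumPy array.
y_np = y.cpu().numpy()
print(type(y_np), y_np.shape)
```

The model and its inputs have to live on the same device, and `.numpy()` only works on CPU tensors, which is why the `.cpu()` call comes first.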

If several GPUs are available, you can set dev="cuda:0" or dev="cuda:1". Note that if you use multiple cards for both training and prediction, some calculation results may be lost. When GPUs are available, you can try combinations such as "train on a single card, predict on multiple cards" or "train on multiple cards, predict on a single card".
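One possible way to do "train on multiple cards, predict on a single card" is sketched below. It assumes `torch.nn.DataParallel` as the multi-card mechanism, which the article does not specify, and `build_model` is a hypothetical helper standing in for the real network. On a machine with fewer than two GPUs the wrapping step is skipped, so the snippet still runs.

```python
import torch
from torch import nn


def build_model():
    # Hypothetical placeholder for the real network.
    return nn.Linear(4, 2)


# Training side: wrap the model only when more than one GPU is present.
train_model = build_model()
if torch.cuda.device_count() > 1:
    train_model = nn.DataParallel(train_model).to("cuda:0")

# ... the training loop would go here ...

# Unwrap before saving so the weight names carry no "module." prefix.
core = train_model.module if isinstance(train_model, nn.DataParallel) else train_model
state = core.state_dict()

# Prediction side: a plain model on a single device.
dev = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
predict_model = build_model()
predict_model.load_state_dict(state)
predict_model.to(dev).eval()
print(sorted(state.keys()))
```

Saving the unwrapped weights keeps the checkpoint loadable on a single card. Saving the wrapped model's `state_dict()` instead would prefix every key with `module.`, and a plain model would then refuse to load it.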

4. Choosing among mainstream GPUs on the market

References: https://www.bybusa.com/gpu-rank

https://zhuanlan.zhihu.com/p/61411536

http://timdettmers.com/2020/09/07/which-gpu-for-deep-learning/

Use a desktop tower build or a (cloud) server; do not use a laptop.

Free options for getting started: Colab, Kaggle (RTX 2070)

The priority of GPU parameters differs between deep learning architectures. Generally speaking, there are two routes:

Convolutional networks and Transformers: Tensor Cores > FLOPS (floating-point operations per second) > video memory bandwidth > 16-bit floating-point capability

Recurrent neural networks: video memory bandwidth > 16-bit floating-point capability > Tensor Cores > FLOPS

You are welcome to follow my official account 【SOTA Technology interconnection】, where I will share more practical content.

边栏推荐

- Socket problem record

- 自制坡道,可是真的很香

- TCP 加速小记

- Drawing of clock dial

- 4 reasons for adopting "safe left shift"

- LeetCode_ Hash table_ Medium_ 454. adding four numbers II

- What are the indicators of entropy weight TOPSIS method?

- Ffmpeg+sdl2 for audio playback

- Rosparam statement

- In 2022, which industry will graduates prefer when looking for jobs?

猜你喜欢

C disk drives, folders and file operations

TCP acceleration notes

每日刷题记录 (三)

使用apt-get命令如何安装软件?

Daily question brushing record (III)

Modeling and fault simulation of aircraft bleed system

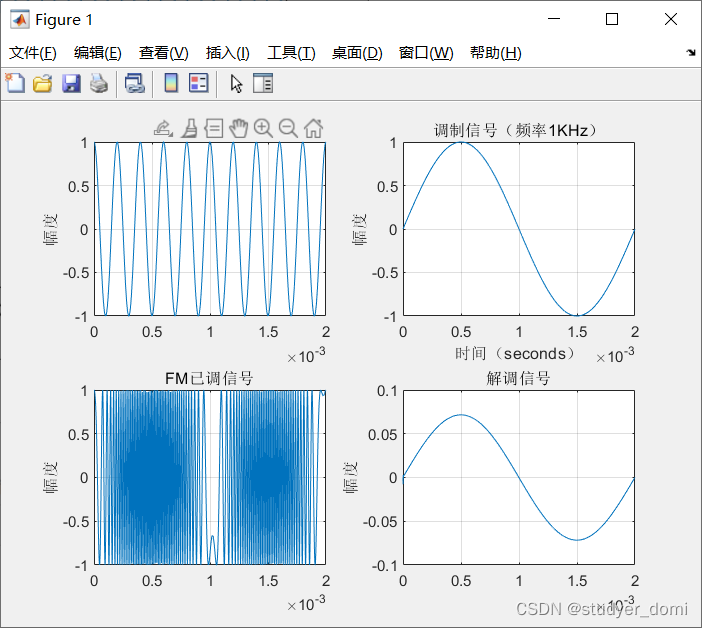

FM signal, modulated signal and carrier

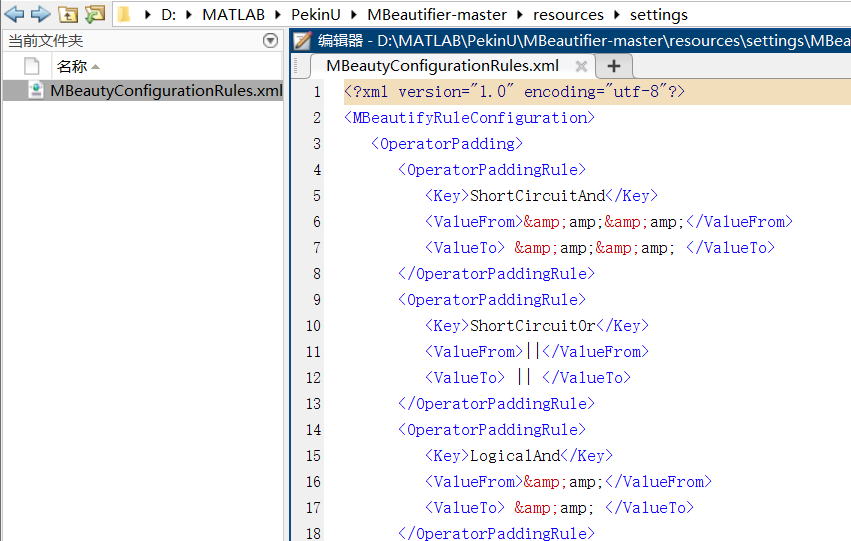

Matlab code format one click beautification artifact

Electronics: Lesson 009 - Experiment 7: study relays

Apache CouchDB Code Execution Vulnerability (cve-2022-24706) batch POC

随机推荐

Stm32cubemx Learning (5) Input capture Experiment

FFT [template]

Luogu p1073 [noip2009 improvement group] optimal trade (layered diagram + shortest path)

Electronics: Lesson 011 - experiment 10: transistor switches

How to analyze the grey prediction model?

Home server portal easy gate

Niuke: flight route (layered map + shortest path)

Sword finger offer (simple level)

第五天 脚本与UI系统

[thesis study] vqmivc

Luogu p6822 [pa2012]tax (shortest circuit + edge change point)

How to calculate the independence weight index?

Go语言学习教程(十三)

How to calculate the D value and W value of statistics in normality test?

Apache CouchDB Code Execution Vulnerability (cve-2022-24706) batch POC

Rank sum ratio (RSR) index calculation

Number theory template

Ffmpeg+sdl2 for audio playback

TCP and UDP

C disk drives, folders and file operations