A minimalist tutorial for scikit-learn, the machine learning power tool

2022-06-23 06:36:00 【PIDA】

Author: Peter. Editor: Peter.

Hello everyone, I'm Peter~

Scikit-learn is a very well-known Python machine learning library, widely used in data science for statistical analysis and machine learning modeling.

- Powerful modeling: with scikit-learn, users can build a wide range of supervised and unsupervised learning models

- Many functions: sklearn also handles data preprocessing, feature engineering, dataset splitting, model evaluation, and more

- Rich data: it ships with built-in datasets such as Iris, and can fetch others such as Titanic from OpenML, so there is no need to worry about finding data

This article introduces the use of scikit-learn in a concise way, covering the parts listed below. Please refer to the official website for more details:

- Using the built-in datasets

- Splitting the dataset

- Data normalization and standardization

- Label encoding

- Modeling

The scikit-learn cheat-sheet map

The map below is provided on the official website. Starting from the sample size, it summarizes how to use scikit-learn across 4 areas: regression, classification, clustering, and dimensionality reduction:

https://scikit-learn.org/stable/tutorial/machine_learning_map/index.html

Installation

To install scikit-learn, Anaconda is recommended, because it saves you from worrying about configuration and environment issues. Of course, you can also install it directly with pip:

pip install scikit-learn
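After installing, a quick check like the following can confirm that the package is importable. This is only a minimal sketch; the variable name `installed_version` is chosen here for illustration.

```python
# Confirm that scikit-learn can be imported and report which version is installed.
import sklearn

installed_version = sklearn.__version__
print("scikit-learn version:", installed_version)
```

Knowing the version matters later in this article, since some dataset loaders have been removed in newer releases.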

Loading datasets

sklearn ships with some excellent built-in datasets, such as the Iris data and house price data. Others, such as the Titanic data, are not bundled but can be fetched from OpenML.

import pandas as pd
import numpy as np
import sklearn
from sklearn import datasets  # Import the datasets module

Classification data: iris

# iris data
iris = datasets.load_iris()
type(iris)

sklearn.utils.Bunch

What does the iris data look like? Every built-in dataset carries a lot of information.

The data above can be turned into the DataFrame we want to see, and the dependent variable can be added as a column:
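The original screenshots are not available here, so the following is a minimal sketch of one way to build such a DataFrame. The names `iris_df`, `target`, and `species` are chosen for illustration and are not from the article.

```python
import pandas as pd
from sklearn.datasets import load_iris

iris = load_iris()

# Feature matrix as a DataFrame, with one column per feature name.
iris_df = pd.DataFrame(iris.data, columns=iris.feature_names)

# Add the dependent variable: the integer label and its readable class name.
iris_df["target"] = iris.target
iris_df["species"] = iris.target_names[iris.target]

print(iris_df.shape)
print(iris_df.head())
```

Indexing `target_names` with the integer labels maps each row to its class name in one step.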

Regression data: Boston house prices

The attributes we focus on :

- data

- target、target_names

- feature_names

- filename

This data can also be turned into a DataFrame:
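`load_boston` was deprecated in scikit-learn 1.0 and removed in 1.2, so the sketch below swaps in the bundled `load_diabetes` regression dataset as a stand-in. It is not the dataset the article used, but the same pattern applies to any `Bunch` that exposes `data`, `target`, and `feature_names`. The name `diabetes_df` is illustrative.

```python
import pandas as pd
from sklearn.datasets import load_diabetes

# Stand-in regression dataset (assumption: used here because load_boston
# is no longer available in recent scikit-learn releases).
diabetes = load_diabetes()

# Features as columns, then the continuous target appended as the last column.
diabetes_df = pd.DataFrame(diabetes.data, columns=diabetes.feature_names)
diabetes_df["target"] = diabetes.target

print(diabetes_df.shape)
print(diabetes_df.head())
```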

Three ways to load the data

Method 1

# Import the loader
from sklearn.datasets import load_iris
data = load_iris()
# Get the samples and labels
data_X = data.data
data_y = data.target

Method 2

from sklearn import datasets
loaded_data = datasets.load_iris()  # The loaded dataset and its attributes
# Get the sample data
data_X = loaded_data.data
# Get the labels
data_y = loaded_data.target

Method 3

# Return X and y directly
from sklearn.datasets import load_iris
data_X, data_y = load_iris(return_X_y=True)

Dataset usage summary

# Note: load_boston was removed in scikit-learn 1.2, so this snippet needs an older version
from sklearn import datasets  # Import the library
boston = datasets.load_boston()  # Load the Boston house price data
print(boston.keys())  # View the keys (attributes): ['data', 'target', 'feature_names', 'DESCR', 'filename']
print(boston.data.shape, boston.target.shape)  # Look at the shape of the data
print(boston.feature_names)  # See which features there are
print(boston.DESCR)  # Dataset description
print(boston.filename)  # File path

Splitting the data

# Import the module
from sklearn.model_selection import train_test_split
# Split into a training set and a test set
X_train, X_test, y_train, y_test = train_test_split(
    data_X, data_y, test_size=0.2, random_state=111
)
# 150 * 0.8 = 120
len(X_train)
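As a variation on the split above (not the split used in the rest of this article), the sketch below adds `stratify=data_y`, a real `train_test_split` parameter that keeps the class proportions equal in both subsets. Because this changes which rows land in the test set, scores obtained with it will not necessarily match the ones reported later.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

data_X, data_y = load_iris(return_X_y=True)

# Stratified 80/20 split: each class keeps the same share in train and test.
X_train, X_test, y_train, y_test = train_test_split(
    data_X, data_y, test_size=0.2, random_state=111, stratify=data_y
)

print(X_train.shape, X_test.shape)

# Samples per class in each subset.
train_counts = np.bincount(y_train)
test_counts = np.bincount(y_test)
print(train_counts, test_counts)
```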

Data standardization and normalization

from sklearn.preprocessing import StandardScaler  # Standardization
from sklearn.preprocessing import MinMaxScaler  # Normalization
# Standardization: zero mean and unit variance per feature
ss = StandardScaler()
X_scaled = ss.fit_transform(X_train)  # Pass in the data to be standardized
# Normalization: rescale each feature to the range [0, 1]
mm = MinMaxScaler()
X_normalized = mm.fit_transform(X_train)
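The snippet above only transforms the training data. A common follow-up, sketched here under the assumption that the test set should be scaled with statistics learned from the training set only, is to call `fit_transform` on the training data and plain `transform` on the test data. The variable names with `_std` and `_mm` suffixes are illustrative.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import MinMaxScaler, StandardScaler

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=111
)

# Standardization: learn mean and std on the training set, reuse them on the test set.
ss = StandardScaler()
X_train_std = ss.fit_transform(X_train)
X_test_std = ss.transform(X_test)

# Normalization: learn min and max on the training set, reuse them on the test set.
mm = MinMaxScaler()
X_train_mm = mm.fit_transform(X_train)
X_test_mm = mm.transform(X_test)

# Training columns now have mean ~0 and std ~1, or span exactly [0, 1].
print(np.round(X_train_std.mean(axis=0), 6), np.round(X_train_std.std(axis=0), 6))
print(X_train_mm.min(axis=0), X_train_mm.max(axis=0))
```

The transformed test set is not guaranteed to have zero mean or to stay inside [0, 1], since its statistics differ slightly from those of the training set.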

Label encoding

The examples come from the official website: https://scikit-learn.org/stable/modules/generated/sklearn.preprocessing.LabelEncoder.html

Encoding numbers
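The original screenshot is missing, so here is a short sketch along the lines of the `LabelEncoder` example in the official documentation. The variable names `classes`, `encoded`, and `decoded` are illustrative.

```python
from sklearn.preprocessing import LabelEncoder

le = LabelEncoder()

# Learn the distinct labels; they are stored sorted in classes_.
le.fit([1, 2, 2, 6])
classes = le.classes_

# Map each label to its index in classes_, and back again.
encoded = le.transform([1, 1, 2, 6])
decoded = le.inverse_transform([0, 0, 1, 2])

print(classes)   # [1 2 6]
print(encoded)   # [0 0 1 2]
print(decoded)   # [1 1 2 6]
```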

Encoding strings
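The same encoder works on string labels. The sketch below follows the city-name example in the official documentation; the variable names are again illustrative.

```python
from sklearn.preprocessing import LabelEncoder

le = LabelEncoder()

# String labels are sorted alphabetically before being numbered.
le.fit(["paris", "paris", "tokyo", "amsterdam"])
classes = list(le.classes_)

encoded = le.transform(["tokyo", "tokyo", "paris"])
decoded = list(le.inverse_transform([2, 2, 1]))

print(classes)   # ['amsterdam', 'paris', 'tokyo']
print(encoded)   # [2 2 1]
print(decoded)   # ['tokyo', 'tokyo', 'paris']
```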

Modeling example

Importing modules

from sklearn.neighbors import KNeighborsClassifier, NeighborhoodComponentsAnalysis  # Models
from sklearn.datasets import load_iris  # Load the data
from sklearn.model_selection import train_test_split  # Split the data
from sklearn.model_selection import GridSearchCV  # Grid search
from sklearn.pipeline import Pipeline  # Pipeline operations
from sklearn.metrics import accuracy_score  # Scoring

Model instantiation

# Instantiate the model
knn = KNeighborsClassifier(n_neighbors=5)

Training the model

knn.fit(X_train, y_train)

KNeighborsClassifier()

Predicting on the test set

y_pred = knn.predict(X_test)
y_pred  # Predictions from the model

array([0, 0, 2, 2, 1, 0, 0, 2, 2, 1, 2, 0, 1, 2, 2, 0, 2, 1, 0, 2, 1, 2,
       1, 1, 2, 0, 0, 2, 0, 2])

Score verification

There are two ways to score the model:

knn.score(X_test,y_test)

0.9333333333333333

accuracy_score(y_test, y_pred)  # Conventional order: true labels first, then predictions

0.9333333333333333
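Both calls report plain accuracy, which is why they agree. The self-contained sketch below repeats the steps above and adds `confusion_matrix`, which is not used in the article, to show where any misclassifications fall. The names `score_from_method`, `score_from_function`, and `cm` are illustrative.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score, confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=111
)

knn = KNeighborsClassifier(n_neighbors=5)
knn.fit(X_train, y_train)
y_pred = knn.predict(X_test)

# Method 1: the estimator's own score(), which is mean accuracy for classifiers.
score_from_method = knn.score(X_test, y_test)
# Method 2: the metric function, called as (y_true, y_pred).
score_from_function = accuracy_score(y_test, y_pred)

# Rows are true classes, columns are predicted classes.
cm = confusion_matrix(y_test, y_pred)

print(score_from_method, score_from_function)
print(cm)
```

The diagonal of the confusion matrix counts correct predictions, so its sum divided by the number of test samples equals the accuracy.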

Grid search

How to search for parameters

from sklearn.model_selection import GridSearchCV
# Parameters to search
knn_paras = {"n_neighbors": [1, 3, 5, 7]}
# Default model
knn_grid = KNeighborsClassifier()
# Instantiate the grid search object
grid_search = GridSearchCV(
    knn_grid,
    knn_paras,
    cv=10  # 10-fold cross-validation
)

grid_search.fit(X_train, y_train)

GridSearchCV(cv=10, estimator=KNeighborsClassifier(),
             param_grid={'n_neighbors': [1, 3, 5, 7]})

# The best estimator found by the search
grid_search.best_estimator_

KNeighborsClassifier(n_neighbors=7)

grid_search.best_params_

{'n_neighbors': 7}

grid_search.best_score_

0.975

Modeling based on the search results

knn1 = KNeighborsClassifier(n_neighbors=7)
knn1.fit(X_train, y_train)

KNeighborsClassifier(n_neighbors=7)

As the results below show, the model tuned by grid search scores higher on the test set than the untuned one: 1.0 versus 0.933, which is a difference of 2 samples out of the 30 in the test set.

y_pred_1 = knn1.predict(X_test)
knn1.score(X_test, y_test)

1.0

accuracy_score(y_test, y_pred_1)  # Conventional order: true labels first, then predictions

1.0
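`Pipeline` is imported earlier but never used in the walkthrough. As a hedged sketch of how it could fit in, the example below chains a `StandardScaler` with the KNN classifier and tunes `n_neighbors` through the pipeline. The step names `scaler` and `knn` are chosen here for illustration, and adding scaling goes beyond what the article's model does, so the results may differ from those above.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=111
)

# Scaling and the classifier are fitted together, so each CV fold
# learns its scaling statistics from that fold's training part only.
pipe = Pipeline([
    ("scaler", StandardScaler()),
    ("knn", KNeighborsClassifier()),
])

# Parameters of a pipeline step are addressed as <step name>__<parameter>.
param_grid = {"knn__n_neighbors": [1, 3, 5, 7]}

search = GridSearchCV(pipe, param_grid, cv=10)
search.fit(X_train, y_train)

best_k = search.best_params_["knn__n_neighbors"]
test_accuracy = search.score(X_test, y_test)
print(best_k, search.best_score_, test_accuracy)
```

By default `GridSearchCV` refits the best pipeline on the whole training set, so `search` can be used directly for prediction and scoring.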

边栏推荐

- Steam教育对国内大学生的影响力

- 【Leetcode】431. Encode n-ary tree to binary tree (difficult)

- The central network and Information Technology Commission issued the National Informatization Plan for the 14th five year plan, and the network security market entered a period of rapid growth

- Day_04 傳智健康項目-預約管理-套餐管理

- Redis sentry

- 学习太极创客 — ESP8226 (十一)用 WiFiManager 库配网

- Softing dataFEED OPC Suite将西门子PLC数据存储到Oracle数据库中

- For non dpdk kvm1.0 machines, set init Maxrxbuffers changed from 256 to 1024 to improve packet receiving capacity

- mysql读已提交和可重复度区别

- How to batch produce QR codes that can be read online after scanning

猜你喜欢

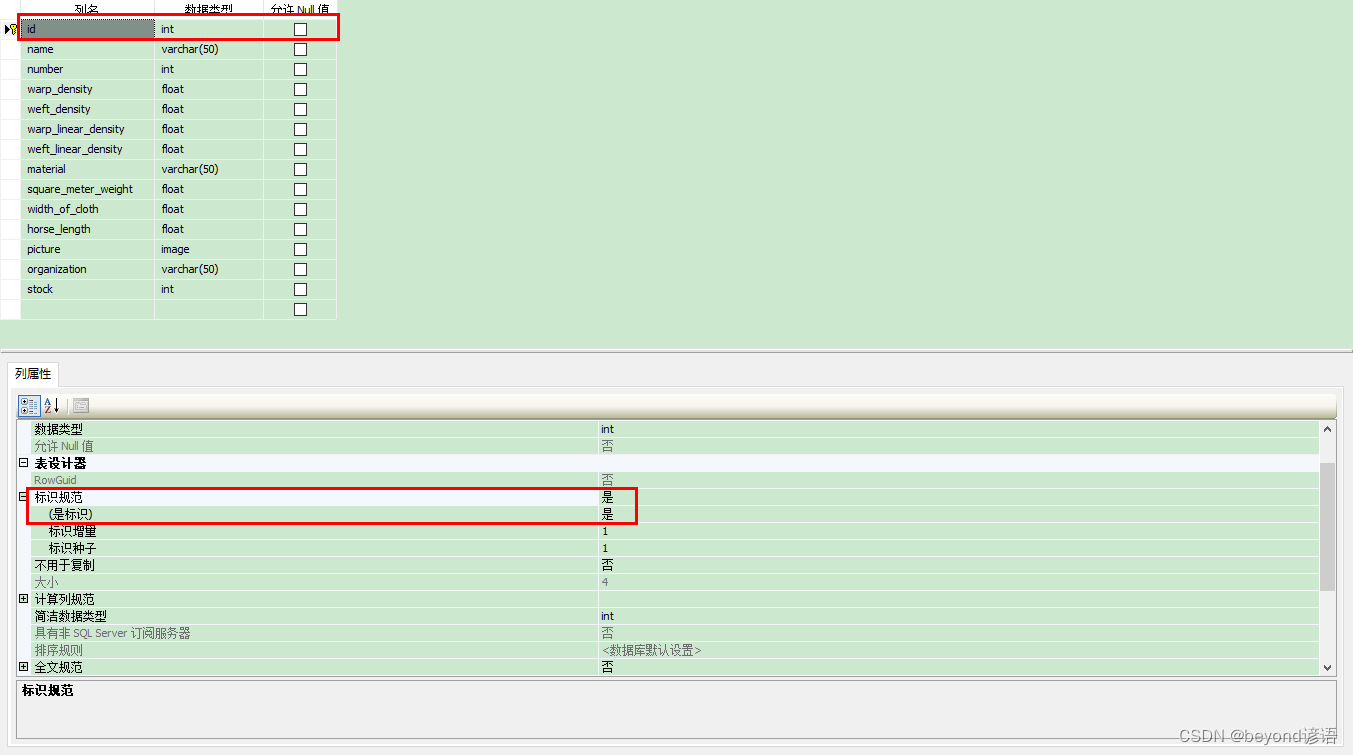

c#数据库报错问题大家帮我看看吧

Day_ 01 smart communication health project - project overview and environmental construction

Day_ 12 smart health project jasperreports

射频内容学习

解析创客教育中的个性化学习进度

Steam教育对国内大学生的影响力

程序员的真实想法 | 每日趣闻

Gplearn appears assignment destination is read only

Day_04 传智健康项目-预约管理-套餐管理

11、 Realization of textile fabric off shelf function

随机推荐

JVM原理简介

Day_ 07 smart communication health project FreeMarker

C # database reports errors. Let's have a look

C语言 获取秒、毫秒、微妙、纳秒时间戳

Day_ 03 smart communication health project - appointment management - inspection team management

C# wpf 附加属性实现界面上定义装饰器

如何实现与FDA保持邮件通信安全加密?

论文笔记: 多标签学习 LSML

去除防火墙和虚拟机对live555启动IP地址的影响

WordPress aawp 3.16 cross site scripting

Redis sentry

记一次GLIB2.14升级GLIB2.18的记录以及其中的步骤原理

qt creater搭建osgearth环境(osgQT MSVC2017)

QT creator builds osgearth environment (osgqt msvc2017)

【踩坑记录】数据库连接未关闭连接,释放资源的坑

2020 smart power plant industry insight white paper

什么是客户体验自动化?

如何查看本机IP

[focus on growth and build a dream for the future] - TDP year-end event, three chapters go to the Spring Festival!

LeetCode笔记:Weekly Contest 298