Basic concepts of NLP 1

2022-07-25 12:15:00 【bolite】

Note: these notes cover what is taught in parts p1-p4 of Li Hongyi's "2021/2022 Spring Machine Learning Course".

Reinforcement learning, supervised learning, unsupervised learning

Supervised learning

Supervised learning first trains on a correctly labeled training set. The "experience" gained from training is called a model. When unseen data is then passed into the model, the machine uses that "experience" to infer the correct result.

Unsupervised learning

Unsupervised learning is essentially a statistical technique (it can also be understood as a way of grouping data). Training has no predefined target and the result cannot be known in advance, so no labels are needed. In these notes' view, its principle resembles regression in supervised learning, except that the results carry no labels.

Reinforcement learning

Reinforcement learning lets the computer learn from reward feedback on its own actions rather than from labeled answers, with the goal of producing correct solutions to problems it was never explicitly taught. These notes loosely think of it as reinforcement learning = supervised learning + unsupervised learning. Like supervised learning, it also needs human involvement, for example in defining the reward.

Two major tasks of machine learning

1. Regression: find a function that takes the input features x and outputs a single numeric value (a scalar).

2. Classification: have the machine choose one of the options (classes) defined in advance by humans as its output.

Finding the function

1. First assume a form for the function, for example y = b + w·x1 (x is the input from the training data, y is the output corresponding to x, and b and w are unknown parameters). The formula is a guess and not necessarily right; it can be revised after training.

2. Define the loss function L(b, w). Its inputs are the b and w from step 1, and its output indicates how good or bad the predictions are when b and w are set to those values.

How to compute the loss: feed each x into the model with specific b and w to get a predicted y, take the absolute value of the difference between the predicted y and the actual y as the error e, then sum all the e values and take the average.
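The loss computation described above can be sketched in a few lines of Python. This is only an illustrative sketch: the function names `predict` and `mae_loss` and the toy data are assumptions made for this example, not part of the course material.

```python
def predict(x, b, w):
    """Linear model from step 1: y = b + w * x."""
    return b + w * x


def mae_loss(xs, ys, b, w):
    """Average of the absolute errors e between predicted and actual y."""
    errors = [abs(predict(x, b, w) - y) for x, y in zip(xs, ys)]
    return sum(errors) / len(errors)


# Toy data made up for illustration; it follows y = 1 + 2x exactly.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

loss_good = mae_loss(xs, ys, b=1.0, w=2.0)  # parameters that fit the data
loss_bad = mae_loss(xs, ys, b=0.0, w=1.0)   # parameters that fit poorly
print(loss_good, loss_bad)
```

A smaller loss means the chosen b and w describe the training data better, which is what step 3 tries to achieve.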

3. Optimization

One optimization method: gradient descent

My understanding is that we compute the slope (derivative) of the loss function with respect to w. When the slope is less than 0, w moves forward (increases); when the slope is greater than 0, w moves back (decreases). We keep updating until the derivative is 0 or the preset number of updates is reached.

How far w moves forward or back depends on the derivative and on the learning rate (the symbol shown in red on the course slides, commonly written η). The learning rate is a hyperparameter, meaning a value you set yourself in the experiment.
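The update rule can be sketched as follows. Note the assumptions: this sketch swaps in the mean squared error instead of the absolute error so that the derivative is smooth, it updates b together with w, and the names `gradients` and `gradient_descent`, the learning rate, and the toy data are all chosen just for this illustration.

```python
def gradients(xs, ys, b, w):
    """Partial derivatives of the mean squared error with respect to b and w."""
    n = len(xs)
    grad_b = sum(2 * (b + w * x - y) for x, y in zip(xs, ys)) / n
    grad_w = sum(2 * (b + w * x - y) * x for x, y in zip(xs, ys)) / n
    return grad_b, grad_w


def gradient_descent(xs, ys, learning_rate=0.05, max_updates=5000, tol=1e-9):
    b, w = 0.0, 0.0  # arbitrary starting point
    for _ in range(max_updates):
        grad_b, grad_w = gradients(xs, ys, b, w)
        # Stop early once the derivatives are (numerically) zero.
        if abs(grad_b) < tol and abs(grad_w) < tol:
            break
        # Negative slope -> parameter increases; positive slope -> it decreases.
        b -= learning_rate * grad_b
        w -= learning_rate * grad_w
    return b, w


# Toy data made up for illustration; it follows y = 1 + 2x exactly.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
b, w = gradient_descent(xs, ys)
print(round(b, 3), round(w, 3))
```

The step size is the product of the learning rate and the derivative, so a steep slope or a large learning rate both produce a larger move.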

More complex models

Most relationships do not follow this simple one-variable linear form, so the three steps for finding the function need some changes.

We can split a complex function into a constant plus several simple functions. The red curve in the figure is the target function; it can be built from the constant (labeled 0) and three blue functions with different labels. (The black b is the constant; the green b_i are parameters of the sigmoid functions.)

For a smooth curve like this one, we can take points on the curve and then apply the same method to approximate it.

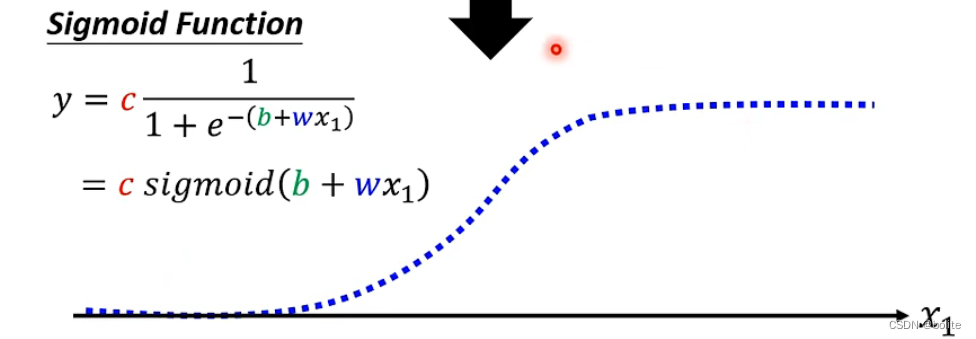

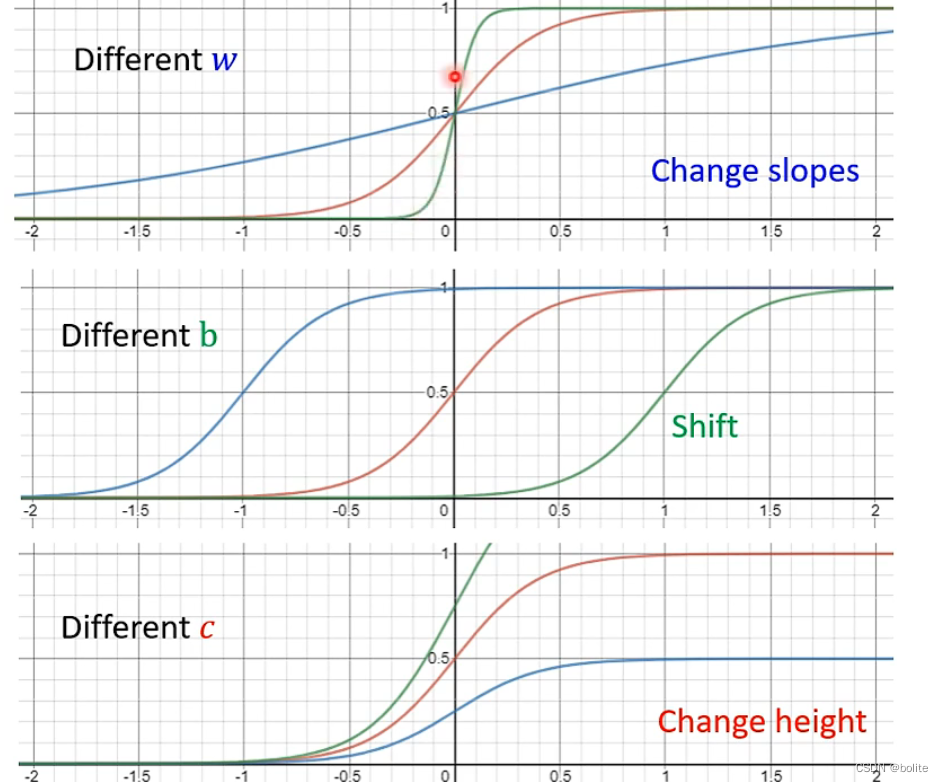

About a single simple blue function

We can keep adjusting the parameters of the sigmoid function (an activation function; other activation functions can be used instead) so that it takes the shape of each individual blue function.

Applying this change to the simple model above gives the following result (x is the same variable as before, the training input data):

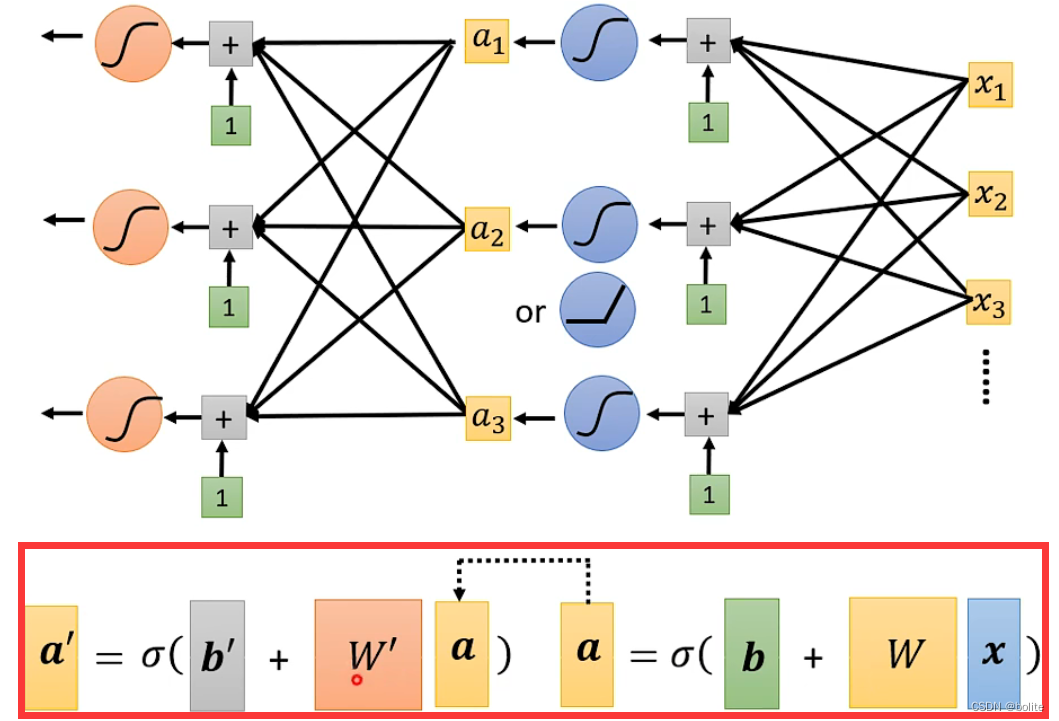
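As a rough sketch of this idea for a single input x, the model becomes a constant plus a weighted sum of sigmoids, y = b + Σ c_i · sigmoid(b_i + w_i · x). The parameter values below are hypothetical and chosen only to show the shape of the computation.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def model(x, b, cs, bs, ws):
    """y = b + sum_i c_i * sigmoid(b_i + w_i * x)."""
    return b + sum(c * sigmoid(b_i + w_i * x) for c, b_i, w_i in zip(cs, bs, ws))


# Hypothetical parameters: one constant (black b) and three sigmoids.
b = 0.5
cs = [1.0, -2.0, 1.5]   # height of each blue function
bs = [0.0, -4.0, -8.0]  # green b_i: shifts each sigmoid along x
ws = [4.0, 4.0, 4.0]    # steepness of each sigmoid

for x in [-2.0, 0.0, 1.0, 2.0, 3.0]:
    print(x, round(model(x, b, cs, bs, ws), 3))
```

Changing c_i, b_i, and w_i changes the height, position, and steepness of each blue function, which is how the sum can follow different target curves.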

Analyze the following formula

Suppose i and j each take only the values 1, 2, 3. Then the operation inside the blue brackets can be written as a matrix operation, r = b + W·x, with r_i = b_i + Σ_j w_ij·x_j.

The value of r is then passed into the sigmoid function.
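A minimal sketch of that matrix step with three inputs and three sigmoids, written in plain Python so the index pattern stays visible. The helper name `affine` and all numbers are made up for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, x, b):
    """r = b + W x, i.e. r_i = b_i + sum_j W[i][j] * x[j]."""
    return [b_i + sum(w_ij * x_j for w_ij, x_j in zip(row, x))
            for row, b_i in zip(W, b)]


# Hypothetical 3x3 weights, green biases, and one input vector.
W = [[1.0, 0.0, -1.0],
     [0.5, 0.5, 0.5],
     [0.0, 2.0, 0.0]]
b = [0.0, -1.0, 1.0]
x = [1.0, 2.0, 3.0]

r = affine(W, x, b)              # matrix operation inside the brackets
a = [sigmoid(r_i) for r_i in r]  # sigmoid applied to each r_i
print(r)
print([round(a_i, 3) for a_i in a])
```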

Variables of the loss function

The input side of the loss function calculation needs some changes.

The previous b and w are replaced by θ.

θ is a one-dimensional vector formed by stacking all the unknown parameters vertically: W, the green b, c, and the black b.

The rest of the calculation is the same as before.
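One way to picture θ is as a flat list that concatenates every unknown parameter, so a single update rule covers all of them. This is a sketch under assumptions: the helper names `pack` and `update`, the parameter values, and the placeholder gradient are all hypothetical.

```python
def pack(W, green_b, c, black_b):
    """Stack W (row by row), the green b, c, and the black b into one list."""
    theta = [w for row in W for w in row]
    theta += green_b
    theta += c
    theta.append(black_b)
    return theta


def update(theta, grad, learning_rate):
    """One gradient descent step applied to every entry of theta."""
    return [t - learning_rate * g for t, g in zip(theta, grad)]


# Hypothetical parameters for two sigmoids with two inputs each.
theta = pack(W=[[1.0, 2.0], [3.0, 4.0]],
             green_b=[0.1, 0.2],
             c=[0.5, 0.6],
             black_b=0.9)

grad = [1.0] * len(theta)  # placeholder gradient, for illustration only
new_theta = update(theta, grad, learning_rate=0.5)
print(len(theta), new_theta[0])
```

In practice the gradient would come from differentiating the loss L(θ) with respect to each entry of θ.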

Neural network

When the result of the first set of sigmoid functions is passed into a new set of sigmoid functions, forming one or more nested operations, the model becomes a neural network. However, a deeper neural network does not necessarily give more accurate results.
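The nesting can be sketched as a loop over layers, where each layer applies the weights, adds the biases, and passes the result through a sigmoid. The function names `layer` and `network` and the weights below are assumptions made for this illustration, not the course's code.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer(W, b, inputs):
    """One layer: a_i = sigmoid(b_i + sum_j W[i][j] * inputs[j])."""
    return [sigmoid(b_i + sum(w * v for w, v in zip(row, inputs)))
            for row, b_i in zip(W, b)]


def network(x, hidden_layers, c, b_out):
    """Feed x through each (W, b) layer in turn, then combine with c and b_out."""
    a = x
    for W, b in hidden_layers:
        a = layer(W, b, a)  # output of one layer is the input of the next
    return b_out + sum(c_i * a_i for c_i, a_i in zip(c, a))


# Hypothetical weights: two inputs, two hidden layers of two units each.
layer1 = ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.1])
layer2 = ([[2.0, 0.0], [0.0, -2.0]], [0.0, 0.0])
x = [1.0, 2.0]

y_one_layer = network(x, [layer1], c=[1.0, 1.0], b_out=0.0)
y_two_layers = network(x, [layer1, layer2], c=[1.0, 1.0], b_out=0.0)
print(round(y_one_layer, 3), round(y_two_layers, 3))
```

Adding more entries to `hidden_layers` makes the network deeper, but as noted above, depth alone does not guarantee a more accurate result.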

边栏推荐

- scrapy 爬虫框架简介

- 对比学习的应用(LCGNN,VideoMoCo,GraphCL,XMC-GAN)

- Intelligent information retrieval (overview of intelligent information retrieval)

- 【GCN-RS】MCL: Mixed-Centric Loss for Collaborative Filtering (WWW‘22)

- Transformer variants (spark transformer, longformer, switch transformer)

- Scott+scott law firm plans to file a class action against Yuga labs, or will confirm whether NFT is a securities product

- 【GCN-RS】Are Graph Augmentations Necessary? Simple Graph Contrastive Learning for RS (SIGIR‘22)

- Atomic 原子类

- Start with the development of wechat official account

- Zero shot image retrieval (zero sample cross modal retrieval)

猜你喜欢

【GCN-RS】Learning Explicit User Interest Boundary for Recommendation (WWW‘22)

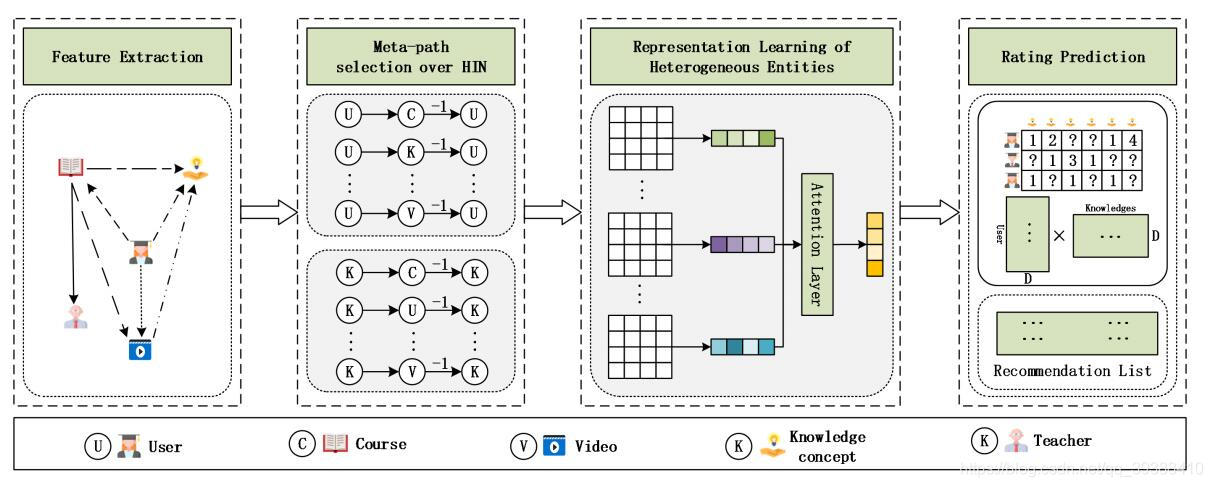

Heterogeneous graph neural network for recommendation system problems (ackrec, hfgn)

![[multimodal] transferrec: learning transferable recommendation from texture of modality feedback arXiv '22](/img/02/5f24b4af44f2f9933ce0f031d69a19.png)

[multimodal] transferrec: learning transferable recommendation from texture of modality feedback arXiv '22

给生活加点惊喜,做创意生活的原型设计师丨编程挑战赛 x 选手分享

![[comparative learning] understanding the behavior of contractual loss (CVPR '21)](/img/96/9b58936365af0ca61aa7a8e97089fe.png)

[comparative learning] understanding the behavior of contractual loss (CVPR '21)

【RS采样】A Gain-Tuning Dynamic Negative Sampler for Recommendation (WWW 2022)

NLP的基本概念1

scrapy 爬虫框架简介

【多模态】《HiT: Hierarchical Transformer with Momentum Contrast for Video-Text Retrieval》ICCV 2021

利用wireshark对TCP抓包分析

随机推荐

通信总线协议一 :UART

[high concurrency] Why is the simpledateformat class thread safe? (six solutions are attached, which are recommended for collection)

【AI4Code】《InferCode: Self-Supervised Learning of Code Representations by Predicting Subtrees》ICSE‘21

Video Caption(跨模态视频摘要/字幕生成)

Multi-Label Image Classification(多标签图像分类)

The applet image cannot display Base64 pictures. The solution is valid

Intelligent information retrieval(智能信息检索综述)

【AI4Code】《CoSQA: 20,000+ Web Queries for Code Search and Question Answering》 ACL 2021

Scott+Scott律所计划对Yuga Labs提起集体诉讼,或将确认NFT是否属于证券产品

客户端开放下载, 欢迎尝鲜

2.1.2 机器学习的应用

【AI4Code】《CodeBERT: A Pre-Trained Model for Programming and Natural Languages》 EMNLP 2020

图神经网络用于推荐系统问题(IMP-GCN,LR-GCN)

Eureka usage record

OSPF综合实验

OSPF comprehensive experiment

Eureka使用记录

NLP知识----pytorch,反向传播,预测型任务的一些小碎块笔记

I advise those students who have just joined the work: if you want to enter the big factory, you must master these concurrent programming knowledge! Complete learning route!! (recommended Collection)

给生活加点惊喜,做创意生活的原型设计师丨编程挑战赛 x 选手分享