
2022-07-25 12:00:00 【chad_lee】

Understanding the Behaviour of Contrastive Loss (CVPR’21)

The temperature coefficient $\tau$ in the contrastive loss is a key parameter, and most papers set $\tau$ to a small value. Starting from an analysis of $\tau$, this paper shows that:

- The contrastive loss automatically mines hard negative samples, which is why it learns high-quality self-supervised representations. Specifically, negatives that are already far away do not need to be pushed further; the loss concentrates on negatives that are still close (hard negatives), which makes the representation space more uniform (similar to the red-circled plot in the figure below).

- The temperature coefficient $\tau$ controls how strongly hard negatives are mined: the smaller $\tau$, the more the loss focuses on hard negatives.

Hardness-Awareness

The most widely used contrastive loss is InfoNCE:

$$\mathcal{L}(x_i) = -\log\left[\frac{\exp(s_{i,i}/\tau)}{\sum_{k \neq i}\exp(s_{i,k}/\tau) + \exp(s_{i,i}/\tau)}\right]$$
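A minimal pure-Python sketch of the formula above for a single anchor $x_i$, assuming the similarities $s_{i,i}$ and $s_{i,k}$ have already been computed (for example as cosine similarities of normalized embeddings). The function name `info_nce` and its arguments are illustrative, not taken from the paper; subtracting the maximum logit is the standard log-sum-exp trick that keeps `exp` from overflowing when $\tau$ is small.

```python
import math


def info_nce(s_pos, s_negs, tau):
    """InfoNCE loss L(x_i) for one anchor (illustrative helper, not the paper's code).

    s_pos  -- s_{i,i}, similarity between x_i and its augmented view (positive)
    s_negs -- list of s_{i,k}, k != i, similarities with the negatives
    tau    -- temperature coefficient
    """
    logits = [s_pos / tau] + [s / tau for s in s_negs]
    m = max(logits)  # subtract the max so exp() cannot overflow for small tau
    log_denominator = m + math.log(sum(math.exp(z - m) for z in logits))
    # -log(exp(s_ii/tau) / denominator) = log(denominator) - s_ii/tau
    return log_denominator - s_pos / tau


# Usage: one positive and three negatives at a small temperature.
loss = info_nce(0.9, [0.1, 0.5, -0.3], tau=0.2)
print(f"InfoNCE loss: {loss:.4f}")
```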

InfoNCE requires the similarity $s_{i,i}$ between the $i$-th sample and its other augmented view (the positive) to be as large as possible, and its similarities $s_{i,k}$ with the other samples (the negatives) to be as small as possible. However, many loss functions satisfy this requirement, for example the simplest one, $\mathcal{L}_{\text{simple}}$:

$$\mathcal{L}_{\text{simple}}(x_i) = -s_{i,i} + \lambda \sum_{j \neq i} s_{i,j}$$
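For comparison, a sketch of $\mathcal{L}_{\text{simple}}$ under the same assumption of precomputed similarities; `simple_loss` and `lam` (for $\lambda$) are illustrative names. Because the loss is linear in every $s_{i,j}$, nudging any negative similarity by $\delta$ changes the loss by exactly $\lambda\delta$, no matter how close or far that negative already is.

```python
def simple_loss(s_pos, s_negs, lam):
    """L_simple(x_i) = -s_{i,i} + lam * sum_{j != i} s_{i,j} (illustrative helper)."""
    return -s_pos + lam * sum(s_negs)


# Nudge a far (easy) negative and a close (hard) negative by the same delta:
# the loss changes by lam * delta in both cases.
s_pos, s_negs, lam, delta = 0.9, [-0.3, 0.8], 0.5, 0.01
base = simple_loss(s_pos, s_negs, lam)
easy_change = simple_loss(s_pos, [s_negs[0] + delta, s_negs[1]], lam) - base
hard_change = simple_loss(s_pos, [s_negs[0], s_negs[1] + delta], lam) - base
print(easy_change, hard_change)  # both approximately lam * delta
```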

However, the two losses give very different training results:

| Dataset | Contrastive Loss | Simple Loss |
|---|---|---|
| CIFAR-10 | 79.75 | 74 |
| CIFAR-100 | 51.82 | 49 |
| ImageNet-100 | 71.53 | 74.31 |
| SVHN | 92.55 | 94.99 |

This is because the Simple Loss penalizes every negative similarity with the same weight, $\partial \mathcal{L}_{\text{simple}} / \partial s_{i,k} = \lambda$: the gradient of the loss with respect to every negative similarity is identical. The contrastive loss, in contrast, automatically penalizes more similar negatives more heavily:

Gradient with respect to the positive similarity:

$$\frac{\partial \mathcal{L}(x_i)}{\partial s_{i,i}} = -\frac{1}{\tau}\sum_{k \neq i} P_{i,k}$$

Gradient with respect to a negative similarity:

$$\frac{\partial \mathcal{L}(x_i)}{\partial s_{i,j}} = \frac{1}{\tau} P_{i,j} \propto \exp(s_{i,j}/\tau)$$

among P i , j = exp ( s i , j / τ ) ∑ k ≠ i exp ( s i , k / τ ) + exp ( s i , i / τ ) P_{i, j}=\frac{\exp \left(s_{i, j /} \tau\right)}{\sum_{k \neq i} \exp \left(s_{i, k} / \tau\right)+\exp \left(s_{i, i} / \tau\right)} Pi,j=∑k=iexp(si,k/τ)+exp(si,i/τ)exp(si,j/τ), For all negative samples , P i , j P_{i, j} Pi,j The denominator of is the same , therefore s i , j s_{i, j} si,j The bigger it is , The gradient term of negative samples is also larger , This gives the negative sample a greater gradient away from the sample .( It can be understood as focal loss, The harder it is, the greater the gradient ). Thus, all samples are encouraged to be evenly distributed on a hypersphere .

To verify that the contrastive loss's advantage really comes from its ability to mine hard negatives, the paper adds explicitly selected hard negatives to the Simple Loss (4096 hard negatives per sample), which improves performance:

| Dataset | Contrastive Loss | Simple Loss + Hard |
|---|---|---|
| CIFAR-10 | 79.75 | 84.84 |
| CIFAR-100 | 51.82 | 55.71 |
| ImageNet-100 | 71.53 | 74.31 |
| SVHN | 92.55 | 94.99 |
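A sketch of the "Simple Loss + Hard" idea, assuming hardness is judged by similarity and only the $K$ most similar negatives enter the penalty term (the experiment above uses $K = 4096$; here $K$ is tiny for illustration). The function name and the top-$K$ selection rule are an illustrative reading of the experiment, not the paper's code. Because easy negatives are dropped, pushing an already-distant negative even further no longer changes the loss.

```python
import heapq


def hard_simple_loss(s_pos, s_negs, lam, k):
    """Simple Loss restricted to the k hardest negatives (hypothetical helper).

    Assumption: "hard" = largest similarity s_{i,j}; only those k negatives
    are penalized, each with the same weight lam.
    """
    hardest = heapq.nlargest(k, s_negs)  # k most similar negatives
    return -s_pos + lam * sum(hardest)


s_negs = [0.1, 0.5, -0.3, 0.8]
print(hard_simple_loss(0.9, s_negs, lam=1.0, k=2))  # penalizes only 0.8 and 0.5
# Moving the easiest negative (-0.3) even further away leaves the loss unchanged.
print(hard_simple_loss(0.9, [0.1, 0.5, -0.9, 0.8], lam=1.0, k=2))
```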

Temperature $\tau$ Controls the Degree of Hardness-Awareness

The smaller the temperature $\tau$, the more the loss concentrates on hard negatives. In particular:

When $\tau \to 0^{+}$, the contrastive loss degenerates into a loss that attends only to the hardest negative:

$$\lim_{\tau \to 0^{+}} \frac{1}{\tau}\max\left[s_{\max} - s_{i,i},\ 0\right], \qquad s_{\max} = \max_{k \neq i} s_{i,k}$$

This means the negatives are pushed away one at a time, hardest first, until every negative sits at the same distance from the anchor.
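The $\tau \to 0^{+}$ limit can be checked numerically: $\tau\,\mathcal{L}(x_i)$ should approach $\max[s_{\max} - s_{i,i}, 0]$ as $\tau$ shrinks. A pure-Python sketch with illustrative names and similarity values; here the hardest negative (0.7) is more similar to the anchor than the positive (0.3), so the limit is 0.4. If the positive were the most similar, the limit would be 0.

```python
import math


def info_nce(s_pos, s_negs, tau):
    """InfoNCE loss for one anchor (stable log-sum-exp); illustrative helper."""
    logits = [s_pos / tau] + [s / tau for s in s_negs]
    m = max(logits)
    return m + math.log(sum(math.exp(z - m) for z in logits)) - s_pos / tau


def zero_temperature_limit(s_pos, s_negs):
    """max[s_max - s_{i,i}, 0]: only the hardest negative matters."""
    return max(max(s_negs) - s_pos, 0.0)


s_pos, s_negs = 0.3, [0.1, 0.7, 0.2]
target = zero_temperature_limit(s_pos, s_negs)
for tau in (1.0, 0.1, 0.01, 0.001):
    scaled = tau * info_nce(s_pos, s_negs, tau)  # tau * L(x_i)
    print(f"tau={tau:<6} tau*L={scaled:.6f}  limit={target:.6f}")
```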

When $\tau \to \infty$, the contrastive loss approximately degenerates into the Simple Loss, giving every negative the same weight.
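The relative penalty on each negative is its share of the total negative gradient, $P_{i,j}/\sum_{k \neq i}P_{i,k} = \exp(s_{i,j}/\tau)/\sum_{k \neq i}\exp(s_{i,k}/\tau)$ (the shared denominator of $P$ cancels), i.e. a softmax over the negative similarities at temperature $\tau$. This sketch, with illustrative names and values, shows both extremes: at small $\tau$ almost all the weight goes to the hardest negative, while at large $\tau$ the weights approach the uniform $1/N$ that the Simple Loss uses.

```python
import math


def negative_weights(s_negs, tau):
    """Share of the total negative gradient assigned to each negative.

    Equals exp(s_{i,j}/tau) / sum_{k != i} exp(s_{i,k}/tau): a softmax over
    the negative similarities at temperature tau (illustrative helper).
    """
    m = max(s_negs)  # shift for numerical stability; cancels in the ratio
    exps = [math.exp((s - m) / tau) for s in s_negs]
    total = sum(exps)
    return [e / total for e in exps]


s_negs = [0.1, 0.5, 0.8]  # the last negative is the hardest
for tau in (0.01, 0.1, 1.0, 1000.0):
    weights = negative_weights(s_negs, tau)
    print(f"tau={tau:<7}", [round(w, 4) for w in weights])
```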

Hence the smaller $\tau$, the more uniformly the sample features are distributed. This is not necessarily a good thing, however, because potential positives (false negatives) are pushed away as well.
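One common way to quantify "more uniform" is the uniformity measure of Wang and Isola (2020), $\log \mathbb{E}\left[e^{-t\lVert u - v\rVert^2}\right]$ over pairs of normalized embeddings, usually with $t = 2$; lower values mean a more uniform spread. Whether this exact measure matches the paper's experimental setup is an assumption here. The pure-Python sketch below, with illustrative names and toy 2-D embeddings, compares points spread evenly around the unit circle with points squeezed into a small arc.

```python
import math


def uniformity(embeddings, t=2.0):
    """log of the mean Gaussian potential exp(-t * ||u - v||^2) over distinct pairs.

    Embeddings are assumed to be L2-normalized; lower values = more uniform.
    """
    total, pairs = 0.0, 0
    for a in range(len(embeddings)):
        for b in range(a + 1, len(embeddings)):
            sq_dist = sum((x - y) ** 2 for x, y in zip(embeddings[a], embeddings[b]))
            total += math.exp(-t * sq_dist)
            pairs += 1
    return math.log(total / pairs)


def on_circle(angles):
    """Unit vectors (cos a, sin a) for the given angles."""
    return [(math.cos(a), math.sin(a)) for a in angles]


n = 8
spread = on_circle([2 * math.pi * k / n for k in range(n)])  # evenly spaced
clustered = on_circle([0.05 * k for k in range(n)])          # within 0.35 rad
print("spread:   ", uniformity(spread))
print("clustered:", uniformity(clustered))
```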

边栏推荐

- Varest blueprint settings JSON

- 擎创科技加入龙蜥社区,共建智能运维平台新生态

- 11. Reading rumors spread with deep learning

- Brpc source code analysis (VI) -- detailed explanation of basic socket

- Web APIs (get element event basic operation element)

- 银行理财子公司蓄力布局A股;现金管理类理财产品整改加速

- Oil monkey script link

- W5500 adjusts the brightness of LED light band through upper computer control

- pycharm连接远程服务器ssh -u 报错:No such file or directory

- 【IMX6ULL笔记】--内核底层驱动初步探究

猜你喜欢

硬件连接服务器 tcp通讯协议 gateway

Javescript loop

30 sets of Chinese style ppt/ creative ppt templates

Experimental reproduction of image classification (reasoning only) based on caffe resnet-50 network

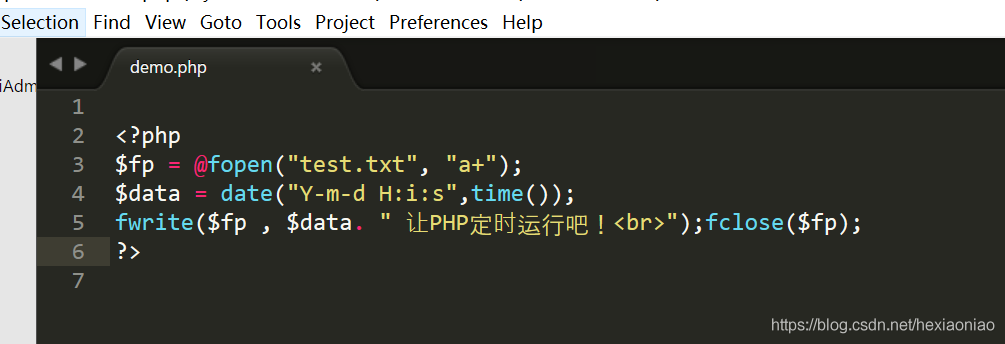

winddows 计划任务执行bat 执行PHP文件 失败的解决办法

教你如何通过MCU将S2E配置为UDP的工作模式

Return and finally? Everyone, please look over here,

【GCN-CTR】DC-GNN: Decoupled GNN for Improving and Accelerating Large-Scale E-commerce Retrieval WWW22

Miidock Brief

Differences in usage between tostring() and new string()

随机推荐

程序员送给女孩子的精美礼物,H5立方体,唯美,精致,高清

brpc源码解析(六)—— 基础类socket详解

[leetcode brush questions]

W5500 upload temperature and humidity to onenet platform

【6篇文章串讲ScalableGNN】围绕WWW 2022 best paper《PaSca》

30 sets of Chinese style ppt/ creative ppt templates

[cloud co creation] what is the role of AI in mathematics? What will be the disruptive impact on the mathematical world in the future?

toString()与new String()用法区别

JS operator

奉劝那些刚参加工作的学弟学妹们:要想进大厂,这些并发编程知识是你必须要掌握的!完整学习路线!!(建议收藏)

Learning to Pre-train Graph Neural Networks(图预训练与微调差异)

'C:\xampp\php\ext\php_zip.dll' - %1 不是有效的 Win32 应用程序 解决

油猴脚本链接

JS中的函数

【高并发】高并发场景下一种比读写锁更快的锁,看完我彻底折服了!!(建议收藏)

Teach you how to configure S2E to UDP working mode through MCU

Make a reliable delay queue with redis

【GCN-RS】MCL: Mixed-Centric Loss for Collaborative Filtering (WWW‘22)

微星主板前面板耳机插孔无声音输出问题【已解决】

Wiznet embedded Ethernet technology training open class (free!!!)