How to use a crawler to capture danmaku (bullet comments) and comment data from Bilibili?

2022-06-25 03:54:00 【Blockchain research】

Tool preparation:

Cloud collection crawler: http://www.yuncaix.com/

Goal:

As an example, we capture the data of this video:

https://www.bilibili.com/video/BV1q741167KA?from=search

We mainly capture the comments, the title, and the danmaku (bullet comments), as pictured:

Data modeling:

A preview of the entire data structure is as follows:

There are two key fields, danmaku and comments. We set the data type of each to data grouping, so that a field such as comments can store multiple entries.

Crawling process

Designing the flow chart:

We are only crawling a single detail page, so we just drag in one detail page component, as pictured:

Capturing video information

The video information is stored in the page source, so we only need a way to extract it. However, it is a JSON object defined inside a piece of JS code. How do we extract it?

We drag in a 【Data processing】 component which, as the name suggests, processes data.

The rule is as follows:

window.__INITIAL_STATE__=[data];(function()

We use string interception to cut out the JSON in the middle, where [data] marks the part to keep. The two delimiters can be found by reading the page source, which takes a bit of a sharp eye.
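For readers who want to see what this interception rule does, here is a minimal Python sketch of the same idea. It is not the tool's implementation; the function name and the sample HTML (including its keys and values) are made up for illustration, and only the two delimiters come from the rule above.

```python
import json

# Delimiters taken from the rule: window.__INITIAL_STATE__=[data];(function()
START_MARKER = "window.__INITIAL_STATE__="
END_MARKER = ";(function()"


def extract_initial_state(html: str) -> dict:
    """Cut out the JSON that sits between the two markers and parse it."""
    start = html.index(START_MARKER) + len(START_MARKER)
    end = html.index(END_MARKER, start)
    return json.loads(html[start:end])


# Made-up page fragment, used only to exercise the function offline.
sample_html = (
    "<script>window.__INITIAL_STATE__="
    '{"aid": 85246284, "videoData": {"title": "Sample title"}}'
    ";(function(){})();</script>"
)

state = extract_initial_state(sample_html)
print(state["videoData"]["title"])
```

If either marker is missing, str.index raises ValueError, which is a reasonable signal that the page layout has changed.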

Then we click debug and get a piece of JSON data:

Let's paste this data into a JSON parsing tool, such as https://json.cn/, to see what it contains.

The picture shows what this JSON contains.

It includes the video data and the uploader's (UP master's) information, such as the number of followers and the play counts.

Next we drag in a 【Data extractor】 to extract these fields, as pictured:

For example, to get the title field:

Its JSON path is

videoData.title

The rule is as follows:

Choose the JSON extraction mode.

Test result:

This gives us the video title. Other fields, such as the number of followers and the number of danmaku, are extracted the same way; there are too many to describe one by one.
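The JSON path lookup can be sketched in a few lines of Python. This is only an illustration of what a dotted path such as videoData.title means. The helper name get_by_path and the sample data are assumptions, and upData.fans is a hypothetical path standing in for the other fields.

```python
from functools import reduce
from typing import Any


def get_by_path(data: Any, path: str) -> Any:
    """Follow a dot-separated path such as 'videoData.title' through nested dicts."""
    return reduce(lambda node, key: node[key], path.split("."), data)


# Made-up sample; only the path videoData.title comes from the article.
sample_state = {
    "videoData": {"title": "Sample title"},
    "upData": {"fans": 12345},  # hypothetical field for illustration
}

print(get_by_path(sample_state, "videoData.title"))
print(get_by_path(sample_state, "upData.fans"))
```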

Let's move on to the second stage: capturing comments.

Capturing comments

First we need to work out where the comments come from.

Scroll down the page and the comment data will be loaded. Analyze the requests in the Chrome browser's developer tools; you can type the keyword reply to filter them. (It took me quite a while to find this.)

This gives us the address of the JS request: https://api.bilibili.com/x/v2/reply?callback=jQuery172007409334557635194_1587209947092&jsonp=jsonp&pn=1&type=1&oid=85246284&sort=2&_=1587209958023

If we access it directly, an error occurs:

What to do?

I then found that simply removing the parameter callback=jQuery172007409334557635194_1587209947092& fixes it.

The timestamp parameter (_=1587209958023) should be removed as well.

So the address becomes https://api.bilibili.com/x/v2/reply?jsonp=jsonp&pn=1&type=1&oid=85246284&sort=2 and the data comes out.

If you ask me why, I don't know either. Sometimes intuition just works. Interested readers can analyze the reasons.
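The clean-up of the address can also be done programmatically. Below is a small Python sketch that drops the callback and _ parameters with the standard urllib.parse module. The function name strip_params is an assumption. A callback parameter is the usual way of asking for a JSONP-wrapped response, which may be related to the error, but the article does not confirm the cause.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def strip_params(url: str, drop=("callback", "_")) -> str:
    """Return the URL with the named query parameters removed, order preserved."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in drop
    ]
    return urlunsplit(parts._replace(query=urlencode(kept)))


raw_url = (
    "https://api.bilibili.com/x/v2/reply"
    "?callback=jQuery172007409334557635194_1587209947092"
    "&jsonp=jsonp&pn=1&type=1&oid=85246284&sort=2&_=1587209958023"
)

clean_url = strip_params(raw_url)
print(clean_url)
# https://api.bilibili.com/x/v2/reply?jsonp=jsonp&pn=1&type=1&oid=85246284&sort=2
```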

Let's format the data:

The comments are in data.replies.

Now let's think about how to capture these comments.

First we need to construct this address so that the crawler can fetch the comments from it. How do we construct it?

Let's go back and analyze the structure of the comment address:

https://api.bilibili.com/x/v2/reply?jsonp=jsonp&pn=1&type=1&oid=85246284&sort=2&_=1587209958023

The parameter pn=1 is the page number.

For oid=85246284, where does this oid come from?

Searching the page source for 85246284 shows that the value appears in many places; let's call it aid.

Once we have this aid, we can construct the comment address.

We need a 【Temporary data】 component to store variables temporarily so they can be used anywhere later.

Add a new variable named aid.

The flow chart now looks like this:

Test result:

Now that we have the aid, how do we construct the address?

We introduce another 【Data processing】 component to turn the data into an address.

For the source we choose "none", and for the rule acquisition method we choose the template language.

https://api.bilibili.com/x/v2/reply?jsonp=jsonp&pn=1&type=1&oid={{{tempVar.aid}}}&sort=2

The aid variable we defined above can be embedded with tempVar.aid.
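The template step amounts to filling aid (and, for paging, pn) into a fixed query string. A minimal Python sketch of that construction follows. The function name build_comment_url is an assumption, while the parameter names and values are copied from the address above.

```python
from urllib.parse import urlencode

COMMENT_API = "https://api.bilibili.com/x/v2/reply"


def build_comment_url(aid: int, page: int = 1) -> str:
    """Build the comment address for a given aid and page number (pn)."""
    params = {"jsonp": "jsonp", "pn": page, "type": 1, "oid": aid, "sort": 2}
    return f"{COMMENT_API}?{urlencode(params)}"


first_page = build_comment_url(85246284)
print(first_page)

# Paging only changes pn, so further pages come from the same helper.
for page in range(1, 4):
    print(build_comment_url(85246284, page))
```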

The test results are as follows:

We have the address. What next?

We jump to the new comment page.

For this we introduce a jump component, as shown below:

For the acquisition rule we choose 【Original value】 which, as the name suggests, uses the upstream component's data unchanged.

To land on the comments page, drag in another 【Details page】 component.

Now we need to extract the desired data from this page.

Put the comment address into the debugger, as pictured:

There are multiple comments. How do we extract them?

We introduce a 【Field loop area】 component.

The bound field here is a data grouping; remember to select the comment field.

For data acquisition, simply fill in data.replies

The test results are as follows:

If this is not intuitive enough, click 【trace】 at the bottom to see more details:

Once we have the loop data, we can use a 【Data extractor】 to extract each value directly.

Fill in:

content.message

Why this value?

Look back at the JSON data structure analyzed above: each item in data.replies carries its text under content.message.

Test result:

OK.
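The loop over data.replies followed by the content.message extraction can be sketched in Python as follows. The function name and the sample response are made up; only the two paths come from the steps above. The sketch treats a missing or null replies field as an empty list, which is an assumption about how an empty page might look.

```python
from typing import List


def extract_comments(response: dict) -> List[str]:
    """Loop over data.replies and collect content.message from each item."""
    replies = (response.get("data") or {}).get("replies") or []
    return [reply["content"]["message"] for reply in replies]


# Made-up response with the same shape as the paths used in the article.
sample_response = {
    "data": {
        "replies": [
            {"content": {"message": "first comment"}},
            {"content": {"message": "second comment"}},
        ]
    }
}

messages = extract_comments(sample_response)
print(messages)
```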

Capturing danmaku data

Using the same analysis method as above, we find the AJAX request address of the danmaku:

https://api.bilibili.com/x/v1/dm/list.so?oid=145727684

All the danmaku data is in there.

How do we extract it?

In the same way. It would take too long to describe, so we won't go into it.
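Since the article skips this step, here is a hedged Python sketch of one way to parse such a response. It assumes the endpoint returns an XML document in which each danmaku is a d element whose text is the message; the article does not show the response format, so treat that structure, the sample XML, and the function name as assumptions.

```python
import xml.etree.ElementTree as ET
from typing import List


def parse_danmaku(xml_text: str) -> List[str]:
    """Collect the text of every <d> element (assumed to hold one danmaku each)."""
    root = ET.fromstring(xml_text)
    return [element.text or "" for element in root.iter("d")]


# Made-up document in the assumed format, used to run the parser offline.
sample_xml = (
    "<i>"
    '<d p="1.5,1,25,16777215">first danmaku</d>'
    '<d p="3.0,1,25,16777215">second danmaku</d>'
    "</i>"
)

danmaku = parse_danmaku(sample_xml)
print(danmaku)
```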

The final flow chart looks like this:

It also includes settings such as pagination.

This is a preview of the captured data:

Viewing the comment data separately:

The data can be exported easily:

In the end we did not have to write any code or use any advanced technique, and the whole crawl was completed easily. Once you master this tool, you can build a fairly complex web crawler in about ten minutes.

Interested users can use this product to capture other data, including Weibo, Zhihu, and so on.

Cloud collection crawler: http://www.yuncaix.com/

边栏推荐

- 墨天轮访谈 | IvorySQL王志斌—IvorySQL,一个基于PostgreSQL的兼容Oracle的开源数据库

- Install ffmpeg in LNMP environment and use it in yii2

- 你真的需要自动化测试吗?

- 吴恩达机器学习新课程又来了!旁听免费,小白友好

- Understand (DI) dependency injection in PHP

- 一文搞懂php中的(DI)依赖注入

- 亚马逊在中国的另一面

- Self cultivation and learning encouragement

- 現在,耳朵也要進入元宇宙了

- The release function completed 02 "IVX low code sign in system production"

猜你喜欢

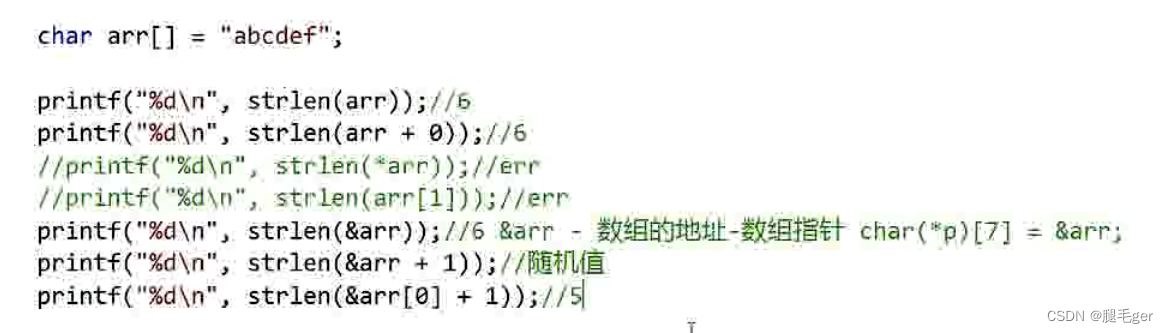

About sizeof() and strlen in array

Rebeco: using machine learning to predict stock crash risk

协作+安全+存储,云盒子助力深圳爱德泰重构数据中心

MySQL modifies and deletes tables in batches according to the table prefix

Time management understood after working at home | community essay solicitation

【Harmony OS】【ARK UI】ETS 上下文基本操作

严重的PHP缺陷可导致QNAP NAS 设备遭RCE攻击

【Harmony OS】【ArkUI】ets开发 图形与动画绘制

Zuckerberg's latest VR prototype is coming. It is necessary to confuse virtual reality with reality

How to raise key issues in the big talk club?

随机推荐

程序猿职业发展9项必备软技能

签到功能完成03《ivx低代码签到系统制作》

亚马逊在中国的另一面

Russian Airi Research Institute, etc. | SEMA: prediction of antigen B cell conformation characterization using deep transfer learning

【Rust投稿】从零实现消息中间件(6)-CLIENT

协作+安全+存储,云盒子助力深圳爱德泰重构数据中心

Svn deployment

OpenSUSE environment variable settings

Solution to the problem that Linux crontab timed operation Oracle does not execute (crontab environment variable problem)

The more AI evolves, the more it resembles the human brain! Meta found the "prefrontal cortex" of the machine. AI scholars and neuroscientists were surprised

Is it safe for tonghuashun securities to open an account

Peking University has a new president! Gongqihuang, academician of the Chinese Academy of Sciences, took over and was admitted to the Physics Department of Peking University at the age of 15

Time management understood after working at home | community essay solicitation

陆奇首次出手投资量子计算

扎克伯格最新VR原型机来了,要让人混淆虚拟与现实的那种

OpenSUSE environment PHP connection Oracle

On the self-cultivation of an excellent red team member

About sizeof() and strlen in array

A new generation of cascadable Ethernet Remote i/o data acquisition module

Sleep more, you can lose weight. According to the latest research from the University of Chicago, sleeping more than 1 hour a day is equivalent to eating less than one fried chicken leg