
“IRuntime”: undeclared identifier

2022-07-24 21:32:00 【AI视觉网奇】

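Compiler error: “IRuntime”: undeclared identifier. IRuntime is declared in namespace nvinfer1 (header NvInferRuntime.h). The complete usage below includes the TensorRT headers and always writes the name as nvinfer1::IRuntime.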
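Here is a minimal sketch of the naming fix. It assumes the header itself is already included. The namespace nvinfer1 and class IRuntime below are a hypothetical stub standing in for the real TensorRT header, so the snippet compiles without TensorRT installed. The commented-out line shows the unqualified use that triggers the error.

```cpp
#include <string>

// Hypothetical stub standing in for the real TensorRT header (NOT TensorRT code).
namespace nvinfer1 {
class IRuntime {
public:
    std::string describe() const { return "runtime"; }
};
}  // namespace nvinfer1

// IRuntime* runtime = nullptr;  // unqualified: "'IRuntime': undeclared identifier"

// Fix 1: qualify the name with its namespace, as the complete usage does.
std::string describe_qualified(const nvinfer1::IRuntime& runtime) {
    return runtime.describe();
}

// Fix 2: import just this one name into the current scope.
using nvinfer1::IRuntime;
std::string describe_imported(const IRuntime& runtime) {
    return runtime.describe();
}
```

A third option is `using namespace nvinfer1;`, which imports every TensorRT name at once. Qualifying explicitly, as the original code does, keeps it obvious which names come from TensorRT.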

Complete usage, taken from the CSDN blog post “TensorRT 系列 (1) 模型推理” (TensorRT series (1): model inference) by 洪流之源:

```cpp
// TensorRT includes
#include <NvInfer.h>
#include <NvInferRuntime.h>

// CUDA includes
#include <cuda_runtime.h>

// system includes
#include <stdio.h>
#include <math.h>
#include <cstdint>
#include <iostream>
#include <fstream>
#include <string>
#include <vector>

using namespace std;

// Logger from the previous section. The builder and the runtime both need an ILogger
// that outlives them, so one global instance is shared by build_model() and inference().
class TRTLogger : public nvinfer1::ILogger
{
public:
    virtual void log(Severity severity, nvinfer1::AsciiChar const* msg) noexcept override
    {
        // Print only messages of level kINFO or more severe
        if(severity <= Severity::kINFO)
        {
            // Severity is a scoped enum, so cast it before passing it to %d
            printf("%d: %s\n", static_cast<int>(severity), msg);
        }
    }
} logger;

// Wrap a host float array as TensorRT weights. TensorRT only references the memory,
// so the array must stay alive until the engine has been built.
nvinfer1::Weights make_weights(float* ptr, int n)
{
    nvinfer1::Weights w;
    w.count = n;
    w.type = nvinfer1::DataType::kFLOAT;
    w.values = ptr;
    return w;
}

bool build_model()
{
    // The basic components needed to build an engine
    nvinfer1::IBuilder* builder = nvinfer1::createInferBuilder(logger);
    nvinfer1::IBuilderConfig* config = builder->createBuilderConfig();
    // Explicit-batch flag; this expression evaluates to 1
    const uint32_t explicit_batch = 1U << static_cast<uint32_t>(nvinfer1::NetworkDefinitionCreationFlag::kEXPLICIT_BATCH);
    nvinfer1::INetworkDefinition* network = builder->createNetworkV2(explicit_batch);

    // Build a model
    /*
        Network definition:

        image
          |
        linear (fully connected)  input = 3, output = 2, bias = True
                                  w = [[1.0, 2.0, 0.5], [0.1, 0.2, 0.5]], b = [0.3, 0.8]
          |
        sigmoid
          |
        prob
    */
    const int num_input = 3;
    const int num_output = 2;
    float layer1_weight_values[] = {1.0, 2.0, 0.5, 0.1, 0.2, 0.5};  // row-major [num_output][num_input]
    float layer1_bias_values[] = {0.3, 0.8};

    nvinfer1::ITensor* input = network->addInput("image", nvinfer1::DataType::kFLOAT, nvinfer1::Dims4(1, num_input, 1, 1));
    nvinfer1::Weights layer1_weight = make_weights(layer1_weight_values, 6);
    nvinfer1::Weights layer1_bias = make_weights(layer1_bias_values, 2);
    auto layer1 = network->addFullyConnected(*input, num_output, layer1_weight, layer1_bias);
    auto prob = network->addActivation(*layer1->getOutput(0), nvinfer1::ActivationType::kSIGMOID);

    // Mark prob as the network output
    network->markOutput(*prob->getOutput(0));

    printf("Workspace Size = %.2f MB\n", (1 << 28) / 1024.0f / 1024.0f);
    config->setMaxWorkspaceSize(1 << 28);  // 256 MB
    builder->setMaxBatchSize(1);  // has no effect on explicit-batch networks

    nvinfer1::ICudaEngine* engine = builder->buildEngineWithConfig(*network, *config);
    if(engine == nullptr)
    {
        printf("Build engine failed.\n");
        network->destroy();
        config->destroy();
        builder->destroy();
        return false;
    }

    // Serialize the engine and save it to a file
    nvinfer1::IHostMemory* model_data = engine->serialize();
    FILE* f = fopen("engine.trtmodel", "wb");
    bool saved = (f != nullptr);
    if(saved)
    {
        saved = fwrite(model_data->data(), 1, model_data->size(), f) == model_data->size();
        fclose(f);
    }

    // Release objects in the reverse order of creation
    model_data->destroy();
    engine->destroy();
    network->destroy();
    config->destroy();
    builder->destroy();

    if(!saved)
    {
        printf("Saving engine.trtmodel failed.\n");
        return false;
    }
    printf("Done.\n");
    return true;
}

// Read a whole binary file into memory; returns an empty vector on failure
vector<unsigned char> load_file(const string& file)
{
    ifstream in(file, ios::in | ios::binary);
    if (!in.is_open())
        return {};

    in.seekg(0, ios::end);
    size_t length = in.tellg();
    vector<unsigned char> data;
    if (length > 0){
        in.seekg(0, ios::beg);
        data.resize(length);
        in.read((char*)&data[0], length);
    }
    in.close();
    return data;
}

void inference(){
    // ------------------------------ 1. Prepare and load the model ----------------------------
    auto engine_data = load_file("engine.trtmodel");
    if(engine_data.empty()){
        printf("Loading engine.trtmodel failed.\n");
        return;
    }

    // Before running inference, create a runtime instance. Like the builder, the runtime needs a logger.
    nvinfer1::IRuntime* runtime = nvinfer1::createInferRuntime(logger);

    // With the model read into engine_data, deserialize it to obtain the engine
    nvinfer1::ICudaEngine* engine = runtime->deserializeCudaEngine(engine_data.data(), engine_data.size());
    if(engine == nullptr){
        printf("Deserialize cuda engine failed.\n");
        runtime->destroy();
        return;
    }

    nvinfer1::IExecutionContext* execution_context = engine->createExecutionContext();
    cudaStream_t stream = nullptr;
    // Create a CUDA stream so that the inference for this batch runs independently
    cudaStreamCreate(&stream);

    // Network definition: the same image -> linear -> sigmoid -> prob graph as in build_model()

    // ------------------------------ 2. Prepare the input data and copy it to the GPU ----------------------------
    float input_data_host[] = {1, 2, 3};
    float* input_data_device = nullptr;
    float output_data_host[2];
    float* output_data_device = nullptr;
    cudaMalloc(&input_data_device, sizeof(input_data_host));
    cudaMalloc(&output_data_device, sizeof(output_data_host));
    cudaMemcpyAsync(input_data_device, input_data_host, sizeof(input_data_host), cudaMemcpyHostToDevice, stream);

    // Array of device pointers for the input and output: index 0 = "image", index 1 = prob
    void* bindings[] = {input_data_device, output_data_device};

    // ------------------------------ 3. Run inference and copy the result back to the CPU ----------------------------
    bool success = execution_context->enqueueV2(bindings, stream, nullptr);
    if(!success){
        printf("enqueueV2 failed.\n");
    }
    cudaMemcpyAsync(output_data_host, output_data_device, sizeof(output_data_host), cudaMemcpyDeviceToHost, stream);
    // enqueueV2 and cudaMemcpyAsync are asynchronous: wait for the stream before reading the result
    cudaStreamSynchronize(stream);
    printf("output_data_host = %f, %f\n", output_data_host[0], output_data_host[1]);

    // ------------------------------ 4. Free memory ----------------------------
    printf("Clean memory\n");
    cudaStreamDestroy(stream);
    cudaFree(input_data_device);
    cudaFree(output_data_device);
    execution_context->destroy();
    engine->destroy();
    runtime->destroy();

    // ------------------------------ 5. Verify by computing the result manually ----------------------------
    const int num_input = 3;
    const int num_output = 2;
    float layer1_weight_values[] = {1.0, 2.0, 0.5, 0.1, 0.2, 0.5};
    float layer1_bias_values[] = {0.3, 0.8};

    printf("Manually verified results:\n");
    for(int io = 0; io < num_output; ++io)
    {
        float output_host = layer1_bias_values[io];
        for(int ii = 0; ii < num_input; ++ii)
        {
            output_host += layer1_weight_values[io * num_input + ii] * input_data_host[ii];
        }
        // sigmoid
        float prob = 1 / (1 + exp(-output_host));
        printf("output_prob[%d] = %f\n", io, prob);
    }
}

int main()
{
    if(!build_model())
    {
        return -1;
    }
    inference();
    return 0;
}
```
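Step 5's manual verification can be factored into a reusable reference. This lets you check the printed TensorRT output without the GPU. The sketch assumes weights are stored row-major as [num_output][num_input], as layer1_weight_values is. fc_sigmoid_reference and outputs_match are hypothetical helper names, not part of the original post.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// CPU reference for the toy network: prob = sigmoid(W * x + b),
// with W stored row-major as [num_output][num_input].
std::vector<float> fc_sigmoid_reference(const std::vector<float>& w,
                                        const std::vector<float>& b,
                                        const std::vector<float>& x) {
    const std::size_t num_output = b.size();
    const std::size_t num_input = x.size();
    assert(w.size() == num_output * num_input);  // weight shape must match
    std::vector<float> prob(num_output);
    for (std::size_t o = 0; o < num_output; ++o) {
        float acc = b[o];  // start from the bias
        for (std::size_t i = 0; i < num_input; ++i) {
            acc += w[o * num_input + i] * x[i];
        }
        prob[o] = 1.0f / (1.0f + std::exp(-acc));  // sigmoid
    }
    return prob;
}

// True when both vectors have the same length and differ by at most tol element-wise.
bool outputs_match(const std::vector<float>& a, const std::vector<float>& b, float tol) {
    if (a.size() != b.size()) return false;
    for (std::size_t k = 0; k < a.size(); ++k) {
        if (std::fabs(a[k] - b[k]) > tol) return false;
    }
    return true;
}
```

Take x = {1, 2, 3}. The pre-activations are 0.3 + 1*1 + 2*2 + 0.5*3 = 6.8 and 0.8 + 0.1*1 + 0.2*2 + 0.5*3 = 2.8. The reference therefore gives approximately 0.998887 and 0.942676, and a correctly built FP32 engine should print values close to these.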

Original link: https://blog.csdn.net/weicao1990/article/details/125034572

makefile:

```makefile
cc := g++
name := pro
workdir := workspace
srcdir := src
objdir := objs
stdcpp := c++11
cuda_home := /home/liuhongyuan/miniconda3/envs/trtpy/lib/python3.8/site-packages/trtpy/trt8cuda112cudnn8
syslib := /home/liuhongyuan/miniconda3/envs/trtpy/lib/python3.8/site-packages/trtpy/lib
cpp_pkg := /home/liuhongyuan/miniconda3/envs/trtpy/lib/python3.8/site-packages/trtpy/cpp-packages
cuda_arch :=
nvcc := $(cuda_home)/bin/nvcc -ccbin=$(cc)

# Find the .cpp sources and derive their object files and dependency (.mk) files
cpp_srcs := $(shell find $(srcdir) -name "*.cpp")
cpp_objs := $(cpp_srcs:.cpp=.cpp.o)
cpp_objs := $(cpp_objs:$(srcdir)/%=$(objdir)/%)
cpp_mk := $(cpp_objs:.cpp.o=.cpp.mk)

# Same for the .cu sources
cu_srcs := $(shell find $(srcdir) -name "*.cu")
cu_objs := $(cu_srcs:.cu=.cu.o)
cu_objs := $(cu_objs:$(srcdir)/%=$(objdir)/%)
cu_mk := $(cu_objs:.cu.o=.cu.mk)

# Libraries to link for CUDA, TensorRT, OpenCV and the system
link_cuda := cudart cudnn
link_trtpro :=
link_tensorRT := nvinfer
link_opencv :=
link_sys := stdc++ dl
link_librarys := $(link_cuda) $(link_tensorRT) $(link_sys) $(link_opencv)

# Header search paths. Nothing, not even a space, may follow a trailing backslash.
# List the paths only; -I is added automatically below
include_paths := src \
    $(cuda_home)/include/cuda \
    $(cuda_home)/include/tensorRT \
    $(cpp_pkg)/opencv4.2/include

# Library search paths; list the paths only, -L is added automatically below
library_paths := $(cuda_home)/lib64 $(syslib) $(cpp_pkg)/opencv4.2/lib

# Join the library paths into one string, e.g. a b c => a:b:c,
# which is then exported as LD_LIBRARY_PATH=a:b:c
empty :=
library_path_export := $(subst $(empty) $(empty),:,$(library_paths))

# Add -Wl,-rpath=, -I, -L and -l to every path and library in bulk
run_paths := $(foreach item,$(library_paths),-Wl,-rpath=$(item))
include_paths := $(foreach item,$(include_paths),-I$(item))
library_paths := $(foreach item,$(library_paths),-L$(item))
link_librarys := $(foreach item,$(link_librarys),-l$(item))

# For a specific GPU, set cuda_arch (empty above) to match its compute capability,
# e.g. cuda_arch := -gencode=arch=compute_75,code=sm_75
# Compute capability of each GPU: https://developer.nvidia.com/zh-cn/cuda-gpus#compute
# On Jetson Nano, if -m64 is reported as an unknown option, remove -m64; this does not affect the result
cpp_compile_flags := -std=$(stdcpp) -w -g -O0 -m64 -fPIC -fopenmp -pthread
cu_compile_flags := -std=$(stdcpp) -w -g -O0 -m64 $(cuda_arch) -Xcompiler "$(cpp_compile_flags)"
link_flags := -pthread -fopenmp -Wl,-rpath='$$ORIGIN'

cpp_compile_flags += $(include_paths)
cu_compile_flags += $(include_paths)
link_flags += $(library_paths) $(link_librarys) $(run_paths)

# If a header changes, these generated rules recompile the dependent .cpp or .cu files automatically
ifneq ($(MAKECMDGOALS), clean)
-include $(cpp_mk) $(cu_mk)
endif

$(name) : $(workdir)/$(name)

all : $(name)

run : $(name)
	@cd $(workdir) && ./$(name) $(run_args)

$(workdir)/$(name) : $(cpp_objs) $(cu_objs)
	@echo Link $@
	@mkdir -p $(dir $@)
	@$(cc) $^ -o $@ $(link_flags)

$(objdir)/%.cpp.o : $(srcdir)/%.cpp
	@echo Compile CXX $<
	@mkdir -p $(dir $@)
	@$(cc) -c $< -o $@ $(cpp_compile_flags)

$(objdir)/%.cu.o : $(srcdir)/%.cu
	@echo Compile CUDA $<
	@mkdir -p $(dir $@)
	@$(nvcc) -c $< -o $@ $(cu_compile_flags)

# Generate dependency rules for .cpp files (.cpp.mk)
$(objdir)/%.cpp.mk : $(srcdir)/%.cpp
	@echo Compile depends C++ $<
	@mkdir -p $(dir $@)
	@$(cc) -M $< -MF $@ -MT $(@:.cpp.mk=.cpp.o) $(cpp_compile_flags)

# Generate dependency rules for .cu files (.cu.mk)
$(objdir)/%.cu.mk : $(srcdir)/%.cu
	@echo Compile depends CUDA $<
	@mkdir -p $(dir $@)
	@$(nvcc) -M $< -MF $@ -MT $(@:.cu.mk=.cu.o) $(cu_compile_flags)

# Clean target
clean :
	@rm -rf $(objdir) $(workdir)/$(name) $(workdir)/*.trtmodel

# Declare targets that are not files
.PHONY : clean run $(name)

# Export the library paths so the program can find its shared libraries at run time
export LD_LIBRARY_PATH:=$(library_path_export)
```
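The variable definitions in this Makefile are pure string transformations. As a rough model, written in C++ and not part of the build, these hypothetical helpers reproduce what the `subst`, `foreach` and substitution-reference expressions produce. This can help when checking what a given path expands to.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Models $(subst $(empty) $(empty),:,$(library_paths)): "a b c" => "a:b:c".
std::string join_with_colon(const std::vector<std::string>& paths) {
    std::string out;
    for (std::size_t i = 0; i < paths.size(); ++i) {
        if (i > 0) out += ':';
        out += paths[i];
    }
    return out;
}

// Models $(foreach item,$(list),PREFIX$(item)), e.g. PREFIX = -I, -L or -l.
std::vector<std::string> prefix_each(const std::string& prefix,
                                     const std::vector<std::string>& items) {
    std::vector<std::string> out;
    for (const std::string& item : items) out.push_back(prefix + item);
    return out;
}

// Models $(cpp_srcs:.cpp=.cpp.o) followed by $(cpp_objs:$(srcdir)/%=$(objdir)/%).
std::string object_path(const std::string& src, const std::string& srcdir,
                        const std::string& objdir) {
    std::string out = src;
    const std::string ext = ".cpp";
    // Suffix substitution: only words ending in .cpp become .cpp.o
    if (out.size() >= ext.size() &&
        out.compare(out.size() - ext.size(), ext.size(), ext) == 0) {
        out += ".o";
    }
    // Pattern substitution: a leading "srcdir/" becomes "objdir/"
    const std::string prefix = srcdir + "/";
    if (out.compare(0, prefix.size(), prefix) == 0) {
        out = objdir + "/" + out.substr(prefix.size());
    }
    return out;
}
```

For example, with library_paths = a b c, library_path_export becomes a:b:c and the prefixed library_paths becomes -La -Lb -Lc. A source file src/app/main.cpp maps to objs/app/main.cpp.o.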


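Key takeaways:

1. Use createNetworkV2 with the flag set to 1 (explicit batch). createNetwork is deprecated, and implicit batch is officially discouraged. This choice determines whether inference calls enqueue (implicit batch) or enqueueV2 (explicit batch).

2. Release the builder, config and other TensorRT objects with ptr->destroy() when you are done with them; otherwise memory leaks. Since TensorRT 8.0, destroy() is deprecated and plain delete is preferred.

3. markOutput marks a tensor as a model output: each call adds one output, just as each addInput call adds one input.

4. The workspace size is the amount of scratch memory TensorRT may use. Layers that need extra temporary storage do not allocate it themselves; to allow memory reuse, they request it from TensorRT's workspace.

5. Always remember: a saved engine only works with the TensorRT version and the device it was built with, and it is only guaranteed to be optimal in that configuration. Running it on a different device sometimes works, but it will not be optimal and is not recommended.

6. bindings is TensorRT's description of the input and output tensors: bindings = input tensors + output tensors. For example, with input a and outputs b, c, d, bindings = [a, b, c, d], so bindings[0] = a and bindings[2] = c.

7. enqueueV2 runs inference asynchronously by adding the work to the stream's queue. The bindings passed to it are tensor pointers, and they must be device pointers.

8. createExecutionContext can be called multiple times, so a single engine can have several execution contexts.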
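The literal 1 in takeaway 1 is a bit mask, not a count. The sketch below uses a hypothetical stand-in for TensorRT 8.x's nvinfer1::NetworkDefinitionCreationFlag, whose kEXPLICIT_BATCH enumerator has the value 0. It shows why `1U << kEXPLICIT_BATCH`, the form used in NVIDIA's samples, equals 1. The helper names are illustrative only.

```cpp
#include <cstdint>

// Hypothetical stand-in mirroring nvinfer1::NetworkDefinitionCreationFlag (TensorRT 8.x).
enum class NetworkDefinitionCreationFlag : std::int32_t {
    kEXPLICIT_BATCH = 0,
};

// createNetworkV2 takes a bit mask: flag N corresponds to bit N.
constexpr std::uint32_t to_mask(NetworkDefinitionCreationFlag flag) {
    return 1U << static_cast<std::uint32_t>(flag);
}

constexpr std::uint32_t kExplicitBatchMask = to_mask(NetworkDefinitionCreationFlag::kEXPLICIT_BATCH);
static_assert(kExplicitBatchMask == 1U, "the explicit-batch mask is the literal 1");

// True when a flags value passed to createNetworkV2 requests explicit batch.
constexpr bool uses_explicit_batch(std::uint32_t flags) {
    return (flags & kExplicitBatchMask) != 0;
}
```

Writing the shifted expression instead of a bare 1 makes the intent explicit and keeps working if more flags are OR-ed in.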
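The bindings layout in takeaway 6 can be modeled without TensorRT. The helpers below are hypothetical and only mirror the order the takeaway describes: inputs first, then outputs. With a real TensorRT 8.x engine, ICudaEngine::getBindingIndex(name) returns the actual index and is safer than assuming the order.

```cpp
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Binding order as described above: all inputs, then all outputs.
std::vector<std::string> binding_names(const std::vector<std::string>& inputs,
                                       const std::vector<std::string>& outputs) {
    std::vector<std::string> names = inputs;
    names.insert(names.end(), outputs.begin(), outputs.end());
    return names;
}

// Index of a tensor in the bindings array, or -1 if it is not bound.
int binding_index(const std::vector<std::string>& names, const std::string& name) {
    for (std::size_t i = 0; i < names.size(); ++i) {
        if (names[i] == name) return static_cast<int>(i);
    }
    return -1;
}

// Builds the void* array handed to enqueueV2: slot i holds the device pointer for names[i].
std::vector<void*> make_bindings(const std::vector<std::string>& names,
                                 const std::vector<std::pair<std::string, void*>>& buffers) {
    std::vector<void*> bindings(names.size(), nullptr);
    for (const auto& buffer : buffers) {
        const int index = binding_index(names, buffer.first);
        if (index >= 0) bindings[static_cast<std::size_t>(index)] = buffer.second;
    }
    return bindings;
}
```

With input a and outputs b, c, d, binding_index returns 2 for c, matching the bindings[2] = c example in takeaway 6.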

————————————————

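Copyright notice: this is an original article by CSDN blogger 「洪流之源」, licensed under CC 4.0 BY-SA. When reposting, include a link to the original source and this notice.

Original link: https://blog.csdn.net/weicao1990/article/details/125034572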

边栏推荐

- npm Warn config global `--global`, `--local` are deprecated. Use `--location=global` instead

- Rce (no echo)

- [advanced data mining technology] Introduction to advanced data mining technology

- [crawler knowledge] better than lxml and BS4? Use of parser

- Multiplication and addition of univariate polynomials

- [install PG]

- Baidu classic interview question - determine prime (how to optimize?)

- Two methods of how to export asynchronous data

- Scientific computing toolkit SciPy image processing

- Es+redis+mysql, the high availability architecture design is awesome! (supreme Collection Edition)

猜你喜欢

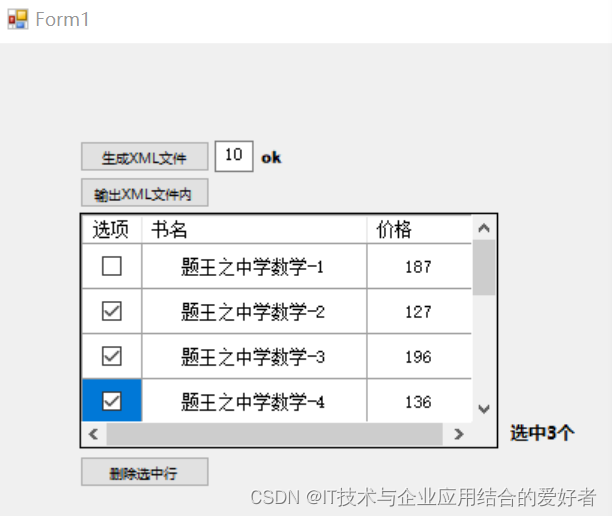

C WinForm actual operation XML code, including the demonstration of creating, saving, querying and deleting forms

npm Warn config global `--global`, `--local` are deprecated. Use `--location=global` instead

CAD sets hyperlinks to entities (WEB version)

Preview and save pictures using uni app

Summary of yarn capacity scheduler

Use of cache in C #

![[feature transformation] feature transformation is to ensure small information loss but high-quality prediction results.](/img/ad/6f5d97caa3f5163197ba435276a719.png)

[feature transformation] feature transformation is to ensure small information loss but high-quality prediction results.

MySQL - multi table query - seven join implementations, set operations, multi table query exercises

Lecun proposed that mask strategy can also be applied to twin networks based on vit for self supervised learning!

Baidu PaddlePaddle easydl helps improve the inspection efficiency of high-altitude photovoltaic power stations by 98%

随机推荐

250 million, Banan District perception system data collection, background analysis, Xueliang engineering network and operation and maintenance service project: Chinatelecom won the bid

Smarter! Airiot accelerates the upgrading of energy conservation and emission reduction in the coal industry

High soft course summary

Five digital transformation strategies of B2B Enterprises

Problems with SQLite compare comparison tables

Lecun proposed that mask strategy can also be applied to twin networks based on vit for self supervised learning!

[development tutorial 6] crazy shell arm function mobile phone - interruption experiment tutorial

[Matplotlib drawing]

How about Urumqi Shenwan Hongyuan securities account opening? Is it safe?

Bring new people's experience

Baidu interview question - judge whether a positive integer is to the power of K of 2

90% of people don't know the most underestimated function of postman!

[jzof] 05 replace spaces

Shenzhen Merchants Securities account opening? Is it safe to open a mobile account?

ERROR 2003 (HY000): Can‘t connect to MySQL server on ‘localhost:3306‘ (10061)

怎么在中金证券购买新课理财产品?收益百分之6

[shallow copy and deep copy], [heap and stack], [basic type and reference type]

whistle ERR_ CERT_ AUTHORITY_ INVALID

How to use named slots

[crawler knowledge] better than lxml and BS4? Use of parser