[Crawler knowledge] Better than lxml and bs4? Using the parsel parser

2022-07-24 21:16:00 【Elder brother Xiancao】

Text extraction

In a crawler, a very important step is extracting the required text from the page source. There are many ways to do this: for example, you can use Python's built-in string functions or regular expressions. If the data is structured, you can also use a parser such as json.
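The three general-purpose approaches mentioned above can be sketched with the standard library alone. The sample strings and variable names here are made up for illustration and are not taken from any real page.

```python
import json
import re

# Hypothetical inputs, invented for this sketch
raw = '<p id="price">Price: 42 USD</p>'
payload = '{"item": {"name": "book", "price": 42}}'

# 1. Built-in string methods: slice between two known markers
start = raw.index(">") + 1
end = raw.index("</p>")
text = raw[start:end]

# 2. Regular expression: capture the number and the currency code
match = re.search(r"Price: (\d+) (\w+)", raw)
amount, currency = int(match.group(1)), match.group(2)

# 3. Structured data: parse JSON and walk the resulting dict
price = json.loads(payload)["item"]["price"]

print(text, amount, currency, price)
```

All three work, but the first two depend on the exact shape of the markup, which is why a dedicated parser is usually the better choice for HTML.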

Of course, for data in a format such as HTML, general-purpose text extraction is cumbersome and inefficient. Extracting the data directly with an XML/HTML parser is much easier. Common parsers include lxml and beautifulsoup4 (bs4).

We compared the strengths and weaknesses of lxml and bs4 in earlier articles. In a nutshell, lxml is the better fit for XPath, and bs4 is the better fit for CSS selectors. You can use either one, and there is no need to learn both: if you prefer XPath, consider lxml; if you prefer CSS selectors, consider bs4.

At this point someone may ask: "Only children make choices; I want it all! Why can't I use XPath and CSS selectors at the same time? Wouldn't the Selector in scrapy be a better choice, for example?"

That is a fair point. In an earlier article comparing bs4 with scrapy's Selector, I found that Selector really does work well, yet not many people use it. Why is that?

One possible reason is that many people do not want to install scrapy just for its parser. That brings us to the topic of this article: the parser in scrapy can be installed and used on its own. It lives in the parsel package, so if you do not want to install scrapy, you can get the parser by itself with pip install parsel.

Using parsel

Preparing the text

To use a text parser, we first need some text. Here is a simple piece of HTML that serves as the text to parse throughout this article. In the code that follows, it is assumed to be stored in the html variable.

<!DOCTYPE html>

<html>

<head>

<meta charset="UTF-8">

<title> Test documentation </title>

</head>

<body>

<div class="wrapper">

<div class="inner">

<span class="content"> This is the content of the first part </span>

<img src="http://localhost/1.jpg" alt=" Figure 1 ">

</div>

<div class="inner">

<span class="content"> This is the content of the second part </span>

<img src="http://localhost/2.jpg" alt=" Figure 2 ">

</div>

<div class="inner">

<span class="content"> This is the content of the third part </span>

<img src="http://localhost/3.jpg" alt=" Figure 3 ">

</div>

<div class="inner">

<span class="content"> This is the fourth part </span>

<img src="http://localhost/4.jpg" alt=" Figure 4 ">

</div>

<div class="inner">

<span class="content"> This is the content of the fifth part </span>

<img src="http://localhost/5.jpg" alt=" Figure 5 ">

</div>

</div>

<br />

<br />

<input type="button" id="confirm" value=" I've read it all " />

<br />

<br />

<a class="index" href="http://localhost/index"> Click to enter the home page </a>

</body>

</html>

Using XPath

Parsing with XPath through parsel's Selector is easy. First create a Selector object, then call s.xpath() on it directly. To get only the first result, use get(); to get all results as a list, use extract() (also available as getall()).

from parsel import Selector

# html should hold the HTML text provided above; it is not repeated here to save space

s = Selector(html)

# Extract the text of every span; the result is a list

r1 = s.xpath("//div[@class='inner']/span/text()").extract()

print(r1)

# Extract the address of every img; the result is a list

r2 = s.xpath("//img/@src").extract()

print(r2)

# Extract the text of the a tag; the result is a single string, not a list

r3 = s.xpath("//a/text()").get()

print(r3)

Using CSS

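As with XPath, you only need to change s.xpath() to s.css() and write the query as a CSS selector. The ::text and ::attr(name) pseudo-elements select text and attribute values.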

from parsel import Selector

# html should hold the HTML text provided above; it is not repeated here to save space

s = Selector(html)

# Extract the text of every span; the result is a list

r1 = s.css(".inner span::text").extract()

print(r1)

# Extract the address of every img; the result is a list

r2 = s.css("img::attr(src)").extract()

print(r2)

# Extract the text of the a tag; the result is a single string, not a list

r3 = s.css("a::text").get()

print(r3)

Using regular expressions

Use s.re() to match all occurrences and s.re_first() to match only the first. Note that regular-expression results do not need extract() or get(): what you get back is already the final result, as plain strings.

from parsel import Selector

# html should hold the HTML text provided above; it is not repeated here to save space

s = Selector(html)

# Extract the text of every span; the result is a list

r1 = s.re("<span.*?>(.*?)</span>")

print(r1)

# Extract the address of every img; the result is a list

r2 = s.re("<img.*?src=\"(.*?)\"")

print(r2)

# Extract the text of the a tag; the result is a single string, not a list

r3 = s.re_first("<a.*?>(.*?)</a>")

print(r3)

Seen this way, regular expressions are far more troublesome than XPath or CSS selectors. That is true, but this is not how they are normally used in practice. They can be combined with XPath and CSS selectors: first extract with XPath or CSS, then match the result with a regular expression.

from parsel import Selector

# html should hold the HTML text provided above; it is not repeated here to save space

s = Selector(html)

# First extract the input element with XPath, then match its value with a regular expression

r = s.xpath("//input").re_first("value=\"(.*?)\"")

print(r)

The benefits of parsel

Why do I think parsel is easier to use than lxml and bs4? I see the following advantages.

First, it is comprehensive: XPath, CSS selectors, and regular-expression matching are all available on the same object, so you can use whichever you like without having to choose.

Second, it is simpler to use. To initialize the selector you just pass in a piece of HTML, and the xpath(), css(), and re() methods can then be called on it directly.

Third, when get() finds no result, it neither raises an error nor silently skips the query; it returns None. This is very useful.

Fourth, it is highly portable. In a program that does not use scrapy, importing Selector from parsel is all you need; in a scrapy program, it goes without saying that the same selector is available directly.

Of course, everyone has their own habits, and you may well feel that lxml or bs4 suits you better; I will not argue with that. In my opinion, however, parsel is very capable and well worth learning and using.

If you want to read more about parsel, I strongly recommend Cui Qingcai's article. Reference: Cui Qingcai - Emerging web page parsing tool parsel

边栏推荐

- Lecun proposed that mask strategy can also be applied to twin networks based on vit for self supervised learning!

- How to prevent weight under Gao Bingfa?

- How to design the order system in e-commerce projects? (supreme Collection Edition)

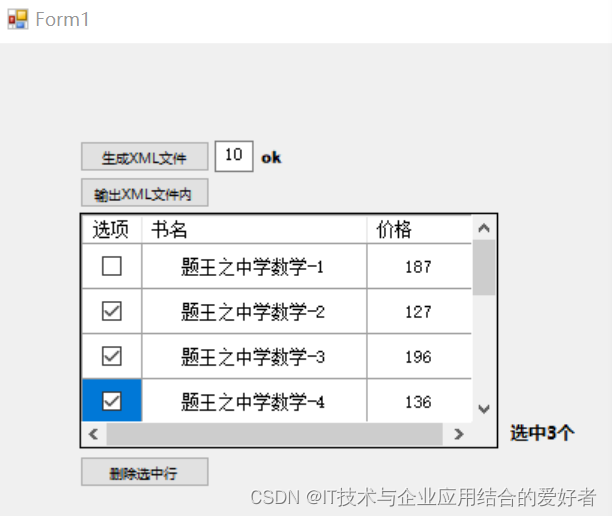

- C WinForm actual operation XML code, including the demonstration of creating, saving, querying and deleting forms

- Evaluation of four operation expressions

- A new UI testing method: visual perception test

- Information system project manager must recite the core examination site (47) project subcontract

- RESNET interpretation and 1 × 1 Introduction to convolution

- Summary of communication with customers

- The relationship between cloud computing and digital transformation has finally been clarified

猜你喜欢

Lenovo Filez helps Zhongshui North achieve safe and efficient file management

Drive subsystem development

How to set appium script startup parameters

![[basic data mining technology] KNN simple clustering](/img/df/f4a3d9b8a636ea968c98d705547be7.png)

[basic data mining technology] KNN simple clustering

whistle ERR_ CERT_ AUTHORITY_ INVALID

C # image template matching and marking

Lecun proposed that mask strategy can also be applied to twin networks based on vit for self supervised learning!

Baidu PaddlePaddle easydl helps improve the inspection efficiency of high-altitude photovoltaic power stations by 98%

Day5: three pointers describe a tree

C WinForm actual operation XML code, including the demonstration of creating, saving, querying and deleting forms

随机推荐

RESNET interpretation and 1 × 1 Introduction to convolution

Lazily doing nucleic acid and making (forging health code) web pages: detained for 5 days

怎么在中金证券购买新课理财产品?收益百分之6

Overloaded & lt; for cv:: point;, But VS2010 cannot find it

APR learning failure problem location and troubleshooting

PC port occupation release

[advanced data mining technology] Introduction to advanced data mining technology

Do you want to verify and use the database in the interface test

MySQL - multi table query - seven join implementations, set operations, multi table query exercises

Shenzhen Merchants Securities account opening? Is it safe to open a mobile account?

Nested printing in CAD web pages

Bring new people's experience

Which bank outlet in Zhejiang can buy ETF fund products?

96. Strange tower of Hanoi

OpenGL (1) vertex buffer

[feature construction] construction method of features

Selenium test page content download function

Codeforces Round #808 (Div. 2)(A~D)

Spark related FAQ summary

High soft course summary