torchvision.models._utils.IntermediateLayerGetter tutorial

2022-06-27 09:51:00 【jjw_ zyfx】

Without further ado, here is an example:

import torch
import torchvision

m = torchvision.models.resnet18(pretrained=True)
print(m)

new_m = torchvision.models._utils.IntermediateLayerGetter(
    m, {'layer1': 'feat1', 'layer3': 'feat2'})
out = new_m(torch.rand(1, 3, 224, 224))
print([(k, v.shape) for k, v in out.items()])

# The last line of the output is:
# [('feat1', torch.Size([1, 64, 56, 56])), ('feat2', torch.Size([1, 256, 14, 14]))]

# The full printout is long, so the explanation comes first.
# The first argument of IntermediateLayerGetter is the model, here resnet18.
# The second argument is a dictionary. In 'layer1': 'feat1', the key layer1 names a
# direct child module of resnet18 whose output you want returned, and the value feat1
# is the name under which that output appears in the returned dictionary.
# 'layer3': 'feat2' works the same way: the output of layer3 is returned under the name feat2.
# The new module runs resnet18 from its first layer up to and including layer3, the last
# requested layer. Everything after layer3 (layer4, avgpool, fc) is dropped.
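The mechanism described above can be illustrated with a small stand-in class. This is a simplified sketch, not the torchvision source: `TinyNet` and `SimpleLayerGetter` are hypothetical names made up for this example, and the sketch skips the name validation that the real class performs. It assumes, as the real class does, that the model's child modules are registered in the order in which they are executed.

```python
from collections import OrderedDict

import torch
from torch import nn


class TinyNet(nn.Module):
    """Hypothetical toy model; its children are registered in execution order."""

    def __init__(self):
        super().__init__()
        self.stem = nn.Conv2d(3, 8, kernel_size=3, padding=1)
        self.layer1 = nn.Conv2d(8, 16, kernel_size=3, stride=2, padding=1)
        self.layer2 = nn.Conv2d(16, 32, kernel_size=3, stride=2, padding=1)
        self.head = nn.AdaptiveAvgPool2d(1)

    def forward(self, x):
        return self.head(self.layer2(self.layer1(self.stem(x))))


class SimpleLayerGetter(nn.ModuleDict):
    """Simplified stand-in for IntermediateLayerGetter (illustration only)."""

    def __init__(self, model, return_layers):
        remaining = set(return_layers)
        kept = OrderedDict()
        # Copy direct children in registration order and stop as soon as
        # the last requested layer has been copied.
        for name, module in model.named_children():
            kept[name] = module
            remaining.discard(name)
            if not remaining:
                break
        super().__init__(kept)
        self.return_layers = dict(return_layers)

    def forward(self, x):
        out = OrderedDict()
        # Run the kept children one after another and save requested outputs
        # under the names given as dictionary values.
        for name, module in self.items():
            x = module(x)
            if name in self.return_layers:
                out[self.return_layers[name]] = x
        return out


getter = SimpleLayerGetter(TinyNet(), {"stem": "feat1", "layer1": "feat2"})
features = getter(torch.rand(1, 3, 32, 32))
shapes = {k: tuple(v.shape) for k, v in features.items()}
print(list(getter.keys()))  # children that were kept
print(shapes)               # output name -> tensor shape
```

Because the wrapper simply calls the kept children in sequence, this approach only fits models whose forward pass is a plain chain of their direct children up to the requested layer.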

Complete output:

ResNet(
  (conv1): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)
  (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (relu): ReLU(inplace=True)
  (maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)
  (layer1): Sequential(
    (0): BasicBlock(
      (conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
    (1): BasicBlock(
      (conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (layer2): Sequential(
    (0): BasicBlock(
      (conv1): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (downsample): Sequential(
        (0): Conv2d(64, 128, kernel_size=(1, 1), stride=(2, 2), bias=False)
        (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
    )
    (1): BasicBlock(
      (conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (layer3): Sequential(
    (0): BasicBlock(
      (conv1): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (downsample): Sequential(
        (0): Conv2d(128, 256, kernel_size=(1, 1), stride=(2, 2), bias=False)
        (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
    )
    (1): BasicBlock(
      (conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (layer4): Sequential(
    (0): BasicBlock(
      (conv1): Conv2d(256, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (downsample): Sequential(
        (0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)
        (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
    )
    (1): BasicBlock(
      (conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (avgpool): AdaptiveAvgPool2d(output_size=(1, 1))
  (fc): Linear(in_features=512, out_features=1000, bias=True)
)

[('feat1', torch.Size([1, 64, 56, 56])), ('feat2', torch.Size([1, 256, 14, 14]))]

边栏推荐

- 产品力对标海豹/Model 3,长安深蓝SL03预售17.98万起

- Scientists develop two new methods to provide stronger security protection for intelligent devices

- 初步认识pytorch

- es 根据索引名称和索引字段更新值

- std::memory_ order_ seq_ CST memory order

- Tdengine invitation: be a superhero who uses technology to change the world and become TD hero

- R language plot visualization: visualize the normalized histograms of multiple data sets, add density curve KDE to the histograms, set different histograms to use different bin sizes, and add edge whi

- 10 常见网站安全攻击手段及防御方法

- 队列,双向队列,及其运用

- Take you to play with the camera module

猜你喜欢

Semi-supervised Learning入门学习——Π-Model、Temporal Ensembling、Mean Teacher简介

E+h secondary meter repair pH transmitter secondary display repair cpm253-mr0005

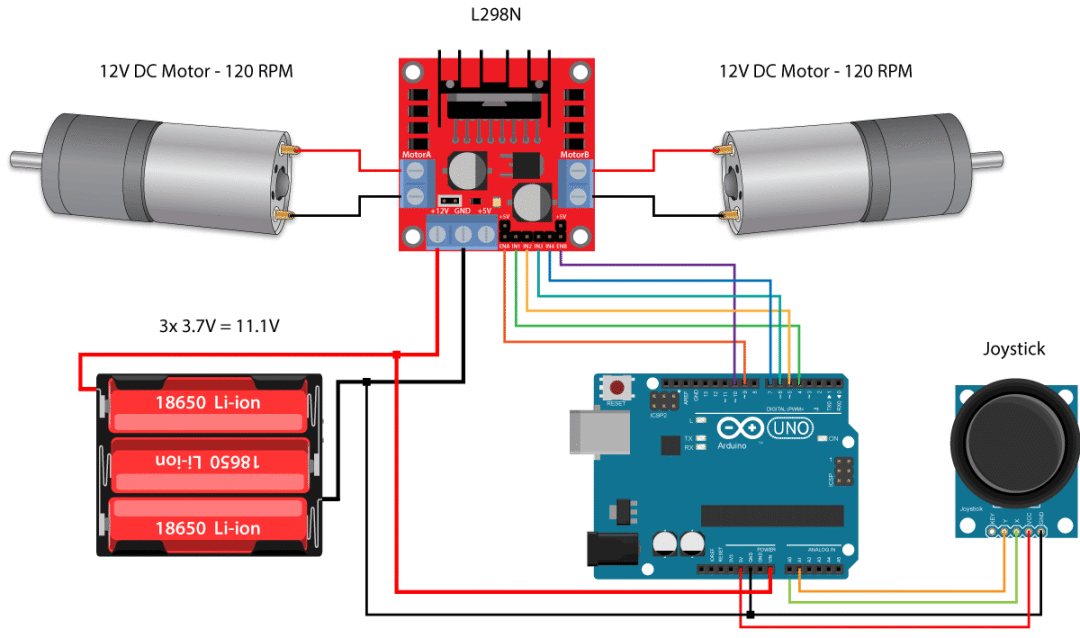

Principle and application of the most complete H-bridge motor drive module L298N

隐私计算FATE-离线预测

初步认识pytorch

C# Any()和AII()方法

Tdengine invitation: be a superhero who uses technology to change the world and become TD hero

ucore lab3

ucore lab4

反编译jar包,修改后重新编译为jar包

随机推荐

std::memory_ order_ seq_ CST memory order

Some exercises about binary tree

Use aspese slides to convert PPT to PDF

强化学习中好奇心机制

Google browser chropath plug-in

Reorganize common shell scripts for operation and maintenance frontline work

Use aspese Cells convert Excel to PDF

Location and solution of network connection failure of primary online mobile terminal Report

Explain the imaging principle of various optical instruments in detail

C# Any()和AII()方法

6月23日《Rust唠嗑室》第三期B站视频地址

R語言plotly可視化:可視化多個數據集歸一化直方圖(historgram)並在直方圖中添加密度曲線kde、設置不同的直方圖使用不同的分箱大小(bin size)、在直方圖的底部邊緣添加邊緣軸須圖

BufferedWriter 和 BufferedReader 的使用

【生动理解】深度学习中常用的各项评价指标含义TP、FP、TN、FN、IoU、Accuracy

This application failed to start because it could not find or load the QT platform plugin

When does the mobile phone video roll off?

Quick start CherryPy (1)

The R language uses the preprocess function of the caret package for data preprocessing: Center all data columns (subtract the average value from each data column), and set the method parameter to cen

js 所有的网络请求方式

unity--newtonsoft.json解析