
Sealos packages and deploys the KubeSphere container platform

2022-07-24 06:52:00 【freesharer】


Sealos is a cloud operating system built on a Kubernetes kernel. It can freely combine all kinds of distributed applications, and it makes it easy to customize, quickly package, and deploy any highly available distributed application on Kubernetes.

KubeSphere deployment prerequisites:

- Kubernetes version: 1.20.x, 1.21.x, 1.22.x, or 1.23.x (experimental);

- The Kubernetes cluster already has a default StorageClass.
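Both prerequisites can be checked from the command line before installing. The following is a minimal sketch and is not part of sealos or KubeSphere. It makes these assumptions:

- The function names are hypothetical.
- The version ranges are copied from the list above rather than taken from the KubeSphere documentation.
- kubectl already points at the target cluster.

```shell
# Hypothetical helper: classify a Kubernetes version against the
# KubeSphere v3.3.0 support list quoted above.
ks_k8s_support() {
  local v="${1#v}"                # accept both "v1.22.11" and "1.22.11"
  case "$v" in
    1.20.*|1.21.*|1.22.*) echo "supported" ;;
    1.23.*)               echo "experimental" ;;
    *)                    echo "unsupported" ;;
  esac
}

# Hypothetical helper: reads `kubectl get storageclass` output on stdin and
# succeeds only if one StorageClass is marked "(default)".
has_default_storageclass() {
  grep -q '(default)'
}

# Example usage on a cluster (not executed here):
#   ks_k8s_support "$(kubectl get nodes -o jsonpath='{.items[0].status.nodeInfo.kubeletVersion}')"
#   kubectl get storageclass | has_default_storageclass && echo "default StorageClass found"
```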

KubeSphere application packaging process:

- Download the KubeSphere image list

- Download the KubeSphere deployment YAML files

- Create a Dockerfile and build the image with sealos

Building the image in an internet-connected environment

1. Prepare an internet-connected machine (Ubuntu 22.04 LTS in this example) and install the sealos tool:

wget -c https://sealyun-home.oss-cn-beijing.aliyuncs.com/sealos-4.0/latest/sealos-amd64 -O sealos && \
  chmod +x sealos && mv sealos /usr/bin

2. Create the build directory for the KubeSphere application image (you can refer to the official sealos examples):

mkdir -p /root/kubesphere/v3.3.0/

cd /root/kubesphere/v3.3.0/

mkdir -p images/shim

mkdir manifests

3. Download the KubeSphere image list into the images/shim/ directory:

wget https://github.com/kubesphere/ks-installer/releases/download/v3.3.0/images-list.txt -P images/shim/

Remove the comment lines from the image list. The list covers the full set of KubeSphere images, 153 in total, so the subsequent build will take a long time.

cat images/shim/images-list.txt |grep -v "^#" > images/shim/KubesphereImageList

rm -rf images/shim/images-list.txt
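The grep above only drops lines that start with #. A hand-edited list may also contain blank lines or Windows line endings, and those would be treated as image names. Below is a slightly more defensive variant, offered as a sketch. The function name is hypothetical, and the count it prints is whatever the file contains (the article reports 153 for v3.3.0).

```shell
# Hypothetical helper: write a cleaned copy of an image list and print
# how many image references remain.
clean_image_list() {
  local src="$1" dst="$2"
  # tr strips carriage returns; grep drops comment lines and blank lines
  tr -d '\r' < "$src" | grep -v -e '^[[:space:]]*#' -e '^[[:space:]]*$' > "$dst"
  wc -l < "$dst" | tr -d ' '
}

# Example usage (not executed here):
#   clean_image_list images/shim/images-list.txt images/shim/KubesphereImageList
```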

4. Download the KubeSphere YAML files into the manifests directory:

wget https://raw.githubusercontent.com/kubesphere/ks-installer/v3.3.0/deploy/kubesphere-installer.yaml -P manifests/

wget https://raw.githubusercontent.com/kubesphere/ks-installer/v3.3.0/deploy/cluster-configuration.yaml -P manifests/

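5. Create the Dockerfile. The registry directory it copies does not exist yet; it is generated by sealos build from the image list under images/shim. Each CMD entry is run as a separate command when the image is deployed: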

cat > Dockerfile <<EOF
FROM scratch
COPY manifests ./manifests
COPY registry ./registry
CMD ["kubectl apply -f manifests/kubesphere-installer.yaml","kubectl apply -f manifests/cluster-configuration.yaml"]
EOF

The final directory structure is as follows:

~/kubesphere/v3.3.0# tree
.
├── Dockerfile
├── images
│   └── shim
│       └── KubesphereImageList
└── manifests
    ├── cluster-configuration.yaml
    └── kubesphere-installer.yaml

3 directories, 4 files
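Before starting the long-running build, it may be worth confirming that the build directory matches the tree above. The sketch below uses a hypothetical function name. It only checks that the four files shown exist and are non-empty; it does not validate their contents.

```shell
# Hypothetical helper: verify the sealos build context layout.
# Returns non-zero and lists the problems if any expected file is missing.
check_build_context() {
  local dir="${1:-.}" missing=0 f
  for f in Dockerfile \
           images/shim/KubesphereImageList \
           manifests/kubesphere-installer.yaml \
           manifests/cluster-configuration.yaml; do
    if [ ! -s "$dir/$f" ]; then
      echo "missing or empty: $f" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example usage (not executed here):
#   check_build_context /root/kubesphere/v3.3.0 && sealos build -f Dockerfile -t docker.io/willdockerhub/kubesphere:v3.3.0 .
```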

6. Build the KubeSphere application image with sealos:

sealos build -f Dockerfile -t docker.io/willdockerhub/kubesphere:v3.3.0 .

View the built image:

~# sealos images
REPOSITORY                           TAG      IMAGE ID       CREATED        SIZE
docker.io/willdockerhub/kubesphere   v3.3.0   831b87502b54   18 hours ago   11.5 GB

Because the image is so large, pushing it to Docker Hub failed:

sealos login docker.io -u xxx -p xxx

sealos push docker.io/willdockerhub/kubesphere:v3.3.0

Instead, I pushed it to an Alibaba Cloud image registry. This is time-consuming, so proceed with care; it is better to use the image locally than to push it to a remote registry.

sealos login registry.cn-shenzhen.aliyuncs.com -u xxx -p xxx

sealos tag docker.io/willdockerhub/kubesphere:v3.3.0 registry.cn-shenzhen.aliyuncs.com/cnmirror/kubesphere:v3.3.0

sealos push registry.cn-shenzhen.aliyuncs.com/cnmirror/kubesphere:v3.3.0

Log in to the Alibaba Cloud image registry console to check the pushed image.

7. Save the KubeSphere application image to a tar archive:

sealos save -o kubesphere_v3.3.0.tar registry.cn-shenzhen.aliyuncs.com/cnmirror/kubesphere:v3.3.0

8. Download the other dependencies. The official sealos (labring) Docker Hub account provides many prebuilt application images. If you don't need to customize their configuration parameters, you can pull and use them directly:

sealos pull labring/kubernetes:v1.22.11

sealos pull labring/calico:v3.22.1

sealos pull labring/openebs:v1.9.0

sealos save -o kubernetes_v1.22.11.tar labring/kubernetes:v1.22.11

sealos save -o calico_v3.22.1.tar labring/calico:v3.22.1

sealos save -o openebs_v1.9.0.tar labring/openebs:v1.9.0
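When several application images need to be archived, the pull/save pairs above can be wrapped in a loop. This is a sketch under two assumptions:

- Every image reference carries an explicit tag.
- tar_name and pull_and_save_images are hypothetical helpers. They reproduce the file naming used above: the last path segment, an underscore, then the tag.

```shell
# Hypothetical helper: labring/kubernetes:v1.22.11 -> kubernetes_v1.22.11.tar
# Assumes the reference ends in ":tag".
tar_name() {
  local ref="$1" repo tag
  repo="${ref%:*}"                  # drop the trailing ":tag"
  tag="${ref##*:}"                  # keep only the tag
  echo "${repo##*/}_${tag}.tar"     # last path segment + "_" + tag
}

# Hypothetical helper: pull each image, then save it under tar_name's file name.
pull_and_save_images() {
  local img
  for img in "$@"; do
    sealos pull "$img" || return 1
    sealos save -o "$(tar_name "$img")" "$img" || return 1
  done
}

# Example usage (not executed here):
#   pull_and_save_images labring/kubernetes:v1.22.11 labring/calico:v3.22.1 labring/openebs:v1.9.0
```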

Application image descriptions:

- labring/kubernetes:v1.22.11: the Kubernetes core components

- labring/calico:v3.22.1: the Calico network plugin required by the Kubernetes cluster

- labring/openebs:v1.9.0: KubeSphere depends on persistent storage, so OpenEBS local volumes are used here

9. Copy the image archives above and the sealos binary to the offline environment. If you are deploying in an online environment, you can skip the save step.
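Multi-gigabyte archives (the KubeSphere image alone is 11.5 GB) are worth verifying after the copy. The sketch below uses GNU coreutils sha256sum. The SHA256SUMS file name and the helper names are conventions chosen for this example; sealos does not require them.

```shell
# Hypothetical helper: on the build machine, record checksums of the
# archives and the sealos binary in the given directory.
make_checksums() {
  ( cd "$1" && sha256sum -- *.tar sealos > SHA256SUMS )
}

# Hypothetical helper: on the offline node, verify the copied files.
# Prints FAILED lines and returns non-zero on any mismatch.
verify_checksums() {
  ( cd "$1" && sha256sum --quiet -c SHA256SUMS )
}

# Example usage (not executed here):
#   make_checksums /root/offline      # then copy the directory, SHA256SUMS included
#   verify_checksums /root/offline    # run on the first cluster node
```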

Offline installation of KubeSphere

This example uses 3 master nodes and 1 worker node, all running Ubuntu Server 22.04 LTS with 4 CPU cores, 16 GB of memory, and a 100 GB disk.

Sealos pre-deployment requirements:

- The hostnames of all nodes must be configured in advance and must all be different.

- All of the following operations must be performed on the first node of the cluster; running the deployment from a machine outside the cluster is currently not supported.
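The hostname requirement can be checked before running sealos. This sketch uses a hypothetical helper that reads one hostname per line and fails on duplicates. The usage comment assumes SSH access as root to each node. A node whose name clashes can be renamed with hostnamectl set-hostname.

```shell
# Hypothetical helper: fail if any hostname on stdin appears more than once.
check_unique_hostnames() {
  local dups
  dups=$(sort | uniq -d)
  if [ -n "$dups" ]; then
    echo "duplicate hostnames: $dups" >&2
    return 1
  fi
}

# Example usage (not executed here):
#   for ip in 192.168.72.50 192.168.72.51 192.168.72.52 192.168.72.53; do
#     ssh root@"$ip" hostname
#   done | check_unique_hostnames
```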

1. Install sealos:

chmod +x sealos && mv sealos /usr/bin

2. Load the image archives:

sealos load -i kubernetes_v1.22.11.tar

sealos load -i kubesphere_v3.3.0.tar

sealos load -i calico_v3.22.1.tar

sealos load -i openebs_v1.9.0.tar
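With several archives, the loads can be done in a single loop. This sketch assumes all archives sit in one directory. load_all_images is a hypothetical helper, and it stops at the first archive that fails to load.

```shell
# Hypothetical helper: run `sealos load -i` for every .tar file in a directory.
load_all_images() {
  local dir="${1:-.}" f
  for f in "$dir"/*.tar; do
    # If the glob matched nothing, "$f" is the literal pattern and does not exist
    [ -e "$f" ] || { echo "no .tar files in $dir" >&2; return 1; }
    sealos load -i "$f" || return 1
  done
}

# Example usage (not executed here):
#   load_all_images /root/offline
```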

3. Run the following command to deploy everything in one step.

Note: each application component can also be deployed separately. For example, once the Kubernetes core components are deployed, you can run sealos run labring/calico:v3.22.1 and then deploy the remaining applications one by one.

sealos run \
  --masters 192.168.72.50,192.168.72.51,192.168.72.52 \
  --nodes 192.168.72.53 -p 123456 \
  labring/kubernetes:v1.22.11 \
  labring/calico:v3.22.1 \
  labring/openebs:v1.9.0 \
  registry.cn-shenzhen.aliyuncs.com/cnmirror/kubesphere:v3.3.0
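The component-by-component alternative from the note can be sketched as follows. It assumes, as the note suggests, that only the first sealos run needs the node list and password, and that later images are applied to the existing cluster with a plain sealos run IMAGE. deploy_stepwise and its argument order are hypothetical.

```shell
# Hypothetical helper: create the cluster with the core image first, then
# apply each remaining application image one at a time.
# Arguments: MASTERS NODES PASSWORD CORE_IMAGE [APP_IMAGE...]
deploy_stepwise() {
  local masters="$1" nodes="$2" password="$3"
  shift 3
  local core="$1"
  shift
  sealos run --masters "$masters" --nodes "$nodes" -p "$password" "$core" || return 1
  local app
  for app in "$@"; do
    sealos run "$app" || return 1
  done
}

# Example usage (not executed here):
#   deploy_stepwise 192.168.72.50,192.168.72.51,192.168.72.52 192.168.72.53 123456 \
#     labring/kubernetes:v1.22.11 labring/calico:v3.22.1 labring/openebs:v1.9.0 \
#     registry.cn-shenzhen.aliyuncs.com/cnmirror/kubesphere:v3.3.0
```

Deploying step by step makes it easier to see which component failed, at the cost of typing more commands.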

View the deployment log. The whole deployment takes roughly 15 to 17 minutes (from 10:58 to 11:14 in the log below).

~# sealos run \
  --masters 192.168.72.50,192.168.72.51,192.168.72.52 \
  --nodes 192.168.72.53 -p 123456 \
  labring/kubernetes:v1.22.11 \
  labring/calico:v3.22.1 \
  labring/openebs:v1.9.0 \
  registry.cn-shenzhen.aliyuncs.com/cnmirror/kubesphere:v3.3.0
2022-07-23 10:58:02 [INFO] Start to create a new cluster: master [192.168.72.50 192.168.72.51 192.168.72.52], worker [192.168.72.53]
2022-07-23 10:58:02 [INFO] Executing pipeline Check in CreateProcessor.
2022-07-23 10:58:02 [INFO] checker:hostname [192.168.72.50:22 192.168.72.51:22 192.168.72.52:22 192.168.72.53:22]
2022-07-23 10:58:05 [INFO] checker:timeSync [192.168.72.50:22 192.168.72.51:22 192.168.72.52:22 192.168.72.53:22]
2022-07-23 10:58:06 [INFO] Executing pipeline PreProcess in CreateProcessor.
Resolving "labring/kubernetes" using unqualified-search registries (/etc/containers/registries.conf)
Trying to pull docker.io/labring/kubernetes:v1.22.11...
Getting image source signatures
Copying blob 030fcef18dcb [--------------------------------------] 0.0b / 382.3MiB (skipped: 0.0b = 0.00%)
......
......
2022-07-23 11:14:44 [INFO] guest cmd is kubectl apply -f manifests/kubesphere-installer.yaml
customresourcedefinition.apiextensions.k8s.io/clusterconfigurations.installer.kubesphere.io created
namespace/kubesphere-system created
serviceaccount/ks-installer created
clusterrole.rbac.authorization.k8s.io/ks-installer created
clusterrolebinding.rbac.authorization.k8s.io/ks-installer created
deployment.apps/ks-installer created
2022-07-23 11:14:46 [INFO] guest cmd is kubectl apply -f manifests/cluster-configuration.yaml
clusterconfiguration.installer.kubesphere.io/ks-installer created
2022-07-23 11:14:48 [INFO] succeeded in creating a new cluster, enjoy it!


Website: https://www.sealos.io/
Address: github.com/labring/sealos

4. Check the status of the Kubernetes cluster:

~# kubectl get nodes -o wide
NAME     STATUS   ROLES                  AGE     VERSION    INTERNAL-IP     EXTERNAL-IP   OS-IMAGE           KERNEL-VERSION      CONTAINER-RUNTIME
node01   Ready    control-plane,master   6m19s   v1.22.11   192.168.72.50   <none>        Ubuntu 22.04 LTS   5.15.0-41-generic   containerd://1.6.2
node02   Ready    control-plane,master   5m12s   v1.22.11   192.168.72.51   <none>        Ubuntu 22.04 LTS   5.15.0-41-generic   containerd://1.6.2
node03   Ready    control-plane,master   4m16s   v1.22.11   192.168.72.52   <none>        Ubuntu 22.04 LTS   5.15.0-41-generic   containerd://1.6.2
node04   Ready    <none>                 3m59s   v1.22.11   192.168.72.53   <none>        Ubuntu 22.04 LTS   5.15.0-41-generic   containerd://1.6.2
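Rather than reading the table by eye, the STATUS column can be checked in a script. This sketch uses a hypothetical helper that expects kubectl get nodes --no-headers output on stdin. It requires STATUS to be exactly Ready, so it also flags nodes reported as Ready,SchedulingDisabled.

```shell
# Hypothetical helper: fail if any node's STATUS (field 2) is not "Ready".
all_nodes_ready() {
  awk '$2 != "Ready" { print "not ready: " $1; bad = 1 } END { exit bad }'
}

# Example usage (not executed here):
#   kubectl get nodes --no-headers | all_nodes_ready && echo "all nodes Ready"
```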

5. Check the status of the KubeSphere pods:

~# kubectl get pods -A
NAMESPACE                     NAME                                              READY  STATUS    RESTARTS     AGE
calico-system                 calico-kube-controllers-57fbd7bd59-7chg8          1/1    Running   0            11m
calico-system                 calico-node-d22gx                                 1/1    Running   0            11m
calico-system                 calico-node-pzkqn                                 1/1    Running   0            11m
calico-system                 calico-node-s5blv                                 1/1    Running   0            11m
calico-system                 calico-node-xdb5q                                 1/1    Running   0            11m
calico-system                 calico-typha-d5c88bd64-gj7p6                      1/1    Running   0            11m
calico-system                 calico-typha-d5c88bd64-wgwq7                      1/1    Running   0            11m
kube-system                   coredns-78fcd69978-hhkz5                          1/1    Running   0            13m
kube-system                   coredns-78fcd69978-j5tc9                          1/1    Running   0            13m
kube-system                   etcd-node01                                       1/1    Running   0            14m
kube-system                   etcd-node02                                       1/1    Running   0            12m
kube-system                   etcd-node03                                       1/1    Running   0            11m
kube-system                   kube-apiserver-node01                             1/1    Running   0            13m
kube-system                   kube-apiserver-node02                             1/1    Running   0            12m
kube-system                   kube-apiserver-node03                             1/1    Running   0            11m
kube-system                   kube-controller-manager-node01                    1/1    Running   1 (12m ago)  14m
kube-system                   kube-controller-manager-node02                    1/1    Running   0            12m
kube-system                   kube-controller-manager-node03                    1/1    Running   0            11m
kube-system                   kube-proxy-dfpph                                  1/1    Running   0            11m
kube-system                   kube-proxy-f2spn                                  1/1    Running   0            12m
kube-system                   kube-proxy-fp48n                                  1/1    Running   0            13m
kube-system                   kube-proxy-q6gt7                                  1/1    Running   0            11m
kube-system                   kube-scheduler-node01                             1/1    Running   1 (12m ago)  13m
kube-system                   kube-scheduler-node02                             1/1    Running   0            12m
kube-system                   kube-scheduler-node03                             1/1    Running   0            11m
kube-system                   kube-sealyun-lvscare-node04                       1/1    Running   0            11m
kube-system                   snapshot-controller-0                             1/1    Running   0            8m45s
kubesphere-controls-system    default-http-backend-5bf68ff9b8-lf6p8             1/1    Running   0            7m4s
kubesphere-controls-system    kubectl-admin-6dbcb94855-j6tct                    1/1    Running   0            39s
kubesphere-monitoring-system  alertmanager-main-0                               2/2    Running   0            3m36s
kubesphere-monitoring-system  alertmanager-main-1                               2/2    Running   0            3m36s
kubesphere-monitoring-system  alertmanager-main-2                               2/2    Running   0            3m36s
kubesphere-monitoring-system  kube-state-metrics-7bdc7484cf-qjv2q               3/3    Running   0            3m47s
kubesphere-monitoring-system  node-exporter-2d5qq                               2/2    Running   0            3m50s
kubesphere-monitoring-system  node-exporter-42fsd                               2/2    Running   0            3m50s
kubesphere-monitoring-system  node-exporter-8t5p7                               2/2    Running   0            3m50s
kubesphere-monitoring-system  node-exporter-lhtzb                               2/2    Running   0            3m49s
kubesphere-monitoring-system  notification-manager-deployment-78664576cb-qv8kw  2/2    Running   0            2m44s
kubesphere-monitoring-system  notification-manager-deployment-78664576cb-wxvmc  2/2    Running   0            2m44s
kubesphere-monitoring-system  notification-manager-operator-7d44854f54-9d2vs    2/2    Running   0            3m7s
kubesphere-monitoring-system  prometheus-k8s-0                                  2/2    Running   0            3m43s
kubesphere-monitoring-system  prometheus-k8s-1                                  2/2    Running   0            3m43s
kubesphere-monitoring-system  prometheus-operator-8955bbd98-vx7k2               2/2    Running   0            3m51s
kubesphere-system             ks-apiserver-7f4c99bb7-d8qqk                      1/1    Running   1 (35s ago)  7m4s
kubesphere-system             ks-apiserver-7f4c99bb7-kdcwd                      1/1    Running   0            7m4s
kubesphere-system             ks-apiserver-7f4c99bb7-rgs24                      1/1    Running   0            7m4s
kubesphere-system             ks-console-54bd5bcbc6-92w5x                       1/1    Running   0            7m4s
kubesphere-system             ks-console-54bd5bcbc6-m2f4x                       1/1    Running   0            7m4s
kubesphere-system             ks-console-54bd5bcbc6-zvj5k                       1/1    Running   0            7m4s
kubesphere-system             ks-controller-manager-8f6b985c5-glzfw             1/1    Running   0            7m4s
kubesphere-system             ks-controller-manager-8f6b985c5-l6j5n             1/1    Running   0            7m4s
kubesphere-system             ks-controller-manager-8f6b985c5-zs945             1/1    Running   0            7m4s
kubesphere-system             ks-installer-6976cf49f5-4r94t                     1/1    Running   0            11m
kubesphere-system             redis-d744b7468-c5pqc                             1/1    Running   0            8m35s
openebs                       openebs-localpv-provisioner-5c759b49cf-qrdwz      1/1    Running   0            11m
openebs                       openebs-ndm-cluster-exporter-7868564dcc-l9q4r     1/1    Running   0            11m
openebs                       openebs-ndm-node-exporter-mlgsb                   1/1    Running   0            9m56s
openebs                       openebs-ndm-operator-84f74f957b-cp8tv             1/1    Running   0            11m
tigera-operator               tigera-operator-d499f5c8f-575x2                   1/1    Running   0            11m
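The same check works for pods. This sketch uses a hypothetical helper that reads kubectl get pods -A --no-headers output. It flags any pod that is not Running with all containers ready. Pods in the Completed state are skipped, on the assumption that they belong to finished Jobs.

```shell
# Hypothetical helper: fields are NAMESPACE NAME READY STATUS RESTARTS AGE.
# A RESTARTS value such as "1 (12m ago)" adds extra fields but leaves $3/$4 intact.
all_pods_ready() {
  awk '
    $4 == "Completed" { next }                 # assumed finished Job pods
    {
      split($3, r, "/")                        # READY column, e.g. "2/2"
      if ($4 != "Running" || r[1] != r[2]) { print "not ready: " $1 "/" $2; bad = 1 }
    }
    END { exit bad }'
}

# Example usage (not executed here):
#   kubectl get pods -A --no-headers | all_pods_ready && echo "all pods ready"
```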

Log in to the KubeSphere container platform

By default, the console is exposed through a NodePort service.

Console address: http://192.168.72.50:30880/

Username: admin

Password: P@88w0rd

After logging in, the console looks like this:

KubeSphere has a pluggable design, so additional features can be enabled by modifying its configuration. To enable the DevOps component, for example, edit the ClusterConfiguration YAML and change the devops enabled field to true.
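Instead of editing the YAML interactively, the same change can be applied as a JSON merge patch with kubectl patch. This sketch makes three assumptions:

- The target is the ks-installer ClusterConfiguration created in the log above, in the kubesphere-system namespace.
- The component's switch sits directly at spec.<component>.enabled, as it does for devops.
- Both helper names are hypothetical.

```shell
# Hypothetical helper: build a merge patch that sets spec.<component>.enabled=true.
ks_component_patch() {
  printf '{"spec":{"%s":{"enabled":true}}}' "$1"
}

# Hypothetical helper: apply the patch to the ks-installer ClusterConfiguration.
ks_enable_component() {
  kubectl -n kubesphere-system patch clusterconfiguration ks-installer \
    --type merge -p "$(ks_component_patch "$1")"
}

# Example usage (not executed here):
#   ks_enable_component devops
#   kubectl logs -n kubesphere-system deploy/ks-installer -f   # follow the installer's progress
```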

Once the component is running normally, it looks like this:

边栏推荐

- 【LVGL布局】柔性布局

- You don't know these pits. You really don't dare to use BigDecimal

- 【LVGL(4)】对象的事件及事件冒泡

- Introduction, architecture and principle of kubernetes

- I have seven schemes to realize web real-time message push, seven!

- It's not too much to fight a landlord in idea!

- Special effects - click the mouse and the randomly set text will appear

- JS - numerical processing (rounding, rounding, random numbers, etc.)

- 广度优先搜索(模板使用)

- Several common problems of SQL server synchronization database without public IP across network segments

猜你喜欢

华为专家自述:如何成为优秀的工程师

![[small object velocimeter] only principle, no code](/img/df/b8a94d93d4088ebe8d306945fd9511.png)

[small object velocimeter] only principle, no code

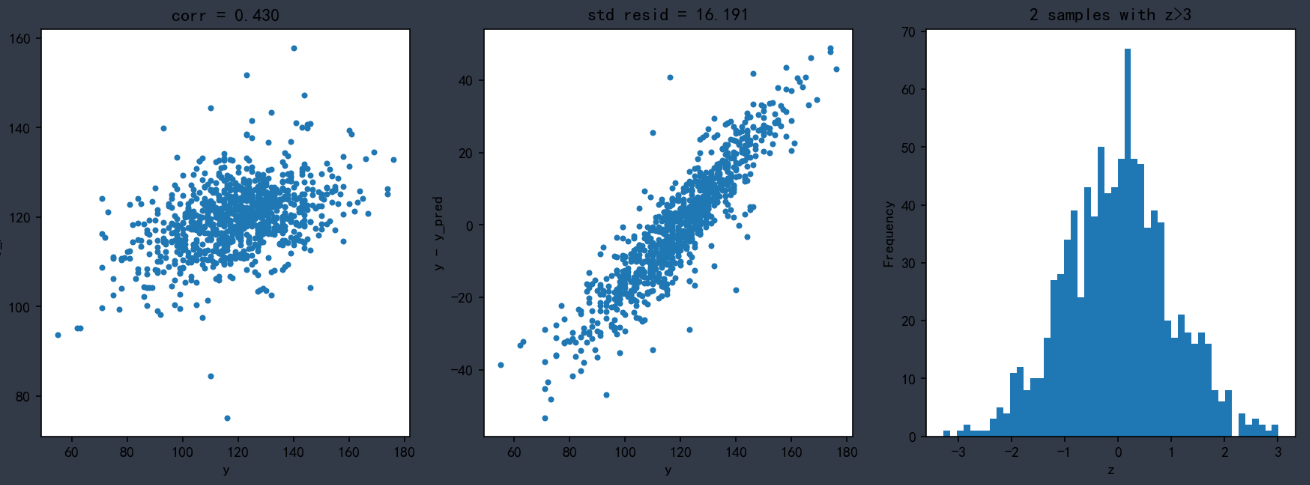

Machine learning case: smoking in pregnant women and fetal health

Solution: exit status 1 and exit status 145 appear when the console uses NVM to control the node version

STM32基于 FatFs R0.14b&SD Card 的MP3音乐播放器(也算是FatFs的简单应用了吧)

Redis distributed cache learning notes

Special effects - click the mouse and the randomly set text will appear

Record the pits encountered in the deserialization of phpserializer tool class

STM32外部中断(寄存器版本)

PyQt5入门——学生管理系统

随机推荐

[lvgl (important)] style attribute API function and its parameters

Detailed explanation of class loader and parental delegation mechanism

随机森林、LGBM基于贝叶斯优化调参

Jenkins CI CD

LM393 电压比较器及其典型电路介绍

[lvgl (5)] label usage

HashSet to array

Redis basic type - combined with set

[lvgl] API functions for setting, changing and deleting styles of components

Jenkins CI CD

Take you to understand the inventory deduction principle of MySQL database

I have seven schemes to realize web real-time message push, seven!

STM32外部中断(寄存器版本)

分组后返回每组中的最后一条记录 GROUP_CONCAT用法

【LVGL(5)】标签的(label)用法

Several common problems of SQL server synchronization database without public IP across network segments

Directory and file management

【学习笔记】Web页面渲染的流程

Nodejs enables multi process and inter process communication

Redis special data type Geo