
[NLP] AI Scores 134 on This Year's Gaokao English Paper: An Interesting Study by Fudan and Wuhan University Alumni

2022-06-28 10:03:00 【Demeanor 78】

Ming Min, reporting from Aofei Temple

QbitAI | WeChat official account QbitAI

After taking on Chinese essay writing, AI has now set its sights on the gaokao (China's college entrance exam) English paper.

The result? On its first attempt at this year's gaokao English paper (National Paper A), it scored 134 points.

And this was no one-off fluke.

On 10 sets of real exam papers from 2018 to 2021, the AI scored above 125 every time, with a best score of 138.5, and it earned full marks on both listening and reading comprehension.

This is Qin, a gaokao English AI system proposed by researchers at CMU.

It has only 1/16 as many parameters as GPT-3, yet its average score is 15 points higher than GPT-3's.

The secret behind it is called reStructured Pre-training (RST), a new learning paradigm proposed by the authors.

Specifically, data from sources such as Wikipedia and YouTube is restructured and then fed back to the AI for training, which gives the model stronger generalization ability.

The two researchers devoted a paper of more than 100 pages to explaining this new paradigm in depth.

So what does this paradigm actually mean?

Let's dig in.

What is restructured pre-training?

The paper's title is simple: reStructured Pre-training (RST).

Its core message fits in one sentence: value your data!

The authors argue that valuable information is everywhere in the world, yet current AI systems do not make full use of the information contained in data.

Wikipedia and GitHub, for example, contain many kinds of signals a model could learn from: entities, relations, text summaries, text topics, and so on. Because of technical bottlenecks, these signals were not exploited before.

The authors therefore propose a method that lets a neural network store and access data containing many different types of information.

They represent data in a structured way, with the signal as the basic unit. This closely resembles data science, where we often organize data into tables or JSON and then retrieve the information we need with a dedicated query language such as SQL.
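To make the data-science side of this analogy concrete, here is a minimal Python sketch using the standard-library `sqlite3` module: a few signals are stored as table rows and pulled back with a SQL query. The table name `signals`, its columns, and the second sample fact are illustrative assumptions, not anything from the paper; in RST, the storing and retrieving is done by a language model rather than a database.

```python
import sqlite3


def build_signal_store(rows):
    """Store (subject, relation, object) signals in an in-memory SQLite table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE signals (subject TEXT, relation TEXT, object TEXT)")
    conn.executemany("INSERT INTO signals VALUES (?, ?, ?)", rows)
    return conn


def lookup(conn, subject, relation):
    """Retrieve every object stored for a given subject/relation pair via SQL."""
    cur = conn.execute(
        "SELECT object FROM signals WHERE subject = ? AND relation = ?",
        (subject, relation),
    )
    return [row[0] for row in cur.fetchall()]


if __name__ == "__main__":
    # Hypothetical sample signals; only the birthplace fact comes from the article.
    store = build_signal_store([
        ("Mozart", "born_in", "Salzburg"),
        ("Mozart", "occupation", "composer"),
    ])
    print(lookup(store, "Mozart", "born_in"))  # ['Salzburg']
```

The point of the comparison is the access pattern: structured storage plus a query that names exactly the signal you want.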

Here, a "signal" simply means a piece of useful information in the data.

For example, in the sentence "Mozart was born in Salzburg", both "Mozart" and "Salzburg" are signals.

The next step is to mine and extract signals from data on various platforms, a process the authors liken to hunting for treasure in a mine.

Then, using prompts, signals from these different sources can be unified into a single format.
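One way to picture this prompt-based unification is converting heterogeneous signals into a single text-to-text format. The Python sketch below is a hypothetical illustration: the signal types, field names, template wording, and the sample topic label are assumptions made for this example and are not the paper's actual prompt templates.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """A unified text-to-text training pair."""
    source: str
    target: str


# Hypothetical templates keyed by signal type (not RST's real templates).
TEMPLATES = {
    "born_in": ("Where was {subject} born?", "{object}"),
    "summary": ("Summarize: {text}", "{summary}"),
    "topic": ("What is the topic of this text? {text}", "{topic}"),
}


def restructure(signal_type: str, fields: dict) -> Example:
    """Fill the template for this signal type with the mined fields."""
    src_tpl, tgt_tpl = TEMPLATES[signal_type]
    # str.format ignores keyword arguments a template does not use.
    return Example(src_tpl.format(**fields), tgt_tpl.format(**fields))


if __name__ == "__main__":
    mined = [
        ("born_in", {"subject": "Mozart", "object": "Salzburg"}),
        ("topic", {"text": "Mozart was born in Salzburg.", "topic": "music history"}),
    ]
    for kind, fields in mined:
        ex = restructure(kind, fields)
        print(ex.source, "->", ex.target)
```

Because every signal type ends up as a plain (input, output) string pair, data mined from very different sources can be mixed into one training set for a single model.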

Finally, the restructured data is integrated and stored in a language model.

In this way, the study unified 26 different types of signals from 10 data sources, giving the model strong generalization ability.

The results show that on multiple datasets, the zero-shot performance of RST-T and RST-A beats GPT-3's few-shot performance.

To test the new method further, the authors also came up with the idea of having the AI sit the gaokao.

They note that much current work essentially follows the approach of building a Chinese version of GPT-3, and that in choosing evaluation scenarios it likewise follows OpenAI and DeepMind.

Examples include the GLUE benchmark and protein-folding scores.

Based on their observations of how AI models are developing, the authors felt a new track was worth trying, so they decided to use the gaokao as a proving ground for AI.

They collected 10 sets of exam papers from several years and asked high school teachers to grade the AI's answers.

For question types such as listening and picture-based comprehension, they also enlisted help from researchers in computer vision and speech recognition.

The end result is this gaokao English AI model, which you can also call Qin.

The test results show that Qin is a genuine top student: its scores on all 10 sets of papers are higher than those of T0pp and GPT-3.

In addition, the authors propose a gaokao benchmark.

They feel that the tasks in many evaluation benchmarks are narrow, mostly lack practical value, and are hard to compare with human performance.

The gaokao, by contrast, covers a wide range of knowledge points and comes with human scores for direct comparison, so it kills two birds with one stone.

The fifth paradigm of NLP?

At a deeper level, the authors argue that restructured pre-training may become a new paradigm for NLP, one that treats the pre-training/fine-tuning process as a data storage/access process.

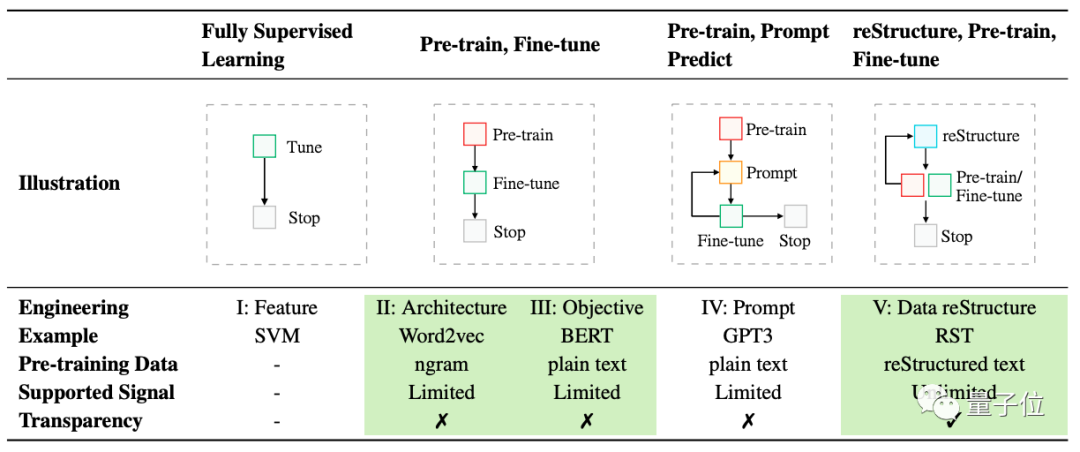

Previously, the authors summarized the development of NLP into four paradigms:

P1. Fully supervised learning, non-neural-network era (Fully Supervised Learning, Non-Neural Network)

P2. Fully supervised learning with neural networks (Fully Supervised Learning, Neural Network)

P3. Pre-training and fine-tuning (Pre-train, Fine-tune)

P4. Pre-training, prompting, and prediction (Pre-train, Prompt, Predict)

But based on how NLP is developing now, they think it may be more useful to look at things from a data-centric perspective.

That is, the distinctions between concepts such as pre-training/fine-tuning and few-shot/zero-shot will become blurrier, and the core question will be just one:

How much valuable information is there, and how much of it can be used?

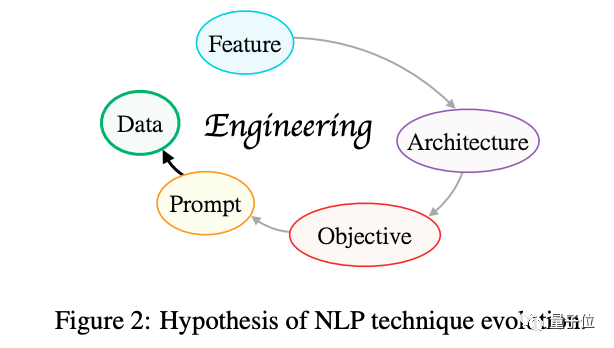

They also propose a hypothesis about how NLP evolves.

Its core idea is that technology always develops in the same direction: doing less to build better, more general-purpose systems.

In the authors' view, NLP has gone through feature engineering, architecture engineering, objective engineering, and prompt engineering, and is now moving toward data engineering.

Created by Fudan and Wuhan University alumni

The paper's first author is Weizhe Yuan.

She graduated from Wuhan University and then went on to graduate study in data science at Carnegie Mellon University.

Her research interests focus on text generation and evaluation for NLP tasks.

Last year, she had one paper accepted at each of AAAI 2022 and NeurIPS 2021, and she also received the ACL 2021 Best Demo Paper Award.

The paper's corresponding author is Pengfei Liu, a postdoctoral researcher at the Language Technologies Institute (LTI) of Carnegie Mellon University.

He received his PhD from the School of Computer Science at Fudan University in 2019, advised by Professor Xipeng Qiu and Professor Xuanjing Huang.

His research interests include NLP model interpretability, transfer learning, and multi-task learning.

During his PhD, he won a number of scholarships in computing, including the IBM PhD Fellowship, the Microsoft Scholar Scholarship, the Tencent AI Scholarship, and the Baidu Scholarship.

One More Thing

It's worth mentioning that when Pengfei Liu introduced this work to us, he admitted frankly that "at first we didn't plan to submit it anywhere."

That's because they didn't want the conference-paper format to limit the paper's imagination.

"We decided to tell this paper as a story and give 'readers' a movie-like experience.

"That's why, on page three, we set up a panorama in 'viewing mode'.

"It walks you through the history of NLP and what its future might look like, so that every researcher can feel immersed in it, as if they themselves were leading pre-trained language models (PLMs) on a treasure hunt through the mine toward a better tomorrow."

The end of the paper also hides a few Easter eggs.

For example, a PLM-themed sticker pack:

And an illustration at the very end:

With touches like these, reading a paper of more than 100 pages won't wear you out.

Paper link:

https://arxiv.org/abs/2206.11147

— End —

Past highlights

It is suitable for beginners to download the route and materials of artificial intelligence ( Image & Text + video ) Introduction to machine learning series download Chinese University Courses 《 machine learning 》( Huang haiguang keynote speaker ) Print materials such as machine learning and in-depth learning notes 《 Statistical learning method 》 Code reproduction album machine learning communication qq Group 955171419, Please scan the code to join wechat group

边栏推荐

- [200 opencv routines] 213 Draw circle

- MySQL基础知识点总结

- Install using snap in opencloudos NET 6

- JVM系列(2)——垃圾回收

- 股票开户用中金证券经理发的开户二维码安全吗?知道的给说一下吧

- Instant messaging and BS architecture simulation of TCP practical cases

- PMP考试重点总结八——监控过程组(2)

- R语言使用car包中的avPlots函数创建变量添加图(Added-variable plots)、在图像交互中,在变量添加图中手动标识(添加)对于每一个预测变量影响较大的强影响点

- 自定义异常类及练习

- Google开源依赖注入框架-Guice指南

猜你喜欢

How to distinguish and define DQL, DML, DDL and DCL in SQL

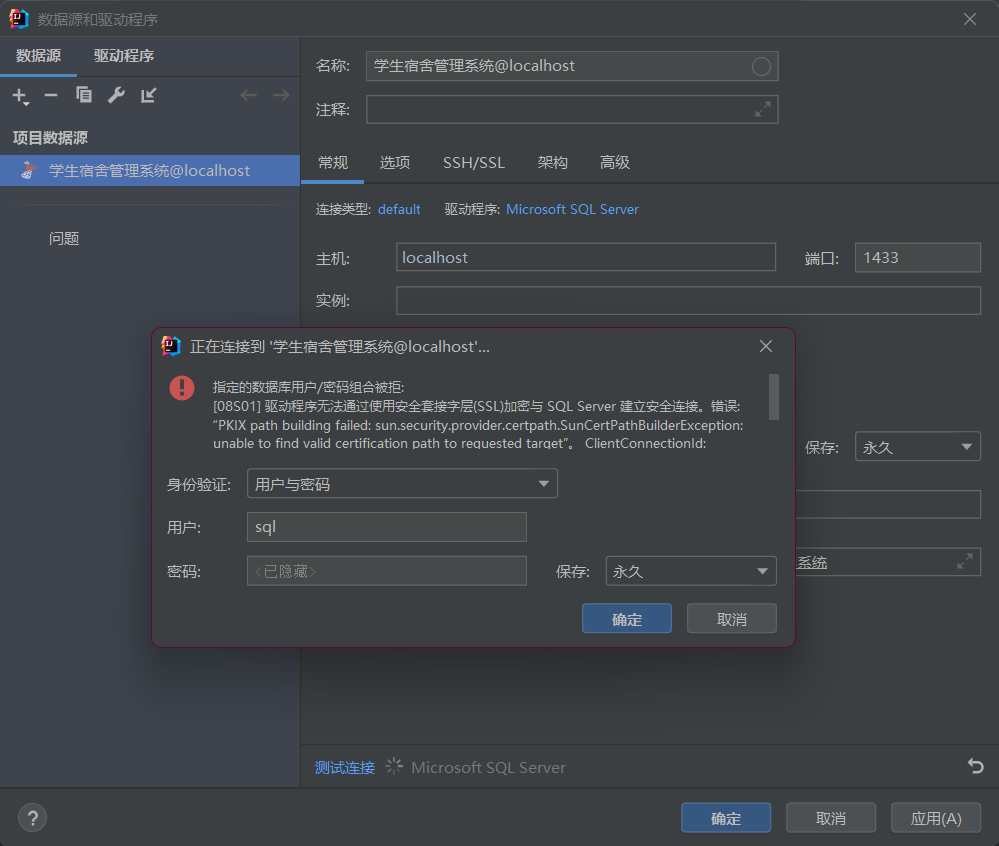

idea连接sql sever失败

Au revoir! Navigateur ie, cette route Edge continue pour IE

PMP考试重点总结五——执行过程组

PMP考试重点总结六——图表整理

为什么 Istio 要使用 SPIRE 做身份认证?

Key summary V of PMP examination - execution process group

![[Unity]EBUSY: resource busy or locked](/img/72/d3e46a820796a48b458cd2d0a18f8f.png)

[Unity]EBUSY: resource busy or locked

理想中的接口自动化项目

Matplotlib属性及注解

随机推荐

[Unity]EBUSY: resource busy or locked

Threads and processes

小米旗下支付公司被罚 12 万,涉违规开立支付账户等:雷军为法定代表人,产品包括 MIUI 钱包 App

优秀笔记软件盘点:好看且强大的可视化笔记软件、知识图谱工具Heptabase、氢图、Walling、Reflect、InfraNodus、TiddlyWiki

idea连接sql sever失败

缓存之王Caffeine Cache,性能比Guava更强

Matplotlib attribute and annotation

Dolphin scheduler uses system time

flink cep 跳过策略 AfterMatchSkipStrategy.skipPastLastEvent() 匹配过的不再匹配 碧坑指南

线程和进程

PMP考试重点总结八——监控过程组(2)

通过PyTorch构建的LeNet-5网络对手写数字进行训练和识别

理想中的接口自动化项目

Stutter participle_ Principle of word breaker

桥接模式(Bridge)

Key summary VII of PMP examination - monitoring process group (1)

Crawler small operation

Sword finger offer | linked list transpose

Restful style

An error is reported when uninstalling Oracle