What to do when an Oracle 19c migration runs into a large LOB table?

2022-07-25 07:11:00 【51CTO】

About the authors

Liang Mingtu, chief architect at New Torch Network, has more than ten years of experience in database operations and maintenance, database design, data governance, and system planning and construction. He holds Oracle OCM, TOGAF Enterprise Architect (Certified level), IBM CATE, and other certifications, has received honors such as dbaplus MVP of the Year and Huawei Cloud MVP, and took part in drafting national standards for data asset management. He has done in-depth research on database operations management and architecture design, operations system planning, and data asset management.

Wang Tao, senior database expert at New Torch Network, has long served customers in the telecom operator, finance, manufacturing, and government and enterprise sectors. Working on the customer front line, he has led database version upgrades for operators many times, specializes in data cutover and synchronization technology, and has extensive hands-on experience.

Background

Oracle's official lifetime support table for database releases makes it clear that Oracle 11g has passed its primary support period and is no longer supported after 2020. Against this background, a customer's application database was to be migrated from an IBM AIX minicomputer environment to a domestically produced database appliance, with the database version upgraded directly from 11g to 19c.

The LOB column problem

Analysis showed that the database was small, only about 3 TB, and because ample downtime was available, we planned to migrate the data with Data Pump. A closer look, however, revealed a single table of 2 TB, almost all of it in a LOB column, and the table was not partitioned. That made things a little tricky.

Past experience suggests that exporting a TB-scale LOB table like this in the usual way will very likely fail with ORA-01555.

A quick test confirmed it.

Solution

The usual fix is to adjust the undo_retention parameter and the RETENTION setting of the LOB column.

However, this database is a production environment, and risky changes such as parameter modifications are best avoided, so we needed another way. Since ORA-01555 is caused by a long-running query, we could try reducing the amount of data each export has to read.

We decided to try the QUERY parameter of expdp. But this was our first time exporting a single 2 TB LOB table this way, so what condition should QUERY filter on?

The first idea was to export by the primary key or an indexed column, which would be more efficient. On checking, though, the indexed column's values were not distributed evenly enough to form uniform batches, so this idea did not work.

How could we get even batches? It seemed the only option was to try rowid.

A rowid is a relatively unique address value used to locate a record in the database. Normally the value is determined, and unique, when the row is inserted into the table. Rowid is a pseudocolumn; it is not actually stored in the table.

Oracle builds this pseudocolumn from the physical address of each row when it reads the row. Given a row's rowid, Oracle can therefore find the row's physical address and locate it quickly; locating a single record by rowid is the fastest access method.

The rowid structure diagram shows four main parts:

- Part 1, 6 characters: the data_object_id of the data object that contains the row

- Part 2, 3 characters: the relative file ID of the data file that contains the row

- Part 3, 6 characters: the number of the data block that contains the row

- Part 4, 3 characters: the number of the row within that block

An extended rowid is stored in 10 bytes, 80 bits in total: obj# takes 32 bits, rfile# 10 bits, block# 22 bits, and row# 16 bits. This is why the relative file number cannot exceed 1023, that is, a tablespace can have at most 1023 data files (there is no file number 0); why a data file can have at most 2^22 = 4M blocks; and why a block can hold at most 2^16 = 64K rows.
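To make the layout concrete, here is a minimal Python sketch that splits the 18-character display form of an extended rowid into the four parts listed above and recomputes the limits from the bit widths. It assumes the standard display alphabet (A-Z, a-z, 0-9, +, /); the sample rowid string and the function names are illustrative only. Inside the database the same values come from the DBMS_ROWID functions.

```python
# Oracle's base-64 alphabet used when an extended rowid is displayed.
ALPHABET = (
    "ABCDEFGHIJKLMNOPQRSTUVWXYZ"
    "abcdefghijklmnopqrstuvwxyz"
    "0123456789+/"
)

# Bit widths of the four fields, as given in the text.
OBJ_BITS, FILE_BITS, BLOCK_BITS, ROW_BITS = 32, 10, 22, 16


def _b64_to_int(chars: str) -> int:
    """Interpret a run of rowid characters as a base-64 number."""
    value = 0
    for ch in chars:
        value = value * 64 + ALPHABET.index(ch)
    return value


def decode_rowid(rowid: str) -> dict:
    """Split an 18-character extended rowid (OOOOOOFFFBBBBBBRRR) into its parts."""
    if len(rowid) != 18:
        raise ValueError("an extended rowid is displayed as 18 characters")
    return {
        "data_object_id": _b64_to_int(rowid[0:6]),
        "relative_fno": _b64_to_int(rowid[6:9]),
        "block_number": _b64_to_int(rowid[9:15]),
        "row_number": _b64_to_int(rowid[15:18]),
    }


if __name__ == "__main__":
    print(OBJ_BITS + FILE_BITS + BLOCK_BITS + ROW_BITS)  # 80 bits = 10 bytes
    print(2 ** FILE_BITS - 1)   # 1023, the highest relative file number
    print(2 ** BLOCK_BITS)      # 4194304 blocks per data file
    print(2 ** ROW_BITS)        # 65536 rows per block
    print(decode_rowid("AAAR3sAAEAAAACXAAA"))  # illustrative rowid, not from the article
```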

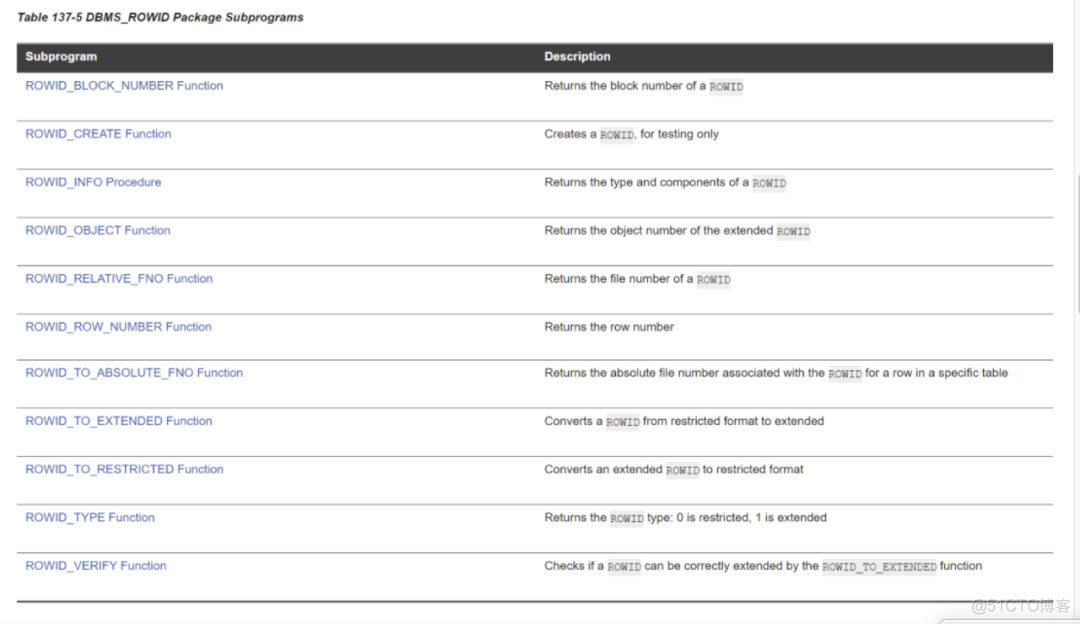

With rowid understood, how do we batch evenly? We can use the DBMS_ROWID package that Oracle provides.

The export script was modified as follows:
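A minimal sketch of what such parameter files could look like, generated here with Python. The schema, table, directory object, and file names are hypothetical placeholders, and the batch count of ten follows the ten concurrent processes the article describes; CONTENT, COMPRESSION, QUERY, and DBMS_ROWID.ROWID_BLOCK_NUMBER are the pieces the article itself names.

```python
# Hypothetical names: adjust schema, table, and directory object to your environment.
OWNER = "APP"
TABLE = "BIG_LOB_TABLE"
DIRECTORY = "DUMP_DIR"
BATCHES = 10  # the article runs ten concurrent export processes


def build_parfile(remainder: int, batches: int = BATCHES) -> str:
    """Return expdp parameter-file text for one batch of the table."""
    if not 0 <= remainder < batches:
        raise ValueError("remainder must be in [0, batches)")
    # Each batch takes the rows whose block number leaves this remainder.
    query = (
        f'{OWNER}.{TABLE}:"WHERE MOD('
        f'DBMS_ROWID.ROWID_BLOCK_NUMBER(ROWID), {batches}) = {remainder}"'
    )
    lines = [
        f"DIRECTORY={DIRECTORY}",
        f"DUMPFILE={TABLE.lower()}_{remainder:02d}.dmp",
        f"LOGFILE={TABLE.lower()}_{remainder:02d}.log",
        f"TABLES={OWNER}.{TABLE}",
        "CONTENT=DATA_ONLY",
        "COMPRESSION=DATA_ONLY",
        f"QUERY={query}",
    ]
    return "\n".join(lines) + "\n"


def build_all_parfiles(batches: int = BATCHES) -> dict:
    """Map a parfile name to its text, one entry per remainder."""
    return {f"exp_{r:02d}.par": build_parfile(r, batches) for r in range(batches)}


if __name__ == "__main__":
    print(build_parfile(0))
```

Each generated file would then be passed to its own expdp session with the parfile option; only the remainder, dump file name, and log file name differ between batches.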

Parameter description:

- CONTENT=DATA_ONLY: export only the table data. This makes both the export and the import faster; indexes and other objects are handled separately after the data has been imported;

- COMPRESSION=DATA_ONLY: the data volume is large, so compression saves space and makes transfer to the new environment more efficient;

- QUERY=“……”: export the table data in batches according to the filter condition.

Why choose rowid_block_number? For this large table there is only one object_id, so it cannot be used to split batches; there are only 150 file IDs, 126,924 block IDs, and 19 ROW_NUMBER values. The more distinct values a component has, the smaller the difference between batches after taking the modulus, so rowid_block_number was used. With this method the data was exported smoothly.

We then copied the parameter file, changed the remainder in the MOD condition in each copy, and ran ten export processes concurrently. The export logs showed little difference in elapsed time between batches, so the approach achieved evenly sized export batches.

Summary

When a very large LOB table has to be exported, the general idea is to reduce the amount of data each export reads from the table, by partition or by query filter, and so reduce the overall time. The options are:

1. Check whether the table is partitioned; if it is, export it partition by partition.

2. Ask the business side whether there is a column with evenly distributed values; if so, export in batches by column value.

3. If neither applies, export with the rowid method described in this article.
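The three options can be sketched as one small Python helper that returns the parameter-file lines restricting a single export job. All object names (APP, BIG_LOB_TABLE, P2022, REGION, EAST) are hypothetical, and the column-value case assumes a string column; a numeric column would need the quotes removed.

```python
def export_filter(owner, table, partition=None, column=None, value=None,
                  batches=10, remainder=0):
    """Return the parfile lines that restrict one export job to a slice."""
    target = f"{owner}.{table}"
    if partition is not None:
        # Option 1: partitioned table, one partition per job.
        return [f"TABLES={target}:{partition}"]
    if column is not None:
        # Option 2: evenly distributed column, one value per job.
        condition = f"{column} = '{value}'"
        return [f"TABLES={target}", f'QUERY={target}:"WHERE {condition}"']
    # Option 3: fall back to the block number encoded in each rowid.
    condition = (
        f"MOD(DBMS_ROWID.ROWID_BLOCK_NUMBER(ROWID), {batches}) = {remainder}"
    )
    return [f"TABLES={target}", f'QUERY={target}:"WHERE {condition}"']


if __name__ == "__main__":
    for lines in (
        export_filter("APP", "BIG_LOB_TABLE", partition="P2022"),
        export_filter("APP", "BIG_LOB_TABLE", column="REGION", value="EAST"),
        export_filter("APP", "BIG_LOB_TABLE", batches=10, remainder=7),
    ):
        print("\n".join(lines), end="\n\n")
```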

边栏推荐

- 【愚公系列】2022年7月 Go教学课程 016-运算符之逻辑运算符和其他运算符

- Tp5.1 foreach adds a new field in the controller record, and there is no need to write all the other fields again without changing them (not operating in the template) (paging)

- 常吃发酵馒头是否会伤害身体

- Will eating fermented steamed bread hurt your body

- The idea of the regular crawler of the scratch

- 论文阅读:UNET 3+: A FULL-SCALE CONNECTED UNET FOR MEDICAL IMAGE SEGMENTATION

- Luo min's backwater battle in qudian

- Cointelegraph撰文:依托最大的DAO USDD成为最可靠的稳定币

- vulnhub CyberSploit: 1

- [daily question] sword finger offer II 115. reconstruction sequence

猜你喜欢

Basic usage of thread class

Upload and download multiple files using web APIs

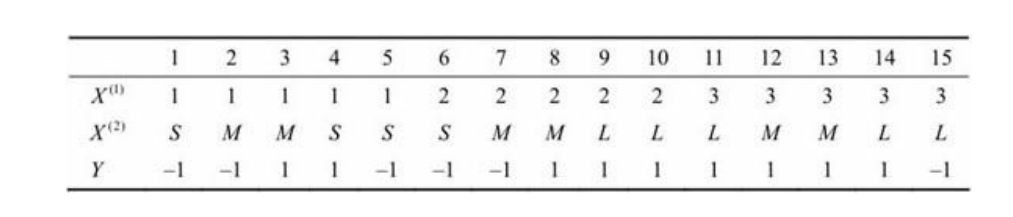

Statistical learning -- naive Bayesian method

如何在KVM环境中使用网络安装部署多台虚拟服务器

Alibaba cloud image address & Netease cloud image

机器人工程-教学品质-如何判定

LeetCode46全排列(回溯入门)

![[300 + selected interview questions from big companies continued to share] big data operation and maintenance sharp knife interview question column (V)](/img/cf/44b3983dd5d5f7b92d90d918215908.png)

[300 + selected interview questions from big companies continued to share] big data operation and maintenance sharp knife interview question column (V)

vulnhub CyberSploit: 1

【每日一题】1184. 公交站间的距离

随机推荐

QT6 with vs Code: compiling source code and basic configuration

大话西游服务端启动注意事项

leetcode刷题:动态规划06(整数拆分)

How can dbcontext support the migration of different databases in efcore advanced SaaS system

30 times performance improvement -- implementation of MyTT index library based on dolphin DB

批量导入数据,一直提示 “失败原因:SQL解析失败:解析文件失败::null”怎么回事?

【云原生】原来2020.0.X版本开始的OpenFeign底层不再使用Ribbon了

Electronic Association C language level 2 60, integer parity sort (real question in June 2021)

不只是日志收集,项目监控工具Sentry的安装、配置、使用

[knowledge summary] block and value range block

Two week learning results of machine learning

Leetcode 206. reverse linked list I

JS data type judgment - Case 6 delicate and elegant judgment of data type

Decrypting numpy is a key difficulty in solving the gradient

[daily question] sword finger offer II 115. reconstruction sequence

Leave the factory and sell insurance

%d,%s,%c,%x

[computer explanation] what should I pay attention to when I go to the computer repair shop to repair the computer?

Openatom xuprechain open source biweekly report | 2022.7.11-2022.7.22

Robot engineering - teaching quality - how to judge