
Multi-sensor fusion: track fusion

2022-06-24 07:02:00 【AI vision netqi】

A good related article:

Chapter 6: Sensor Fusion and Multi-Object Tracking for Driverless Cars - Zhihu

Title image from the MATLAB public course "Sensor Fusion and Tracking"; it will be removed on request in case of infringement.

Front-line engineers at autonomous-driving companies have all heard the term multi-sensor fusion (sensor fusion) to some degree. It is one of the most sought-after areas of autonomous-driving technology. Why?

- Sensor fusion is an indispensable part of the autonomous-driving software stack. In practice the term covers two distinct roles.
  - The first is sensor fusion for localization (multi-sensor fusion localization, also called integrated navigation). It belongs to the localization team.
  - The second is sensor fusion for perception (multi-sensor fusion perception). It belongs to the perception team.

  The underlying skills of the two roles are very similar, but fusion perception is the harder one: it faces more challenges and uses more, and more complex, sensors. This article focuses on fusion perception.

- Universities have no dedicated program for sensor fusion. It is an interdisciplinary problem and a typical example of industry running ahead of academic education. (Admittedly, if you argue that all of its theoretical foundations come from classical theory, that point alone is hard to refute.) I recently came across the phrase "engineering is sometimes more art than science", and it fits perfectly here, for two reasons:
  - Many sensor fusion problems originate from the front line of driverless road testing.
  - Sensor technology is advancing rapidly: high-channel-count lidar, solid-state lidar, 4D imaging millimeter-wave radar, and cameras with ultra-high dynamic range and good low-light performance. As soon as a manufacturer has samples, they go straight to the autonomous-driving R&D departments of major carmakers or dedicated autonomous-driving companies for on-vehicle testing. Universities rarely have such a timely supply chain.

Getting down to business: what does the sensor fusion process look like? In short, it is generally divided into the following three steps:

- Time synchronization: ensure that all sensors share a consistent time reference. I covered this in my third article, "What are the pain points of driverless perception?". Here is an excerpt: "The raw frame rates of different sensors differ: cameras run at 10 to 60 Hz, lidar typically at 10 Hz, and millimeter-wave radar easily at several hundred Hz. The triggering methods differ: the camera is the typical externally triggered sensor, while lidar and millimeter-wave radar are typically internally triggered. The time references differ: some sensors carry their own time reference, such as a lidar synchronized to the GPS PPS signal, while others need an external timestamp, such as millimeter-wave radar and ultrasonic sensors on the CAN bus."

  To solve this, autonomous-driving companies usually develop their own dedicated hardware synchronization board, most often FPGA-based. An FPGA can easily connect to sensors with different interfaces (Ethernet, USB 3.0, CAN, and so on), and its Verilog logic can send trigger level signals to each device. Combined with the synchronization protocols the sensors themselves support, such as the high-precision PTP time synchronization protocol, this generally achieves millisecond-level synchronization or better.

- Data association: determine which pieces of sensor data represent the same object. For example, suppose there is a car on the road ahead:
  - the lidar provides a point cloud and a 3D bounding box;
  - the camera image provides a 2D bounding box;
  - the millimeter-wave radar provides several radar points.

  How do we gather all of this raw and semantic data into the struct that represents this car? The common approach is spatial projection and coordinate-system transformation. Lidar data, for instance, usually lives in the map coordinate system. A 3D-to-2D transformation maps it into the image's 2D coordinate system, where it can be matched against the image's 2D detections.

  This is where the matching algorithm comes in: the core of data association is feature matching, and research in this area keeps progressing. KM matching (the Hungarian algorithm) is commonly used. In recent years, deep learning methods have been used to generate high-dimensional object features directly during detection and then match on those. The results look more promising, but this work is still relatively new and will take some time to reach production.

- State estimation: run the prediction-observation-update cycle (in fact only the prediction and update steps are strictly needed). Here I only introduce the most widely used approach, the Bayesian probabilistic method; the various Kalman filters and particle filters fall into this category. The topic is too big to expand on here. Simply put, the object state, the sensor errors, and the observation errors are modeled as Gaussian or non-Gaussian processes. We then build the motion equation and the observation equation and solve them. (This is condensed almost beyond recognition; SLAM experts, please don't hit me...)
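The hardware side of time synchronization (FPGA boards, PTP) cannot be shown in a few lines, but the software side it leaves behind can. Once all timestamps sit on a common clock, a fusion node still has to pair measurements captured at slightly different instants, for example a 30 Hz camera with a 10 Hz lidar. Below is a minimal sketch of nearest-timestamp pairing. It assumes the timestamps are already synchronized and sorted. The function names `nearest_within` and `pair_frames` and the 20 ms tolerance are hypothetical illustrative choices, not part of any particular framework.

```python
from bisect import bisect_left


def nearest_within(timestamps, t, tolerance):
    """Index of the timestamp closest to t, or None if it is more than
    `tolerance` seconds away. Assumes `timestamps` is sorted ascending
    and already expressed on the common (synchronized) clock."""
    i = bisect_left(timestamps, t)
    # The closest stamp is either just before or just after the insertion point.
    candidates = [j for j in (i - 1, i) if 0 <= j < len(timestamps)]
    if not candidates:
        return None
    best = min(candidates, key=lambda j: abs(timestamps[j] - t))
    return best if abs(timestamps[best] - t) <= tolerance else None


def pair_frames(camera_ts, lidar_ts, tolerance):
    """Pair each camera frame with the nearest lidar sweep within `tolerance`
    seconds; frames without a close enough sweep are skipped."""
    pairs = []
    for ci, t in enumerate(camera_ts):
        li = nearest_within(lidar_ts, t, tolerance)
        if li is not None:
            pairs.append((ci, li))
    return pairs
```

Consider camera stamps `[0.0, 0.033, 0.067, 0.1]` (roughly 30 Hz) and lidar stamps `[0.0, 0.1, 0.2]` (10 Hz). With `tolerance=0.02`, `pair_frames` returns `[(0, 0), (3, 1)]`: the two intermediate camera frames have no lidar sweep within 20 ms. Dropping unmatched frames is the simplest policy. A system could instead interpolate the faster sensor's data to the camera timestamp.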

Back to the topic: how does the sensor fusion process summarized above relate to object tracking?

In a word, both are state estimation problems, and the essence of both is solving prediction-update.
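To make "prediction-update" concrete, here is a minimal sketch of a linear Kalman filter, the simplest member of the Bayesian family mentioned above. It assumes a hypothetical 1D object with:
- a constant-velocity motion equation (position advances by velocity times dt);
- an observation equation that measures position only.

The class name, the simplified diagonal process noise, and the default noise values are illustrative assumptions, not a recommended tuning.

```python
class ConstantVelocityKF:
    """Hypothetical 1D constant-velocity Kalman filter; state is (position, velocity)."""

    def __init__(self, position, velocity, p0=1.0, q=0.01, r=0.25):
        self.x = [position, velocity]      # state estimate
        self.P = [[p0, 0.0], [0.0, p0]]    # state covariance
        self.q = q                         # process noise, simplified to a diagonal term
        self.r = r                         # measurement noise variance

    def predict(self, dt):
        # Motion equation: x_k = F x_{k-1} with F = [[1, dt], [0, 1]].
        p, v = self.x
        self.x = [p + v * dt, v]
        (a, b), (c, d) = self.P
        # Covariance propagation: P = F P F^T + Q.
        self.P = [
            [a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
            [c + dt * d, d + self.q],
        ]

    def update(self, z):
        # Observation equation: z = H x + noise with H = [1, 0] (position only).
        (a, b), (c, d) = self.P
        s = a + self.r                     # innovation covariance S = H P H^T + R
        k0, k1 = a / s, c / s              # Kalman gain K = P H^T S^-1
        y = z - self.x[0]                  # innovation: measurement minus prediction
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # Covariance update: P = (I - K H) P.
        self.P = [
            [a - k0 * a, b - k0 * b],
            [c - k1 * a, d - k1 * b],
        ]
```

Suppose the filter starts at `ConstantVelocityKF(0.0, 0.0)` and is fed positions of an object moving at 1 m/s (`kf.predict(1.0)` then `kf.update(float(t))` for t = 1..10). Its velocity estimate then moves from 0 toward 1 even though velocity is never measured directly, because the motion equation couples it to position.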

More completely: to achieve stable object tracking we need multiple sensors, such as lidar, camera, and radar. However:
1) Each sensor has its own limitations, which can lead to missed or false tracks.
2) When solving the multiple object tracking (MOT) problem, we do not want to estimate an object's state from sensor data that does not actually belong to that object.

Addressing either issue requires solving the sensor fusion problem first.
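Point 2) is what data association with gating addresses. The sketch below assumes each track already has a predicted 2D position (for example from a Kalman predict step) and each detection a measured 2D position. Pairs farther apart than a gate distance are never matched, so a clutter detection cannot corrupt a track. The function finds the minimum-total-distance assignment, the kind of optimum the Hungarian (KM) algorithm mentioned above computes efficiently. It does so by brute force over permutations, which is only practical for a handful of objects. Several choices are simplifying assumptions:
- the Euclidean distance cost;
- the fixed `gate` penalty charged for each unmatched track;
- the function name `associate` itself.

Real trackers often use Mahalanobis distance or learned appearance features instead.

```python
import math
from itertools import permutations


def associate(tracks, detections, gate):
    """Assign detections to tracks, minimizing total distance with gating.

    tracks, detections: lists of (x, y) positions (hypothetical 2D example).
    Returns a list of (track_index, detection_index) pairs. Any pair farther
    apart than `gate` is forbidden; leaving a track unmatched costs `gate`.
    Brute force: only suitable for a few objects (Hungarian is O(n^3)).
    """
    n, m = len(tracks), len(detections)
    options = list(range(m)) + [None] * n   # None means "leave this track unmatched"
    best_cost, best = math.inf, []
    for choice in permutations(options, n):
        cost, pairs = 0.0, []
        for ti, di in enumerate(choice):
            if di is None:
                cost += gate                  # penalty for an unmatched track
                continue
            dist = math.dist(tracks[ti], detections[di])
            if dist > gate:                   # gating: too far to be the same object
                break
            cost += dist
            pairs.append((ti, di))
        else:                                 # only reached if no pair violated the gate
            if cost < best_cost:
                best_cost, best = cost, pairs
    return best
```

For example, `associate([(0.0, 0.0), (10.0, 0.0)], [(10.5, 0.2), (0.3, -0.1), (50.0, 50.0)], 2.0)` returns `[(0, 1), (1, 0)]`. The far-away detection 2 is left unmatched instead of being forced onto a track; in a typical tracker such leftovers become candidates for new tracks.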

Therefore, we can think of sensor fusion as a foundational module. With it, we can achieve:

- Stable localization: sensor fusion for localization;

- Stable object detection: sensor fusion for detection;

- Stable single- and multi-object tracking: sensor fusion for single/multiple object tracking.

That's enough writing for today. The two topics above, sensor fusion and object tracking, require mastery of many underlying technologies, and both are developing rapidly in academia and industry along with the wave of autonomous driving. I will share more technical details and frontier developments in future posts.

边栏推荐

- RealNetworks vs. Microsoft: the battle in the early streaming media industry

- Nine unique skills of Huawei cloud low latency Technology

- 原神方石机关解密

- In the half year, there were 2.14 million paying users, a year-on-year increase of 62.5%, and New Oriental online launched its private domain

- 年中了,准备了少量的自动化面试题,欢迎来自测

- How do I check the IP address? What is an IP address

- About Stacked Generalization

- Localized operation on cloud, the sea going experience of kilimall, the largest e-commerce platform in East Africa

- Go excel export tool encapsulation

- Spark累加器和廣播變量

猜你喜欢

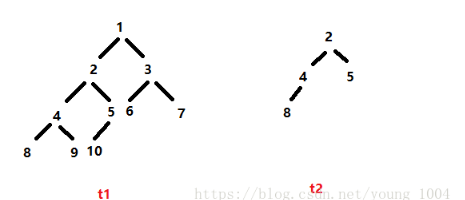

leetcode:剑指 Offer 26:判断t1中是否含有t2的全部拓扑结构

【JUC系列】Executor框架之CompletionFuture

leetcode:1856. Maximum value of minimum product of subarray

你有一个机会,这里有一个舞台

应用配置管理,基础原理分析

About Stacked Generalization

![Jumping game ii[greedy practice]](/img/e4/f59bb1f5137495ea357462100e2b38.png)

Jumping game ii[greedy practice]

leetcode:1856. 子数组最小乘积的最大值

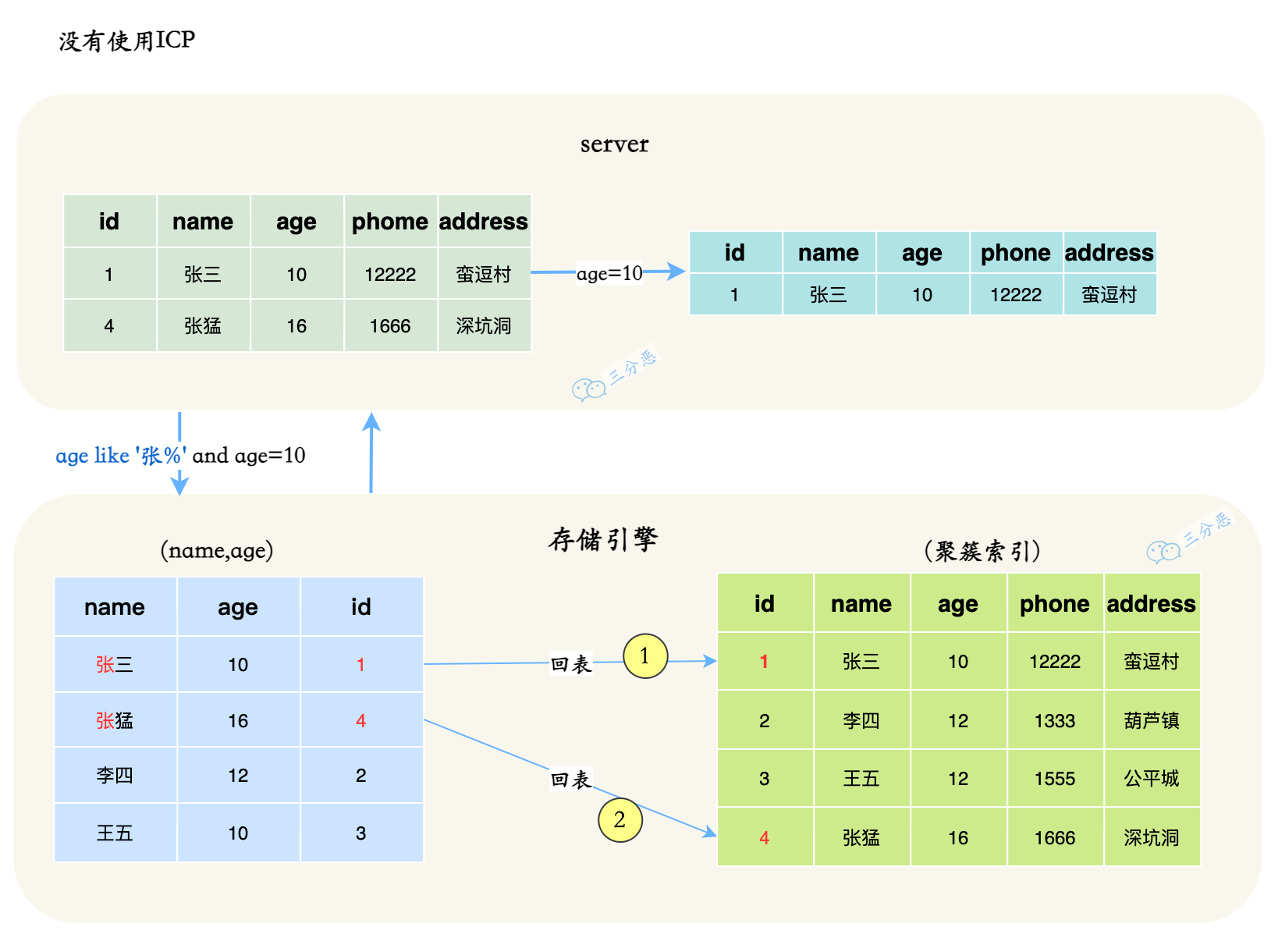

Counter attack of flour dregs: MySQL 66 questions, 20000 words + 50 pictures

Typora收费?搭建VS Code MarkDown写作环境

随机推荐

The three-year action plan of the Ministry of industry and information technology has been announced, and the security industry has ushered in major development opportunities!

为什么要用lock 【readonly】object?为什么不要lock(this)?

LuChen technology was invited to join NVIDIA startup acceleration program

Internet cafe management system and database

Spark accumulators and broadcast variables

Asp+access web server reports an error CONN.ASP error 80004005

[binary number learning] - Introduction to trees

JSON online parsing and the structure of JSON

成为 TD Hero,做用技术改变世界的超级英雄 | 来自 TDengine 社区的邀请函

Basic knowledge of wechat applet cloud development literacy chapter (I) document structure

leetcode:84. 柱状图中最大的矩形

Accumulateur Spark et variables de diffusion

35 year old crisis? It has become a synonym for programmers

Spark参数调优实践

Oracle SQL comprehensive application exercises

With a goal of 50million days' living, pwnk wants to build a "Disneyland" for the next generation of young people

Talk about how to dynamically specify feign call service name according to the environment

Easy car Interviewer: talk about MySQL memory structure, index, cluster and underlying principle!

Overview of new features in mongodb5.0

目标5000万日活,Pwnk欲打造下一代年轻人的“迪士尼乐园”