当前位置:网站首页>About Stacked Generalization

About Stacked Generalization

2022-06-24 06:33:00 【梦想家DBA】

Ensemble methods are an excellent way to predictive performance on your machine learning problems.Stacked Generalization or stacking is an ensemble technique that uses a new model to learn how to best combine the predictions from two or more models trained on your dataset.

- How to learn to combine the prediction from multiple models

- How to apply stacked generalization to a real-world predictive modeling problem.

1.2 Tutorial

This tutorial is broken down into 3 steps:

- Submodels and Aggregator

- Combining Predictions

- Sonar Case Study

1.2.1 Submodels and Aggregator

we are going to use two models as submodels for stacking and a linear model as the aggregator model.

This part is divided into 3 sections:

- Submodel # 1 : K-Nearest Neighbors

- Submodel # 2 : Perceptron

- Aggregator Model: Logistic Regression

Each model will be described in terms of the functions used to train the model and a function used to make predictions.

Submodel #1: k-Nearest Neighbors

KNN uses the entire training dataset as the model.training the model involves retaining the training dataset.Below is a function named knn_model() that does just this.

Below are these helper functions that involve making predictions for a KNN model.The function euclidean_distance() calculates the distance between tow rows of data,get_neighbors() function locates all neighbors in the training dataset for a new row of data and knn_predict() makes a prediction from the neighbors for a new of data.

# Calculate the Euclidean distance between two vectors

def euclidean_distance(row1,row2):

distance = 0.0

for i in range(len(row1)-1):

distance += (row1[i] - row2[i])**2

return sqrt(distance)

# Locate neighbors for a new row

def get_neighbors(train, test_row,num_neighbors):

distances = list()

for train_row in train:

dist = euclidean_distance(test_row, train_row)

distance.append((train_row,dist))

distance.sort(key = lambda tup: tup[1])

neighbors = list()

for i in range(num_neighbors):

neighbors.append(distances[i][0])

return neighbors

# Make a prediction with KNN

def knn_predict(model, test_row,num_neighbors=2):

neighbors = get_neighbors(model, test_row,num_neighbors)

output_values = [value[-1] for row in neighbors]

prediction = max(set(output_values),key=output_values.count)

return prediction

Submodel #2 Perceptron

The model for perceptron algorithm is a set of weights learned from the training data.In order to train the weights, many predictions need to made on the training data in order to calculate error values.Therefore, both model training and prediction require a function for prediction.

Below are the helper function for implementing the Perceptron algorithm.

The perceptron_model() function trains the Perceptron model on the training dataset.

perceptron_predict() is used to make a prediction for a row of data.

# Functions to train and make predictions with a Perceptron Model

# Make a prediction with weights

def percetron_predict(model,row):

activation = model[0]

for i in range(len(row)-1):

activation += model[i + 1] * row[i]

return 1.0 if activation >= 0.0 else 0.0

# Estimate Perceptron weights using stochastic gradient descent

def perceptron_model(train,l_rate=0.01, n_epoch=5000):

weights = [0.0 for i in range(len(train[0]))]

for epoch in range(n_epoch):

for row in train:

prediction = perceptron_predict(weights,row)

error = row[-1] - prediction

weights[0] = weights[0] + l_rate * error

for i in range(len(row)-1):

weights[i+1] = weights[i + 1] + l_rate * error * row[i]

return weights

Aggregator Model: Logistic Regression

Like the Perceptron algorithm,Logistic Regression uses a set of weights , called coefficients,as the representation of the model.

Below are the helper functions for implementing the logistic regression algorthim. The logistic_regression_model() function is used to train the coefficients on the training dataset and logistic_regression_predict() is used to make a prediction for a row of data.

# Function to train and make predictions with a logistic regression Model

# Make a predciton with coefficients

def logistic_regression_predict(model, row):

yhat = model[0]

for i in range(len(row)-1):

yhat += model[i + 1] * row[i]

return 1.0 / (1.0 + exp(-yhat))

# Estimate logistic regression coefficients using stochastic gradient descent

def logistic_regression_model(train, l_rate=0.01,n_epoch=5000):

coef = [0.0 for i in range(len(train[0]))]

for epoch in range(n_epoch):

for row in train:

yhat = logistic_regression_predict(coef, row)

error = row[-1] - yhat

coef[0] = coef[0] + l_rate * error * yhat * (1.0 - yhat) * row[i]

return coefthe logistic_regression_model() defines a learning rate and number of epochs as default parameters.

1.2.2 Combining Predictions

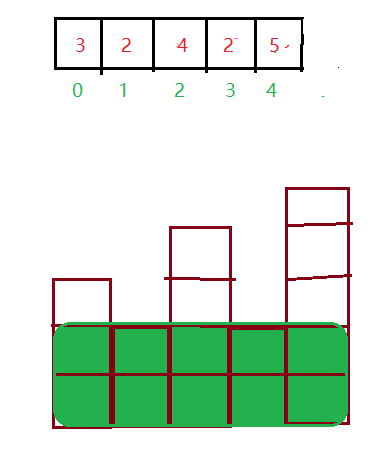

A new training dataset can be constructed from the predictions of the submodels,as follows:

- Each row represents one row in the training dataset.

- The first column contains predictions for each row in the training dataset made by the first submodel,such as k-Nearest Neighbors.

- The second column contain predictions for each row in the training dataset made by the second submodel,such as the Perceptron algortihm.

- The third column contains the expected output value for the row in the training dataset.

Below is a contrived example of what a constructed stacking dataset may look like:

KNN, Per, Y

0, 0 0

1, 0 1

0, 1 0

1, 1 1

0, 1 0Below is a function named to_stacked_row() that implements this procedure for creating new rows for this stacked dataset.

# Make predictions with submodels and construct a new stacked row

def to_stacked_row(models, predict_list, row):

stacked_row = list()

for i in range(len(models)):

prediction = predict_list[i](models[i],row)

stacked_row.append(prediction)

stacked_row.append(row[-1])

return stacked_rowBelow is an updated version of the to_stacked_row() function that implements this improvement

# Make predictions with sub-models and construct a new stacked row

def to_stacked_row(models, predict_list, row):

stacked_row = list()

for i in range(len(models)):

prediction = predict_list[i](models[i],row)

stacked_row.append(prediction)

stacked_row.append(row[-1])

return row[0:len(row)-1] + stacked_row1.2.3 Sonar Case Study

we will apply the stacking algorithm to the Sonar dataset.

# Stacking on the sonar dataset

from random import seed

from random import randrange

from csv import reader

from math import sqrt

from math import exp

# Load a CSV file

def load_csv(filename):

dataset = list()

with open(filename,'r') as file:

csv_reader = reader(file)

for row in csv_reader:

if not row:

continue

dataset.append(row)

return dataset

# Convert string column to float

def str_column_to_float(dataset, column):

for row in dataset:

row[column] = float(row[column].strip())

# Convert string column to integer

def str_column_to_int(dataset, column):

class_values = [row[column] for row in dataset]

unique = set(class_values)

lookup = dict()

for i,value in enumerate(unique):

lookup[value] = i

for row in dataset:

row[column] = lookup[row[column]]

return lookup

# Split a dataset into k folds

def cross_validation_split(dataset, n_folds):

dataset_split = list()

dataset_copy = list(dataset)

fold_size = int(len(dataset)/ n_folds)

for i in range(n_folds):

fold = list()

while len(fold) < fold_size:

index = randrange(len(dataset_copy))

fold.append(dataset_copy.pop(index))

dataset_split.append(fold)

return dataset_split

# Calculate accuracy percentage

def accuracy_metric(actual, predicted):

correct = 0

for i in range(len(actual)):

if actual[i] == predicted[i]:

correct += 1

return correct / float(len(actual)) * 100.0

# Evaluate an algorithm using a cross validation split

def evaluate_algorithm(dataset, algorithm,n_folds, *args):

folds = cross_validation_split(dataset,n_folds)

scores = list()

for fold in folds:

train_set = list(folds)

train_set.remove(fold)

train_set = sum(train_set,[])

test_set = list()

for row in fold:

row_copy = list(row)

test_set.append(row_copy)

row_copy[-1] = None

predicted = algorithm(train_set, test_set, *args)

actual = [row[-1] for row in fold]

accuracy = accuracy_metric(actual, predicted)

scores.append(accuracy)

return scores

# Calculate the Euclidean distance between two vectors

def euclidean_distance(row1, row2):

distance = 0.0

for i in range(len(row1)-1):

distance += (row1[i]-row2[i])**2

return sqrt(distance)

# Locate neighbors for a new row

def get_neighbors(train,test_row, num_neighbors):

distances = list()

for train_row in train:

dist = euclidean_distance(test_row,train_row)

distances.append((train_row, dist))

distances.sort(key=lambda tup: tup[1])

neighbors = list()

for i in range(num_neighbors):

neighbors.append(distances[i][0])

return neighbors

# Make a prediction with KNN

def knn_predict(model, test_row, num_neighbors=2):

neighbors = get_neighbors(model, test_row, num_neighbors)

output_values = [row[-1] for row in neighbors]

prediction = max(set(output_values),key=output_values.count)

return prediction

# Prepare the kNN model

def knn_model(train):

return train

# Make a prediction with weights

def perceptron_predict(model, row):

activation = model[0]

for i in range(len(row)-1):

activation += model[i + 1] * row[i]

return 1.0 if activation >= 0.0 else 0.0

# Estimate Perceptron weights using stochastic gradient descent

def perceptron_model(train, l_rate=0.01, n_epoch=5000):

weights = [0.0 for i in range(len(train[0]))]

for i in range(n_epoch):

for row in train:

prediction = perceptron_predict(weights, row)

error = row[-1] - prediction

weights[0] = weights[0] + l_rate * error

for i in range(len(row)-1):

weights[i+1] = weights[i + 1] + l_rate * error * row[i]

return weights

# Make a prediction with coefficients

def logistic_regression_predict(model, row):

yhat = model[0]

for i in range(len(row)-1):

yhat += model[i + 1] * row[i]

return 1.0 / (1.0 + exp(-yhat))

# Estimate logisctic regression coefficients using stochastic gradient descent

def logistic_regression_model(train, l_rate=0.1, n_epoch=5000):

coef = [0.0 for i in range(len(train[0]))]

for i in range(n_epoch):

for row in train:

yhat = logistic_regression_predict(coef,row)

error = row[-1] - yhat

coef[0] = coef[0] + l_rate * error * yhat * (1.0 - yhat)

for i in range(len(row)-1):

coef[i + 1] = coef[i + 1] + l_rate * error * yhat * (1.0 - yhat) * row[i]

return coef

# Make prediction with sub-models and construct a new stacked row

def to_stacked_row(models, predict_list, row):

stacked_row = list()

for i in range(len(models)):

prediction = predict_list[i](models[i],row)

stacked_row.append(prediction)

stacked_row.append(row[-1])

return row[0:len(row)-1] + stacked_row

# stacked Generalization Algortihm

def stacking(train, test):

model_list = [knn_model, perceptron_model]

predict_list = [knn_predict,perceptron_predict]

models = list()

for i in range(len(model_list)):

model = model_list[i](train)

models.append(model)

stacked_dataset = list()

for row in train:

stacked_row = to_stacked_row(models,predict_list,row)

stacked_dataset.append(stacked_row)

stacked_model = logistic_regression_model(stacked_dataset)

predictions = list()

for row in test:

stacked_row = to_stacked_row(models, predict_list,row)

stacked_dataset.append(stacked_row)

prediction = logistic_regression_predict(stacked_model, stacked_row)

prediction = round(prediction)

predictions.append(prediction)

return predictions

# Test stacking on the sonar dataset

seed(1)

# load and prepare data

filename = 'sonar.all-data.csv'

dataset = load_csv(filename)

# convert string attributes to integers

for i in range(len(dataset[0])-1):

str_column_to_float(dataset, i)

# convert class column to integers

str_column_to_int(dataset, len(dataset[0])-1)

n_folds = 3

scores = evaluate_algorithm(dataset, stacking,n_folds)

print('Scores: %s' % scores)

print('Mean Accuracy: %.3f%%' % (sum(scores)/float(len(scores))))

边栏推荐

- 10 year old drivers who have been engaged in software testing tell you what type of software is suitable for automation

- Papamelon 11 number of calculation permutation [combinatorics]

- Little transparent apprentice's way to go ashore

- Microsoft Security, which frequently swipes the network security circle, gives us some enlightenment this time?

- Easynvr is optimized when a large number of videos are not online or unstable due to streaming failure

- Correct way to update Fedora image Yum source to Tencent cloud Yum source

- Member management system PC side building tutorial (I)

- Coding platform project construction guide

- Small programs import Excel data in batches, and cloud development database exports CVS garbled code solution

- Implementation of code rate and frame rate statistics in easyplayer RTSP player

猜你喜欢

创客教育给教师发展带来的挑战

云上本地化运营,东非第一大电商平台Kilimall的出海经

Enter the software test pit!!! Software testing tools commonly used by software testers software recommendations

35岁危机?内卷成程序员代名词了

leetcode:84. 柱状图中最大的矩形

![[fault announcement] one stored procedure brings down the entire database](/img/7c/e5adda73a077fe4b8f04b59d1e0e1e.jpg)

[fault announcement] one stored procedure brings down the entire database

目标5000万日活,Pwnk欲打造下一代年轻人的“迪士尼乐园”

Manual for automatic testing and learning of anti stepping pits, one for each tester

Oracle case: ohasd crash on AIX

![跳跃游戏II[贪心练习]](/img/e4/f59bb1f5137495ea357462100e2b38.png)

跳跃游戏II[贪心练习]

随机推荐

leetcode:85. 最大矩形

What transmission modes does the IOT data gateway support

[fault announcement] one stored procedure brings down the entire database

How to quickly master the orders message in sportisimo EDI project?

How to choose CMS website system for website construction

The three-year action plan of the Ministry of industry and information technology has been announced, and the security industry has ushered in major development opportunities!

WordPress pill applet build applet from zero to one [wordpress pagoda configure SSL pseudo static]

Source code analysis of current limiting component uber/ratelimit

leetcode:84. 柱状图中最大的矩形

创客教育给教师发展带来的挑战

Skips allowed on SAP QM material master inspection type

Basic knowledge of wechat applet cloud development literacy chapter (I) document structure

跳跃游戏II[贪心练习]

10 year old drivers who have been engaged in software testing tell you what type of software is suitable for automation

Kangaroo cloud: the overall architecture and key technical points of building a real-time computing platform based on Flink

Easynvr is optimized when a large number of videos are not online or unstable due to streaming failure

Architecture: rest and HATEOAS

EEG microstate as a continuous phenomenon

Let's talk about BOM and DOM (5): dom of all large Rovers and the pits in BOM compatibility

Introduction to QWidget attribute table in QT Designer