当前位置:网站首页>The problem of low video memory in yolov5 accelerated multi GPU training

The problem of low video memory in yolov5 accelerated multi GPU training

2022-06-26 00:10:00 【Invincible Zhang Dadao】

yolov5 many GPU Training video memory is low

Before the change :

According to the configuration , stay train.py The configuration is as follows :

function python train.py after nvidia-smi The display memory occupation is as follows :

After modification

Reference resources yolov5 Official issue in , The distributed multiprocess method mentioned by someone :

stay yolov5 Running in a virtual environment , find torch Of distributed Environment : Like mine in conda3/envs/rcnn/lib/python3.6/site-packages/torch/distributed/;

stay distributed Under the document , Create a new multi process script , Name it yolov5_launch.py:

import sys

import subprocess

import os

from argparse import ArgumentParser, REMAINDER

def parse_args():

""" Helper function parsing the command line options @retval ArgumentParser """

parser = ArgumentParser(description="PyTorch distributed training launch "

"helper utility that will spawn up "

"multiple distributed processes")

# Optional arguments for the launch helper

parser.add_argument("--nnodes", type=int, default=1,

help="The number of nodes to use for distributed "

"training")

parser.add_argument("--node_rank", type=int, default=0,

help="The rank of the node for multi-node distributed "

"training")

parser.add_argument("--nproc_per_node", type=int, default=2,

help="The number of processes to launch on each node, "

"for GPU training, this is recommended to be set "

"to the number of GPUs in your system so that "

"each process can be bound to a single GPU.")# Change it to your corresponding GPU The number of

parser.add_argument("--master_addr", default="127.0.0.1", type=str,

help="Master node (rank 0)'s address, should be either "

"the IP address or the hostname of node 0, for "

"single node multi-proc training, the "

"--master_addr can simply be 127.0.0.1")

parser.add_argument("--master_port", default=29528, type=int,

help="Master node (rank 0)'s free port that needs to "

"be used for communication during distributed "

"training")

parser.add_argument("--use_env", default=False, action="store_true",

help="Use environment variable to pass "

"'local rank'. For legacy reasons, the default value is False. "

"If set to True, the script will not pass "

"--local_rank as argument, and will instead set LOCAL_RANK.")

parser.add_argument("-m", "--module", default=False, action="store_true",

help="Changes each process to interpret the launch script "

"as a python module, executing with the same behavior as"

"'python -m'.")

parser.add_argument("--no_python", default=False, action="store_true",

help="Do not prepend the training script with \"python\" - just exec "

"it directly. Useful when the script is not a Python script.")

# # positional

# parser.add_argument("training_script", type=str,default=r"train,py"

# help="The full path to the single GPU training "

# "program/script to be launched in parallel, "

# "followed by all the arguments for the "

# "training script")

# # rest from the training program

# parser.add_argument('training_script_args', nargs=REMAINDER)

return parser.parse_args()

def main():

args = parse_args()

args.training_script = r"yolov5-master/train.py"# Change it to what you want to train train.py The absolute path of

# world size in terms of number of processes

dist_world_size = args.nproc_per_node * args.nnodes

# set PyTorch distributed related environmental variables

current_env = os.environ.copy()

current_env["MASTER_ADDR"] = args.master_addr

current_env["MASTER_PORT"] = str(args.master_port)

current_env["WORLD_SIZE"] = str(dist_world_size)

processes = []

if 'OMP_NUM_THREADS' not in os.environ and args.nproc_per_node > 1:

current_env["OMP_NUM_THREADS"] = str(1)

print("*****************************************\n"

"Setting OMP_NUM_THREADS environment variable for each process "

"to be {} in default, to avoid your system being overloaded, "

"please further tune the variable for optimal performance in "

"your application as needed. \n"

"*****************************************".format(current_env["OMP_NUM_THREADS"]))

for local_rank in range(0, args.nproc_per_node):

# each process's rank

dist_rank = args.nproc_per_node * args.node_rank + local_rank

current_env["RANK"] = str(dist_rank)

current_env["LOCAL_RANK"] = str(local_rank)

# spawn the processes

with_python = not args.no_python

cmd = []

if with_python:

cmd = [sys.executable, "-u"]

if args.module:

cmd.append("-m")

else:

if not args.use_env:

raise ValueError("When using the '--no_python' flag, you must also set the '--use_env' flag.")

if args.module:

raise ValueError("Don't use both the '--no_python' flag and the '--module' flag at the same time.")

cmd.append(args.training_script)

if not args.use_env:

cmd.append("--local_rank={}".format(local_rank))

# cmd.extend(args.training_script_args)

process = subprocess.Popen(cmd, env=current_env)

processes.append(process)

for process in processes:

process.wait()

if process.returncode != 0:

raise subprocess.CalledProcessError(returncode=process.returncode,

cmd=cmd)

if __name__ == "__main__":

# import os

# os.environ['CUDA_VISIBLE_DEVICES'] = "0,1"

main()

Run the above script : python yolov5_launch.py

Video memory usage exceeds 80%, Note that here you can put train.py In the configuration batch_size turn up ;

Another way

See another way on the Internet , No distributed It is troublesome to create a new file under the folder , stay

python -m torch.distributed.launch --nproc_per_node 2 train.py --batch-size 64 --data data/Allcls_one.yaml --weights weights/yolov5l.pt --cfg models/yolov5l_1cls.yaml --epochs 1 --device 0,1

During training , stay python Followed by -m torch.distributed.launch --nproc_per_node ( Change it to your gpu The number of ) Run again train.py Then add various configuration files

This method is feasible through personal test , It is simpler and more effective than the first method !

边栏推荐

- 《网络是怎么样连接的》读书笔记 - 集线器、路由器和路由器(三)

- 10.4.1、數據中臺

- 兆欧表电压档位选择_过路老熊_新浪博客

- Redis之内存淘汰机制

- Redis memory elimination mechanism

- ssh的复习

- How to configure SQL Server 2008 Manager_ Old bear passing by_ Sina blog

- Joint simulation of STEP7 and WinCC_ Old bear passing by_ Sina blog

- Servlet response下载文件

- 10.2.2、Kylin_kylin的安装,上传解压,验证环境变量,启动,访问

猜你喜欢

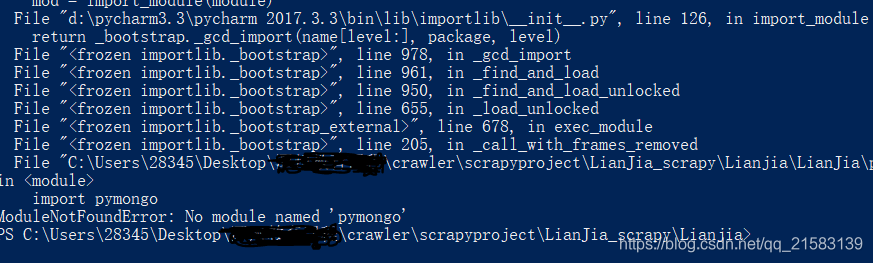

About the solution to prompt modulenotfounderror: no module named'pymongo 'when running the scratch project

Lazy people teach you to use kiwi fruit to lose 16 kg in a month_ Old bear passing by_ Sina blog

Studio5k V28 installation and cracking_ Old bear passing by_ Sina blog

EasyConnect连接后显示未分配虚拟地址

10.4.1、数据中台

文献调研(三):数据驱动的建筑能耗预测模型综述

Simulation connection between WinCC and STEP7_ Old bear passing by_ Sina blog

文献调研(二):基于短期能源预测的建筑节能性能定量评估

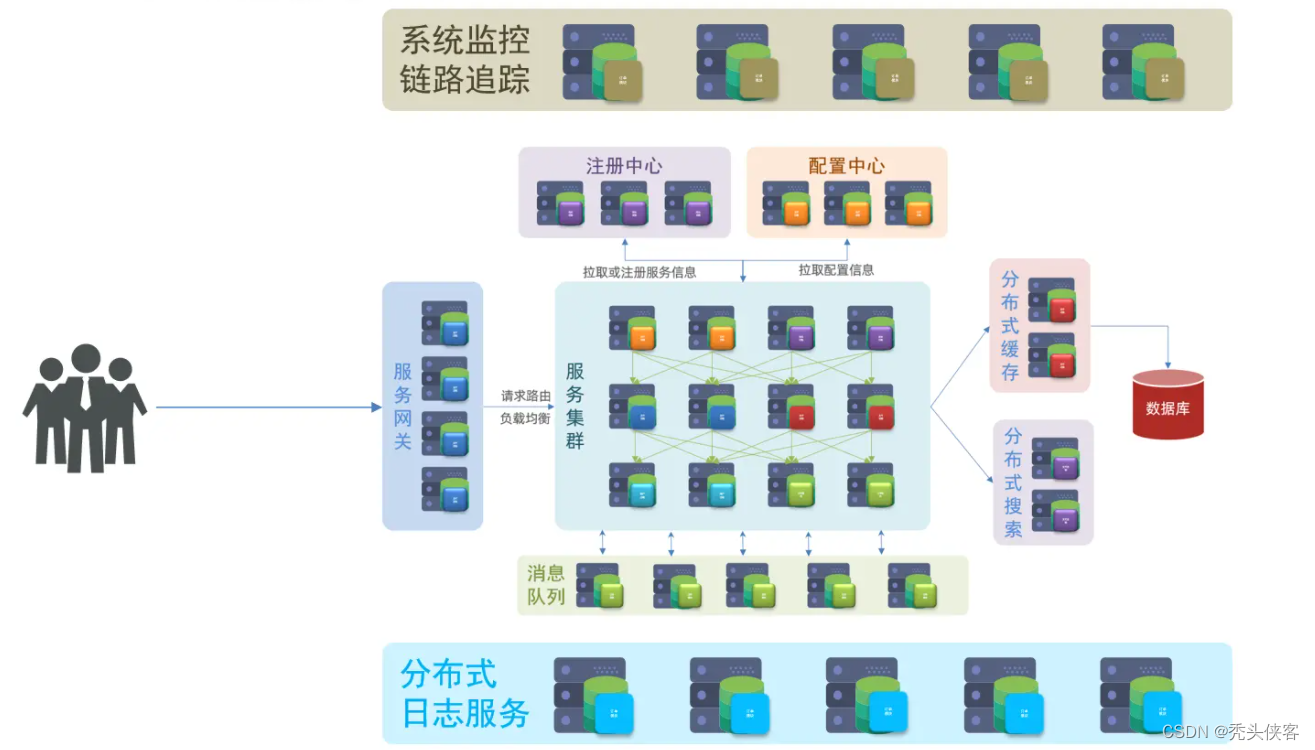

什么是微服务

关于scrapy爬虫时,由spider文件将item传递到管道的方法注意事项

随机推荐

14.1.1 promethues monitoring, four data types metrics, pushgateway

Bit Compressor [蓝桥杯题目训练]

Multi-Instance Redo Apply

Explain in detail the three types of local variables, global variables and static variables

SMT行业AOI,X-RAY,ICT分别是什么?作用是?

farsync 简易测试

STEP7 master station and remote i/o networking_ Old bear passing by_ Sina blog

ASA如何配置端口映射及PAT

文献调研(四):基于case-based reasoning、ANN、PCA的建筑小时用电量预测

DNS复习

11.1.2、flink概述_Wordcount案例

Redis之内存淘汰机制

10.3.1、FineBI_finebi的安装

关于二分和双指针的使用

How to configure SQL Server 2008 Manager_ Old bear passing by_ Sina blog

Circuit de fabrication manuelle d'un port série de niveau USB à TTL pour PL - 2303hx Old bear passing Sina blog

14.1.1、Promethues监控,四种数据类型metrics,Pushgateway

Redis之哨兵

10.4.1、數據中臺

About Simple Data Visualization