
Introduction to the Scrapy crawler framework

2022-07-25 12:20:00 【Tota King Li】

Scrapy introduction

Scrapy is a fast, high-level screen-scraping and web-crawling framework for Python. It is used to crawl websites and extract structured data from their pages. Scrapy has a wide range of uses, including data mining, monitoring, and automated testing.

Scrapy's appeal is that it is a framework: anyone can adapt it to their own needs. It also provides base classes for many kinds of spiders (crawlers), such as BaseSpider (named scrapy.Spider in current versions) and the sitemap spider. The latest version adds support for crawling Web 2.0 sites.

Scrapy data flow (flow chart)

Scrapy uses Twisted as its networking framework. Twisted is event-driven, which makes it well suited to asynchronous code.

Operations that would normally block a thread include:
- accessing files, databases, or the Web;
- spawning a new process and handling its output (for example, running a shell command);
- running code that performs system-level operations (such as waiting on a system queue).

Twisted provides ways to perform all of these operations without blocking code execution.
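Twisted itself is not used here. As a stand-in, this minimal sketch uses Python's standard-library `asyncio`, a different event-loop library swapped in purely for illustration, to show the same non-blocking idea. While one simulated download waits on I/O, the event loop runs the others. Three 0.2-second "downloads" therefore finish in roughly 0.2 seconds instead of 0.6. The `fake_download` function, the URLs, and the delays are hypothetical.

```python
import asyncio
import time


async def fake_download(url: str, delay: float) -> str:
    """Hypothetical stand-in for a network fetch; awaiting sleep yields to the event loop."""
    await asyncio.sleep(delay)  # non-blocking wait: other coroutines run meanwhile
    return f"<html>{url}</html>"


async def crawl(urls: list[str], delay: float) -> list[str]:
    # Start all downloads at once; the event loop interleaves them.
    return await asyncio.gather(*(fake_download(u, delay) for u in urls))


def main() -> tuple[list[str], float]:
    urls = ["https://example.com/a", "https://example.com/b", "https://example.com/c"]
    start = time.perf_counter()
    pages = asyncio.run(crawl(urls, delay=0.2))
    elapsed = time.perf_counter() - start
    return pages, elapsed


if __name__ == "__main__":
    pages, elapsed = main()
    print(len(pages), f"{elapsed:.2f}s")  # roughly 0.2s, not 0.6s
```

Scrapy can also run on Twisted's asyncio-based reactor, but the sketch above only demonstrates the general event-loop principle. It does not show how Scrapy wires Twisted internally.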

The chart below shows Scrapy's architecture components and the data flow while Scrapy runs. The data flow is indicated by the red arrows.

Scrapy's data flow is controlled by the execution engine and proceeds as follows:

- The engine gets the initial requests to crawl from the spider and starts crawling.

- The engine schedules the requests in the scheduler and asks it for the next request to crawl.

- The scheduler returns the next request to the engine.

- The engine sends the request to the downloader through the downloader middleware, and the downloader fetches the data from the network.

- Once the downloader finishes downloading the page, it returns the result (a response) to the engine.

- The engine passes the downloader's response to the spider for processing, through the spider middleware.

- The spider processes the response and returns the scraped items and any new requests to the engine, again through the spider middleware.

- The engine sends the processed items to the item pipelines and sends the new requests to the scheduler. It then asks the scheduler for the next request to crawl.

- The process repeats until no requests are left in the scheduler, that is, until every URL has been crawled.
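The loop above can be modelled as a toy, single-threaded program that uses only the standard library. This is not Scrapy's implementation, and several pieces are hypothetical names chosen for illustration:
- the `Request`/`Response` classes;
- the `FAKE_WEB` dictionary standing in for the network;
- the `downloader` and `spider_parse` functions;
- the `seen` set standing in for duplicate filtering.

The scheduler is a simple FIFO queue, whereas Scrapy's real scheduler uses configurable priority queues. The engine pulls a request from the scheduler and "downloads" it. It hands the response to the spider callback, routes items to the pipeline and new requests back to the scheduler, and stops when the scheduler is empty.

```python
from collections import deque
from dataclasses import dataclass

# Hypothetical "internet": URL -> (page title, links found on the page)
FAKE_WEB = {
    "/": ("Home", ["/a", "/b"]),
    "/a": ("Page A", ["/b"]),
    "/b": ("Page B", ["/"]),
}


@dataclass
class Request:
    url: str


@dataclass
class Response:
    url: str
    title: str
    links: list


def downloader(request):
    """Toy downloader: looks the URL up in FAKE_WEB instead of using the network."""
    title, links = FAKE_WEB[request.url]
    return Response(request.url, title, links)


def spider_parse(response):
    """Toy spider callback: yields one item, then a new request per link."""
    yield {"url": response.url, "title": response.title}
    for link in response.links:
        yield Request(link)


def run_engine(start_urls):
    scheduler = deque(Request(u) for u in start_urls)  # initial requests from the spider
    seen = set(start_urls)  # simple duplicate filter
    pipeline_output = []  # stands in for the item pipeline's storage
    while scheduler:  # repeat until no requests remain
        request = scheduler.popleft()  # scheduler hands the engine the next request
        response = downloader(request)  # downloader returns a response to the engine
        for result in spider_parse(response):  # engine passes the response to the spider
            if isinstance(result, Request):
                if result.url not in seen:  # new requests go back to the scheduler
                    seen.add(result.url)
                    scheduler.append(result)
            else:
                pipeline_output.append(result)  # items go to the pipeline
    return pipeline_output


if __name__ == "__main__":
    for item in run_engine(["/"]):
        print(item)
```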

The figure above shows the workflow of all Scrapy components. Each component is described below:

The crawler engine (ENGINE)

The engine controls the data flow between all components. Whenever an action triggers an event, the engine handles it.

Downloader

The downloader receives requests from the engine, downloads the web page data, and returns the responses to the engine.

Scheduler

The scheduler receives requests from the engine and puts them in a queue. It returns them to the engine when the engine asks for the next request.

Spider

The spider issues requests and processes the downloader responses that the engine hands back to it. It then returns items, plus new requests for URLs that match its rules, to the engine.

Item pipeline

The item pipeline processes the data the spider has parsed, which the engine passes to it, and persists that data, for example by storing it in a database or a file.

Downloader middleware

Downloader middleware sits between the engine and the downloader in the form of hooks (plugins). It can process requests on their way to the downloader and responses on their way back to the engine.

Spider middleware

Spider middleware sits between the engine and the spider in the form of hooks (plugins). It can process the responses passed to the spider, and the items and new requests the spider returns to the engine.
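Downloader and spider middleware are ordinary Python classes whose hook methods Scrapy calls. This sketch is an illustration under stated assumptions, not the project's code. `RandomUserAgentMiddleware`, `RequireTitleSpiderMiddleware`, and the `USER_AGENTS` list are hypothetical names.

The hook names themselves are real: `process_request(request, spider)` comes from Scrapy's downloader middleware interface, and `process_spider_output(response, result, spider)` from its spider middleware interface. Returning `None` from `process_request` lets the request continue to the downloader. So that the demo runs without Scrapy installed, a `types.SimpleNamespace` with a `headers` dict stands in for `scrapy.Request`.

```python
import random
from types import SimpleNamespace

# Hypothetical pool of User-Agent strings to rotate through.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7)",
    "Mozilla/5.0 (X11; Linux x86_64)",
]


class RandomUserAgentMiddleware:
    """Downloader middleware: sets a random User-Agent on every outgoing request."""

    def process_request(self, request, spider):
        request.headers["User-Agent"] = random.choice(USER_AGENTS)
        return None  # None means: keep going, the request continues to the downloader


class RequireTitleSpiderMiddleware:
    """Spider middleware: drops dict items without a 'title'; passes everything else through."""

    def process_spider_output(self, response, result, spider):
        for element in result:
            if isinstance(element, dict) and not element.get("title"):
                continue  # filter out incomplete items
            yield element


if __name__ == "__main__":
    # SimpleNamespace stands in for scrapy.Request so the demo runs without Scrapy.
    request = SimpleNamespace(headers={})
    RandomUserAgentMiddleware().process_request(request, spider=None)
    print(request.headers["User-Agent"])

    spider_output = [{"title": "Page A"}, {"title": ""}, request]
    kept = list(RequireTitleSpiderMiddleware().process_spider_output(None, spider_output, None))
    print(len(kept))  # 2: the item without a title was dropped
```

In a real project these classes would be enabled in settings.py with an order number. Use `DOWNLOADER_MIDDLEWARES = {"myproject.middlewares.RandomUserAgentMiddleware": 543}` for the first, and a `SPIDER_MIDDLEWARES` entry for the second, where `myproject` is a placeholder project name.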

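How to create a Scrapy environment and project

```shell
# Create a virtual environment
# (site-packages isolation is the default in current virtualenv, so the old
#  --no-site-packages flag is no longer needed; "python -m venv <env_name>" also works)
virtualenv <env_name>

# Enter the virtual environment folder
cd <env_name>

# Activate the virtual environment (Windows: the activate script lives in Scripts)
cd Scripts
activate

# Optionally upgrade pip
python -m pip install -U pip

# On Windows, install a prebuilt Twisted wheel inside the virtual environment
# (the wheel must match your Python version and architecture: cp36 = CPython 3.6, win32 = 32-bit)
pip install E:\Twisted-18.4.0-cp36-cp36m-win32.whl

# Install Scrapy inside the virtual environment
pip install scrapy

# Create your project: first switch (in the activated environment) to the folder
# where the project should live, optionally a separate folder created for it
scrapy startproject <project_name>

# Create a spider; run this inside the project folder so the spider is
# generated in the project's spiders directory
cd <project_name>
scrapy genspider <spider_name> <allowed_domain>

# For example:
scrapy genspider movie movie.douban.com
```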

scrapy Project structure

- items.py Responsible for the establishment of data model

- middlewares.py middleware

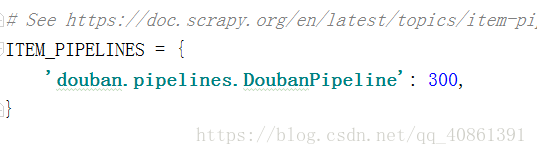

- pipelines.py Responsible for spider Processing of return data .

- settings.py Responsible for the configuration of the whole crawler .

- spiders Catalog Responsible for storing inherited from scrapy Reptiles .

- scrapy.cfg scrapy Basic configuration
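This rough sketch illustrates how items.py, pipelines.py, and settings.py fit together. It puts the three pieces in one file so that it runs on its own. The following are hypothetical:
- the `MovieItem` fields;
- the `JsonLinesPipeline` class;
- the `movies.jl` output file;
- the `myproject` package name.

Scrapy 2.2 and later accept dataclass objects as items alongside `scrapy.Item`, which is why a plain `dataclass` is used here. `open_spider`, `close_spider`, and `process_item` are the method names Scrapy calls on an item pipeline. `ITEM_PIPELINES`, `ROBOTSTXT_OBEY`, and `DOWNLOAD_DELAY` are real setting names.

```python
import json
from dataclasses import asdict, dataclass


# --- items.py: the data model (hypothetical fields) ---
@dataclass
class MovieItem:
    title: str
    rating: float


# --- pipelines.py: processes and persists items returned by the spider ---
class JsonLinesPipeline:
    def __init__(self, path="movies.jl"):
        self.path = path
        self.file = None

    def open_spider(self, spider):
        self.file = open(self.path, "w", encoding="utf-8")

    def close_spider(self, spider):
        self.file.close()

    def process_item(self, item, spider):
        # One JSON object per line; ensure_ascii=False keeps non-ASCII titles readable.
        self.file.write(json.dumps(asdict(item), ensure_ascii=False) + "\n")
        return item  # return the item so later pipelines can process it too


# --- settings.py: enable the pipeline (order values 0-1000, lower runs first) ---
ITEM_PIPELINES = {"myproject.pipelines.JsonLinesPipeline": 300}
ROBOTSTXT_OBEY = True
DOWNLOAD_DELAY = 1  # seconds to wait between requests to the same website


if __name__ == "__main__":
    pipeline = JsonLinesPipeline("movies_demo.jl")
    pipeline.open_spider(spider=None)
    pipeline.process_item(MovieItem("Example Movie", 9.0), spider=None)
    pipeline.close_spider(spider=None)
    with open("movies_demo.jl", encoding="utf-8") as f:
        print(f.read())
```

Writing JSON Lines keeps each item independent, so a crash mid-crawl leaves every earlier line readable. Scrapy's built-in feed exports can produce the same format without a custom pipeline. A hand-written pipeline like this one is mainly useful as a template for other storage targets, such as a database.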
