Learning to Pre-train Graph Neural Networks

2022-07-25 12:02:00 【Shangshanxianger】

I previously put together a post on graph pre-training papers. Since then, many papers on pre-training for graphs have appeared, but they rarely stray far from the same recipe: self-supervised learning at the node level and the graph level.

Why is the self-supervised strategy effective?

- Multi-layer structure: the upper layers can be trained while the lower layers are kept fixed.

- Multi-task learning: removes task-specific bias, so the learned representations are more general.

- Pre-training in the same domain: the model learns more domain knowledge.

However, there is always a gap between pre-training and fine-tuning, and closing this gap has become a thorny problem. In this post I sort out several solutions.

Learning to Pre-train Graph Neural Networks

This paper comes from AAAI 2021. Its core question is: how can the optimization discrepancy of GNNs between pre-training and fine-tuning be alleviated?

First, the authors point out that GNN pre-training is a two-stage process:

- Pre-training. First, pre-train on a large-scale graph dataset, i.e., update the parameters $\theta$ to minimize the pre-training loss: $\theta_0 = \arg\min_{\theta} \mathcal{L}^{pre}(f_{\theta}; \mathcal{D}^{pre})$

- Fine-tuning. Fine-tune on the downstream data: starting from the $\theta_0$ obtained in the previous step, take a gradient-descent step: $\theta_1 = \theta_0 - \eta \nabla_{\theta_0} \mathcal{L}^{fine}(f_{\theta_0}; \mathcal{D}^{tr})$
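To make the two stages concrete, here is a toy sketch in Python/NumPy. It assumes a linear model and squared-error losses in place of a GNN and its self-supervised objective; only the structure (an argmin on $\mathcal{D}^{pre}$, then one gradient step on $\mathcal{D}^{tr}$) mirrors the description above, and all names and numbers are illustrative.

```python
import numpy as np

# Toy stand-ins (assumptions): a linear model f_theta(X) = X @ theta and
# mean-squared-error losses in place of a GNN and its self-supervised objective.
def mse_loss(theta, X, y):
    r = X @ theta - y
    return float(np.mean(r ** 2))

def mse_grad(theta, X, y):
    return 2.0 * X.T @ (X @ theta - y) / len(y)

rng = np.random.default_rng(0)
X_pre = rng.normal(size=(200, 3))               # large "pre-training" set D^pre
y_pre = X_pre @ np.array([1.0, -2.0, 0.5])
X_tr = rng.normal(size=(20, 3))                 # small downstream set D^tr
y_tr = X_tr @ np.array([1.2, -1.5, 0.0])

# Stage 1: theta_0 = argmin_theta L^pre(f_theta; D^pre); least squares has a closed-form argmin
theta_0, *_ = np.linalg.lstsq(X_pre, y_pre, rcond=None)

# Stage 2: one gradient-descent step on D^tr, starting from theta_0
eta = 0.1
theta_1 = theta_0 - eta * mse_grad(theta_0, X_tr, y_tr)
```

Note that `X_tr` and `y_tr` play no part in computing `theta_0`: the pre-training stage is blind to the downstream data, which is the discrepancy discussed next.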

The authors argue that the two steps are mismatched: fine-tuning starts from $\theta_0$, but $\theta_0$ is fixed once pre-training ends and never sees the fine-tuning data, so pre-training does not take into account how the model will be fine-tuned downstream. This creates an optimization discrepancy between pre-training and fine-tuning, which to some extent hurts how well the pre-trained model transfers.

Therefore, the authors propose a self-supervised pre-training strategy, L2P-GNN. In my view, its two key points are:

- Do fine-tuning during pre-training. Since there is a gap, simulate fine-tuning inside the pre-training process. This borrows the meta-learning idea of learning how to learn.

- Do self-supervised learning at both the node level and the graph level.

The model architecture is shown in the figure above. The important parts are task construction and dual adaptation.

Task Construction

To simulate fine-tuning during pre-training, the authors' idea is simply to split the data into a training part and a testing part. Every task used for pre-training is split this way, into a support set (playing the role of training data) and a query set (playing the role of test data).

To simulate fine-tuning on the downstream training set, the loss is optimized directly on the support set to obtain transferable prior knowledge, and the adapted model is then evaluated on the query set.
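A minimal sketch of the task construction, assuming each pre-training graph is one task and its edges are the samples to split. The helper `make_task` and the 50/50 split ratio are hypothetical, not taken from the L2P-GNN code.

```python
import random

def make_task(edges, support_ratio=0.5, seed=0):
    """Hypothetical helper: shuffle one graph's edges and split them into a
    support set (simulated fine-tuning data) and a disjoint query set (simulated test data)."""
    rng = random.Random(seed)
    shuffled = list(edges)
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * support_ratio)
    return shuffled[:cut], shuffled[cut:]

# Each pre-training graph is treated as one task (assumption for illustration)
graphs = [
    [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2), (1, 3)],
    [(0, 1), (1, 2), (2, 0), (2, 3)],
]
tasks = [make_task(edges, seed=i) for i, edges in enumerate(graphs)]
```

Keeping the two sets disjoint is the point of the design: the query set is never touched by the inner update, so it plays the role of unseen downstream data.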

Dual Adaptation

To narrow the gap between pre-training and fine-tuning, the pre-training process should optimize the model's ability to adapt quickly to new tasks. To encode both local and global information into the prior, the authors propose dual adaptation, which performs updates at two levels: nodes and graphs.
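The two adaptation steps listed below can be sketched in Python/NumPy. This is a minimal sketch under stated assumptions: an embedding table `psi` stands in for the GNN encoder, the graph-level pooling is a hypothetical mean-then-scale by `omega`, node embeddings are treated as fixed inputs during the graph-level step, and all helper names are illustrative rather than from the L2P-GNN code.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def node_loss_and_grad(psi, triples):
    """Negative-sampling node loss and its gradient w.r.t. psi.
    psi: (num_nodes, d) embedding table, a hypothetical stand-in for the GNN encoder.
    triples: (u, v, v_neg) with (u, v) a positive pair and v_neg a sampled negative node."""
    loss, grad = 0.0, np.zeros_like(psi)
    for u, v, v_neg in triples:
        s_pos, s_neg = psi[u] @ psi[v], psi[u] @ psi[v_neg]
        loss += -np.log(sigmoid(s_pos)) - np.log(sigmoid(-s_neg))
        # d/ds[-ln sigma(s)] = -(1 - sigma(s));  d/ds[-ln sigma(-s)] = sigma(s)
        g_pos, g_neg = -(1.0 - sigmoid(s_pos)), sigmoid(s_neg)
        grad[u] += g_pos * psi[v] + g_neg * psi[v_neg]
        grad[v] += g_pos * psi[u]
        grad[v_neg] += g_neg * psi[u]
    return loss, grad

def graph_loss_and_grad(omega, node_emb, sub_structures, neg_node_emb):
    """Contrast each sub-structure's pooled representation with the whole graph (positive)
    and a negative graph. Hypothetical pooling: mean over nodes, scaled element-wise by omega."""
    a_G, a_neg = node_emb.mean(axis=0), neg_node_emb.mean(axis=0)
    loss, grad = 0.0, np.zeros_like(omega)
    for nodes in sub_structures:
        a_S = node_emb[nodes].mean(axis=0)
        # With h = omega * a, the score is s = sum_d omega_d^2 * a_S[d] * a_G[d]
        s_pos = (omega * a_S) @ (omega * a_G)
        s_neg = (omega * a_S) @ (omega * a_neg)
        loss += -np.log(sigmoid(s_pos)) - np.log(sigmoid(-s_neg))
        grad += -(1.0 - sigmoid(s_pos)) * 2.0 * omega * a_S * a_G
        grad += sigmoid(s_neg) * 2.0 * omega * a_S * a_neg
    return loss, grad

def dual_adapt(psi, omega, support_triples, node_emb, sub_structures, neg_node_emb,
               alpha=0.1, beta=0.1):
    """One node-level step on psi and one graph-level step on omega: theta' = {psi', omega'}."""
    grad_psi = sum(node_loss_and_grad(psi, t)[1] for t in support_triples)
    _, grad_omega = graph_loss_and_grad(omega, node_emb, sub_structures, neg_node_emb)
    return psi - alpha * grad_psi, omega - beta * grad_omega

rng = np.random.default_rng(0)
psi = 0.1 * rng.normal(size=(6, 4))
omega = np.ones(4)
support_triples = [[(0, 1, 4), (1, 2, 5)], [(2, 3, 0)]]  # one list per sub-structure c
sub_structures = [[0, 1, 2], [2, 3]]                     # node ids of each sub-structure
neg_emb = rng.normal(size=(5, 4))                        # node embeddings of a negative graph G'
psi_prime, omega_prime = dual_adapt(psi, omega, support_triples, psi, sub_structures, neg_emb)
```

Splitting the step sizes into `alpha` and `beta` mirrors the separate node-level and graph-level learning rates; the gradients are written out by hand only to keep the sketch dependency-free.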

- Node-level adaptation. As in the previous post, node pairs are sampled and the loss is computed as $\mathcal{L}^{node}(\psi; \mathcal{S}^c_G) = \sum_{(u,v)} -\ln\big(\sigma(h_u^\top h_v)\big) - \ln\big(\sigma(-h_u^\top h_{v'})\big)$, where $v'$ is a sampled negative node. The node-level parameters are then updated: $\psi' = \psi - \alpha \frac{\partial \sum_{c} \mathcal{L}^{node}(\psi; \mathcal{S}^c_G)}{\partial \psi}$

- Graph-level adaptation. Similarly, the loss contrasts each sub-structure with the whole graph (both representations are obtained by pooling): $\mathcal{L}^{graph}(\omega; \mathcal{S}_G) = \sum_{c} -\ln\big(\sigma(h_{\mathcal{S}^c_G}^\top h_G)\big) - \ln\big(\sigma(-h_{\mathcal{S}^c_G}^\top h_{G'})\big)$, where $G'$ is a negative graph. The graph-level parameters are then updated: $\omega' = \omega - \beta \frac{\partial \mathcal{L}^{graph}(\omega; \mathcal{S}_G)}{\partial \omega}$

- Optimizing the prior knowledge. After node-level and graph-level adaptation, the global prior $\theta$ has been adapted into task-specific knowledge $\theta' = \{\psi', \omega'\}$. The loss of $\theta'$ on the query set is then backpropagated to optimize $\theta$:

$$\theta \leftarrow \theta - \gamma \frac{\partial \sum_{G} \mathcal{L}(\theta'; \mathcal{Q}_G)}{\partial \theta}, \qquad \mathcal{L}(\theta'; \mathcal{Q}_G) = \frac{1}{k}\sum_{c=1}^{k} \mathcal{L}^{node}(\psi'; \mathcal{Q}^c_G) + \mathcal{L}^{graph}(\omega'; \mathcal{Q}_G)$$

where $k$ is the number of sampled sub-structures.
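The outer update differs from ordinary training because $\theta'$ depends on $\theta$, so the gradient has to flow through the inner adaptation step. The following toy sketch assumes quadratic losses in place of $\mathcal{L}^{node}+\mathcal{L}^{graph}$, so the chain-rule factor $\partial\theta'/\partial\theta = I - D H_S$ can be written exactly; the split of `theta` into "psi" and "omega" entries, the step sizes, and all helper names are illustrative assumptions.

```python
import numpy as np

# Toy quadratic loss standing in for the node + graph losses (assumption)
def quad_loss(theta, A, b):
    r = A @ theta - b
    return 0.5 * float(r @ r) / len(b)

def quad_grad(theta, A, b):
    return A.T @ (A @ theta - b) / len(b)

def adapt(theta, support, D):
    """Inner step theta' = theta - D grad L_S(theta); D holds alpha for psi entries, beta for omega entries."""
    A_s, b_s = support
    return theta - D @ quad_grad(theta, A_s, b_s)

def meta_objective(theta, tasks, D):
    """Sum over tasks of L(theta'; Q_G): the loss of the adapted parameters on each query set."""
    return sum(quad_loss(adapt(theta, s, D), *q) for s, q in tasks)

def meta_gradient(theta, tasks, D):
    g = np.zeros_like(theta)
    for support, (A_q, b_q) in tasks:
        A_s, b_s = support
        theta_prime = adapt(theta, support, D)
        # Chain rule through the inner step: d theta'/d theta = I - D H_S, with H_S = A_s^T A_s / n
        J = np.eye(len(theta)) - D @ (A_s.T @ A_s / len(b_s))
        g += J.T @ quad_grad(theta_prime, A_q, b_q)
    return g

rng = np.random.default_rng(0)
dim = 4  # entries 0-1 play the role of psi, entries 2-3 of omega (illustrative split)

def random_task(n=10):
    A, b = rng.normal(size=(n, dim)), rng.normal(size=n)
    half = n // 2
    return (A[:half], b[:half]), (A[half:], b[half:])  # (support set, query set)

tasks = [random_task() for _ in range(3)]
D = np.diag([0.1, 0.1, 0.05, 0.05])  # alpha = 0.1 for psi, beta = 0.05 for omega
gamma = 0.02
theta = np.zeros(dim)
for _ in range(50):
    theta = theta - gamma * meta_gradient(theta, tasks, D)
```

With a real GNN the factor $I - D H_S$ involves the Hessian of the support loss; an autodiff framework that supports differentiating through a gradient step would compute it instead of the hand-written formula used here.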

paper:https://yuanfulu.github.io/publication/AAAI-L2PGNN.pdf

code:https://github.com/rootlu/L2P-GNN

Adaptive Transfer Learning on GNN

This paper comes from KDD 2021. Traditional pre-training schemes do not include adaptive learning for the downstream task, so the upstream and downstream objectives can be inconsistent. The authors therefore use meta-learning to design an adaptive auxiliary loss weighting model that controls the consistency between the upstream self-supervised tasks and the downstream target task.

- Traditional method: learn self-supervised tasks on a large amount of unlabeled data, then use the node representations learned by those tasks to assist learning of the target task.

- The authors' transfer method: fine-tune the parameters with a joint loss, which adaptively preserves the useful information from the pre-training stage. Concretely, the cosine similarity between the gradients of an auxiliary task and of the target task is used to learn the Adaptive Auxiliary Loss Weighting, which quantifies how consistent each auxiliary task is with the target task.

paper:https://arxiv.org/abs/2107.08765

边栏推荐

- Video Caption(跨模态视频摘要/字幕生成)

- The first C language program (starting from Hello World)

- 【高并发】我用10张图总结出了这份并发编程最佳学习路线!!(建议收藏)

- Classification parameter stack of JS common built-in object data types

- 创新突破!亚信科技助力中国移动某省完成核心账务数据库自主可控改造

- Brpc source code analysis (VIII) -- detailed explanation of the basic class eventdispatcher

- 【GCN-RS】Region or Global? A Principle for Negative Sampling in Graph-based Recommendation (TKDE‘22)

- Power BI----这几个技能让报表更具“逼格“

- How to solve the problem of the error reported by the Flink SQL client when connecting to MySQL?

- 'C:\xampp\php\ext\php_zip.dll' - %1 不是有效的 Win32 应用程序 解决

猜你喜欢

Oil monkey script link

MySQL historical data supplement new data

阿里云技术专家秦隆:可靠性保障必备——云上如何进行混沌工程

微星主板前面板耳机插孔无声音输出问题【已解决】

Brpc source code analysis (VII) -- worker bthread scheduling based on parkinglot

Attendance system based on w5500

![[multimodal] transferrec: learning transferable recommendation from texture of modality feedback arXiv '22](/img/02/5f24b4af44f2f9933ce0f031d69a19.png)

[multimodal] transferrec: learning transferable recommendation from texture of modality feedback arXiv '22

【Debias】Model-Agnostic Counterfactual Reasoning for Eliminating Popularity Bias in RS(KDD‘21)

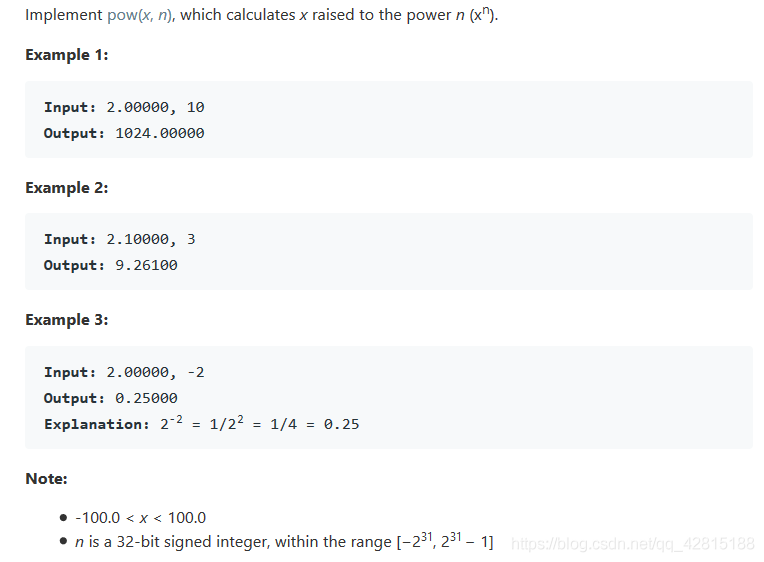

LeetCode 50. Pow(x,n)

Qin long, a technical expert of Alibaba cloud: a prerequisite for reliability assurance - how to carry out chaos engineering on the cloud

随机推荐

PHP curl post x-www-form-urlencoded

基于TCP/IP在同一局域网下的数据传输

selenium使用———安装、测试

Transformer变体(Routing Transformer,Linformer,Big Bird)

[GCN multimodal RS] pre training representations of multi modal multi query e-commerce search KDD 2022

PL/SQL入门,非常详细的笔记

30 sets of Chinese style ppt/ creative ppt templates

微信公众号开发 入手

Attendance system based on w5500

知识图谱用于推荐系统问题(MVIN,KERL,CKAN,KRED,GAEAT)

任何时间,任何地点,超级侦探,认真办案!

Brpc source code analysis (VII) -- worker bthread scheduling based on parkinglot

The JSP specification requires that an attribute name is preceded by whitespace

JS常用内置对象 数据类型的分类 传参 堆栈

【GCN多模态RS】《Pre-training Representations of Multi-modal Multi-query E-commerce Search》 KDD 2022

brpc源码解析(三)—— 请求其他服务器以及往socket写数据的机制

创新突破!亚信科技助力中国移动某省完成核心账务数据库自主可控改造

Application of comparative learning (lcgnn, videomoco, graphcl, XMC GaN)

程序员送给女孩子的精美礼物,H5立方体,唯美,精致,高清

LeetCode第303场周赛(20220724)