FBNet/FBNetV2/FBNetV3: Facebook's lightweight network exploration in NAS | Lightweight networks

2022-06-24 12:25:00 【VincentLee】

The FBNet series is a family of lightweight networks built entirely on NAS. Each paper analyzes the shortcomings of existing search methods and adds incremental improvements: FBNet combines DNAS with resource constraints, FBNetV2 adds channel and input-resolution search, and FBNetV3 uses accuracy prediction to search network structures quickly.

Source: Xiaofei's Algorithm Engineering Notes (WeChat official account)

FBNet

Paper: FBNet: Hardware-Aware Efficient ConvNet Design via Differentiable Neural Architecture Search | CVPR 2019

- Paper link: https://arxiv.org/abs/1812.03443

- Code: https://github.com/facebookresearch/mobile-vision

Introduction

Recent convolutional network design must account for runtime performance as well as accuracy, especially on mobile devices. This makes designing convolutional neural networks harder, and the main difficulties are:

- Intractable design space. A convolutional network has many parameters, which makes the design space very complex. Many methods now propose automated search to simplify manual design, but such methods usually require a large amount of computation.

- Nontransferable optimality. The performance of a convolutional network depends on many factors, such as the input resolution and the target device. Different resolutions call for different network parameters, and the same block can differ greatly in efficiency across devices, so the network has to be tuned for each specific condition.

- Inconsistent efficiency metrics. Most efficiency metrics depend not only on the network structure but also on the hardware and software configuration of the target device. For simplicity, many studies use hardware-independent metrics such as FLOPs to express convolution efficiency, but FLOPs do not always track real performance, which also depends on how each block is implemented. This makes network design harder still.

To address these problems, the paper proposes FBNet, which uses differentiable neural architecture search (DNAS) to discover hardware-aware lightweight convolutional networks; the workflow is shown in Figure 1. DNAS represents the whole search space as a supernet and turns the problem of finding the optimal network structure into finding the optimal distribution over candidate blocks. The block distribution is trained by gradient descent, and a different block can be chosen for each layer of the network. To estimate network latency more accurately, the actual latency of every candidate block is measured and recorded in advance, so the latency of a network can be estimated by summing the recorded values according to its structure.

Method

DNAS formulates the architecture search problem as:
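In the paper's notation, with the symbols explained in the next paragraph, this is a nested minimization:

$$\min_{a \in \mathcal{A}} \min_{w_a} \mathcal{L}(a, w_a) \tag{1}$$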

Given an architecture space $\mathcal{A}$, the goal is to find the optimal architecture $a\in \mathcal{A}$ that minimizes the loss $\mathcal{L}(a, w_a)$ once its weights $w_a$ have been trained. The paper focuses on three factors: the search space $\mathcal{A}$, a loss function $\mathcal{L}(a, w_a)$ that accounts for actual latency, and an efficient search algorithm.

- The Search Space

Most earlier methods search for a cell structure and then stack it into a complete network, but in practice the same cell structure affects accuracy and latency very differently at different layers. The paper therefore builds a layer-wise search space with a fixed macro-architecture, in which each layer can choose a different block. The overall network structure is shown in Table 1: the first layer and the last three layers are fixed, and the remaining layers are searched. Because the early layers operate at high resolution, they are manually given a small number of kernels to keep the network lightweight.

The layer-wise search space is shown in Figure 3. It builds on the classic designs of MobileNetV2 and ShuffleNet, and constructs different candidate blocks by varying the convolution kernel size $K$ (3 or 5), the expansion rate $e$, and the number of groups. If the input and output resolutions of a block are the same, an element-wise shortcut is added; if group convolution is used, a channel shuffle is applied to the convolution output.

The experiments in the paper use 9 candidate blocks, whose hyperparameters are listed in Table 2. In addition, there is a skip structure, which maps the input directly to the output and shortens the overall depth of the network. In total, the network contains 22 layers to be searched, and each layer chooses from 9 candidate blocks, giving $9^{22}$ possible architectures.

- Latency-Aware Loss Function

The loss function in Equation 1 must reflect not only accuracy but also latency on the target hardware. The paper therefore defines the following loss function:
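As given in the FBNet paper, the loss multiplies the cross-entropy term by a latency term (the symbols are explained in the next paragraph):

$$\mathcal{L}(a, w_a) = CE(a, w_a) \cdot \alpha \log(LAT(a))^{\beta} \tag{2}$$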

$CE(a, w_a)$ is the cross-entropy loss, $LAT(a)$ is the latency of the current architecture on the target hardware, $\alpha$ controls the overall magnitude of the loss function, and $\beta$ adjusts the magnitude of the latency term. Measuring latency directly can be time-consuming, so the paper estimates the latency of the whole network from a lookup table of per-block latencies:
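Consistent with the description that follows, the estimate is a sum over the layers of the network:

$$LAT(a) = \sum_{l} LAT(b^{(a)}_l) \tag{3}$$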

$b^{(a)}_l$ is the block at layer $l$ of architecture $a$. This estimate assumes that the blocks are computed independently of one another, an assumption that holds on devices such as CPUs and DSPs. In this way, the actual latency of $10^{21}$ networks can be estimated quickly.
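A minimal sketch of the lookup-table estimate and the latency-aware loss described above. The block names and latency values in `LATENCY_LUT` are made-up placeholders rather than measurements from the paper, the loss is written in the form $CE \cdot \alpha \log(LAT)^{\beta}$, and the default `alpha` and `beta` are illustrative assumptions.

```python
import math

# Hypothetical per-block latencies in milliseconds (placeholder values,
# not measurements from the paper).
LATENCY_LUT = {
    "k3_e1": 0.8,
    "k3_e6": 2.1,
    "k5_e6": 2.9,
    "skip": 0.0,
}


def estimate_latency(architecture):
    """Sum the pre-measured latency of the block chosen at each layer."""
    return sum(LATENCY_LUT[block] for block in architecture)


def latency_aware_loss(cross_entropy, latency, alpha=0.2, beta=0.6):
    """Scale the cross entropy by alpha * log(latency) ** beta.

    Assumes latency > 1 so that the logarithm is positive.
    """
    return cross_entropy * alpha * math.log(latency) ** beta


# One architecture = one block name per searchable layer.
arch = ["k3_e1", "k5_e6", "skip", "k3_e6"]
lat = estimate_latency(arch)
loss = latency_aware_loss(cross_entropy=1.5, latency=lat)

# Size of the search space: 22 searchable layers, 9 candidates each.
num_architectures = 9 ** 22
print(f"latency={lat:.1f} ms, loss={loss:.3f}, architectures={num_architectures:.2e}")
```

Because the estimate is a plain table lookup and a sum, evaluating a new architecture costs almost nothing, which is what makes it practical to score a space of roughly $10^{21}$ networks.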

- The Search Algorithm

The paper represents the search space as a stochastic supernet. The supernet has the overall structure of Table 1, and each layer contains the 9 parallel blocks of Table 2. During inference, the probability that a candidate block is executed is:
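Following the paper, this is a softmax over the architecture parameters of the layer:

$$P_{\theta_l}(b_l = b_{l,i}) = \mathrm{softmax}(\theta_{l,i}; \theta_l) = \frac{\exp(\theta_{l,i})}{\sum_i \exp(\theta_{l,i})} \tag{4}$$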

$\theta_l$ contains the parameters that determine the sampling probability of each candidate block at layer $l$. The output of layer $l$ can be expressed as:
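With $x_l$ denoting the input to layer $l$ (a symbol assumed here, since the surrounding text does not define it), the output is a masked sum over the candidate blocks $b_{l,i}$:

$$x_{l+1} = \sum_i m_{l,i} \cdot b_{l,i}(x_l) \tag{5}$$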

$m_{l,i}$ is a random variable in $\{0, 1\}$, assigned randomly according to the sampling probability, and the layer output is the sum of the outputs of all blocks. The sampling probability of architecture $a$ can therefore be expressed as:
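Assuming the layers are sampled independently of one another, this is the product of the per-layer probabilities:

$$P_{\theta}(a) = \prod_l P_{\theta_l}(b_l = b^{(a)}_{l,i}) \tag{6}$$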

$\theta$ contains the $\theta_{l,i}$ of all blocks. With these definitions, the discrete optimization problem of Equation 1 can be rewritten as:
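Following the paper, the relaxed problem optimizes the expected loss over architectures sampled from $P_{\theta}$:

$$\min_{\theta} \min_{w_a} \mathbb{E}_{a \sim P_{\theta}} \{ \mathcal{L}(a, w_a) \} \tag{7}$$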

In this form the weights $w_a$ are differentiable, but $\theta$ is still not, because $m_{l,i}$ is discrete. The generation of $m_{l,i}$ is therefore replaced with Gumbel Softmax:
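In the notation of the next paragraph, the relaxed mask is:

$$m_{l,i} = \mathrm{GumbelSoftmax}(\theta_{l,i} \mid \theta_l) = \frac{\exp[(\theta_{l,i} + g_{l,i})/\tau]}{\sum_i \exp[(\theta_{l,i} + g_{l,i})/\tau]} \tag{8}$$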

$g_{l,i} \sim Gumbel(0,1)$ is random noise drawn from a Gumbel distribution, and $\tau$ is the temperature parameter. As $\tau$ approaches 0, $m_{l,i}$ approaches a one-hot vector; as $\tau$ grows, $m_{l,i}$ behaves more like a continuous random variable. The cross-entropy loss in Equation 2 is then differentiable with respect to both $w_a$ and $\theta$, and the latency term $LAT$ can be rewritten as:
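Combining the lookup-table estimate with the relaxed masks, the latency becomes a weighted sum over layers and candidate blocks:

$$LAT(a) = \sum_l \sum_i m_{l,i} \cdot LAT(b_{l,i}) \tag{9}$$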

Because a lookup table is used, $LAT(b_{l,i})$ is a constant coefficient, so the overall latency of network $a$ is differentiable with respect to $m_{l,i}$ and $\theta_{l,i}$. The loss function is thus differentiable with respect to both the weights $w_a$ and the architecture variables $\theta$, and can be optimized efficiently with SGD.

The search process is equivalent to training the stochastic supernet. During training, $\partial\mathcal{L}/\partial w_a$ is computed to update the weights of each block in the supernet. Once the blocks are trained, each block contributes differently to accuracy and latency, and $\partial\mathcal{L}/\partial \theta$ is computed to update the sampling probability $P_{\theta}$ of each block. After the supernet is trained, the optimal network structure is obtained by sampling from the distribution $P_{\theta}$.
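The effect of the temperature and the weighted latency can be illustrated with a small standard-library sketch. The logits `theta_l` and the latencies in `block_latency` are made-up values, and the helper names are hypothetical; a real implementation would use an autograd framework so that gradients flow back to $\theta$.

```python
import math
import random


def gumbel_noise(n, rng):
    """Draw n samples of Gumbel(0, 1) noise via g = -log(-log(u))."""
    samples = []
    for _ in range(n):
        u = max(rng.random(), 1e-12)  # guard against log(0)
        samples.append(-math.log(-math.log(u)))
    return samples


def gumbel_softmax(theta, noise, tau):
    """Relaxed one-hot weights: softmax((theta + noise) / tau)."""
    scaled = [(t + g) / tau for t, g in zip(theta, noise)]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


rng = random.Random(0)
theta_l = [0.5, 1.5, -0.3]        # architecture parameters of one layer
block_latency = [0.8, 2.1, 2.9]   # hypothetical lookup-table entries (ms)

noise = gumbel_noise(len(theta_l), rng)
soft = gumbel_softmax(theta_l, noise, tau=5.0)    # high temperature: smooth
sharp = gumbel_softmax(theta_l, noise, tau=0.05)  # low temperature: near one-hot

# Latency of this layer as a weighted sum of constant lookup-table entries.
layer_latency = sum(m * lat for m, lat in zip(soft, block_latency))
print(soft, sharp, layer_latency)
```

With the same noise sample, lowering `tau` can only concentrate more weight on the largest noisy logit, which is why the low-temperature weights approach a discrete choice while the layer latency remains a smooth function of the weights.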

Experiments

Performance comparison with other lightweight networks on ImageNet.

Performance comparison under specific resource and device conditions .

Conclusion

The paper proposes a differentiable neural architecture search method that turns the discrete choice of cell structure into a continuous probability distribution over cell structures, and adds the latency on the target device to the optimization. Combined with the weight sharing of the supernet, it can quickly generate high-performing lightweight networks end to end for specific conditions. However, the block framework in the paper is based on the mainstream MobileNetV2 and ShuffleNet designs, so the search mostly covers their structural parameters and the resulting network structure is somewhat constrained.

FBNetV2

Paper: FBNetV2: Differentiable Neural Architecture Search for Spatial and Channel Dimensions | CVPR 2020

- Paper link: https://arxiv.org/abs/2004.05565

- Code: https://github.com/facebookresearch/mobile-vision

Introduction

DNAS samples the optimal subnetwork by training a supernet that contains all candidate networks. The search is fast, but it consumes a lot of memory, so the search space is usually smaller than that of other methods, and memory and computation costs grow linearly with the number of search dimensions.

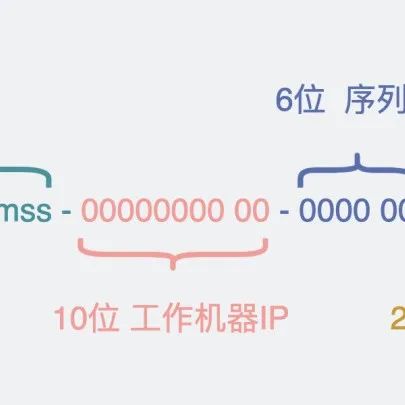

To solve this problem, the paper proposes DMaskingNAS, which adds the number of channels and the input resolution to the supernet in the form of masks and sampling, respectively. This enlarges the search space by a factor of $10^{14}$ with only a small increase in memory and computation.

Channel Search

DNAS generally instantiates all candidate blocks in the supernet and selects among them during training. Adding the channel dimension directly to the search space would therefore increase memory use and computation.

The conventional implementation is Step A, which instantiates convolutions of different sizes. So that outputs with different channel counts can be fused, the smaller outputs are zero-padded as in Step B. Step B can be converted into Step C: three convolutions produce output features of the same size, and three different masks are then applied to the outputs (blue is 0, white is 1). Because the three convolutions in Step C have the same size and the same input, they can be implemented as a single weight-sharing convolution, which is Step D. Merging the masks of Step D first and then multiplying the result with the convolution output saves computation and memory; this gives Step E, which needs only 1 convolution and 1 feature map.
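The equivalence between Step C and Step E can be checked with a small sketch. It assumes one scalar per channel instead of a full feature map, and the channel options and weights are made-up values (in DMaskingNAS the weights would come from Gumbel Softmax); the function names are hypothetical.

```python
def channel_mask(num_active, max_channels):
    """Mask with ones for the first num_active channels and zeros after."""
    return [1.0 if c < num_active else 0.0 for c in range(max_channels)]


def merged_mask(weights, channel_options, max_channels):
    """Weighted sum of the per-option masks (the merge in Step E)."""
    merged = [0.0] * max_channels
    for w, n in zip(weights, channel_options):
        for c, m in enumerate(channel_mask(n, max_channels)):
            merged[c] += w * m
    return merged


channel_options = [2, 3, 4]          # candidate channel counts
weights = [0.2, 0.3, 0.5]            # made-up selection weights, sum to 1
conv_output = [1.0, 2.0, 3.0, 4.0]   # output of the single shared convolution

# Step E: merge the masks once, then apply them to one feature map.
mask = merged_mask(weights, channel_options, max_channels=4)
masked_output = [v * m for v, m in zip(conv_output, mask)]

# Step C: mask three copies of the output separately and sum them.
separate = [0.0] * 4
for w, n in zip(weights, channel_options):
    mask_i = channel_mask(n, 4)
    for c in range(4):
        separate[c] += w * conv_output[c] * mask_i[c]

print(mask, masked_output)
```

Both paths give the same result, but the merged version stores a single feature map regardless of how many channel options are searched.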

Input Resolution Search

As with adding channels to the search space, DNAS would handle input resolution by instantiating all layers for every input resolution. This multiplies computation and memory use, and it raises some unavoidable problems:

- Feature outputs cannot be fused. Blocks with different input resolutions produce outputs of different sizes, as in Figure A, so they cannot be fused directly. Zero padding as in Figure B makes the sizes consistent, but it causes pixel misalignment, so the paper uses the interspersing zero-padding sampling of Figure C (nearest neighbor plus zero-padding) to avoid pixel misalignment and pixel contamination.

- The sampled feature map shrinks the receptive field. As in Figure D, suppose F is a $3\times 3$ convolution: on the sampled feature map, a single convolution covers only $2\times 2$ valid inputs. The sampled feature map therefore has to be compressed before the convolution and expanded again afterwards, as in Figure E. In practice, Figure E can be implemented with dilated convolution, which avoids both extra memory allocation and changes to the convolution kernel.

The experimental section of the paper does not describe the input resolution configuration or the search process and only shows the results. The authors have also released only the searched networks, not the search code. My guess is that the same supernet extracts features from inputs of different resolutions, the final outputs are merged for training, and the resolution with the largest weight is selected at the end, as shown in Figure 2, where $F$ is the shared supernet. If any reader knows the details, please let me know.
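One reading of the interspersing zero-padding in Figure C can be sketched as follows. Since the search code was not released, this only illustrates the idea (nearest-neighbor subsampling, then putting each kept pixel back at its original position), and the function names are hypothetical.

```python
def subsample(feature, stride):
    """Nearest-neighbor subsampling: keep every stride-th row and column."""
    return [row[::stride] for row in feature[::stride]]


def intersperse_zeros(small, stride, size):
    """Place each kept pixel back at its original position, zeros elsewhere."""
    out = [[0] * size for _ in range(size)]
    for i, row in enumerate(small):
        for j, value in enumerate(row):
            out[i * stride][j * stride] = value
    return out


# A 4x4 feature map with values 1..16 so that padding zeros are easy to spot.
feature = [[r * 4 + c + 1 for c in range(4)] for r in range(4)]

small = subsample(feature, stride=2)              # 2x2 low-resolution view
aligned = intersperse_zeros(small, stride=2, size=4)  # back to 4x4, aligned

for row in aligned:
    print(row)
```

Every non-zero value in `aligned` sits at the same coordinates as in `feature`, so maps produced at different resolutions can be summed without mixing unrelated pixels. The zeros between the kept pixels are also why a dilated convolution is needed afterwards to keep the receptive field intact.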

Experiments

The overall network structure and the candidate blocks used during the search, which together contain $10^{35}$ candidate networks.

Multiple FBNetV2 networks were searched, each with different resource requirements.

Comparison with other networks.

Conclusion

As mentioned above, the block framework of FBNet is based on the mainstream MobileNetV2 and ShuffleNet designs, so the search mostly covers their structural parameters and the network structure is somewhat constrained. FBNetV2 enlarges the search space by a factor of $10^{14}$ and outperforms most current networks in every respect. On the whole, though, the paper is more of a quantitative method, because its foundation is still the structural design of existing networks.

FBNetV3

Paper: FBNetV3: Joint Architecture-Recipe Search using Neural Acquisition Function

- Paper link: https://arxiv.org/abs/2006.02049

Introduction

At the time of writing, FBNetV3 is available only on arXiv. The paper argues that most current NAS methods only search the network structure and do not check whether the training hyperparameters are set appropriately when validating network performance, which can degrade model performance. The paper therefore proposes JointNAS, which searches for the most accurate combination of training hyperparameters and network structure under resource constraints.

JointNAS

The optimization objective of JointNAS can be formulated as:
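Written in the form I recall from the FBNetV3 paper, with $C_i$ denoting the budget for the $i$-th resource (a symbol that the text here does not define) and the other symbols explained in the next paragraph:

$$\max_{(A, h) \in \Omega} acc(A, h), \quad \text{subject to } g_i(A) \leqslant C_i \text{ for } i = 1, \ldots, \gamma$$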

$A$, $h$ and $\Omega$ denote the network architecture embedding, the training hyperparameter embedding, and the search space, respectively. $acc$ computes the accuracy of the current architecture and training hyperparameters, and $g_i$ and $\gamma$ are the resource consumption calculation and the number of resource constraints, respectively.

The search process of JointNAS is shown in Alg. 1 and is divided into two stages:

- Coarse-grained stage: iteratively searches for high-performing candidate architecture-hyperparameter pairs and trains the accuracy predictor.

- Fine-grained stage: with the accuracy predictor trained in the coarse-grained stage, an evolutionary algorithm quickly searches the candidate networks. This search integrates AutoTrain, the hyperparameter optimizer proposed in the paper.

Coarse-grained search: Constrained iterative optimization

Coarse-grained search produces an accuracy predictor and a set of high-performing candidate networks.

- Neural Acquisition Function

The structure of the predictor is shown in Figure 4. It consists of an architecture encoder and two heads: an auxiliary proxy head and an accuracy head. The proxy head predicts properties of the network (FLOPs, parameter count, and so on) and is mainly used to pre-train the encoder. The accuracy head predicts accuracy from the training hyperparameters and the network architecture, and the encoder pre-trained with the proxy head is fine-tuned during the iterative optimization.

- Step 1. Pre-train embedding layer

The predictor has a pre-training phase. The model is first trained to take a network architecture as input and predict properties of the network (FLOPs, parameter count, and so on). Such training data is very easy to obtain: generate a large number of random networks and compute their properties. The encoder is then shared with the accuracy head, and the subsequent network search begins. Pre-training the encoder markedly improves the accuracy and stability of the predictor, as shown in Figure 5.

- Step 2. Constrained iterative optimization

Architecture-hyperparameter pairs are first sampled from the search space with a quasi Monte Carlo method, and the predictor is then trained iteratively:

- Select a batch of architecture-hyperparameter pairs that satisfy the constraints, based on the predictor's output.

- Train the architecture-hyperparameter pairs and measure their accuracy, using an early-stopping strategy. For the networks of the first iteration, the ranking of the networks after each epoch is compared with their final ranking and the correlation is plotted per epoch, as shown in Figure 3; the epoch at which the correlation reaches 0.92 is used as the training length.

- Update the predictor. The encoder parameters are frozen for the first 50 epochs, after which a gradually decaying learning-rate schedule is used. The accuracy head is trained with the Huber loss, which limits the influence of outliers on training.

This iterative process reduces the number of candidates, avoids unnecessary evaluations, and makes exploration more efficient.
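The early-stopping rule in the second step can be sketched as below. The accuracy values are made up, and Spearman rank correlation is an assumption here, since the text does not say which correlation measure is used.

```python
def ranks(values):
    """Rank positions (0 = smallest); assumes there are no ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for position, index in enumerate(order):
        result[index] = position
    return result


def spearman(xs, ys):
    """Spearman rank correlation for tie-free data."""
    n = len(xs)
    d2 = sum((a - b) ** 2 for a, b in zip(ranks(xs), ranks(ys)))
    return 1 - 6 * d2 / (n * (n * n - 1))


def early_stop_epoch(acc_per_epoch, threshold=0.92):
    """First epoch (1-based) whose ranking matches the final ranking well enough."""
    final = acc_per_epoch[-1]
    for epoch, accs in enumerate(acc_per_epoch, start=1):
        if spearman(accs, final) >= threshold:
            return epoch
    return len(acc_per_epoch)


# acc_per_epoch[e][n]: accuracy of network n after epoch e + 1 (made-up values).
acc_per_epoch = [
    [0.30, 0.25, 0.35, 0.20, 0.28],
    [0.50, 0.48, 0.55, 0.40, 0.52],
    [0.60, 0.62, 0.70, 0.50, 0.65],
    [0.66, 0.68, 0.75, 0.55, 0.70],
]
stop_epoch = early_stop_epoch(acc_per_epoch)
print(stop_epoch)
```

In this toy data the ranking after the third epoch already matches the final ranking, so later candidates would only need three epochs of training to be compared reliably.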

Fine-grained search: Predictor-based evolutionary search

The second stage uses an adaptive genetic algorithm, with the best architecture-hyperparameter pairs from the first stage as the first-generation population. In each iteration, the population is mutated to produce new children that satisfy the constraints, the predictor trained in the coarse-grained stage quickly scores each individual, and the best $K$ architecture-hyperparameter pairs become the next generation. The gain in the highest score over the previous iteration is computed, and the search stops when the gain is too small, yielding the final high-accuracy network architecture and its training hyperparameters.

Note that when the resource constraints change, the predictor can still be reused, so the fine-grained stage alone can quickly find a suitable network architecture and training hyperparameters.
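A toy sketch of this loop is shown below. The `predict` function is a hypothetical stand-in for the trained accuracy predictor, a candidate is reduced to a `(channels, learning_rate)` pair, the constraint is an invented channel cap, and keeping parents in the candidate pool is an assumption that the text does not confirm.

```python
import random


def predict(candidate):
    """Hypothetical stand-in for the trained accuracy predictor."""
    channels, lr = candidate
    return 0.8 - ((channels - 48) / 100) ** 2 - (lr - 0.1) ** 2


def satisfies(candidate, max_channels=64):
    """Toy resource constraint: bound the channel count."""
    channels, lr = candidate
    return 8 <= channels <= max_channels and lr > 0


def mutate(candidate, rng):
    """Perturb both the architecture part and the training recipe part."""
    channels, lr = candidate
    return (channels + rng.choice([-8, 8]), lr * rng.choice([0.5, 2.0]))


def evolve(population, k, min_gain, rng, max_iters=50):
    """Keep the k best-scoring pairs until the best score stops improving."""
    best = max(predict(c) for c in population)
    for _ in range(max_iters):
        children = [mutate(c, rng) for c in population]
        children = [c for c in children if satisfies(c)]
        population = sorted(population + children, key=predict, reverse=True)[:k]
        gain = predict(population[0]) - best
        best = predict(population[0])
        if gain < min_gain:
            break
    return population


rng = random.Random(0)
first_generation = [(16, 0.4), (24, 0.2), (32, 0.05), (64, 0.8)]
final_population = evolve(first_generation, k=4, min_gain=1e-4, rng=rng)
best_channels, best_lr = final_population[0]
print(best_channels, best_lr, predict(final_population[0]))
```

Because every candidate is scored by the predictor rather than trained, each generation costs only a handful of function calls, and changing the limit in `satisfies` is all it takes to rerun the search under a different budget.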

Search space

The search space is shown in Table 1 and contains $10^{17}$ network architectures and $10^{7}$ training hyperparameter settings.

Experiments

With the network architecture fixed, test the effectiveness of the training hyperparameter search.

Performance comparison with other networks on ImageNet.

Conclusion

FBNetV3 departs completely from the designs of FBNet and FBNetV2. The accuracy predictor and genetic algorithm it uses are already widely applied in NAS; its main highlight is that training hyperparameters are added to the search, which matters a great deal for performance.

Conclusion

The FBNet series is a family of lightweight networks built entirely on NAS. Each paper analyzes the shortcomings of existing search methods and adds incremental improvements: FBNet combines DNAS with resource constraints, FBNetV2 adds channel and input-resolution search, and FBNetV3 uses accuracy prediction to search network structures quickly. I look forward to the complete open-source code.

If this article helped you, please give it a like. More content is available on the WeChat official account 【Xiaofei's Algorithm Engineering Notes】.
