
3. Automatically terminate training

2022-06-25 08:51:00 【Beyond proverb】

Sometimes you can end training early, once the model's loss has reached the value you were aiming for. This saves time and can also help prevent overfitting.

Here, training stops as soon as the loss at the end of an epoch is below 0.4.

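```python
import tensorflow as tf


class myCallback(tf.keras.callbacks.Callback):
    # Keras calls this at the end of every epoch, passing that epoch's metrics in `logs`.
    def on_epoch_end(self, epoch, logs=None):
        logs = logs or {}
        loss = logs.get('loss')
        # Stop once the training loss drops below 0.4.
        if loss is not None and loss < 0.4:
            print("\nLoss is low so cancelling training!")
            # fit() checks this flag and ends training when it is True.
            self.model.stop_training = True


callbacks = myCallback()

# Fashion-MNIST: 60,000 training and 10,000 test images, each 28x28 grayscale.
mnist = tf.keras.datasets.fashion_mnist
(training_images, training_labels), (test_images, test_labels) = mnist.load_data()

# Scale pixel values from 0-255 down to 0-1.
training_images_y = training_images / 255.0
test_images_y = test_images / 255.0

model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(),                           # 28x28 image -> 784-element vector
    tf.keras.layers.Dense(512, activation=tf.nn.relu),
    tf.keras.layers.Dense(10, activation=tf.nn.softmax)  # one probability per class
])

model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

# Train for at most 5 epochs; the callback may stop training earlier.
model.fit(training_images_y, training_labels, epochs=5, callbacks=[callbacks])
```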

Output:

```text
Colocations handled automatically by placer.
Epoch 1/5
60000/60000 [==============================] - 12s 194us/sample - loss: 0.4729 - acc: 0.8303
Epoch 2/5
59712/60000 [============================>.] - ETA: 0s - loss: 0.3570 - acc: 0.8698
Loss is low so cancelling training!
60000/60000 [==============================] - 11s 190us/sample - loss: 0.3570 - acc: 0.8697
```
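To make the control flow explicit without downloading data or installing TensorFlow, here is a small sketch that replays per-epoch losses through a stand-in for the training loop. It is a hypothetical simplification, not Keras's actual implementation: `FakeModel`, `ThresholdCallback` and `fit` are names made up for this illustration. The only Keras behavior it assumes is that `fit()` calls `on_epoch_end(epoch, logs)` and then ends training if the callback has set `model.stop_training = True`.

```python
# Framework-free sketch of a Keras-style fit() loop consulting a callback.
# FakeModel, ThresholdCallback and fit() are hypothetical stand-ins,
# not TensorFlow APIs.


class FakeModel:
    """Holds only the flag that a callback sets to end training."""

    def __init__(self):
        self.stop_training = False


class ThresholdCallback:
    """Mirrors myCallback: request a stop once an epoch's loss is below a threshold."""

    def __init__(self, threshold=0.4):
        self.threshold = threshold
        self.model = None  # attached by fit(), the way Keras attaches self.model

    def on_epoch_end(self, epoch, logs=None):
        loss = (logs or {}).get("loss")
        if loss is not None and loss < self.threshold:
            print("\nLoss is low so cancelling training!")
            self.model.stop_training = True


def fit(model, epoch_losses, epochs, callbacks):
    """Replay pre-recorded per-epoch losses and return how many epochs ran."""
    for cb in callbacks:
        cb.model = model
    epochs_run = 0
    for epoch, loss in enumerate(epoch_losses[:epochs]):
        epochs_run += 1
        for cb in callbacks:
            cb.on_epoch_end(epoch, {"loss": loss})
        # In this sketch the flag is checked right after the epoch's callbacks,
        # so a stop request prevents the next epoch from starting.
        if model.stop_training:
            break
    return epochs_run


# The two per-epoch losses reported in the log above.
logged_losses = [0.4729, 0.3570]
print(fit(FakeModel(), logged_losses, epochs=5, callbacks=[ThresholdCallback(0.4)]))
```

Replaying the logged losses, the first epoch (0.4729) is above the threshold and the second (0.3570) is below it, so the sketch stops after 2 of the allowed 5 epochs, matching the original run.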

边栏推荐

- WebGL谷歌提示内存不够(RuntimeError:memory access out of bounds,火狐提示索引超出界限(RuntimeError:index out of bounds)

- What does openid mean? What does "token" mean?

- Data preprocessing: discrete feature coding method

- Iframe is simple to use, iframe is obtained, iframe element value is obtained, and iframe information of parent page is obtained

- 检测点是否在多边形内

- 六级易混词整理

- atguigu----01-脚手架

- Various synchronous learning notes

- Trendmicro:apex one server tools folder

- 2021 "Ai China" selection

猜你喜欢

wav文件(波形文件)格式分析与详解

Webgl Google prompt memory out of bounds (runtimeerror:memory access out of bounds, Firefox prompt index out of bounds)

C#程序终止问题CLR20R3解决方法

atguigu----17-生命周期

WebGL谷歌提示内存不够(RuntimeError:memory access out of bounds,火狐提示索引超出界限(RuntimeError:index out of bounds)

View all listening events on the current page by browser

Jmeter接口测试,关联接口实现步骤(token)

C language: count the number of words in a paragraph

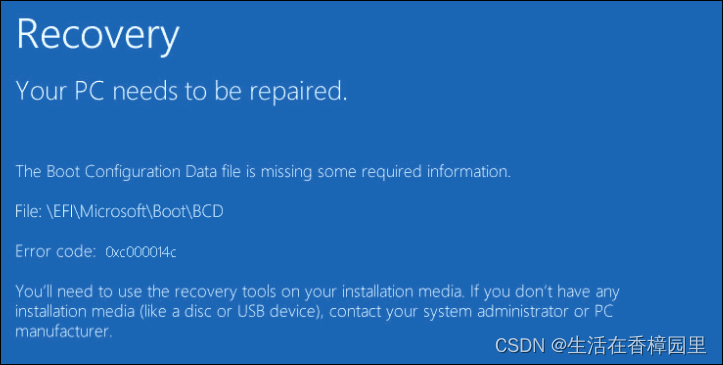

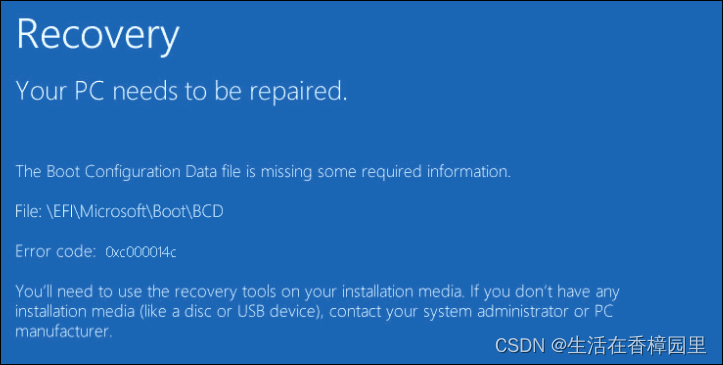

UEFI:修复 EFI/GPT Bootloader

UEFI: repair efi/gpt bootloader

随机推荐

《乔布斯传》英文原著重点词汇笔记(一)【 Introduction 】

Unity发布webGL的时候JsonConvert.SerializeObject()转换失败

Unknown table 'column of MySQL_ STATISTICS‘ in information_ schema (1109)

The city chain technology platform is realizing the real value Internet reconstruction!

Meaning of Jieba participle part of speech tagging

Sharepoint:sharepoint server 2013 and adrms Integration Guide

ICer必须知道的35个网站

2021 "Ai China" selection

In Section 5 of bramble pie project practice, Nokia 5110 LCD is used to display Hello World

WebGL发布之后不可以输入中文解决方案

第十五周作业

一、单个神经元网络构建

Sharepoint:sharepoint 2013 with SP1 easy installation

¥3000 | 录「TBtools」视频,交个朋友&拿现金奖!

RMB 3000 | record "tbtools" video, make a friend and get a cash prize!

mysql之Unknown table ‘COLUMN_STATISTICS‘ in information_schema (1109)

Fault: 0x800ccc1a error when outlook sends and receives mail

How can games copied from other people's libraries be displayed in their own libraries

《乔布斯传》英文原著重点词汇笔记(五)【 chapter three 】

jmeter中csv参数化