当前位置:网站首页>Sorting out common problems after crawler deployment

Sorting out common problems after crawler deployment

2022-06-23 06:06:00 【Python Yixi】

After the crawler local test run passes , Some students can't wait to deploy the program to the server for formal operation , Then after running for a period of time, there are various errors and even the program exits , Here are some common problems for reference :

1、 Local debugging only indicates that the process from request to data analysis has been completed , But it does not mean that the program can collect data stably for a long time , The collected websites need to be tested automatically , Generally, it is recommended to conduct stability test according to a certain number of times or time , Take a look at the response and anti - crawling of the website

2、 The program needs to add exception protection for data processing , If the data requirements are not high , It can be run in a single thread , If the data requirements are high , It is recommended to add multithreading , Improve the processing performance of the program

3、 According to the collected data requirements and website conditions , Configure the appropriate crawler agent , This can reduce the risk of website anti - crawling , Crawler agent selection comparison , Focus on network latency 、IP Pool size and request success rate , In this way, you can quickly select the appropriate crawler agent products

Here is a demo Program , Used to count requests and IP Distribution , It can also be modified into a data acquisition program as required :

#! -- encoding:utf-8 --

import requests

import random

import requests.adapters

# Target page to visit

targetUrlList = [

"https://",

"https://",

"https://",

]

# proxy server ( The product's official website h.shenlongip.com)

proxyHost = " h.shenlongip.com"

proxyPort = " "

# Proxy authentication information

proxyUser = "username"

proxyPass = "password"

proxyMeta = "http://%(user)s:%(pass)[email protected]%(host)s:%(port)s" % {

"host": proxyHost,

"port": proxyPort,

"user": proxyUser,

"pass": proxyPass,

}

# Set up http and https All visits are made with HTTP agent

proxies = {"http": proxyMeta,

"https": proxyMeta,

}

# Set up IP Switch head

tunnel = random.randint(1, 10000)

headers = {"Proxy-Tunnel": str(tunnel)}class HTTPAdapter(requests.adapters.HTTPAdapter):

def proxy_headers(self, proxy):

headers = super(HTTPAdapter, self).proxy_headers(proxy)

if hasattr(self, 'tunnel'):

headers['Proxy-Tunnel'] = self.tunnel

return headers

# Visit the website three times , Use the same tunnel sign , Can maintain the same extranet IP

for i in range(3):

s = requests.session()

a = HTTPAdapter()

# Set up IP Switch head

a.tunnel = tunnel

s.mount('https://', a)for url in targetUrlList:

r = s.get(url, proxies=proxies)

print r.text

边栏推荐

- 金融科技之高效办公(一):自动生成信托计划说明书

- Cloud native database is the future

- Ant Usage Summary (III): batch packaging apk

- SQL表名与函数名相同导致SQL语句错误。

- 阿里云 ACK One、ACK 云原生 AI 套件新发布,解决算力时代下场景化需求

- jvm-04. Object's memory layout

- Leetcode topic analysis: factorial training zeroes

- Adnroid activity screenshot save display to album view display picture animation disappear

- Wechat tried out the 1065 working system, and was forced to leave work at 18:00; It is said that Apple will no longer develop off screen fingerprint identification; Amd chief independent GPU architect

- Cryptography series: certificate format representation of PKI X.509

猜你喜欢

jvm-04.对象的内存布局

Efficient office of fintech (I): automatic generation of trust plan specification

Explicability of counter attack based on optimal transmission theory

jvm-01.指令重排

编址和编址单位

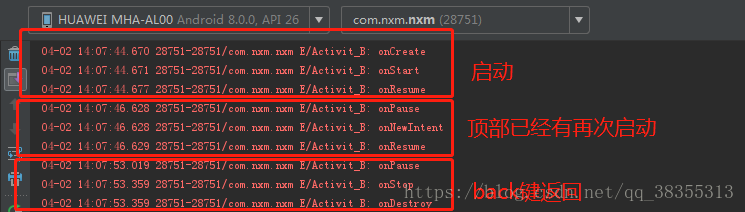

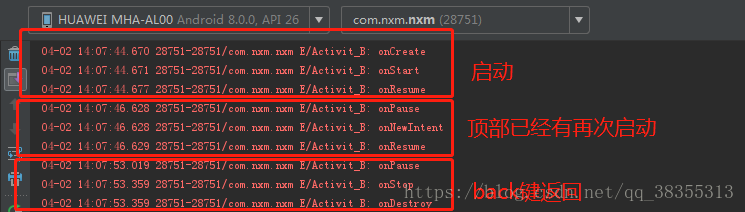

Activity startup mode and life cycle measurement results

Visual studio debugging tips

Activity启动模式和生命周期实测结果

【Leetcode】431. Encode N-ary Tree to Binary Tree(困难)

最优传输理论下对抗攻击可解释性

随机推荐

Android handler memory leak kotlin memory leak handling

Pyinstaller sklearn报错的问题

Pat class B 1017 C language

jvm-05. garbage collection

Infotnews | which Postcard will you receive from the universe?

APP SHA1获取程序 百度地图 高德地图获取SHA1值的简单程序

【Cocos2d-x】自定义环形菜单

Pat class B 1025 reverse linked list

Explanation of penetration test process and methodology (Introduction to web security 04)

PAT 乙等 1012 C语言

exe闪退的原因查找方法

论文笔记: 多标签学习 LSML

使用aggregation API扩展你的kubernetes API

PAT 乙等 1019 C语言

ORB_ Slam2 operation

Visual studio debugging tips

PAT 乙等 1021 个位数统计

关于安装pip3 install chatterbot报错的问题

使用链表实现两个多项式相加和相乘

Multiple strings for leetcode topic resolution