Paper notes: multi-label learning DM2L

2022-06-24 08:21:00 【Minfan】

Abstract: Sharing my understanding of the paper. For the original, see Ma, Z.-C., & Chen, S.-C. (2021). Expand globally, shrink locally: Discriminant multi-label learning with missing labels. Pattern Recognition, 111, 107675.

1. Contributions of the paper

- Optimizes both globally and locally;

- Supports nonlinear transformation through kernel functions;

- Provides a thorough theoretical analysis.

2. Basic symbols

| Symbol | Meaning | Remarks |
|---|---|---|
| $\mathbf{X} \in \mathbb{R}^{n \times d}$ | Attribute matrix | |
| $\mathbf{X}_k \in \mathbb{R}^{n_k \times d}$ | Attribute submatrix of the instances with label $k$ | |
| $\mathbf{Y} \in \{-1, +1\}^{n \times c}$ | Label matrix | |
| $\tilde{\mathbf{Y}} \in \{-1, +1\}^{n \times l}$ | Observed label matrix | |
| $\mathbf{\Omega} \subseteq \{1, \dots, n\} \times \{1, \dots, c\}$ | Set of observed label positions | |
| $\mathbf{W} \in \mathbb{R}^{m \times l}$ | Coefficient matrix | Still a linear model |
| $\mathbf{w}_i \in \mathbb{R}^m$ | Coefficient vector of one label | |
| $\mathbf{C} \in \mathbb{R}^{l \times l}$ | Label correlation matrix | Pairwise correlation; not symmetric |

3. Algorithm

Basic optimization objective:

$$\min \frac{1}{2} \|R_{\Omega}(\mathbf{XW}) - \tilde{\mathbf{Y}}\|_F^2 + \lambda_d \|\mathbf{XW}\|_* \tag{1}$$

where

- The loss term ignores missing entries ($R_{\Omega}$ keeps only the observed positions), which is standard practice.

- The nuclear-norm regularization term acts on the prediction matrix $\mathbf{XW}$ itself, which is a little strange.
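To make objective (1) concrete, here is a minimal NumPy sketch of how its value could be evaluated for a given $\mathbf{W}$. It is an illustration only, not the paper's solver. The function name `masked_nuclear_objective` and the boolean `mask` array (a dense stand-in for $\Omega$) are assumptions introduced here, and unobserved entries of the observed label matrix are assumed to be stored as 0.

```python
import numpy as np

def masked_nuclear_objective(X, W, Y_obs, mask, lam_d):
    """Value of objective (1) for a given W.

    mask is a boolean array, True where a label was observed. It is a
    hypothetical dense encoding of the index set Omega.
    """
    P = X @ W                                   # prediction matrix XW
    # R_Omega: keep residuals on observed positions, zero elsewhere
    # (assumes Y_obs holds 0 at unobserved positions).
    residual = np.where(mask, P - Y_obs, 0.0)
    loss = 0.5 * np.sum(residual ** 2)          # squared Frobenius norm
    nuclear = np.linalg.norm(P, ord="nuc")      # sum of singular values of XW
    return loss + lam_d * nuclear
```

Only the observed positions contribute to the loss, while the nuclear norm is taken over the full prediction matrix, including the positions whose labels are missing.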

Optimization objective after taking the label structure into account:

$$\min \frac{1}{2} \|R_{\Omega}(\mathbf{XW}) - \tilde{\mathbf{Y}}\|_F^2 + \lambda_d \left(\sum_{k = 1}^c \|\mathbf{X}_k\mathbf{W}\|_* - \|\mathbf{XW}\|_*\right), \tag{2}$$

where

- $\|\mathbf{X}_k\mathbf{W}\|_*$ captures the local label structure; the smaller, the better;

- $\|\mathbf{XW}\|_*$ captures the global label structure; the larger, the better (more separable, more informative).

- These two points are where the paper's title comes from.
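The regularizer in (2) can be sketched in a few lines of NumPy. This is a hedged illustration, not the authors' code: the name `local_global_regularizer` is hypothetical, and $\mathbf{X}_k$ is assumed to consist of the rows whose observed label $k$ equals $+1$.

```python
import numpy as np

def local_global_regularizer(X, W, Y_obs):
    """Sum of local nuclear norms minus the global nuclear norm, as in (2)."""
    P = X @ W                                    # predictions for all instances
    global_term = np.linalg.norm(P, ord="nuc")   # ||XW||_*
    local_term = 0.0
    for k in range(Y_obs.shape[1]):
        rows = Y_obs[:, k] == 1                  # instances observed with label k
        if rows.any():
            # X_k W is the block of predictions for those instances.
            local_term += np.linalg.norm(P[rows], ord="nuc")
    return local_term - global_term
```

Minimizing this quantity pushes the predictions of instances sharing a label toward a low-rank block (shrink locally) while keeping the overall prediction matrix high-rank (expand globally).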

Optimization objective with a nonlinear transformation:

$$\min \frac{1}{2} \|R_{\Omega}(\mathbf{XW}) - \tilde{\mathbf{Y}}\|_F^2 + \lambda_d \left(\sum_{k = 1}^c \|f(\mathbf{X}_k)\mathbf{W}\|_* - \|f(\mathbf{X})\mathbf{W}\|_*\right), \tag{5}$$

where $f(\cdot)$ is the nonlinear transformation induced by a kernel function.
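One way to picture the kernelized regularizer in (5) is to replace $f(\mathbf{X})$ with an empirical kernel map, so that each instance is represented by its kernel values against all training instances. The sketch below does this with an RBF kernel. Everything specific here is an assumption made for illustration: the RBF choice, the `gamma` parameter, the function names, and the resulting shape of $\mathbf{W}$ (one row per training instance). The notes do not say how the paper realizes $f(\cdot)$.

```python
import numpy as np

def rbf_kernel(A, B, gamma=1.0):
    """RBF kernel matrix with entries exp(-gamma * ||a_i - b_j||^2)."""
    sq_dist = (np.sum(A ** 2, axis=1)[:, None]
               + np.sum(B ** 2, axis=1)[None, :]
               - 2.0 * A @ B.T)
    # Clip tiny negative values caused by floating-point rounding.
    return np.exp(-gamma * np.maximum(sq_dist, 0.0))

def kernel_local_global_regularizer(X, W, Y_obs, gamma=1.0):
    """Regularizer of (5), with f(X) taken as the kernel matrix k(X, X).

    Under this assumed feature map, W has one row per training instance.
    """
    F = rbf_kernel(X, X, gamma)                  # stand-in for f(X)
    global_term = np.linalg.norm(F @ W, ord="nuc")
    local_term = 0.0
    for k in range(Y_obs.shape[1]):
        rows = Y_obs[:, k] == 1                  # instances observed with label k
        if rows.any():
            local_term += np.linalg.norm(F[rows] @ W, ord="nuc")
    return local_term - global_term
```

The structure mirrors the linear regularizer in (2); only the feature representation changes.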

4. Summary

- Another pile of theoretical proofs .

边栏推荐

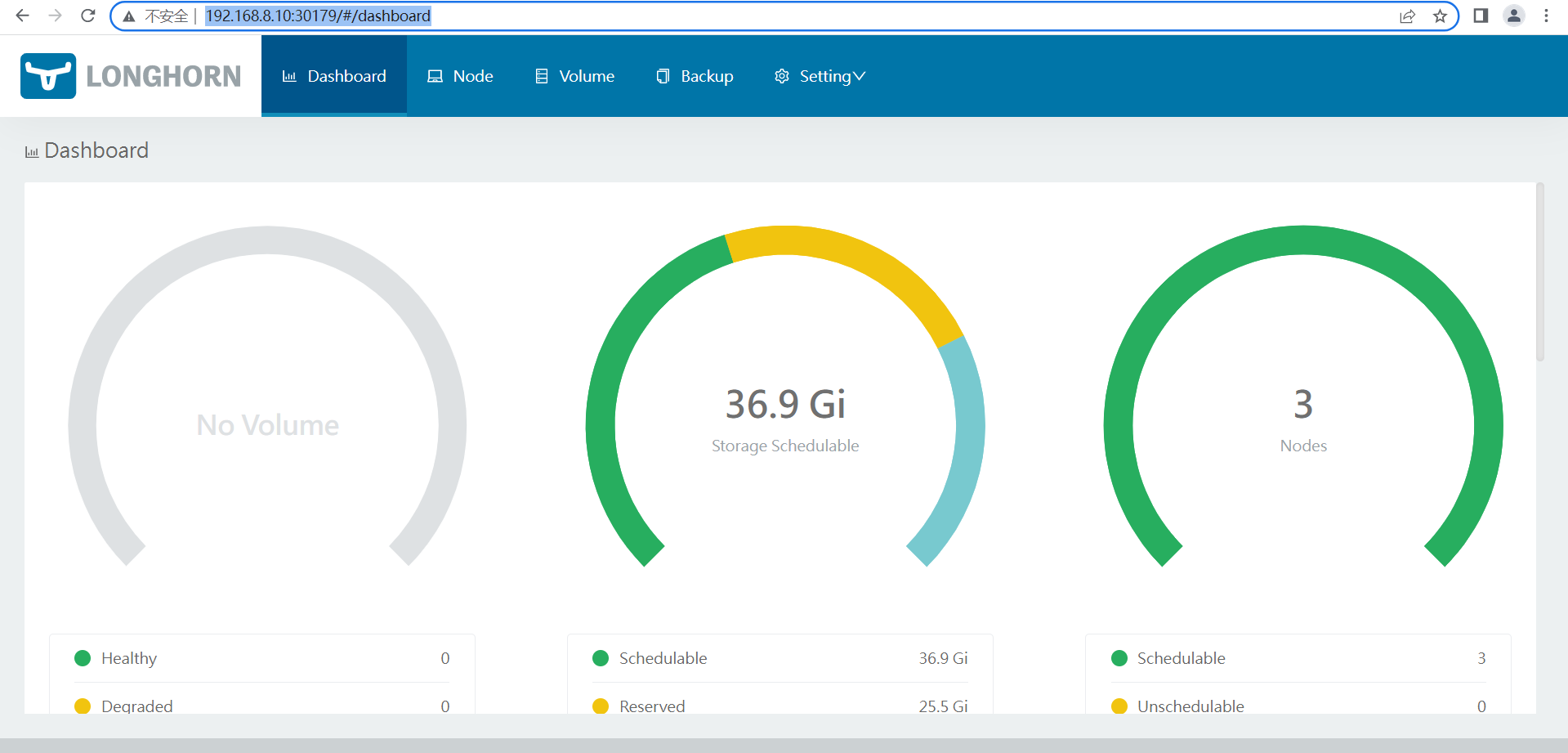

- longhorn安装与使用

- Getting started with crawler to giving up 06: crawler play Fund (with code)

- Analysis of abnormal problems in domain name resolution in kubernetes

- VsCode主题推荐

- Phonics

- Atguigu---16-custom instruction

- Learning event binding of 3D visualization from scratch

- C# Lambda

- 软件工程导论——第二章——可行性研究

- 宝塔面板安装php7.2安装phalcon3.3.2

猜你喜欢

2021-03-09 COMP9021第七节课笔记

对于flex:1的详细解释,flex:1

longhorn安装与使用

LabVIEW查找n个元素数组中的质数

The first exposure of Alibaba cloud's native security panorama behind the only highest level in the whole domain

宝塔面板安装php7.2安装phalcon3.3.2

Leetcode 207: course schedule (topological sorting determines whether the loop is formed)

解决笔记本键盘禁用失败问题

有关iframe锚点,锚点出现上下偏移,锚点出现页面显示问题.iframe的srcdoc问题

搜索与推荐那些事儿

随机推荐

Small sample fault diagnosis - attention mechanism code - Implementation of bigru code parsing

11-- longest substring without repeated characters

【毕业季】你好陌生人,这是一封粉色信笺

Analysis of abnormal problems in domain name resolution in kubernetes

2021-03-04 COMP9021第六节课笔记

Simple summary of lighting usage

根据网络上的视频的m3u8文件通过ffmpeg进行合成视频

OC Extension 检测手机是否安装某个App(源码)

软件过程与项目管理期末复习与重点

解决笔记本键盘禁用失败问题

Optimization and practice of Tencent cloud EMR for cloud native containerization based on yarn

2021-03-16 COMP9021第九节课笔记

487. number of maximum consecutive 1 II ●●

Markdown 实现文内链接跳转

You get in Anaconda

Auto usage example

longhorn安装与使用

1279_ Vsock installation failure resolution when VMware player installs VMware Tools

js滚动div滚动条到底部

More than observation | Alibaba cloud observable suite officially released