当前位置:网站首页>Leetcode lecture on algorithm interview for large factories 2 Time space complexity

Leetcode lecture on algorithm interview for large factories 2 Time space complexity

2022-06-24 01:05:00 【Whole stack Xiaochen】

Finish the interview of big factory algorithm leetcode Work: 2. Time and space complexity

Video tutorial ( Efficient learning ): Click to learn

Catalog :

6. Depth first & breadth-first

10. recursive & Divide and conquer

What time complexity

Time complexity is a function , It qualitatively describes the running time of the algorithm , In software development , Time complexity is used to facilitate developers to estimate the running time of programs , The number of operation units of the algorithm is usually used to represent the time consumed by the program , Default here CPU The running time of each unit is the same . Suppose the problem size of the algorithm is n, So the number of operation units is a function f(n) To express , With the size of the data n The increase of , The growth rate of algorithm execution time and f(n) There is a certain relationship between the growth rate of , This is called the asymptotic time complexity of the algorithm , Time complexity , Write it down as O(f(n)), among n Refers to the number of instruction sets .

What is big O

Big O Used to represent the upper bound of algorithm execution time , It can also be understood as the worst-case running time , The amount and order of data have a great impact on the execution time of the algorithm , What is assumed here is the running time of an input data with the algorithm , It takes longer than other data .

The time complexity of insertion sort is, we all say O(n^2) , However, the time complexity of insertion sorting has a lot to do with the input data , If the input data is completely ordered , The time complexity of insertion sorting is O(n), If the input data is completely in reverse order , Then the time complexity is O(n^2), So the worst is O(n^2) Time complexity of , We say that the time complexity of insertion sorting is O(n^2).

Quick sort is O(nlogn), The time complexity of quick sorting in the worst case is O(n^2) , Usually O(nlogn), So strictly from the big O By definition , The time complexity of quicksort should be O(n^2), But we still say that the time complexity of quick sorting is O(nlogn), This is the default rule in the industry .

The time complexity of binary search is O(logn), Halve the data size every time , Until the data size is reduced to 1, Finally, it is equivalent to seeking 2 The power of is equal to n, It's equivalent to dividing logn Time .

The time complexity of merging and sorting is O(nlogn), Top down merger , From the data scale to n Split to 1, The time complexity is O(logn), Then the time complexity of merging up is O(n), The overall time complexity is O(nlogn).

The traversal complexity of a tree is generally O(n),n Is the number of nodes in the tree , The time complexity of selecting sorting is O(n^2), We will gradually analyze the complexity of each data structure and algorithm in the corresponding chapters . For more time complexity analysis and derivation, please refer to the main theorem .

Some rules for analyzing complexity

- Add multiple time complexity , If it's all with n relevant , Take the one with high complexity , for example :O(nlogn + n) = O(nlogn),O(nlogn + n^2) = O(n^2).

- Add multiple time complexity , If some of these items are complex and n If it is not relevant, you cannot ignore any items , for example :O(AlogA + B),O(AlogA + B^2)

- The two loops execute in turn , Take the one with high complexity , Nesting multiple loops requires cumulative complexity .

Common time complexity :

- O(1): Constant complexity

let n = 100;

- O(logn): Logarithmic complexity

// Binary search non recursive

var search = function (nums, target) {

let left = 0,

right = nums.length - 1;

while (left <= right) {

let mid = Math.floor((left + right) / 2);

if (nums[mid] === target) {

return mid;

} else if (target < nums[mid]) {

right = mid - 1;

} else {

left = mid + 1;

}

}

return -1;

};- O(n): Linear time complexity

for (let i = 1; i <= n; i++) {

console.log(i);

}- O(n^2): square

for (let i = 1; i <= n; i++) {

for (let j = 1; j <= n; j++) {

console.log(i);

}

}

for (let i = 1; i <= n; i++) {

for (let j = 1; j <= 30; j++) { // Nested second level if and n Irrelevant is not O(n^2)

console.log(i);

}

}- O(2^n): Exponential complexity

for (let i = 1; i <= Math.pow(2, n); i++) {

console.log(i);

}- O(n!): Factorial

for (let i = 1; i <= factorial(n); i++) {

console.log(i);

}The time complexity of basic operations of common data structures

The time complexity of recursion

The time complexity of recursion is related to the depth of recursion

// Recursion n layer Time complexity O(n)

function sum2(n) {

if (n === 0) {

return 0;

}

return n + sum2(n - 1);

}

// Two points search Recursion logn layer O(logn)

var search = function (nums, target) {

return search_interval(nums, target, 0, nums.length - 1)

};

function search_interval(nums, target, left, right) {

if (left > right) {

return -1

}

let mid = left + Math.floor((right - left) / 2);

if (nums[mid] === target) {// Determine the target value and the size of the intermediate element

return mid

} else if (nums[mid] < target) {// Recursively find the target element

return search_interval(nums, target, mid + 1, right)

} else {

return search_interval(nums, target, left, mid - 1)

}

}

// Fibonacci number : Seeking Fibonacci number by recursion , Total recursion n layer , The height of a binary tree is n, From our basic knowledge, we can know ,

// One height is n A binary tree can have at most 2^n - 1 Nodes , That is, the number of recursive procedure function calls , So the time complexity is O(2^n).

// We can see that the recursive tree contains a lot of repeated calculations .

//0, 1,1,2,3 ...

var fib = function (N) {

if (N == 0) return 0;

if (N == 1) return 1;

return fib(N - 1) + fib(N - 2);

};Time complexity optimization

- Adopt better algorithms : give an example :1+2+3...n from

1~nSum up , Direct circulation method ,for i->n: sum+=i , We can also use the summation formula :n(n+1)/2. For example, some problems can be found by dichotomy . - Space for time , Time is precious , We calculate a very time-consuming task , It may take a long time , Sudden power failure , Or accidents may lead to very large losses , Space is cheap , At most, we buy servers with larger memory , Money can solve , There are many such examples in the following chapters , For example, use

setormapSpeed up the search , Use binary search tree or dictionary tree to speed up the search of strings .

An example of time complexity analysis

There is an array of strings , Sorts each string in the array alphabetically , Then sort the entire string array in dictionary order . Find the time complexity of the whole operation .

If I say the time complexity is O(n*nlogn + nlogn) = O(n^2logn) Am I right? , Take time to think .

Let's analyze , Suppose the length of the longest string is s, Array has n A string , Sort each string O(slogs), Sorts each string in the array alphabetically O(n * slogs), Sort the entire string array by Dictionary O(s * nlogn), So the final time complexity is O(n * slogs) + O(s * nlogn) = O(nslogs + nslogn) = O(ns * (logs+logn))

Spatial complexity

Space complexity refers to the storage space occupied by the algorithm in the running process , Spatial complexity (Space Complexity) Write it down as S(n) , Still use big O To express . Take advantage of the spatial complexity of the program , You can have a pre estimate of how much memory a program needs to run .

Common spatial complexity

- One dimensional array space , If stored n Elements , Spatial complexity

O(n) - Two dimensional array space , All in all n An array , Each array stores n Elements , Spatial complexity

O(n^2) - Constant space complexity

O(1)

Recursive spatial complexity

//O(1)

function sum1(n) {

let ret = 0;

for (let i = 0; i <= n; i++) {

ret += i;

}

return ret;

}

//O(n), Recursion n layer , The recursive stack space is O(n) Complexity

function sum2(n) {

if (n === 0) {

return 0;

}

return n + sum2(n - 1);

}

//O(logn), Recursion logn layer , The recursive stack space is O(logn) Complexity

var search = function (nums, target) {

return search_interval(nums, target, 0, nums.length - 1)

};

function search_interval(nums, target, left, right) {

if (left > right) {

return -1

}

let mid = left + Math.floor((right - left) / 2);

if (nums[mid] === target) {// Determine the target value and the size of the intermediate element

return mid

} else if (nums[mid] < target) {// Recursively find the target element

return search_interval(nums, target, mid + 1, right)

} else {

return search_interval(nums, target, left, mid - 1)

}

}边栏推荐

- Vs2022 save formatting plug-in

- Perhaps the greatest romance of programmers is to commemorate their dead mother with a software

- Niu Xuechang's anniversary celebration: software promotion, limited time and free registration code!

- Map design

- Thread safety and lock optimization

- 所见之处都是我精准定位的范畴!显著图可视化新方法开源

- Experience summary of 9 Android interviews, bytes received, Ali, advanced Android interview answer

- LSF打开Job idle information以看job的cpu time/elapse time使用情况

- Google Earth engine (GEE) - verification results used by NDVI, NDWI and NDBI to increase classification accuracy (random forest and cart classification)

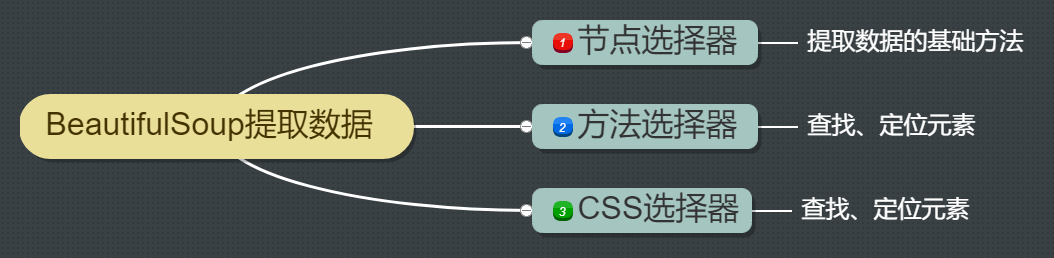

- 13 `bs_ duixiang. Tag tag ` get a tag object

猜你喜欢

Social recruitment interview is indispensable -- 1000 interview questions for Android engineers from Internet companies

C语言:结构体数组实现找出最低分学生记录

Isn't this another go bug?

机器学习中 TP FP TN FN的概念

The concept of TP FP TN FN in machine learning

钟珊珊:被爆锤后的工程师会起飞|OneFlow U

Cross domain and jsonp

【Flutter】如何使用Flutter包和插件

13 `bs_ duixiang. Tag tag ` get a tag object

paddle使用指南

随机推荐

持续测试和质量保障的关系

【osg】OSG开发(04)—创建多个场景视图

skywalking 安装部署实践

Handwritten digit recognition using SVM, Bayesian classification, binary tree and CNN

Building a digital software factory -- panoramic interpretation of one-stop Devops platform

分别用SVM、贝叶斯分类、二叉树、CNN实现手写数字识别

Empty encoded password warning reason

[iccv workshop 2021] small target detection based on density map: coarse-grained density map guided object detection in aerial images

Talk to Wu Jiesheng, head of Alibaba cloud storage: my 20 years of data storage (unlimited growth)

想开户炒股,通过网上进行股票开户安全吗?-

用一个软件纪念自己故去的母亲,这或许才是程序员最大的浪漫吧

Relationship between continuous testing and quality assurance

Application configuration management, basic principle analysis

Version ` zlib 1.2.9 "not found (required by / lib64 / libpng16.so.16)

[technology planting grass] on the "double 11" of this year, Tencent cloud lightweight servers will be collected in a fair manner

这不会又是一个Go的BUG吧?

GNN上分利器!与其绞尽脑汁炼丹,不如给你的GNN撒点trick吧

【虹科案例】3D数据如何成为可操作的信息?– 对象检测和跟踪

Zhongshanshan: engineers after being blasted will take off | ONEFLOW u

After the deployment of Beidou navigation system, why didn't we launch a high-precision map similar to Google maps?