Livestream recap | A detailed look at the architecture of Koordinator, the cloud-native co-location system (full slides attached)

2022-06-24 08:15:00 【Alibaba cloud native】

Authors: Zhang Zuowei, Li Tao

In April 2022, Koordinator, Alibaba's cloud-native co-location system, was officially open-sourced. After several months of iteration, Koordinator has shipped four releases, and it helps enterprise customers improve the efficiency, stability, and compute cost of their cloud-native workloads.

Yesterday (June 15), in the Alibaba Cloud live studio, two technical experts from the Koordinator community, Zhang Zuowei (Youyi) and Li Tao (Lv Feng), started from the project's architecture and features and explained how Koordinator handles the challenges of co-location scenarios, in particular how it improves the efficiency and stability of workloads that run co-located, along with their thinking and plans for the project's technical evolution. We have summarized the core content of the livestream here and hope it gives you some useful insight.

Click the link to watch the replay:

https://yqh.aliyun.com/live/detail/28787

Follow the Alibaba Cloud Native official account and reply 【0615】 in the chat to get the full slide deck.

Introduction to co-location technology and its development

Co-location can be understood from two perspectives. At the node level, co-location means deploying multiple containers on the same node, where the applications in those containers include both online and offline types. At the cluster level, co-location means deploying multiple applications in one cluster and using prediction to analyze application characteristics, so that workloads shave peaks and fill valleys in their resource usage and cluster resource utilization improves.

With this understanding, we can define the problems co-location targets and the technical approach. In essence, our motivation for co-location comes from the relentless pursuit of resource efficiency in the data center. An Accenture report shows that in 2011 the average machine utilization of public cloud data centers was below 10%, which means enterprises pay a very high resource cost. At the same time, with the rapid development of big data technology, computing jobs demand more and more resources. In fact, moving big data to the cloud in a cloud-native way has become an inevitable trend. According to a Pepperdata survey from December 2021, a considerable number of enterprise big data platforms have begun migrating to cloud-native technology: more than 77% of respondents expected 50% of their big data applications to be migrated to Kubernetes by the end of 2021. Co-deploying batch jobs with online services has therefore become the standard choice for co-location solutions in the industry. Public data shows that leading companies in this area have greatly improved their resource utilization through co-location.

Within co-location technology, administrators in different roles focus on different concerns.

Cluster resource administrators want to simplify the management of cluster resources, gain clear insight into the resource capacity, allocation, and usage of every application, and raise cluster resource utilization in order to reduce IT costs.

Administrators of online applications care more about interference between co-located containers. Co-location makes resource contention more likely, application response times develop a long tail (tail latency), and service quality drops.

Administrators of offline applications expect the co-location system to provide tiered, reliable resource overcommitment that meets the differentiated resource quality requirements of different job types.

To address these problems, Koordinator provides the following mechanisms, which meet the technical requirements that the different roles place on a co-location system:

- A resource priority and quality of service (QoS) model designed for co-location scenarios

- A stable and reliable resource overcommitment mechanism

- Fine-grained container resource orchestration and isolation

- Scheduling enhancements for multiple types of workloads

- Fast onboarding for complex types of workloads

Introduction to Koordinator

The following figure shows Koordinator's overall architecture and the division of responsibilities among its components. The green parts are components of native K8s, and the blue parts are the extensions Koordinator builds on top of them. Viewed as a whole, the architecture has two dimensions: central control and single-node resource management. On the central side, Koordinator adds extension capabilities both inside and outside the scheduler. On the node side, Koordinator provides two components, Koordlet and Koord Runtime Proxy, which are responsible for fine-grained management of node resources and for QoS guarantees.

The detailed functions of each Koordinator component are as follows:

Koord-Manager

- SLO-Controller: provides the core control capabilities, including resource overcommitment, co-location SLO management, and fine-grained scheduling enhancements.

- Recommender: provides elasticity capabilities for applications, built around resource profiling.

- Colocation Profile Webhook: simplifies use of the Koordinator co-location model, gives applications one-click onboarding, and automatically injects the relevant priority and QoS configuration.

Koord extensions for Scheduler: scheduling enhancements for co-location scenarios.

Koord descheduler: provides a flexible, extensible descheduling mechanism.

Koord Runtime Proxy: acts as a proxy between Kubelet and the runtime. It meets the resource management needs of different scenarios, provides a plugin registration framework, and provides a mechanism for injecting resource parameters.

Koordlet: guarantees Pod QoS on the node side. It provides fine-grained container metric collection as well as interference detection and adjustment policies, and it supports a set of Runtime Proxy plugins for fine-grained injection of isolation parameters.

A core concept in Koordinator's design model is priority (Priority). Koordinator defines four levels: Product (Prod), Mid, Batch, and Free. A Pod must specify the resource priority it requests, and the scheduler schedules based on the total and allocated amount of each resource priority. The total resources of each priority are affected by the requests and usage of higher-priority resources; for example, Prod resources that have been requested but are not used are reallocated at Batch priority. Koordinator publishes the capacity of each resource priority on a node in the Node object as standard extended resources.

The following figure shows the capacity of each resource priority on a node. The black line, total, is the node's total physical resources, and the red line is the actual usage of high-priority Prod workloads. The gap between the blue line and the black line reflects how the overcommitted Batch-priority resources change: when Prod usage is in a trough, Batch priority obtains more overcommitted resources. In fact, how aggressive or conservative the resource priority policy is determines how much of the cluster's resources can be overcommitted, which can also be seen from the green line in the figure, corresponding to Mid-priority overcommitment.

As shown in the following table, Koordinator defines each resource priority as a standard K8s PriorityClass, which represents the priority of the resources a Pod requests. When resources at multiple priorities are overcommitted and node resources become tight, low-priority Pods are suppressed or evicted. Koordinator also provides a Pod-level sub-priority for fine-grained control in the scheduler (queuing, preemption, and so on).

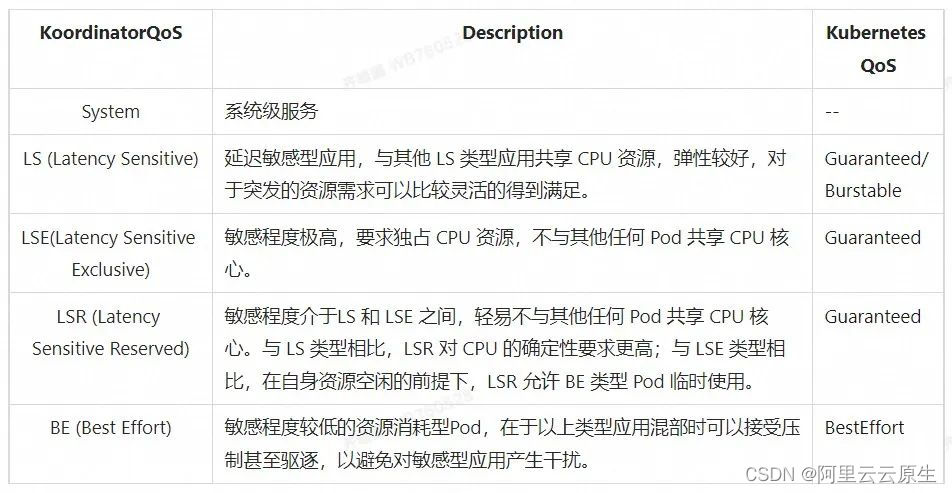

Another core concept in Koordinator's design is quality of service (QoS). Koordinator defines the QoS model as an extension at the Pod annotation level. It represents the resource quality a Pod receives while running on a node, mainly expressed through different isolation parameters; when node resources are tight, the needs of higher QoS classes are satisfied first. As shown in the following table, Koordinator divides QoS into three classes: System (system-level services), Latency Sensitive (latency-sensitive online services), and Best Effort (resource-consuming offline applications). Depending on how sensitive the application's performance is, Latency Sensitive is further subdivided into LSE, LSR, and LS.

Priority and QoS are two orthogonal dimensions and can in principle be combined freely. However, because of the model definitions and practical requirements, some combinations are constrained. The following table shows combinations commonly used in co-location scenarios, where "O" marks a commonly used combination and "X" marks a combination that is essentially never used.

Examples of how each combination is used in practice follow.

Typical scenarios:

- Prod + LS: typical online applications. They usually have strict latency requirements and high resource quality requirements, and also need some resource elasticity.

- Batch + BE: low-priority offline workloads in co-location scenarios. They tolerate considerable variation in resource quality, for example batch Spark/MR jobs and AI training jobs.

Enhancements of the typical scenarios:

- Prod + LSR/LSE: more sensitive online applications with very strict latency requirements, which accept giving up resource elasticity in exchange for better determinism (for example, CPU pinning).

- Mid/Free + BE: the main difference from "Batch + BE" is the resource quality requirement.

Atypical scenarios:

- Mid/Batch/Free + LS: low-priority online services, nearline computing, AI inference, and similar tasks. Compared with big data jobs, they cannot accept very low resource quality and interfere less with other applications; compared with typical online services, they tolerate lower resource quality, for example a certain amount of eviction.

Quick Start

Koordinator supports flexible co-location onboarding for many kinds of workloads. Here we use Spark as an example to show how to use overcommitted co-location resources. There are two ways to run Spark jobs in a K8s cluster. One is Spark Submit, where a local Spark client connects directly to the K8s cluster. This is simple and fast but lacks overall management capabilities, so it is mostly used for development and self-testing. The other is Spark Operator, shown in the figure below. It defines a SparkApplication CRD that describes a Spark job. The user submits a SparkApplication CR to the APIServer with kubectl, and Spark Operator then manages the job lifecycle and the Driver Pod.

With Koordinator's enhancements, the ColocationProfile Webhook automatically injects the co-location configuration (including QoS, Priority, and extended resources) into Spark job Pods, as shown below. Koordlet ensures on the node side that co-located Spark Pods do not affect the performance of online applications. Co-locating Spark with online applications effectively raises the overall resource utilization of the cluster.

    # Spark Driver Pod example
    apiVersion: v1
    kind: Pod
    metadata:
      labels:
        koordinator.sh/qosClass: BE
      ...
    spec:
      containers:
      - args:
        - driver
        ...
        resources:
          limits:
            koordinator.sh/batch-cpu: "1000"
            koordinator.sh/batch-memory: 3456Mi
          requests:
            koordinator.sh/batch-cpu: "1000"
            koordinator.sh/batch-memory: 3456Mi
      ...

Key technologies

Resource Overcommitment

When using a K8s cluster, users find it hard to estimate the resource usage of online applications accurately and do not know how to set a Pod's Request and Limit well, so to keep online applications stable they set generous resource specifications. In production, the actual CPU utilization of most online applications is low most of the time, often only 10% or 20%, which wastes a large amount of allocated but unused resources.

Koordinator reclaims and reuses these allocated-but-unused resources through its resource overcommitment mechanism. Based on metrics data, Koordinator estimates how many resources can be reclaimed from online application Pods (in the figure above, the part marked Reclaimed is reclaimable). These reclaimable resources can be given to low-priority workloads, such as offline jobs. To make them easy for low-priority workloads to consume, Koordinator updates them into NodeStatus (see the node info below). When an online application receives a burst of requests and needs more resources, Koordinator uses its QoS enhancement mechanisms to help the online application take these resources back and preserve service quality.

    # node info
    allocatable:
      koordinator.sh/batch-cpu: 50k # milli-core
      koordinator.sh/batch-memory: 50Gi

    # pod info
    annotations:
      koordinator.sh/resource-limit: {cpu: "5k"}
    resources:
      requests:
        koordinator.sh/batch-cpu: 5k # milli-core
        koordinator.sh/batch-memory: 5Gi
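The reclaim step described above can be sketched as a small calculation. This is only an illustration, not Koordinator's actual algorithm: the `PodUsage` type, the `reclaimable_cpu_milli` function, and the `margin_pct` safety margin are all hypothetical names and assumptions introduced here.

```python
from dataclasses import dataclass


@dataclass
class PodUsage:
    name: str
    cpu_request_milli: int  # CPU the Pod requested, in milli-cores
    cpu_used_milli: int     # CPU the Pod is observed to use, in milli-cores


def reclaimable_cpu_milli(pods, margin_pct=10):
    """Sum the requested-but-unused CPU of online Pods, minus a safety margin.

    margin_pct is a hypothetical knob: it keeps headroom above observed usage
    so that a small burst does not immediately collide with offline jobs.
    """
    total = 0
    for pod in pods:
        keep = pod.cpu_used_milli * (100 + margin_pct) // 100
        total += max(0, pod.cpu_request_milli - keep)
    return total


online = [PodUsage("web", 4000, 800), PodUsage("api", 2000, 1900)]
print(reclaimable_cpu_milli(online))  # 3120
```

In this example only the lightly loaded "web" Pod contributes reclaimable CPU; the busy "api" Pod contributes nothing because its usage plus the margin already exceeds its request.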

Load-balanced scheduling - Load-Aware Scheduling

Resource overcommitment can greatly increase cluster resource utilization, but it also makes uneven utilization across nodes more visible. The same imbalance exists without co-location; because native K8s does not support overcommitment, node utilization is usually not very high, which hides the problem to some degree. With co-location, utilization rises to a relatively high level and the problem is exposed.

Uneven utilization generally shows up as imbalance between nodes and as local load hotspots, and local hotspots can affect the overall performance of workloads. In addition, on heavily loaded nodes, online applications and offline jobs may contend seriously for resources, which degrades the runtime quality of online applications.

To solve this problem, the Koordinator scheduler provides a configurable scheduling plugin that controls cluster utilization. It relies mainly on the node metrics reported by koordlet. During scheduling, nodes whose load exceeds a threshold are filtered out, both so that Pods are not placed on a heavily loaded node where they cannot get good resource guarantees and so that the load on that node does not get worse. In the scoring stage, nodes with lower utilization are preferred. The plugin also uses a time window and a prediction mechanism to avoid scheduling too many Pods onto a cold node at once, which would overheat it some time later.
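The filter and score stages described above can be sketched roughly as follows. This is a simplified illustration under stated assumptions: utilization is a single CPU percentage per node, the 65% threshold is an arbitrary example value, the function names are hypothetical, and the time-window and prediction logic is left out.

```python
def filter_nodes(cpu_pct_by_node, threshold_pct):
    """Filter stage: drop nodes whose CPU utilization exceeds the threshold."""
    return {n: p for n, p in cpu_pct_by_node.items() if p <= threshold_pct}


def score_nodes(cpu_pct_by_node):
    """Score stage: the lower the utilization, the higher the score (0-100)."""
    return {n: 100 - p for n, p in cpu_pct_by_node.items()}


def pick_node(cpu_pct_by_node, threshold_pct=65):
    """Return the best-scoring node that passes the filter, or None."""
    candidates = filter_nodes(cpu_pct_by_node, threshold_pct)
    if not candidates:
        return None
    scores = score_nodes(candidates)
    # Sorting the names first makes ties deterministic.
    return max(sorted(scores), key=scores.get)


nodes = {"node-a": 80, "node-b": 40, "node-c": 55}
print(pick_node(nodes))  # node-b
```

Here node-a is filtered out for exceeding the threshold, and node-b wins the scoring stage because it has the lowest utilization among the remaining nodes.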

Application onboarding management - ClusterColocationProfile

From the start of the Koordinator project we considered how to lower the barrier to using a co-location system, so that users can roll out co-location gradually with little effort and benefit from it quickly. Koordinator therefore provides a ClusterColocationProfile CRD. With this CRD and its Webhook, you can enable co-location with one click, on demand, for specific Namespaces or workloads, without intruding on the components of an existing cluster. Based on the rules described in the CRD, the Webhook automatically injects Koordinator priority, QoS configuration, and other co-location settings into newly created Pods.

    apiVersion: config.koordinator.sh/v1alpha1
    kind: ClusterColocationProfile
    metadata:
      name: colocation-profile-example
    spec:
      namespaceSelector:
        matchLabels:
          koordinator.sh/enable-colocation: "true"
      selector:
        matchLabels:
          sparkoperator.k8s.io/launched-by-spark-operator: "true"
      qosClass: BE
      priorityClassName: koord-batch
      koordinatorPriority: 1000
      schedulerName: koord-scheduler
      labels:
        koordinator.sh/mutated: "true"
      annotations:
        koordinator.sh/intercepted: "true"
      patch:
        spec:
          terminationGracePeriodSeconds: 30

The ClusterColocationProfile above is an example. It means that Pods created by SparkOperator jobs in any Namespace labeled koordinator.sh/enable-colocation=true are converted into BE Pods. (Note: SparkOperator adds the label sparkoperator.k8s.io/launched-by-spark-operator=true to the Pods it creates, indicating that SparkOperator is responsible for the Pod.)
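To make the matching rule concrete, here is a rough sketch of what such a mutating step might do, with Pods and profiles modeled as plain dictionaries. It is not the real Webhook code: `matches` and `mutate_pod` are hypothetical helpers, and only a subset of the profile fields from the example is applied.

```python
import copy

# A subset of the example profile's spec, flattened for illustration.
PROFILE = {
    "namespaceSelector": {"koordinator.sh/enable-colocation": "true"},
    "selector": {"sparkoperator.k8s.io/launched-by-spark-operator": "true"},
    "qosClass": "BE",
    "priorityClassName": "koord-batch",
    "schedulerName": "koord-scheduler",
    "labels": {"koordinator.sh/mutated": "true"},
}


def matches(selector, labels):
    """True when every key/value in the selector is present in the labels."""
    return all(labels.get(k) == v for k, v in selector.items())


def mutate_pod(pod, namespace_labels, profile=PROFILE):
    """Return a mutated copy of the Pod if both selectors match, else the Pod."""
    pod_labels = pod["metadata"].get("labels", {})
    if not (matches(profile["namespaceSelector"], namespace_labels)
            and matches(profile["selector"], pod_labels)):
        return pod
    out = copy.deepcopy(pod)
    labels = out["metadata"].setdefault("labels", {})
    labels["koordinator.sh/qosClass"] = profile["qosClass"]
    labels.update(profile["labels"])
    out["spec"]["priorityClassName"] = profile["priorityClassName"]
    out["spec"]["schedulerName"] = profile["schedulerName"]
    return out


driver = {
    "metadata": {"labels": {"sparkoperator.k8s.io/launched-by-spark-operator": "true"}},
    "spec": {},
}
mutated = mutate_pod(driver, {"koordinator.sh/enable-colocation": "true"})
print(mutated["metadata"]["labels"]["koordinator.sh/qosClass"])  # BE
```

A Pod in a Namespace without the enable-colocation label, or a Pod not created by SparkOperator, passes through unchanged.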

Only the following steps are needed to complete co-location onboarding:

    $ kubectl apply -f profile.yaml
    $ kubectl label ns spark-job koordinator.sh/enable-colocation=true
    $ # Submit the Spark job; the Pods created by SparkOperator are co-located with other LS Pods.

QoS enhancement - CPU Suppress

To guarantee the runtime quality of online applications in co-location scenarios, Koordinator provides several QoS enhancement capabilities on the node side.

First, the CPU Suppress (dynamic CPU suppression) feature. As mentioned earlier, online applications usually do not use up all the resources they request, so a lot of resources sit idle. Besides being offered to newly created offline jobs through resource overcommitment, these idle resources can also be shared with the offline jobs already running on the node when no new offline jobs need to run, giving them as much idle CPU as possible. As shown in the figure, when koordlet finds that the online applications' resources are idle and the CPU used by offline jobs has not exceeded the safety threshold, the idle CPU within the safety threshold is shared with the offline jobs so that they run faster. The load of the online applications therefore determines how much CPU BE Pods can use in total. When the online load rises, koordlet uses CPU Suppress to throttle BE Pods and return the shared CPU to the online applications.
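The relationship between online load and the CPU available to BE Pods can be sketched as a one-line budget. This is an assumption-laden illustration: the function name is hypothetical, the 65% safety threshold is an example value, and system overhead is ignored.

```python
def be_cpu_budget_milli(node_capacity_milli, online_used_milli, threshold_pct=65):
    """CPU that BE Pods may use: the safe share of the node minus online usage.

    threshold_pct is a hypothetical safety threshold, expressed as a
    percentage of the node's total CPU capacity.
    """
    safe_total = node_capacity_milli * threshold_pct // 100
    return max(0, safe_total - online_used_milli)


# A 32-core node: as the online load rises, the BE budget shrinks to zero.
for online in (8000, 20000, 24000):
    print(online, be_cpu_budget_milli(32000, online))
# 8000 12800
# 20000 800
# 24000 0
```

Recomputing this budget periodically and applying it as the BE cap gives the suppression behavior described above: offline jobs expand into idle CPU and are squeezed back when online usage grows.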

QoS enhancement - Eviction based on resource satisfaction

When the online load rises, CPU Suppress may throttle offline jobs frequently. This protects the runtime quality of online applications, but it still affects the offline jobs. Although offline jobs have low priority, frequent suppression leaves their performance requirements unmet and, in serious cases, hurts offline service quality. Frequent suppression also has extreme cases: if an offline job holds a special resource such as a global kernel lock when it is suppressed, the suppression can cause problems such as priority inversion and end up affecting online applications, although this does not happen often.

To solve this problem, Koordinator proposes an eviction mechanism based on resource satisfaction. We call the ratio of the total CPU actually allocated to the total CPU expected to be allocated the CPU satisfaction. When the CPU satisfaction of the offline job group falls below a threshold and the group's CPU utilization exceeds 90%, koordlet evicts some low-priority offline jobs to free resources for higher-priority offline jobs. This mechanism helps offline jobs get the resources they need.
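The eviction condition above combines two checks, which the following sketch spells out. The 90% utilization threshold comes from the text; the 0.6 satisfaction threshold and the function names are assumptions made for illustration.

```python
def cpu_satisfaction(allocated_milli, expected_milli):
    """Ratio of CPU actually allocated to CPU expected to be allocated."""
    if expected_milli <= 0:
        return 1.0  # nothing was expected, so nothing is unmet
    return allocated_milli / expected_milli


def should_evict(allocated_milli, expected_milli, used_milli,
                 satisfaction_threshold=0.6, utilization_threshold=0.9):
    """True when the offline group is both starved and saturated."""
    if allocated_milli <= 0:
        return False  # utilization is undefined; this sketch skips the case
    starved = cpu_satisfaction(allocated_milli, expected_milli) < satisfaction_threshold
    saturated = used_milli / allocated_milli > utilization_threshold
    return starved and saturated


print(should_evict(4000, 10000, 3800))  # True: satisfaction 0.4, utilization 0.95
print(should_evict(4000, 10000, 2000))  # False: the group is not saturated
print(should_evict(8000, 10000, 7900))  # False: satisfaction 0.8 is acceptable
```

Requiring both conditions avoids evicting jobs that are suppressed on paper but are not actually using the CPU they still have.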

QoS enhancement - CPU Burst

CPU utilization is the average CPU usage over a period of time. Most of the time we observe it at a coarse time granularity, where the changes in CPU utilization look basically stable. But if we observe it at a finer granularity, the bursty nature of CPU usage is obvious and the curve is far from stable. The figure below compares utilization observed at 1s granularity (purple) with utilization observed at 100ms granularity (green).

Fine-grained observation shows that CPU bursts and throttling are the norm. The Linux kernel limits a cgroup's CPU consumption with the CFS bandwidth controller, which caps the CPU a cgroup can use. As a result, services under bursty traffic are often heavily throttled for short periods, producing long-tail latency and lower service quality. In the figure below, Req2 is throttled and its processing is delayed until the 200ms mark.

To solve this problem, Koordinator uses CPU Burst to help online applications cope with bursts. CPU Burst lets a workload that needs extra CPU for a burst of requests draw on CPU it saved during normal operation. For example, while a container's everyday CPU usage stays below its CPU limit, the unused CPU is accumulated. Later, when the container needs a lot of CPU, it can burst above the limit using the accumulated resources. In the figure below, the burst request Req2 has accumulated CPU to draw on, so with CPU Burst it avoids being throttled and is processed quickly.
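The accumulate-then-burst behavior can be illustrated with a tiny per-period simulation. It is a toy model, not the kernel's implementation: the period quota, the burst cap, and the demand values are made-up numbers, and demand that gets throttled is simply dropped rather than carried into the next period.

```python
def simulate(demand_ms, quota_ms=100, burst_ms=0):
    """Return the CPU time granted in each period.

    demand_ms: CPU time the container wants in each period.
    quota_ms:  CPU time the limit allows per period.
    burst_ms:  cap on unused quota that may be carried over (0 = no burst).
    """
    saved = 0
    granted = []
    for want in demand_ms:
        allowed = quota_ms + saved
        run = min(want, allowed)
        granted.append(run)
        # Unused time accumulates for later periods, capped at burst_ms.
        saved = min(burst_ms, allowed - run)
    return granted


demand = [20, 20, 180, 20]
print(simulate(demand))                # [20, 20, 100, 20]
print(simulate(demand, burst_ms=100))  # [20, 20, 180, 20]
```

Without burst, the third period is throttled at 100 ms even though the container idled before it. With a burst cap of 100 ms, the time saved in the first two periods covers the spike.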

QoS enhancement - Group Identity

In co-location scenarios, although the Linux kernel provides several mechanisms for meeting the scheduling needs of workloads with different priorities, when an online application and an offline job run on the same physical core at the same time, they share the same physical resources, so the online application's performance is inevitably disturbed by the offline job and degrades. Alibaba Cloud Linux 2 supports the Group Identity feature starting from kernel version kernel-4.19.91-24.al7. Group Identity implements special scheduling priorities at the granularity of a cgroup. In short, when online applications need more resources, Group Identity can temporarily suppress offline jobs so that the online applications respond quickly.

Using this feature is simple: configure cpu.bvt_warp_ns in the cpu cgroup. In Koordinator, BE offline jobs are configured with -1, the lowest priority, and online application types such as LS/LSR are set to 2, the highest priority.
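As a minimal sketch of that mapping, the snippet below writes the value for a QoS class into a cpu.bvt_warp_ns file under a given directory. The values -1 and 2 come from the text; the helper name, the default of 0 for classes the text does not mention, and the use of a temporary directory in place of a real cgroup path are assumptions.

```python
import tempfile
from pathlib import Path

# Values from the text: BE is lowest priority, LS/LSR are highest.
BVT_WARP_NS = {"LS": 2, "LSR": 2, "BE": -1}


def apply_group_identity(cgroup_dir, qos_class):
    """Write the Group Identity value for a QoS class and return it.

    Classes not listed above fall back to 0, which is an assumption here.
    """
    value = BVT_WARP_NS.get(qos_class, 0)
    Path(cgroup_dir, "cpu.bvt_warp_ns").write_text(str(value))
    return value


# Demo against a temporary directory standing in for a cpu cgroup directory.
with tempfile.TemporaryDirectory() as fake_cgroup:
    apply_group_identity(fake_cgroup, "BE")
    print(Path(fake_cgroup, "cpu.bvt_warp_ns").read_text())  # -1
```

On a real node the directory would be the Pod's cpu cgroup on a kernel that supports Group Identity, and writing it requires root privileges.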

QoS enhancement - Memory QoS

Containers are subject to the following two constraints when using memory:

- The container's own memory limit: when the container's memory usage (including Page Cache) approaches the container's limit, the kernel's memory reclaim subsystem is triggered, and this affects the performance of memory allocation and release inside the container.

- The node memory limit: when container memory is overcommitted (Memory Limit > Request) and the node runs short of memory, the kernel's global memory reclaim is triggered. This has a large performance impact and in extreme cases can make the whole node misbehave.

To improve application runtime performance and node stability, Koordinator introduces Memory QoS, which improves memory performance for applications. When the feature is enabled, koordlet adaptively configures the memory subsystem (Memcg) and optimizes the performance of memory-sensitive applications while keeping node memory allocation fair.

Future evolution plan

Fine-grained CPU Orchestration

We are designing and implementing a fine-grained CPU orchestration mechanism.

Why provide this mechanism? As resource utilization rises, co-location enters deep water: runtime resource performance needs finer tuning, and more precise resource orchestration better protects runtime quality, which in turn lets co-location push utilization even higher.

We divide the Koordinator QoS class for online applications, LS, into three finer types: LSE, LSR, and LS. The split QoS types offer stronger isolation and runtime quality. With this split, Koordinator QoS as a whole has more precise and complete semantics and remains compatible with existing K8s QoS semantics.

For Koordinator QoS we have also designed a rich and flexible set of CPU orchestration policies, shown in the following table.

CPU orchestration policies corresponding to Koordinator QoS

In addition, for the LSR type there are two CPU binding policies that help users balance performance and cost.

- SameCore policy: better isolation, but little room for elasticity.

- Spread policy: moderate isolation, which can be improved with other isolation strategies; used well, it can perform better than the SameCore policy; it leaves some room for elasticity.
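One way to picture the difference between the two policies is how logical CPUs are picked from physical cores. The sketch below is an interpretation, not Koordinator's allocator: it assumes each physical core is a tuple of sibling logical CPU ids, and the function names are hypothetical.

```python
def same_core(cores, n):
    """Fill whole physical cores first: sibling hyperthreads stay together."""
    flat = [cpu for core in cores for cpu in core]
    return flat[:n]


def spread(cores, n):
    """Take one logical CPU per physical core before reusing any core."""
    if not cores:
        return []
    picked = []
    width = max(len(core) for core in cores)
    for i in range(width):
        for core in cores:
            if i < len(core) and len(picked) < n:
                picked.append(core[i])
    return picked


# Four physical cores with two hyperthreads each (hypothetical numbering).
topology = [(0, 4), (1, 5), (2, 6), (3, 7)]
print(same_core(topology, 4))  # [0, 4, 1, 5]
print(spread(topology, 4))     # [0, 1, 2, 3]
```

With the first policy a Pod owns two complete physical cores and no other workload shares them, which matches the stronger isolation noted above. With the second it gets one hyperthread on each of four cores, leaving the siblings available to others.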

Koordinator's fine-grained CPU orchestration is compatible with the existing K8s CPUManager and NUMA Topology Manager mechanisms. In other words, adopting Koordinator in an existing cluster does not affect existing Pods, so it can be rolled out gradually and safely.

Resource reservation - Resource Reservation

Resource reservation is another feature we are designing. It helps address pain points in resource management. For example, familiar Internet business scenarios have very pronounced peaks and valleys, so we can reserve resources before the peak to make sure there are resources to serve peak requests. Another example is scale-out: after a scale-out is initiated, Pods sit Pending in the cluster if no resources are available. If you can confirm whether resources are available before scaling out and add machines when they are not, the experience is much better. There are also descheduling scenarios: resource reservation can guarantee that an evicted Pod has resources available, which greatly reduces the resource risk of descheduling and makes it safer to use.

Koordinator's resource reservation mechanism does not intrude on the existing API or code of the K8s community. It supports PodTemplateSpec, imitating a Pod to find the most suitable node through the scheduler. It also supports declaring ownership so that the owning Pods use the reserved resources first: when a real Pod is scheduled, the scheduler first tries to find suitable reserved resources based on the Pod's characteristics, and otherwise continues with idle resources in the cluster.

Below is an example Reservation CRD (the design finally adopted by the Koordinator community prevails).

    kind: Reservation
    metadata:
      name: my-reservation
      namespace: default
    spec:
      template: ... # a copy of the Pod's spec
      resourceOwners:
        controller:
          apiVersion: apps/v1
          kind: Deployment
          name: deployment-5b8df84dd
      timeToLiveInSeconds: 300 # 300 seconds
      nodeName: node-1
    status:
      phase: Available
      ...
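The "reserved resources first, idle resources otherwise" behavior can be sketched as a simple lookup. This is an illustration of the idea only: reservations are modeled as dictionaries, an owner is a hypothetical (apiVersion, kind, name) tuple, and the function names are not part of any Koordinator API.

```python
def find_reservation(pod_owner, reservations):
    """Return the first Available reservation declared for this owner."""
    for r in reservations:
        if r["phase"] == "Available" and r["owner"] == pod_owner:
            return r
    return None


def place_pod(pod_owner, reservations, idle_nodes):
    """Prefer a matching reservation; otherwise fall back to an idle node.

    Returns (node name, reservation name or None).
    """
    r = find_reservation(pod_owner, reservations)
    if r is not None:
        return r["nodeName"], r["name"]
    return (idle_nodes[0] if idle_nodes else None), None


reservations = [{
    "name": "my-reservation",
    "nodeName": "node-1",
    "phase": "Available",
    "owner": ("apps/v1", "Deployment", "deployment-5b8df84dd"),
}]

owner = ("apps/v1", "Deployment", "deployment-5b8df84dd")
print(place_pod(owner, reservations, ["node-2"]))
# ('node-1', 'my-reservation')
print(place_pod(("apps/v1", "Deployment", "other"), reservations, ["node-2"]))
# ('node-2', None)
```

A Pod from the owning Deployment lands on the reserved node, while any other Pod is scheduled onto idle capacity as usual.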

Fine-grained GPU scheduling - GPU Scheduling

Fine-grained GPU scheduling is a capability we expect to provide in the future. GPU and CPU resources have very different characteristics. In model training scenarios such as machine learning, a training job performs differently depending on topology; for example, different topological combinations of a machine learning job's workers yield different performance. This applies not only between nodes in a cluster: even on a single node, performance differs greatly depending on whether the GPU cards are connected with NVLINK. This makes GPU scheduling and allocation logic very complex. And when GPU and CPU computing jobs are co-located in a cluster, how to avoid wasting either kind of resource is also an optimization problem to consider.

Specification recommendation - Resource Recommendation

Koordinator will also provide specification recommendations based on resource profiles. As mentioned earlier, it is hard for users to estimate an application's resource usage accurately. What should the relationship between Request and Limit be? How should Request/Limit be set, and which combination suits an application best? Users often overestimate or underestimate Pod resource specifications, which leads to wasted resources or even stability risks.

Koordinator will provide a resource profiling capability that collects and processes historical data and recommends more accurate resource specifications.

Community building

So far, we have released four versions in the last two months. The earlier versions mainly provided resource overcommitment and QoS enhancement capabilities and open-sourced a new component, koord-runtime-proxy. In version 0.4 we started work on the scheduler and first released load-balanced scheduling. The Koordinator community is currently implementing version 0.5, in which Koordinator will provide fine-grained CPU orchestration and resource reservation. In future plans, we will build capabilities such as descheduling, Gang scheduling, GPU scheduling, and elastic Quota.

We look forward to your feedback on any problems you run into when using Koordinator, and to your help in improving the documentation, fixing bugs, and adding new features.

- If you find a typo, try to fix it!

- If you find a bug, try to fix it!

- If you find some redundant codes, try to remove them!

- If you find some test cases missing, try to add them!

- If you could enhance a feature, please DO NOT hesitate!

- If you find code implicit, try to add comments to make it clear!

- If you find code ugly, try to refactor that!

- If you can help to improve documents, it could not be better!

- If you find document incorrect, just do it and fix that!

- …

In addition, we hold regular biweekly community meetings on Tuesdays from 19:30 to 20:30. Like-minded partners are welcome to join the discussion groups to learn more.

