当前位置:网站首页>[reading papers] fbnetv3: joint architecture recipe search using predictor training network structure and super parameters are all trained by training parameters

[reading papers] fbnetv3: joint architecture recipe search using predictor training network structure and super parameters are all trained by training parameters

2022-06-26 03:12:00 【Shameful child】

FBNetV3: Joint architecture that uses predictors to pre train - Recipe search

Abstract

- Neural structure search (NAS) Produced the most advanced neural network , Its performance is superior to that of the best manually designed counterpart . However , The previous NAS Methods in a set of training hyperparameters ( namely , Training program ) Next search schema , Ignoring better architecture - Combination of solutions . To solve this problem , This paper proposes a neural architecture - Method search (NARS ), Search at the same time (a) The architecture and (b) Their corresponding training methods .

- NARS Using an accuracy predictor , Joint scoring of the structure and training formula , Guide Sample Selection and ranking . Besides , To compensate for the expanded search space , This paper makes use of “free” Schema statistics ( for example ,FLOP count) To pre train the predictor , Thus, the sampling efficiency and prediction reliability are significantly improved . After optimizing the training predictor through constraint iteration , This article is written in just a few minutes CPU Run a fast evolutionary search in time to generate for various resource constraints architecturerecipe Yes , be called FBNetV3.

- FBNetV3 Constitute a series of the most advanced compact neural networks, Its performance is better than that of competitors with automatic and manual design . for example ,FBNetV3 Of EfficientNet and ResNeSt Precision vs ImageNet Quite a , but FLOPs The difference is reduced 2.0 Times and 7.1 times . Besides ,FBNetV3 Significantly improve the performance of downstream object detection tasks , To improve the mAP, And based on EfficientNet Compared with similar products ,FLOPs Less 18%, The parameters are reduced 34%.

- FBNet The series of papers is composed of Facebook To launch the NAS The network searched by the algorithm ,V1、V2 It's using and DARTS The same way , By building Supernet And differential gradient method to calculate the optimal network ;V3 It adopts its own unique method ——JointNAS, Take the super parameter and training strategy as the search space , Through coarse grain and fine grain stage Search out the best network and training parameters .

- Address of thesis :[2006.02049] FBNetV3: Joint Architecture-Recipe Search using Predictor Pretraining (arxiv.org)

Introduction

Designing an efficient computer vision model is a challenging but important problem : Numerous applications, from autonomous vehicle to augmented reality, require highly accurate compact models—— Even in power 、 Calculation 、 Memory and latency There are restrictions on . The number of possible constraints and architectural combinations is very large , Making manual design almost impossible .

Even though NAS The network architecture has achieved very good results , such as EfficientNet、MixNet、MobileNetV3 wait . But whatever gradient based NAS, Or based on super net Of NAS, Or based on reinforcement learning NAS All of them have these defects

- Training superparameters are ignored , That is, we only focus on the network architecture and ignore the influence of training super parameters ;

- Only one-time applications are supported , That is, only one model will be output under specific constraints , Different constraints require different models .

, The author puts forward JointNAS, At the same time, the network architecture and training strategy are searched , Pass the network architecture and corresponding training strategy NAS Federated Search , Previous NAS The method mainly focuses on the network architecture , It doesn't care whether the training parameters are set properly in the network performance verification , This can lead to model performance degradation , and JointNAS Under resource constraints , Search for most accurate indeed Of training Practice ginseng Count With And network Collateral junction structure \textcolor{red}{ The most accurate training parameters and network structure } most accurate indeed Of training Practice ginseng Count With And network Collateral junction structure .

As a solution , Recent work has used neural architecture to search (NAS) To design the most advanced Efficient deep neural network .NAS One of the categories is the distinguishable neural architecture search (DNAS). These path search algorithms are efficient , Usually, a search is completed within the time required to train a network . However ,DNAS You can't search for non architectural super parameters that are critical to model performance . Besides , HYPERNET based NAS Method is limited by search space , Because the entire hypergraph must fit in memory to avoid slow convergence [Proxylessnas] or paging.

Other approaches include reinforcement learning (RL) [MnasNet] And evolutionary algorithms (ENAS) [Large-scale evolution of image classifiers]. However , These methods have several disadvantages :

- Ignore training hyperparameters: seeing the name of a thing one thinks of its function ,NAS Search only schema , Instead of searching for relevant training hyperparameters ( namely “ Training methods ”). This ignores the fact that , namely No Same as Of training Practice Fang Law can can Meeting extremely Big The earth Change change One individual frame structure Of become Defeat \textcolor{red}{ That is, different training methods may greatly change the success or failure of an architecture } namely No Same as Of training Practice Fang Law can can Meeting extremely Big The earth Change change One individual frame structure Of become Defeat , Even change the ranking of the architecture ( The following table ).

Different training recipes can change the level of the architecture .ResNet18 1.4x Times the width and 2 Times depth refers to 1.4 Times the width and 2.0 Times the depth scaling factor ResNet18. See Appendix for details of training formula A.1.

- Support only one-time use: Many traditional NAS Method by One Group , set Of information Source about beam production raw One individual model type \textcolor{red}{ Generate a model for a specific set of resource constraints } by One Group , set Of information Source about beam production raw One individual model type . This means that when deployed to a range of products , Each product has different resource constraints , You need to set up a rerun for each resource NAS. perhaps , Model designers can search a model , And use manual heuristics to perform suboptimal scaling , To adapt to new resource constraints .

- Prohibitively large search space to search: Naive to include training recipes in the search space is either impossible (DNAS, HYPERNET based NAS), Or Extremely expensive , Because training only architecture accuracy predictors is computationally expensive (RL,ENAS).

- Ignore training hyperparameters: seeing the name of a thing one thinks of its function ,NAS Search only schema , Instead of searching for relevant training hyperparameters ( namely “ Training methods ”). This ignores the fact that , namely No Same as Of training Practice Fang Law can can Meeting extremely Big The earth Change change One individual frame structure Of become Defeat \textcolor{red}{ That is, different training methods may greatly change the success or failure of an architecture } namely No Same as Of training Practice Fang Law can can Meeting extremely Big The earth Change change One individual frame structure Of become Defeat , Even change the ranking of the architecture ( The following table ).

To overcome these challenges , This paper proposes Neural Architecture-Recipe Search(NARS) To address the above limitations . There are three aspects in this paper :

- (1) For support NAS It turns out that Reuse under multiple resource constraints , This paper trains an accuracy predictor , Then use the predictor In a few minutes CPU Find... For new resource constraints in time architecturerecipe Yes .

- (2) To avoid the pitfalls of schema only or recipe only search , The predictor scores both the training formula and the structure .

- (3) In order to avoid the prohibitive growth of predictor training time , In this paper, we pre train the predictor on the proxy data set , To predict schema statistics based on schema representation ( for example ,FLOPs,# Parameters ).

Perform predictor pre training in sequence , After constrained iterative optimization and predictor based evolutionary search ,NARS A generalizable training formula and a compact model , stay ImageNet Get the most advanced performance on , It exceeds all existing neural networks designed manually or automatically . The contributions of this paper are summarized as follows :

- Neural Architecture-Recipe Search: A predictor is proposed , It combines scoring training recipes and structures , First federated search , In terms of training formula and structure , With set up meter person Of know knowledge by ruler degree \textcolor{blue}{ The designer's knowledge is the yardstick } set up meter person Of know knowledge by ruler degree .

- Predictor pretraining: In order to search effectively in this larger space , This article further A pre training technique is proposed , The sampling efficiency of the predictor is significantly improved .

- Multi-use predictor: The predictor in this paper can be used for fast evolutionary search , Quickly generate models of various resource budgets in a few minutes .

- State-of-the-art ImageNet accuracy: Every time FLOP Of ImageNet The accuracy has reached the highest level . for example , As shown in the figure below , In this paper, the FBNetV3 and efficientnet With considerable accuracy , Than efficientnet Less 49.3% Of FLOPs, As shown in the figure below .

ImageNet Precision and model FLOPs fbnet v3 Comparison with other efficient convolutional neural networks .FBNetV3 With 557M (2.1G) FLOPs Realized 80.8% (82.8%) Top precision of , For accuracy - Efficiency tradeoffs set new SOTA.

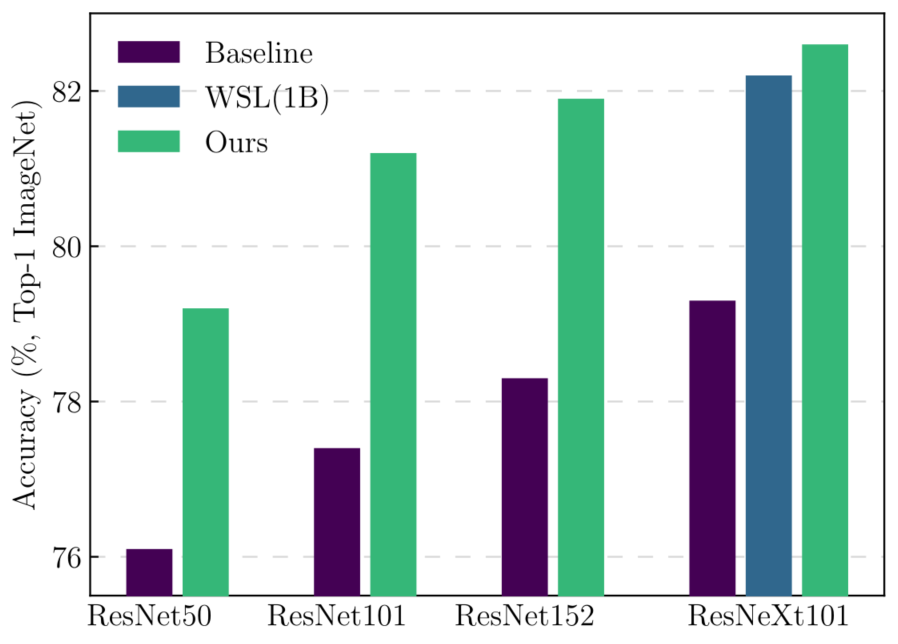

- Generalizable training recipe:NARS The single method of search has achieved significant accuracy gain in various neural networks , As shown in the figure below . In this paper, the ResNeXt101-32x8d Realized 82.6% Top precision of ; This is even more than in 1B Weak surveillance opponents trained on additional images [Exploring the limits of weakly supervised pretraining].

Use the found training recipes to improve the accuracy of the existing architecture .WSL Refers to the use of 1B A weakly supervised learning model with additional images .

network Collateral frame structure And training Practice Strategy A little Same as when Into the That's ok search Cable \textcolor{red}{ Network architecture and training strategy are searched at the same time } network Collateral frame structure And training Practice Strategy A little Same as when Into the That's ok search Cable . This is a point that the previous method did not try , Previous approaches focused on network architecture , The training method is to adopt a group of conventional training methods .

FBnetV3 Pass the network architecture and corresponding training strategy NAS Federated Search . stay ImageNet On dataset ,FBNetV3 Achieved comparable results EfficientNet And ResNeSt Performance with lower FLOPs(1.4x and 5.0x fewer); what's more , The scheme can cross the network 、 Consistent performance gains across tasks .

Related work

- The work of compact neural networks begins with manual design , It can be divided into architectural and non architectural modifications .

Manual architecture design:

- Most of the early work compressed the existing architecture . One way is to trim [Deep compression,NeST,Once for all: Train one network and specialize it for efficient deployment,Dreaming to distill: Data-free knowledge transfer via deepinversion], The middle layer or channel is removed according to some heuristics . However , Pruning either considers only one architecture [Learning both weights and connections for efficient neural network], Or you can only search smaller and smaller architectures in sequence [NetAdapt]. This limits the search space .

- Other work involves designing new architectures from scratch , Use cost-friendly new operations . This includes convolution variants , Such as MobileNet Depth direction convolution in ;MobileNetV2 Inverse residual block in ; Activate , Such as MobileNetV3 Medium hswish; And image shift [Shift: A zero FLOP, zero parameter alternative to spatial convolutions] Mix with the channel [shufflenet] This kind of operation . Although many of them are still used in the most advanced neural networks , But manually designed structures have been replaced by automatically searched structures .

Non-architectural modifications:

- Many network compression techniques include low bit quantization [Deep compression] At least two [Trained ternary quantization] Even one [Binarized neural networks]. Uneven sampling input under other work [Squeezeseg,Squeezesegv3,Efficient segmentation] To reduce computing costs .

- These approaches can be combined with architectural improvements , In order to reduce delay by approximately additional . Other non schema modifications involve hyperparametric tuning , Including the tuning Library in the pre deep learning era [Making a science of model search: Hyperparameter optimization in hundreds of dimensions for vision architectures]. Several tuning libraries dedicated to deep learning are also widely used [Tune: A research platform for distributed model selection and training]. A new class of methods automatically search for the best combination of data expansion strategies . These methods use policy search [Autoaugment]、 Population based training [Population based augmentation: Efficient learning of augmentation policy schedules]、 Bayesian based enhancement [A bayesian data augmentation approach for learning deep models] Or Bayesian optimization [Neural architecture search with bayesian optimisation and optimal transport].

Automatic architecture search:

- NAS Automatic neural network design , Achieve best in class performance . Some of the most common NAS Technology includes reinforcement learning [Neural architecture search with reinforcement learning,MnasNet], evolutionary algorithms [Large-scale evolution of image classifiers,Regularized evolution for image classifier architecture search,Cars: Continuous evolution for efficient neural architecture search] and DNAS [Darts,Fbnet,V2,Single path one-shot neural architecture search with uniform sampling,SNAS: Stochastic neural architecture search].

- DNAS Train quickly with very few computing resources , However, due to the limitation of memory, the search space is limited . Some work tries to solve this problem , By training only a subset at a time [Proxylessnas] Or by introducing an approximation [Fbnetv2]. However , It is still not as flexible as its competitors' reinforcement learning methods and evolutionary algorithms . In turn, , These previous work only searched for model schemas [Progressive neural architecture search,Neural predictor for neural architecture search,Neural architecture search using deep neural networks and monte carlo tree search,Once for all: Train one network and specialize it for efficient deployment] Or in small data sets ( for example ,CIFAR) Perform a neural architecture recipe search on .

- by comparison , In this paper, the NARS stay ImageNet Joint search on building and training methods . To make up for the larger search space , this paper (a) Introduce predictor pre training technology , To improve the convergence speed of the predictor ,( b) Evolutionary search based on predictor , Under any resource constraint settings , Just a few minutes CPU Time to design the architecture to receive pairs —— Significantly better than the highest ranking candidate of the predictor before evolutionary search .

- This paper also notes the previous work , That is, a series of models with negligible or zero cost are generated after one search [Single path one-shot neural architecture search with uniform sampling,Cars: Continuous evolution for efficient neural architecture search,Nsganetv2: Evolutionary multi-objective surrogate-assisted neural architecture search].

Method

The goal of this paper is Find the most accurate combination of architecture and training methods , To avoid ignoring architectural recipe pairs as in the previous approach . However , The search space is usually very large , It is impossible to make a thorough assessment . To solve this problem , This paper trains an accuracy predictor , The predictor is represented by the architecture and training recipe . So , This paper uses a three-stage pipeline ( Algorithm 1):

- (1) Use architecture statistics to pre train the predictor , Thus, its precision and sampling efficiency are significantly improved .

- (2) Use constrained iterative optimization to train the predictor .

- (3) For each set of resource constraints , Only in CPU Run predictor based evolutionary search in time , To produce a high-precision architecture - Recipe pair .

JointNAS, It can be divided into two stages: coarse-grained and fine-grained , Search the network architecture and training parameters .

Coarse grained stage (coarse-grained), This stage is mainly Iteratively search for high-performance candidate network structures - Super parameter pairs and training accuracy predictors .

- Coarse grained search generates Accuracy predictor and A high performance candidate network set , this individual pre measuring device yes One individual many layer sense know device structure become Of Small type network Collateral \textcolor{green}{ This predictor is a small network of multilayer perceptrons } this individual pre measuring device yes One individual many layer sense know device structure become Of Small type network Collateral , It contains two parts , A proxy predictor (Proxy Predictor), One is the accuracy predictor (Accuracy Predictor)

- The structure of the predictor is shown in the figure below , It contains a structural encoder and two head, They are auxiliary agents head And accuracy head. agent head Predict the properties of the network (FLOPs Or parameter quantity, etc ), Mainly used in encoder pre training , Accuracy rate head According to the training parameters and network structure prediction accuracy , Using agents head The pre trained encoder is in the process of iterative optimization fine-tuned.

- Neural Acquisition Function, The predictor , See above . It contains the coding architecture and two head:

- (1)Auxiliary proxy head Used for pre training coding architecture 、 Forecast schema Statistics ( such as FLOPs And parameters ). notes : The network architecture passes one-hot Way to code ;

- (2) Precision predictor , It receives training strategies and network architecture , At the same time, iterative optimization gives the accuracy evaluation of the architecture .

- Early-stopping, The author also introduces an early stop strategy to reduce the computational cost of candidate network evaluation ;

- Predictor training, After obtaining the candidate network , The author proposes training 50 individual epoch Also freeze the embedded layer , Then train the whole model again 50 individual epoch. Adopted by the author Huber Loss Train the accuracy predictor . This loss helps the model avoid abnormal dominant phenomena (prevents the model from being dominated by outliers).

- Neural Acquisition Function, The predictor , See above . It contains the coding architecture and two head:

Fine grained stage (fine-grained stages), With the help of the accuracy predictor trained in coarse-grained stage , Fast evolutionary algorithm search for candidate networks , The search integrates the super parameter optimizer proposed in this paper AutoTrain.

Predictor

The predictor of this paper To predict the accuracy of the representation of a given architecture and training recipe . Use one-hot Categorical variables ( for example , For block types ) And minimum - Maximum normalized continuous value ( for example , For channel count ) Code the architecture and training recipes . See... In the table below Full search space .

The network structure configuration and search space in this experiment .MBConv、MBPool、k、e、c、n、s、se and act.

Refer to the inverse residual block respectively [MobilenetV2]、efficient last stage[MobilenetV3]、 Nuclear size 、 Expand 、# passageway 、# The layer number 、 Stride 、 extrusion - incentive 、 Activation function .res、lr、optim、ema、p、d、m and wd Respectively refers to the resolution 、 Initial learning rate 、 Optimizer type 、EMA、dropout rate 、 Random depth dropout probability 、mixup Rate and weight attenuation .

EMA: Exponentially moving average (Exponential Moving Average) Also called weight moving average (Weighted Moving Average), It is an average method that gives higher weight to recent data .

- Suppose we have n Data : [ θ 1 , θ 2 , θ 3 , . . . , θ n ] [θ_1,θ_2,θ_3,...,θ_n] [θ1,θ2,θ3,...,θn]. The average is v ˉ = 1 n ∑ i = 1 n θ i \bar{v}=\frac{1}{n}\sum_{i=1}^n\theta_i vˉ=n1∑i=1nθi.

- EMA: v t = β ⋅ v t − 1 + ( 1 − β ) ⋅ θ t v_t=β·v_{t-1}+(1-β)·θ_t vt=β⋅vt−1+(1−β)⋅θt, among v t v_t vt Before presentation t The average of the parts ,β Is the weighted weight value ( It's usually 0.9~0.999),v0=0

- When β = n − 1 n β=\frac{n-1}{n} β=nn−1 when ,EMA It is formally equivalent to the average number .

The above is in a broad sense ema Definition and calculation method , Special , In the optimization process of deep learning , θ t θ_t θt yes t The model weight of the moment weights, v t v_t vt yes t The shadow weight of the moment (shadow weights). In the process of gradient descent , Will always maintain this shadow weight , But this shadow weight will not participate in training . The basic assumption is , The model weight is at the end n Step inside , Will shake at the actual best , So take the last n Average of steps , It can make the model more robust .

In practice , If you make v 0 = 0 v_0=0 v0=0, When the number of steps is small ,ema There will be some deviation in the calculation result of .

The ideal average is green , Because the initial value is 0, So what you get is purple . So we can add a deviation correction (bias correction).

v t = v t 1 − α t v_t=\frac{v_t}{1-\alpha^t} vt=1−αtvt

obviously , When t When a large , The correction approximates to 1.

Lingdi n The model weight of the moment (weights) by v n v_n vn, The gradient of g n g_n gn, Available :

- θ n = θ n − 1 − g n − 1 = θ n − 2 − g n − 1 − g n − 2 = θ 1 − ∑ i = 1 n − 1 g i θ_n=θ_{n-1}-g_{n-1}\\ =θ_{n-2}-g_{n-1}-g_{n-2}\\ =θ_{1}-\sum_{i=1}^{n-1}g_{i} θn=θn−1−gn−1=θn−2−gn−1−gn−2=θ1−i=1∑n−1gi

Lingdi n moment EMA The shadow weight of is v n v_n vn , Available :

v n = α v n − 1 + ( 1 − α ) θ n = α ( α v n − 2 + ( 1 − α ) θ n − 1 ) + ( 1 − α ) θ n = α n v 0 + ( 1 − α ) ( θ n + α θ n − 1 + α 2 θ n − 2 + . . . + α n − 1 θ 1 ) generation Enter into On Noodles θ n Of surface reach , Make v 0 = θ 1 exhibition open On Noodles Of Male type , can have to : v n = θ 1 − ∑ i = 1 n − 1 ( 1 − α n − i ) g i v_n=\alpha{v_{n-1}}+(1-\alpha)\theta_n\\ =\alpha(\alpha{v_{n-2}}+(1-\alpha)\theta_{n-1})+(1-\alpha)\theta_n\\ =α^nv_0+(1-α)(θ_n+αθ_{n-1}+α^2θ_{n-2}+...+α^{n-1}θ_{1})\\ Substitute into the above θ_n The expression of , Make v_0=θ_1 Expand the above formula , Available :\\ v_n=θ_1-\sum_{i=1}^{n-1}(1-α^{n-i})g_i vn=αvn−1+(1−α)θn=α(αvn−2+(1−α)θn−1)+(1−α)θn=αnv0+(1−α)(θn+αθn−1+α2θn−2+...+αn−1θ1) generation Enter into On Noodles θn Of surface reach , Make v0=θ1 exhibition open On Noodles Of Male type , can have to :vn=θ1−i=1∑n−1(1−αn−i)gi

EMA Right. i The step of step gradient descent increases the weight coefficient 1 − α n − i 1-α^{n-i} 1−αn−i , It's equivalent to making a learning rate decay.

The extension to the left of the slash is used for the first block in the stage , The extension on the right is used for the rest of the blocks . The triples in brackets represent the lowest value 、 Maximum value and step size ; A binary tuple means that the step size is 1, Tuples in parentheses represent all available choices in the search process . Please note that , If optim choice SGD,lr Multiply by 4.

Schema parameters with the same superscript share the same value during the search .

The predictor structure is a multilayer perceptron ( The figure below ), It consists of several fully connected layers and two heads :

(1) An auxiliary “ agent ” head , For pre training encoder , Predict structural statistics from structural representations ( for example ,FLOPs and #Parameters );

(2) Precision head , Fine tune in constrained iterative optimization , Prediction accuracy from the joint representation of architecture and training recipe .

Pre training to predict architecture Statistics ( Upper figure ). Training from the framework - The accuracy of the recipe for prediction ( Bottom )

Stage 1: Predictor pretraining

- Training accuracy predictors can be computationally expensive , Because each training label is ostensibly a framework for complete training under a specific training formula . To alleviate this , The solution of this paper is to pre train the agent task . The pre training step can help the predictor form a good internal representation of the input , This reduces the number of precision structure recipe samples required . This can significantly reduce the search costs required .

- In order to construct an agent task for pre training , have access to “free” Schema tag source for : in other words , image FLOPs And the number of parameters . After this pre training step , In this paper, we transfer the embedded layer of pre training to initialize the precision predictor ( See above ). This will significantly improve the sample efficiency and prediction reliability of the final predictor . for example , To achieve the same predicted mean square error (MSE ), A pre trained predictor only needs to be less than an untrained predictor 5 Times the sample , Here's the picture (e) Shown . therefore , Predictor pre training greatly reduces the overall search cost .

(a) and (b): The performance of the predictor on proxy metrics , and (d): The performance of the predictor in terms of accuracy with and without pre training ,(e): Of a predictor MSE And the number of samples with and without pre training .

Stage 2: Training predictor

In this step , This paper trains the predictor and generates a set of high-promise Candidates . As mentioned earlier , The goal of this paper is Find the most accurate combination of architecture and training methods under given resource constraints . therefore , In this paper, the schema search is formulated as a constrained optimization problem :

max ( A , h ) ∈ Ω a c c ( A , h ) s . t . g i ( A ) ≤ C i , i = 1 , . . . , γ ( 1 ) \max\limits_{(A,h)\inΩ}~acc(A,h)\\ s.t.~g_i(A)\leq{C_i},i=1,...,γ~(1) (A,h)∈Ωmax acc(A,h)s.t. gi(A)≤Ci,i=1,...,γ (1)

among A、h and Ω Respectively refers to the neural network architecture 、 Search space for training recipes and designs .acc Mapping architecture and training methods to accuracy .gi(A) and γ It refers to the formula and count of resource constraints , Such as cost calculation 、 Storage costs and runtime latency .

Constrained iterative optimization:

In this paper, Quasi Monte Carlo (QMC) [Random number generation and quasiMonte Carlo methods] Sampling to generate a schema from the search space - Sample pool of recipe pair . then , Train the predictor iteratively : In this paper, the (a) The candidate space is reduced by selecting a subset of favorable candidates based on the prediction accuracy ,(b) Use early-stopping Heuristics to train and evaluate candidates , And the use of H u b e r damage loss \textcolor{blue}{Huber Loss } Huber damage loss To fine tune the predictor . This iterative contraction of candidate space avoids unnecessary evaluation and improves exploration efficiency .

Huber Loss function

Compared to the square error loss ,Huber Losses are less sensitive to outliers in the data . The value is 0 when , It is also differentiable . It is basically an absolute value , When the error is very small, it becomes the square value . The size of the square of the error depends on a hyperparameter δ, This parameter can be adjusted . When δ~ 0 when ,Huber Losses tend to MAE; When δ~ ∞( Big numbers ),Huber Losses tend to MSE. When the prediction deviation is less than δ when , It uses squared error , When the prediction deviation is greater than δ when , Linear error used .

L δ ( y , f ( x ) ) = { 1 2 ( y − f ( x ) ) 2 f o r ∣ y − f ( x ) ∣ ≤ δ δ ∣ y − f ( x ) ∣ − 1 2 δ 2 otherwise L_δ(y,f(x))=\begin{cases} \frac{1}{2}(y-f(x))^2 &for|y-f(x)|\leqδ\\ δ|y-f(x)|-\frac{1}{2}δ^2 &\text{otherwise}\\ \end{cases} Lδ(y,f(x))={ 21(y−f(x))2δ∣y−f(x)∣−21δ2for∣y−f(x)∣≤δotherwise

δ yes HuberLoss Parameters of ,y Is the real value ,f(x) Is the predicted value of the model , And by definition Huber Loss You can lead everywhere .δ The choice of is critical , Because it determines how you view outliers . The residual is greater than δ, Just use L1( It is less sensitive to large outliers ) To minimize the , And the residual error is less than δ, Just use L2“ Appropriately ” To minimize the .

def huber(true, pred, delta): loss = np.where(np.abs(true-pred) < delta , 0.5*((true-pred)**2), delta*np.abs(true - pred) - 0.5*(delta**2)) return np.sum(loss)Compared with the linear regression of the least squares ,HuberLoss Reduced penalties for outliers , therefore HuberLoss It is a commonly used robust regression loss function .

Training candidates with early-stopping.

This paper introduces an early stop mechanism to reduce the computational cost of evaluating candidate objects . In particular , In this paper, the (a) After the first iteration of constrained iterative optimization , Sort the samples by early stop and final precision ,(b) Calculate sort dependencies , And finding correlations that exceed a certain threshold ( for example ,0.92) A period of e, As shown in the figure below .

Rank correlation and epoch. Correlation threshold ( Cyan ) by 0.92.

For all remaining candidates , This article only trains e A period of (A,h) To approximate acc(A,h). This allows much less training iterations to be used to evaluate the sample of each query .

Training the predictor with Huber loss.

- After obtaining the pre trained architecture embedding , First, the predictor is trained when the embedded layer is frozen 50 individual epoch. then , Train the whole model with a reduced learning rate 50 individual epoch. In this paper Huber Loss to train the accuracy predictor , namely L = 0.5 ( y − y ^ ) 2 i f ∣ y − y ^ ∣ < 1 e l s e ∣ y − y ^ ∣ − 0.5 \mathcal{L}=0.5(y-\hat{y})^2 ~~~if~| y-\hat{y} |<1 ~else~|y-\hat{y}|-0.5 L=0.5(y−y^)2 if ∣y−y^∣<1 else ∣y−y^∣−0.5, among y and y ^ \hat{y} y^ They are prediction and basic fact labels . This prevents the model from being dominated by outliers , Outliers can confuse predictions .

Stage 3: Using predictor

- The third stage of the proposed method is an iterative process based on adaptive genetic algorithm [Adaptive probabilities of crossover and mutation in genetic algorithms]. The best execution architecture from the second phase - Recipe pairs are inherited as part of the first generation of candidates .

- In each iteration , In this paper, we introduce mutation into candidate objects , And generate a set of subgroups according to the given constraints C ⊂ Ω \mathcal{C} ⊂ Ω C⊂Ω . Use the accuracy prediction value of pre training u To evaluate the score of each sub object , And choose before for the next generation K Candidates with the highest scores . Calculate the gain of the highest score after each iteration , And terminate the cycle when the improvement reaches saturation . Last , Evolutionary search based on predictor produces high-precision neural network structure and training formula .

- Please note that , Use a precision predictor , Searching the web to adapt to different usage scenarios will only incur negligible costs . This is because Accuracy predictors can be fully reused under different resource constraints , The evolutionary search based on predictor only needs CPU minute .

Predictor search space

- The search space of this article includes training formula and architecture configuration . The search space for the training recipe is based on the optimizer type 、 Initial learning rate 、 Weight falloff 、mixup ratio、drop out ratio、stochastic depth drop ratio And whether to use the model index to move the average (EMA) For the characteristic . The schema configuration search space is based on the inverse residual block , Including input resolution 、 Kernel size 、 Expand 、 Number and depth of channels per layer .

- In a recipe only experiment , Only adjust the training formula on the fixed structure . However , For federated search , stay ** submit a memorial to the emperor 【 The network structure configuration and search space in this experiment 】** Search the training formula and structure in the search space of . total Of Come on say , this individual empty between package contain 了 1 0 17 individual Hou choose frame structure and 1 0 7 individual can can Of training Practice Fang case \color{red}{ in general , This space contains 10^{17} Candidate architectures and 10^7 A possible training program } total Of Come on say , this individual empty between package contain 了 1017 individual Hou choose frame structure and 107 individual can can Of training Practice Fang case . It is very important to explore such a huge search space to find an optimal network architecture and its corresponding training methods .

Experiments

- In this section , First, the search method in this paper is verified in the reduced search space , To discover the training method of a given network . then , Evaluate the joint search architecture and training recipe of the search method in this paper . This article USES the PyTorch , And in ImageNet 2012 Search on classified datasets . During search , Randomly selected from the entire data set 200 Class to reduce training time . then , from 200 Class training set is randomly reserved 10K Image as validation set .

Recipe-only search

- To prove that even modern NAS The performance of the resulting architecture can also be further improved through better training programs , This paper optimizes the training scheme for the fixed architecture . In this paper FBNetV2-L3 As the infrastructure of this article , This is a kind of DNAS Search Architecture , Use [FBNet-V2] The original training method used in 79.1% Of top-1 accuracy .

- In this paper, we set the sample pool size in constrained iterative optimization n = 20K, Batch m = 48, iteration T = 4. This article uses each... During the search epoch 0.963 Learning rate decay factor training 150 individual epoch Sampling candidates for , And 3 Times slower learning rate decay ( namely , Every epoch 0.9875) Train the final model .

- The following figure shows the sample distribution of each round and the final search results in this experiment , The first round of samples is randomly generated . Found training recipes ( appendix A.3) This improves the accuracy of the infrastructure of this article 0.8%.

An illustration of the sampling and search process .

- This article will NARS The training method of search is extended to other commonly used neural networks , To further verify its universality . Even though NARS The training method for searching is FBNetV2-L3 custom , But its generalization ability is surprisingly good , As shown in the following table .

Improve the accuracy of searching training formula on the existing neural network . above ,ResNeXt101 refer to 32x8d Variants .

- stay ImageNet On ,NARS The search for training recipes resulted in up to 5.7% Accuracy gain . in fact ,ResNet50 Benchmark ResNet152 Higher than 0.9%.ResNeXt101-32x8d Even beyond the weakly supervised learning model , The model uses 10 Hundred million weakly labeled images for training , Reached 82.2% Of top-1 Accuracy rate . It is worth noting that , It is possible to achieve even better performance by searching for a specific training scheme for each neural network , This will increase search costs .

Neural Architecture-Recipe Search (NARS)

- Search settings , This article performs a joint search of the architecture and training scheme , To discover compact neural networks . Please note that , According to the above observations of this article . Reduce search space , Always use a E M A \textcolor{red}{EMA} EMA. Most settings are the same as recipe only search , But this article adds optimization iterations T = 5, And the FLOPs Constrain from 400M Set to 800M. This article uses contains 20K Sample pool of samples 80% To pre train the architecture embedding layer , And above 【Stage 1: Predictor pretraining】 Draw the remaining 20% Validation of the .

- In evolutionary search based on predictor , This article sets up four different FLOPs constraint :450M、550M、650M and 750M, Four models with the same precision predictors are found ( namely FBNetV3-B/C/D/E). This paper further reduces and enlarges the minimum and maximum models , And generate FBNetV3-A and FBNetV3F/G, To adapt to more usage scenarios , Use [efficientnet] Composite scaling proposed in .

- ** Training settings ,** For model training , This paper uses a training process based on two-step distillation :

- (1) First, the largest model is trained with a search formula with basic fact labels ( namely FBNetV3-G).

- (2) then , Train all models by distillation ( Include FBNetV3-G In itself ), This is a [Once for all: Train one network and specialize it for efficient deployment,Bignas: Scaling up neural architecture search with big single-stage models] Typical training techniques used in .

- And [once for all,Bignas] In situ distillation is different in , The teacher model here is from the step (1) The ImageNet Preliminary training FBNetV3-G. Training loss is the sum of two components : Press 0.8 shrink discharge Of steamed Distillation damage loss and Press 0.2 shrink discharge Of hand over fork entropy damage loss \textcolor{blue}{ Press 0.8 Scale the distillation losses and press 0.2 Scaled cross entropy loss } Press 0.8 shrink discharge Of steamed Distillation damage loss and Press 0.2 shrink discharge Of hand over fork entropy damage loss . In the process of training , stay 8 Nodes and each node 8 individual GPU Distributed training using synchronous batch normalization . stay 5 individual epoch After preheating , With each epoch 0.9875 Learning rate decay factor training 400 individual epoch Model of .

- In this paper, we use the FBNetV3-B and FBNetV3-E To train the scale model FBNetV3-A and FBNetV3-F/G, Only will FBNetV3-F/G The random depth drop ratio of increases to 0.2. See Appendix for more training details A.5.

- Searched models : This article compares the searched model with the figure above 【ImageNet accuracy vs. model FLOPs comparison of FBNetV3 with other efficient convolutional neural networks.】 Other relevant in NAS Benchmark and handmade compact neural network are compared , The detailed performance index comparison is listed in the following table , In the table below , According to the model Highest accuracy The models are grouped .

Comparison of different compact neural networks . For the baseline , This article quotes from the original paper ImageNet Statistics on the Internet . The result of this paper is bold .*: Group parameterization . Please see the A.6, Learn about the discussion of training techniques versus other efficiency nets .

- In all the existing efficient models , Such as EfficientNet、MobileNetV3 、ResNeSt and FBNetV2 , The model searched in this paper is in accuracy - Substantial improvements have been made in terms of efficiency tradeoffs .

- for example , Under the low cost system ,FBNetV3-A Use only 357M FLOPs And that's what happened 79.1% Top precision of ( It is similar to FLOPs Of MobileNetV3-1.25x high 2.5%). In the field of high precision ,FBNetV3-E It's more accurate than ResNeSt-50 high 0.2 times , and FBNetV3-G The precision of EfficientNetB4 Quite a , but FLOPs Than ResNeSt-50 Less 7 times . Please note that , This paper uses a larger teacher model for distillation , Further improved FBNetV3 The accuracy of the , See Appendix A.7 Shown .

Transferability of the searched models

- CIFAR-10 Classification on : This article further extends the CIFAR-10 Search on the dataset FBNetV3, The dataset has data from 10 Category 60K Images , To verify its transferability . Please note that , And [efficientnet] Enlarge the basic input resolution to 224×224 Different , In this paper, the original basic input resolution is kept at 32×32, And enlarge the input resolution of the larger model according to the scale .

- This article also replaces the second two-step module with a one-step module , To accommodate low resolution input . For the sake of simplicity , Distillation is not included . The performance of different models is compared in the figure below . Again , The model searched in this paper is obviously better than the net efficiency baseline .

CIFAR-10 The precision on the dataset is the same as FLOPs Compare .

- COCO Detection on : In order to further verify the portability of the searched model on different tasks , This article USES the FB-NetV3 As an alternative to the backbone feature extractor , Used to have conv4 (C4) The trunk faster R-CNN, And in COCO Compare with other models on the test data set . In this paper [Detectron2.](https://github. com/facebookresearch/detectron2) Most of the workout settings in , have 3 Times the training iteration , At the same time, synchronous batch normalization , Initialize the learning rate to 0.16, open EMA, Non maximum suppression (NMS) Reduced to 75, And change the learning rate plan to cosine after warming up . Please note that , This article only migrates the searched architectures , And use the same training protocol for all models .

- This article shows detailed in the following table COCO detection result . And EfficientNet backbones comparison , At a similar or higher level mAP Next , In this paper, the FBNetV3 take FLOPs And the number of parameters are reduced respectively 18.3% and 34.1%.

COCO On different trunks faster RCNN Target detection results of .

Ablation study and discussions

- In this section , We will review the performance improvement brought by federated search 、 The importance of evolutionary search based on predictor , And the influence and universality of several training techniques .

Architecture and training recipe pairing.

- The method in this paper produces different training formulas for different models . for example , We observe that smaller models tend to prefer smaller regularization ( for example , Smaller random depth drop ratio and mixing ratio ). To illustrate the importance of neural architecture recipe search , This article exchanges for FBNetV3-B and FBNetV3-E Search for training recipes , A significant decrease in the accuracy of both models was observed , As shown in the following table . This highlights the importance of the right architectural solution pairing , Emphasize the tradition NAS The decline of : Ignoring the training program and only searching the network architecture can not get the best performance .

Precision comparison of search models with exchange training recipes .

Predictor-based evolutionary search improvements.

- Evolutionary search based on predictor has made substantial improvement on the basis of constrained iterative optimization . To prove it , This paper compares In the same way FLOPs The best performance candidate obtained from the second search stage under constraints is the same as that of the final search FBNetV3( See the table below ). The observed , If the third level is discarded , The accuracy will drop by as much as 0.8%. therefore , The third search phase, though, costs very little ( A few minutes CPU Time ), But it is also crucial to the performance of the final model .

Performance improvement of prediction based evolutionary search .*: A model derived from constrained iterative optimization .

Impact of distillation and model averaging

- The following table shows different training configurations FBNetV3-G Model performance on , Where baseline refers to the absence of EMA Or the ordinary training of distillation .EMA It brings higher accuracy , Especially in the middle of training . This paper assumes that EMA Is essentially a powerful “ aggregate ” Mechanism , So as to improve the accuracy of a single model . It is also observed that distillation brings about significant performance improvement . This is related to [once for all,Bignas] The observations in are consistent . Note that the teacher model is pre trained FBNetV3-G, therefore FBNetV3-G It's self mention .EMA Combined with distillation, the former 1 The accuracy of the name ranges from 80.9% Up to 82.8%.

Use EMA And distillation to improve performance .*: Distillation training

Conclusion

- seeing the name of a thing one thinks of its function , Previous neural structure search methods only search structures , Use a fixed set of training parameters ( namely “ Training methods ”). therefore , Previous approaches have ignored more precise combinations of architectural copies . However , In this paper, the NARS No, , It is the first joint search on both architecture and training methods ImageNet Algorithms for such large data sets . It's crucial ,NARS The predictor for “free” Schema statistics ( namely FLOPs and # Parameters ) pretraining , To significantly improve the sampling efficiency of the predictor . After training and using the predictor , Got FBNetV3 Architecture - The recipe is right in ImageNet It has reached the most advanced level in classification FLOP precision .

A. Appendix

A.1. Training recipe used in Table 1

- formula 1 And formula 2 Share the same batch 256、 Initial learning rate 0.1、 The weight attenuation is 4×105、SGD Optimizer and cosine learning rate table . formula 1 Training models 30 individual epoch, formula 2 Training models 90 individual epoch. This article will not be published in Recipe-1 or Recipe-2 Introducing training techniques into , Such as dropout、 Random depth and mixup.

- When training recipes -1 And formula -2 Use the same #epoch But when different weights decay , The same observations were made : The attenuation of current weight is 1 e − 4 1e^{-4} 1e−4 and 1 e − 5 1e^{-5} 1e−5 when ,ResNet18 (1.4x Width ) Accuracy ratio of ResNet18 (2x depth ) high 0.25% And low 0.36%.

A.2. Base architecture in recipe-only search

- This article shows the infrastructure for recipe search only in the following table (FBNetV2-L2 Scaled version of ), The input resolution is 256×256.

Only the baseline schema used in the recipe search . Module symbols and tables 【The network architecture configuration and search space in our experiments.】 identical . If the input and output channels are equal , Skipping a block refers to the same connection , It is 1×1 conv.

- This is the infrastructure used in the training recipe search above . It's in ImageNet It has been realized. 79.1% Top level accuracy , And used FBNetV2 The original training method . Use the found training recipe , It achieved 79.9% Of ImageNet top-1 Accuracy rate .

A.3. Search settings and details

In the recipe only search experiment , This article will early-stop The level dependent threshold is set to 0.92, And find the corresponding early-stop The period is 103. In evolutionary search based on predictor , In this paper, the population of the initial generation is set as 100( From constrained iterative optimization 50 Best execution candidates and 50 Randomly generated samples ).

From each candidate 24 Subobject , And select the former for the next generation 40 Name candidate . Most of the settings are shared by the joint search of building and training recipes , Except that the era of early cessation is 108. The accuracy predictor consists of an embedded layer ( Architecture encoder layer ) And an additional hidden layer . For federated search , The embedded width is 24( Be careful , For recipe only search , Embedded layer without pre training ). For federated search , In this paper, the minimum and maximum FLOPs The constraints are set to 400M and 800M.

Select in constrained iterative optimization m The best sample of personality performance consists of two steps :

- (1) take FLOP The range is divided equally m A storehouse ,

- (2) Select the sample with the highest prediction score in each warehouse .

This article shows the detailed search training recipe in the following table . Also publish the searched models .

Searched training recipe.

A.4. Comparison between recipe-only search and hyperparameter optimizers

- Many famous hyperparametric optimizers (ASHA、Hyberband、PBT) All in CIFAR10 On the assessment . One exception is [Using a thousand optimization tasks to learn hyperparameter search strategies], It does this by searching the optimizer 、 Learning rate 、 Weight attenuation and momentum , Reported ImageNet On ResNet50 Of 0.5% The gain of . by comparison , In the same space ( Not included EMA) The recipe search under will ResNet50 Has improved the accuracy of 1.9%, from 76.1% Up to 78.0%.

A.5. Training settings and details

For the final model , This article uses the with 8 Distributed training of nodes , And the learning rate is increased proportionally by the number of distributed nodes ( for example , about 8 Node training is 8 times ). The batch size of each node is set to 256. This paper uses label smoothing and automatic enhancement in training . Besides , In this paper, the weight attenuation and momentum of batch standardization parameters are set to zero and 0.9.

This article will EMA The model is implemented as a copy of the original network ( They are t = 0 Share the same weight ). After each reverse transfer and model weight update , This article will EMA The weight is updated to :

w t + 1 e m a = α w t e m a + ( 1 − α ) w t + 1 , ( 2 ) w_{t+1}^{ema}=αw_t^{ema}+(1-α)w_{t+1},(2) wt+1ema=αwtema+(1−α)wt+1,(2)

among w t + 1 e m a w^{ema}_{t+1} wt+1ema、 w t e m a w^{ema}_t wtema and w t + 1 w_{t+1} wt+1 Means step t+1 Of EMA The weight 、 step t Of EMA Weight and t+1 Weight of the model . In this paper ImageNet、CIFAR-10 and COCO In the experiments on 0.99985、0.999 and 0.9998 Of EMA attenuation α. This article provides further information on FBNetV3-G Training curve .

Training curve of the search recipe on FBNetV3-G.

Baseline model ( for example AlexNet、ResNet、DenseNet and ResNeXt) use PyTorch Open source implementation , No architectural changes . The input resolution is 224×224.

A.6. More discussions on training tricks

This article learned that EfficientNet Do not use distillation . For a fair comparison , This paper reports the non - distilled FBNetV3 precision . This article provides an example in the following table : And EfficientNet comparison , Without distillation ,FBNetV3 Achieve higher accuracy , meanwhile FLOPs Less 27%. However , All the training techniques in this article ( Include EMA And distillation ) Are used in other baselines , Include BigNAS and OnceForAll.

Comparison of models with and without distillation .

Generality of stochastic weight averaging via EMA.

It is observed that , adopt EMA Random weighted average can significantly improve the accuracy of classification tasks , As mentioned before [Large scale gan training for high fidelity natural image synthesis,Momentum contrast for unsupervised visual representation learning]. This paper assumes that this mechanism can be used as a general technology to improve other DNN Model . To test this , This article USES the ResNet50 and ResNet101 The trunk , stay COCO Training on object detection RetinaNet. This article follows most of the default training settings , But it introduces EMA And cosine learning rate . The training curve and behavior similar to the classification task were observed , As shown in the figure below :

have ResNet101 The trunk RetinaNet stay COCO Training curve on object detection .

The generated has ResNet50 and ResNet101 Of the main chain RetinaNets Reach respectively 40.3 and 41.9 Of mAP, Both are much better than [Detectron2] The best value reported in (ResNet50 and ResNet101 Respectively 38.7 and 40.4). A promising future direction is to study such technologies , And extend it to other DNNs And applications .

A.7. Further improvements on FBNetV3

- This paper proves that Using a teacher model with higher accuracy will further improve FBNetV3 The accuracy of the . This article USES the RegNetY -32G FLOPs( front 1 Name accuracy 84.5%) As a teacher model , And extract all FBNetV3 Model .

- This article shows all the derived models in the following figure , It is observed that the accuracy gains of all models are consistent , by 0.2% - 0.5%.

- ImageNet Precision and model FLOPs fbnet-v3( from giant RegNet-Y Extraction from model ) Comparison with other efficient convolutional neural networks .

边栏推荐

- 文献阅读---优化RNA-seq研究以研究除草剂耐药性(综述)

- Authorization of database

- Distributed e-commerce project grain mall learning notes < 3 >

- 【flask入门系列】flask处理请求和处理响应

- How to add a table to a drawing in ggplot2

- 少儿编程对国内传统学科的推进作用

- [system architecture] - how to evaluate software architecture

- golang正則regexp包使用-06-其他用法(特殊字符轉換、查找正則共同前綴、切換貪婪模式、查詢正則分組個數、查詢正則分組名稱、用正則切割、查詢正則字串)

- Good news | congratulations on the addition of 5 new committers in Apache linkage (incubating) community

- Teach you to quickly record sounds on PC web pages as audio files

猜你喜欢

【论文笔记】Supersizing Self-supervision: Learning to Grasp from 50K Tries and 700 Robot Hours

Learn from Taiji makers - mqtt (V) publish, subscribe and unsubscribe

UE5全局光照系統Lumen解析與優化

MySQL增删查改(初阶)

Matlab| short term load forecasting of power system based on BP neural network

小 P 周刊 Vol.10

Utonmos: digital collections help the inheritance of Chinese culture and the development of digital technology

Stm32cubemx: watchdog ------ independent watchdog and window watchdog

《你不可不知的人性》经典语录

网络PXE启动WinPE,支持UEFI和LEGACY引导

随机推荐

P2483-[template]k short circuit /[sdoi2010] Magic pig college [chairman tree, pile]

Is it safe to open an account in flush online? How to open a brokerage account online

Dual batteries in series, hardware design

Oracle exercise

Clion项目中运行多个main函数

【论文笔记】Deep Reinforcement Learning Control of Hand-Eye Coordination with a Software Retina

Dreamcamera2 record key prompt sound into video during video recording

Vulhub replicate an ActiveMQ

Cox 回归模型

分布式电商项目 谷粒商城 学习笔记<3>

Drawing structure diagram with idea

使用 AnnotationDbi 转换 R 中的基因名称

Overview of orb-slam3 paper

Leetcode 176 The second highest salary (June 25, 2022)

Additional: brief description of hikaricp connection pool; (I didn't go deep into it, but I had a basic understanding of hikaricp connection pool)

[machine learning] case study of college entrance examination prediction based on multiple time series

如何在 ggplot2 中向绘图中添加表格

解析社交机器人中的技术变革

在UE内添加控制台工程(Programs)

浅谈虚拟内存与项目开发中的OOM问题