Learning to Identify Follow-Up Questions in Conversational Question Answering

2022-06-26 00:35:00 【SU_ ZCS】

Title: Learning to Identify Follow-Up Questions in Conversational Question Answering

Authors: Souvik Kundu, Qian Lin, Hwee Tou Ng

Venue: ACL

Task: follow-up question identification

Paper: Learning to Identify Follow-Up Questions in Conversational Question Answering - ACL Anthology

Code: https://github.com/nusnlp/LIF

Abstract

Despite recent progress in conversational question answering (QA), most previous work does not focus on follow-up questions. Practical conversational QA systems often receive follow-up questions during an ongoing conversation, and it is critical for a system to determine whether a question is a follow-up to the current conversation so that it can find the answer more effectively afterwards. This paper introduces a new follow-up question identification task and proposes a three-way attentive pooling network, which captures pairwise interactions among the associated passage, the conversation history, and the candidate follow-up question to decide whether the candidate is a suitable follow-up. This lets the model capture topic continuity and topic shift when scoring a particular candidate follow-up question. Experiments show that the proposed three-way attentive pooling network significantly outperforms all baseline systems.

1. Introduction

Conversational question answering (QA) mimics the way humans converse naturally. It has recently attracted a lot of attention: a system has to answer a series of interrelated questions from an associated text passage or a structured knowledge graph. However, most conversational QA tasks do not explicitly require a model to identify follow-up questions. A practical conversational QA system must understand the conversation history well and recognize whether the current question is a follow-up of that particular conversation. Consider a user conversing with a machine (e.g., Siri, Google Home, Alexa, Cortana): the user asks a question and the machine answers it. When the user asks a second question, the machine must know whether it is a follow-up of the first question and its answer. This has to be decided for every question the user asks during the ongoing conversation. By deciding whether a question is a follow-up, the machine determines whether the conversation history is relevant to it, and then picks a suitable answer-finding strategy: ① if it is a follow-up question, the QA system first uses an information retrieval (IR) engine to retrieve some relevant documents to answer the question; ② if it is not a follow-up question, the QA system communicates with the IR engine to retrieve other supporting documents for the question.

Figure 1 gives some examples that illustrate the follow-up question identification task in a conversational reading comprehension setting. The paper presents a new dataset for learning to identify follow-up questions, named LIF. Given a text passage as knowledge and a series of question-answer pairs as conversation history, a model has to decide whether a candidate follow-up question is valid or invalid by identifying its topic continuity or topic shift. For example, for candidate ① in Figure 1, the model needs to capture topic continuity from turn (1) of the conversation history (the first film is Flesh and Blood) and topic shift from turn (2) (the type of films). Candidate ② is invalid because the associated passage gives no information about his last years. The last candidate, ③, is invalid because Verhoeven is a "he", not a "she".

Passage: … Verhoeven's first American film was Flesh and Blood (1985), starring Rutger Hauer and Jennifer Jason Leigh. Verhoeven moved to Hollywood for a wider range of opportunities in filmmaking. Working in the United States, he changed his style considerably, directing the big-budget, very violent, special-effects-heavy blockbusters RoboCop and Total Recall. RoboCop … Verhoeven followed these successes with the equally intense and provocative Basic Instinct (1992) … received two Academy Award nominations, for Film Editing and Original Music …

Conversation history:
(1) Q: What was the first movie Verhoeven made in the United States? A: Flesh and Blood
(2) Q: What kind of movies did he make? A: Big-budget, very violent, special-effects-heavy

Candidate follow-up questions:
① When was his first film released? - Valid
② Did he make any movies in his last years? - Invalid
③ What did she do after her debut? - Invalid

Figure 1: Examples illustrating the follow-up question identification task.

Some earlier studies focused on determining which part of the conversation history matters for handling follow-up questions. However, the recently proposed neural conversational QA models do not explicitly focus on follow-up questions. This paper proposes a three-way attentive pooling network for follow-up question identification in a conversational reading comprehension setting. It evaluates each candidate follow-up question from two perspectives: topic shift and topic continuity. The proposed model uses two attention matrices, both conditioned on the associated passage, to capture topic shift in a follow-up question. It relies on a third attention matrix to capture topic continuity directly from the previous question-answer pairs in the conversation history. For comparison, several strong baseline systems are also studied for follow-up question identification.

The contributions of the paper are as follows:

① A new task is proposed: follow-up question identification in a conversational reading comprehension setting, which supports automatic evaluation.

② A new dataset, LIF, is presented. It is derived from the recently released conversational QA dataset QuAC.

③ A three-way attentive pooling network is proposed that aims to capture topic shift and topic continuity for follow-up question identification. The proposed model significantly outperforms all baseline systems.

2. Task Overview

Given a text passage, a series of question-answer pairs in the conversation history, and a candidate follow-up question, the task is to decide whether the candidate is a valid follow-up question. Notation:

① Passage: P, consisting of T tokens.

② Previous questions: {Q1, Q2, ..., QM}; answers to the previous questions: {A1, A2, ..., AM}. All previous questions and their answers are concatenated with special separators: Q1 | A1 || Q2 | A2 || ... || QM | AM

③ Combined length of the previous question-answer pairs in the conversation history: U

④ Candidate follow-up question: C. The task is binary classification: classify C as valid or invalid.

⑤ Length of the candidate follow-up question: V
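The concatenation in ② can be sketched in a few lines of Python. This is only an illustration of the string layout: the function name `join_history` and the exact whitespace around the separators are assumptions, not taken from the paper or its code.

```python
def join_history(questions, answers, qa_sep=" | ", turn_sep=" || "):
    """Flatten previous turns into 'Q1 | A1 || Q2 | A2 || ...'.

    The separator strings follow the notation above; their exact
    spacing is an assumption made for this sketch.
    """
    if len(questions) != len(answers):
        raise ValueError("each previous question needs exactly one answer")
    return turn_sep.join(q + qa_sep + a for q, a in zip(questions, answers))


history = join_history(
    ["What was the first movie Verhoeven made in the United States?",
     "What kind of movies did he make?"],
    ["Flesh and Blood", "Big-budget, very violent, special-effects-heavy"],
)
print(history)
```

The combined length U would then be the token count of this string after tokenization.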

3. The LIF Dataset

This section describes how the authors prepared the LIF dataset and then analyzes it.

3.1 Data preparation

The LIF dataset is built on top of the QuAC dataset. Every question in QuAC is assigned one of three follow-up labels: "should ask", "could ask", or "should not ask" a follow-up question. The "should ask" follow-up instances are used to build the valid instances of the dataset. Because the QuAC test set is hidden (the official Question Answering in Context website releases only the training and development sets), the QuAC development set is split into two halves to produce the LIF development and test sets. The split is made at the passage level, so that the passages used in the development and test sets do not overlap.

Positive samples

To create each LIF instance from QuAC, whenever a question labeled "should ask" is encountered, the associated passage and the previous question-answer pairs are collected, and the question is taken as a valid candidate follow-up question.

Negative samples

Invalid follow-up questions are drawn from two sources:

① Questions from other conversations in QuAC, which can act as distractors. Sampling from this first source involves a two-step filtering process. First, the cosine similarity between the associated passage and every question from other conversations is computed using embeddings generated by InferSent, and the top 200 questions with the highest similarity scores are selected. In the second step, the valid candidate follow-up question is concatenated with the question-answer pairs in the conversation history to form an augmented follow-up question. The token overlap count between each ranked question from the first step and the augmented follow-up question is then computed, and normalized by dividing it by the length of the ranked question (after removing stop words). For each valid instance, a threshold is fixed, and at least one but at most two questions with the highest normalized token overlap count are taken as invalid candidate follow-up questions.

② Non-follow-up questions from the same QuAC conversation that occur after the valid follow-up question, which introduce potential distractors from the same conversation. The remaining question-answer pairs after the valid follow-up question are checked. If a later question is labeled "should not ask", that question is marked as an invalid candidate. Throughout the sampling of invalid questions, questions containing phrases such as "what else", "any other", and "interesting aspects" are excluded, to avoid selecting questions that may be valid follow-ups (for example, "Are there any other interesting aspects of this article?").
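The second filtering step of source ① and the phrase exclusion can be sketched as follows. This is a minimal sketch under stated assumptions: the stop-word list is a tiny illustrative one (the source does not say which list was used), tokenization is a simple regular expression, and the names `content_tokens`, `normalized_overlap`, and `is_excluded` are hypothetical.

```python
import re

# Tiny illustrative stop-word list; the source does not name the list actually used.
STOP_WORDS = {"a", "an", "the", "of", "in", "to", "did", "was", "is",
              "he", "his", "what", "any"}

# Phrases named in the text that signal a generic, possibly valid follow-up.
EXCLUDED_PHRASES = ("what else", "any other", "interesting aspects")


def content_tokens(text):
    """Lowercase, split on non-alphanumerics, and drop stop words."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return [t for t in tokens if t not in STOP_WORDS]


def normalized_overlap(ranked_question, augmented_follow_up):
    """Token overlap count divided by the ranked question's length (stop words removed)."""
    cand = content_tokens(ranked_question)
    if not cand:
        return 0.0
    reference = set(content_tokens(augmented_follow_up))
    overlap = sum(1 for t in cand if t in reference)
    return overlap / len(cand)


def is_excluded(question):
    """True if the question contains one of the generic follow-up phrases."""
    q = question.lower()
    return any(phrase in q for phrase in EXCLUDED_PHRASES)


augmented = ("When was his first film released? "
             "What was the first movie Verhoeven made in the United States? "
             "Flesh and Blood")
score = normalized_overlap("When was the first album released?", augmented)
print(score)  # 3 of the 4 content tokens overlap
```

Questions would then be ranked by this score, and one or two of the highest-scoring ones that pass the threshold and the `is_excluded` check kept as invalid candidates.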

For the training and development sets, all candidate follow-up questions from other conversations and from the same conversation are combined. Three test sets are kept, with candidates from different sources: from both other conversations and the same conversation (Test-I), from other conversations only (Test-II), and from the same conversation only (Test-III). Table 1 gives the statistics of the whole dataset. 100 invalid follow-up questions were randomly sampled from Test-I and checked manually, which verified that 97% of them are truly invalid.

Table 1: LIF dataset statistics. FUQ†: follow-up question.

3.2 Dataset challenges

To identify whether a question is a valid follow-up question, a model must capture its relevance to the associated passage and to the conversation history. The model has to determine whether the subject of the question is the same as in the associated passage or the conversation history, and the use of pronouns (e.g., I, he, she) and possessive pronouns (e.g., my, his, her) often acts as a distraction. Such pronoun resolution is a key aspect of deciding the validity of a follow-up question. The model also needs to check whether the actions and characteristics of the subject described in the candidate follow-up question can be logically inferred from the associated passage or the conversation history. In addition, capturing topic continuity and topic shift is necessary to determine the validity of a follow-up question. The subjects and their actions or characteristics in invalid follow-up questions are often mentioned in the passage, but associated with different topics.

3.3 Data analysis

100 invalid instances were randomly sampled from Test-I and analyzed manually according to the attributes given in Table 2. 35% of the invalid questions have the same topic as the associated passage, and 42% require pronoun resolution. 11% have the same topic entity as the valid follow-up question, and 5% have the same topic entity as the last question in the conversation history. In 8% of the invalid questions the pronouns match those of the corresponding valid follow-up question, and in another 8% they match those of the last question in the conversation history. In 7% of the cases the question type is the same as that of the valid question, and in 6% it is the same as that of the last question in the conversation history. The authors also observe that 4% of the invalid questions mention the same actions as the corresponding valid question, and 3% the same actions as the last question in the conversation history. The distribution of these attributes shows how challenging the task is.

Table 2: Analysis of the LIF dataset. The percentages sum to more than 100% because many examples have multiple attributes. (Q~: invalid follow-up question; P: associated passage; G: valid follow-up question; L: last question in the conversation history.)

4. Three-way attentive pooling network

This section describes the three-way attentive pooling network. First, an embedding layer is applied to the associated passage, the conversation history, and the candidate follow-up question. They are then encoded to derive sequence-level encoding vectors. Finally, the proposed three-way attentive pooling network is applied to score each candidate follow-up question.

4.1 Embedding and encoding

For the difference between embedding and encoding, see the Jianshu post on encoding and embedding. For character-level embedding, see the CSDN blog post on character embedding in natural language processing.

Note: character embeddings (finer-grained than words) and word embeddings capture the semantic similarity between words (words with similar meanings lie closer together in the embedding space, so their vectors differ less). Encoding, in contrast, turns longer texts such as the conversation history and the follow-up question into sequence-level representations. The two are not the same: words are embedded first, and the text is then encoded on top of the embeddings. Both can be thought of as forms of encoding, with embedding additionally taking the semantic relations between words into account.

4.1.1 Embedding

Both character embeddings and word embeddings are used. (The authors also experimented with ELMo and BERT but observed no consistent improvement.) Similar to Kim (2014), character-level embeddings are obtained with a convolutional neural network (CNN). Characters are first embedded as vectors through a character-based lookup table and fed to the CNN, with the vector size acting as the CNN's input channel size. The CNN outputs are then max-pooled over the entire width to obtain a fixed-size vector for each token. A fixed-length word embedding vector for each token is taken from pre-trained GloVe vectors (Pennington et al., 2014). Finally, the word and character embeddings are concatenated to form the final embedding.
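The embedding step can be illustrated with a small NumPy sketch: a character lookup table, a 1-D convolution over character windows, max-pooling over the width, and concatenation with a word vector. All sizes except the 300-dimensional GloVe vector are toy assumptions, the weights are random rather than trained, the zero vector stands in for a real GloVe lookup, and reading the 100 dimensions as the number of CNN filters is also an assumption.

```python
import numpy as np

rng = np.random.default_rng(0)

CHAR_DIM, N_FILTERS, KERNEL = 8, 100, 3   # assumed toy sizes for this sketch
WORD_DIM = 300                            # GloVe size mentioned in the text

char_table = rng.normal(size=(128, CHAR_DIM))            # lookup table over ASCII codes
filters = rng.normal(size=(N_FILTERS, KERNEL, CHAR_DIM))  # random, untrained CNN filters


def char_embedding(token):
    """Character CNN followed by max-pooling over the whole width."""
    ids = np.array([min(ord(ch), 127) for ch in token], dtype=int)
    x = char_table[ids]                                   # (len, CHAR_DIM)
    if len(x) < KERNEL:                                   # zero-pad very short tokens
        x = np.vstack([x, np.zeros((KERNEL - len(x), CHAR_DIM))])
    windows = np.stack([x[i:i + KERNEL] for i in range(len(x) - KERNEL + 1)])
    conv = np.einsum("wkc,fkc->wf", windows, filters)     # (n_windows, N_FILTERS)
    return conv.max(axis=0)                               # fixed-size vector per token


def token_embedding(token, word_vector):
    """Concatenate the word vector with the character-level vector."""
    return np.concatenate([word_vector, char_embedding(token)])


vec = token_embedding("film", np.zeros(WORD_DIM))  # zero vector stands in for GloVe
print(vec.shape)
```

Max-pooling over the width is what makes the character vector independent of the token length, so every token ends up with the same embedding size.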

4.1.2 Joint encoding

Bidirectional LSTMs produce the sequence-level encodings of the conversation history, the candidate follow-up question, and the passage, denoted $Q \in \mathbb{R}^{U \times H}$, $C \in \mathbb{R}^{V \times H}$, and $D \in \mathbb{R}^{T \times H}$ respectively, where $H$ is the number of hidden units. The passage and the conversation history are then encoded jointly. A similarity matrix $A = D Q^{\top} \in \mathbb{R}^{T \times U}$ is derived, and a row-wise softmax over $A$ gives $R \in \mathbb{R}^{T \times U}$ (the raw similarity values differ in scale, so each row is normalized). The aggregated representation of the conversation history for all passage words is $G = R Q \in \mathbb{R}^{T \times H}$. The aggregated vectors in $G$ are concatenated with the corresponding passage vectors in $D$, and another BiLSTM is applied to obtain the joint representation $V \in \mathbb{R}^{T \times H}$.
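The aggregation step of the joint encoding can be sketched in NumPy, assuming the similarity matrix is the dot product of passage and history encodings (T x U), followed by a row-wise softmax. Random matrices stand in for the BiLSTM outputs, the sizes are toy values, and the second BiLSTM that produces the final joint representation is left out.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def aggregate_history(D, Q):
    """D: passage encoding (T, H); Q: history encoding (U, H)."""
    A = D @ Q.T                                  # similarity matrix, (T, U)
    R = softmax(A, axis=1)                       # row-wise normalization, (T, U)
    G = R @ Q                                    # history summary per passage word, (T, H)
    joint_input = np.concatenate([D, G], axis=1)  # (T, 2H), input to the next BiLSTM
    return R, G, joint_input


rng = np.random.default_rng(0)
T, U, H = 6, 4, 5                                # toy sizes
D = rng.normal(size=(T, H))
Q = rng.normal(size=(U, H))
R, G, joint_input = aggregate_history(D, Q)
print(R.shape, G.shape, joint_input.shape)
```

Each row of `R` is a distribution over history tokens, so each row of `G` is a weighted average of history encodings tailored to one passage word.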

4.1.3 Multi-factor attention

Multi-factor self-attentive encoding is then applied to the joint representation. If $m$ denotes the number of factors, the multi-factor attention $F^{[1:m]} \in \mathbb{R}^{T \times m \times T}$ is given by:

$$F^{[1:m]} = V \, W_f^{[1:m]} \, V^{\top}$$

Here $W_f^{[1:m]} \in \mathbb{R}^{H \times m \times H}$ is a three-way tensor. Max-pooling over the number of factors is applied to $F^{[1:m]}$, resulting in the self-attention matrix $F \in \mathbb{R}^{T \times T}$. $F$ is normalized with a row-wise softmax to obtain $\tilde{F} \in \mathbb{R}^{T \times T}$. The self-attentive encoding is then given as $M = \tilde{F} V \in \mathbb{R}^{T \times H}$. The self-attentive encoding vectors are concatenated with the joint encoding vectors, and a feed-forward neural network-based gating is applied to control the overall impact, resulting in $Y \in \mathbb{R}^{T \times 2H}$. The final passage encoding $P \in \mathbb{R}^{T \times H}$ is obtained by applying another BiLSTM layer on $Y$.
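The multi-factor step can be sketched with `np.einsum`, assuming the bilinear form in which each of the m factors has its own H x H weight slice. The weights here are random rather than learned, and the gating and the final BiLSTM are omitted, so this shows only the attention arithmetic.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_factor_self_attention(V, W):
    """V: joint representation (T, H); W: three-way tensor (H, m, H)."""
    # F_all[t, f, s] = V[t] @ W[:, f, :] @ V[s]
    F_all = np.einsum("th,hfk,sk->tfs", V, W, V)   # (T, m, T)
    F = F_all.max(axis=1)                          # max over factors, (T, T)
    F_tilde = softmax(F, axis=1)                   # row-wise softmax
    M = F_tilde @ V                                # self-attentive encoding, (T, H)
    return F_all, M


rng = np.random.default_rng(0)
T, H, m = 6, 5, 4                                  # m = 4 factors; T and H are toy sizes
V = rng.normal(size=(T, H))
W = rng.normal(size=(H, m, H))
F_all, M = multi_factor_self_attention(V, W)
gate_input = np.concatenate([M, V], axis=1)        # (T, 2H), what the gating would see
print(F_all.shape, M.shape, gate_input.shape)
```

Taking the maximum over factors lets each token pair be scored by whichever factor finds the strongest match.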

4.2 Three-way attentive pooling

Each candidate follow-up question is scored with the three-way attentive pooling network. The network architecture is shown in Figure 2.

Figure 2: Architecture of the three-way attentive pooling network.

Attentive pooling (AP) was first proposed by dos Santos et al. (2016) and used successfully for the answer sentence selection task. AP is essentially an attention mechanism that jointly learns the representations of a pair of inputs together with their similarity measurement. The main idea is to project the paired inputs into a common representation space so that they can be compared more plausibly, even when the two inputs are not semantically comparable, as with a question-answer pair. This paper extends the idea of the attentive pooling network to the proposed three-way attentive pooling network for the follow-up question identification task, where the model needs to capture the suitability of a candidate follow-up question by comparing it with the conversation history and the associated passage. In particular, the proposed model aims to capture topic shift and topic continuity in the follow-up question. Dos Santos et al. (2016) use a single attention matrix to compare a pair of inputs. In contrast, the proposed model relies on three attention matrices, two of which additionally make use of the associated passage (to capture topic shift). Moreover, compared with the model of dos Santos et al., the proposed model is developed for the more complex task of follow-up question identification. The paper scores each candidate follow-up question according to its relevance to the conversation history from two different perspectives: (1) considering the associated passage (the knowledge) and (2) not considering the passage.

Attention matrix computation

In this step, three attention matrices are computed to capture the similarity between the conversation history and the candidate follow-up question: two matrices that take the associated passage into account, and one that does not. The attention matrix $A_{q,p} \in \mathbb{R}^{T \times U}$ captures the contextual similarity between the conversation history and each token of the passage:

$$A_{q,p} = f_{attn}(Q, P)$$

where the function $f_{attn}(\cdot)$ can be written as $f_{attn}(Q, P) = P Q^{\top}$. Intuitively, $A_{q,p}(i, j)$ captures the contextual similarity score between the $i$-th token of the passage and the $j$-th token of the conversation history. Similarly, the attention matrix $A_{c,p} \in \mathbb{R}^{T \times V}$ captures the contextual similarity between the candidate follow-up question and the associated passage, with $A_{c,p}(i, j)$ the contextual similarity score between the $i$-th token of the passage and the $j$-th token of the candidate follow-up question:

$$A_{c,p} = f_{attn}(C, P)$$

Given $P$, the matrices $A_{q,p}$ and $A_{c,p}$ are used jointly to capture the similarity between $Q$ and $C$.

The matrix $A_{c,q} \in \mathbb{R}^{U \times V}$ captures the similarity scores between the candidate follow-up question and the conversation history without considering the associated passage:

$$A_{c,q} = f_{attn}(C, Q)$$
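The three attention matrices can be sketched in NumPy. The sketch assumes that f_attn is a plain dot product between token encodings, with rows indexing the tokens of its second argument; random matrices stand in for the encodings P, Q, and C, and the sizes are toy values.

```python
import numpy as np


def f_attn(X, Y):
    """Dot-product similarity: rows index tokens of Y, columns index tokens of X."""
    return Y @ X.T


rng = np.random.default_rng(0)
T, U, V_len, H = 7, 5, 3, 4          # passage, history, candidate lengths; hidden size
P = rng.normal(size=(T, H))          # passage encoding
Q = rng.normal(size=(U, H))          # conversation history encoding
C = rng.normal(size=(V_len, H))      # candidate follow-up question encoding

A_qp = f_attn(Q, P)                  # (T, U): passage tokens vs. history tokens
A_cp = f_attn(C, P)                  # (T, V): passage tokens vs. candidate tokens
A_cq = f_attn(C, Q)                  # (U, V): history tokens vs. candidate tokens
print(A_qp.shape, A_cp.shape, A_cq.shape)
```

`A_qp` and `A_cp` share their row axis (the passage), which is what lets the passage act as common ground when the history and the candidate are compared.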

Attention pooling

After the attention matrices are obtained, column-wise or row-wise max-pooling is applied. When the associated passage is considered in capturing the similarity between the conversation history and the candidate follow-up question, column-wise max-pooling is performed on $A_{q,p}$ and $A_{c,p}$, followed by softmax normalization, resulting in $r^{qp} \in \mathbb{R}^{U}$ and $r^{cp} \in \mathbb{R}^{V}$ respectively. For example, $r^{qp}$ is given by ($1 \le i \le U$):

$$r^{qp}_i = \frac{\exp\left(\max_{1 \le j \le T} A_{q,p}(j, i)\right)}{\sum_{k=1}^{U} \exp\left(\max_{1 \le j \le T} A_{q,p}(j, k)\right)}$$

The $i$-th element of $r^{qp}$ is the relative importance score of the contextual encoding of the $i$-th token of the conversation history with respect to the passage encoding. Likewise, the $i$-th element of $r^{cp}$ is the relative importance score of the contextual encoding of the $i$-th token of the candidate question with respect to the passage encoding. When the passage encoding is not considered, row-wise and column-wise max-pooling are performed on $A_{c,q}$ to generate $r^{qc} \in \mathbb{R}^{U}$ and $r^{cq} \in \mathbb{R}^{V}$ respectively. The $i$-th element of $r^{qc}$ is the relative importance score of the contextual encoding of the $i$-th token of the conversation history with respect to the candidate question encoding, and the $i$-th element of $r^{cq}$ is the relative importance score of the contextual encoding of the $i$-th token of the candidate question with respect to the conversation history encoding.
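The pooling step can be sketched as max-pooling along one axis followed by a softmax. The matrix shapes (T x U, T x V, U x V) are assumptions consistent with the vector sizes above, and applying the softmax to the two passage-free vectors as well is an assumption of this sketch, since the text only states it for the passage-conditioned ones.

```python
import numpy as np


def softmax(x):
    """Numerically stable softmax over a 1-D vector."""
    e = np.exp(x - x.max())
    return e / e.sum()


def attentive_pooling(A_qp, A_cp, A_cq):
    """A_qp: (T, U), A_cp: (T, V), A_cq: (U, V)."""
    r_qp = softmax(A_qp.max(axis=0))   # column-wise max over passage tokens -> (U,)
    r_cp = softmax(A_cp.max(axis=0))   # column-wise max over passage tokens -> (V,)
    r_qc = softmax(A_cq.max(axis=1))   # row-wise max over candidate tokens -> (U,)
    r_cq = softmax(A_cq.max(axis=0))   # column-wise max over history tokens -> (V,)
    return r_qp, r_cp, r_qc, r_cq


rng = np.random.default_rng(0)
T, U, V_len = 7, 5, 3                  # toy sizes
r_qp, r_cp, r_qc, r_cq = attentive_pooling(
    rng.normal(size=(T, U)),
    rng.normal(size=(T, V_len)),
    rng.normal(size=(U, V_len)),
)
print(r_qp.shape, r_cp.shape, r_qc.shape, r_cq.shape)
```

Each pooled vector is a distribution over the tokens of one sequence, weighting every token by its best match in the other sequence.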

Candidate scoring

In this step each candidate follow-up question is scored. Every candidate $C$ is scored from two perspectives, with and without the passage encoding $P$, by combining the two similarity functions $f_{sim}(C, Q \mid P)$ and $f_{sim}(C, Q)$, where the matrix $C$ is the encoding of the candidate. The first similarity function is $f_{sim}(C, Q \mid P) = x y^{\top}$, with $x = r^{qp} Q \in \mathbb{R}^{H}$ and $y = r^{cp} C \in \mathbb{R}^{H}$. The second is $f_{sim}(C, Q) = m n^{\top}$, with $m = r^{qc} Q \in \mathbb{R}^{H}$ and $n = r^{cq} C \in \mathbb{R}^{H}$. As noted above, the $i$-th element of $r^{qp}$ is the relative importance of the $i$-th conversation-history token with respect to the passage encoding; multiplying it by $Q$ therefore yields an importance-weighted summary of the whole conversation history with respect to the passage.
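Both similarity functions have the same shape: pool each sequence with its importance weights, then take a dot product. The sketch below shows that shared form only; how the two scores are combined into the final candidate score is not shown here, and the helper name `f_sim` with this signature is an assumption made for illustration.

```python
import numpy as np


def f_sim(r_hist, r_cand, Q, C):
    """Similarity between importance-weighted history and candidate summaries.

    r_hist: (U,) weights over history tokens, r_cand: (V,) weights over
    candidate tokens, Q: (U, H) history encoding, C: (V, H) candidate encoding.
    """
    x = r_hist @ Q          # (H,) weighted summary of the conversation history
    y = r_cand @ C          # (H,) weighted summary of the candidate question
    return float(x @ y)     # scalar similarity


rng = np.random.default_rng(0)
U, V_len, H = 5, 3, 4                     # toy sizes
Q = rng.normal(size=(U, H))
C = rng.normal(size=(V_len, H))

uniform_hist = np.full(U, 1.0 / U)        # stand-in weights; real ones come from pooling
uniform_cand = np.full(V_len, 1.0 / V_len)
score = f_sim(uniform_hist, uniform_cand, Q, C)
print(score)
```

Calling `f_sim` with the passage-conditioned weights gives the first perspective, and calling it with the passage-free weights gives the second.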

The model is trained with binary cross-entropy loss. For prediction, a threshold is chosen that maximizes the score on the development set. For test instances, this threshold decides whether a follow-up question is predicted as valid or invalid (see the reproduction notes).
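Training loss and threshold selection can be sketched in plain Python. Two assumptions are made here: the raw candidate score is passed through a sigmoid before the loss, and plain accuracy stands in for the development-set metric, since the text does not say which score is maximized. The function names are hypothetical.

```python
import math


def bce_loss(scores, labels):
    """Mean binary cross-entropy, applying a sigmoid to each raw score (assumed)."""
    total = 0.0
    for s, y in zip(scores, labels):
        p = 1.0 / (1.0 + math.exp(-s))
        p = min(max(p, 1e-12), 1.0 - 1e-12)      # avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(scores)


def accuracy(labels, preds):
    """Stand-in metric; the metric used in the paper may differ."""
    return sum(int(y == p) for y, p in zip(labels, preds)) / len(labels)


def best_threshold(scores, labels, metric=accuracy):
    """Try every observed score as a threshold and keep the best one."""
    best_t, best_value = None, -1.0
    for t in sorted(set(scores)):
        preds = [int(s >= t) for s in scores]    # 1 = valid, 0 = invalid
        value = metric(labels, preds)
        if value > best_value:
            best_t, best_value = t, value
    return best_t, best_value


dev_scores = [-2.0, -0.5, 0.3, 1.2, 2.5]         # made-up development scores
dev_labels = [0, 0, 1, 0, 1]
threshold, dev_value = best_threshold(dev_scores, dev_labels)
print(threshold, dev_value)
```

The chosen threshold is then frozen and applied unchanged to the test scores.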

5. Baseline models

The paper develops several rule-based, statistical machine learning, and neural baseline models. For all models, the threshold is determined from the best performance on the development set.

5.1 Rule-based models

Two models are based on word overlap counts: one between the candidate follow-up question and the passage, and one between the candidate follow-up question and the conversation history. The counts are normalized by the length of the candidate follow-up question.

Next, two models based on contextual similarity scores are built with InferSent sentence embeddings. They compare the candidate follow-up question with the associated passage and with the conversation history, respectively. The similarity score is the cosine similarity of the vectors.

Another rule-based model uses tf-idf weighted token overlap scores. The last question of the conversation history is added to the candidate follow-up question, and the tf-idf values of the words that overlap between this concatenated context and the passage are summed.

5.2 Statistical machine learning models

Two sets of handcrafted features are used for the statistical machine learning models. One set consists of tf-idf weighted GloVe vectors. Since 300-dimensional GloVe vectors are used, these features have dimension 300.

The other set consists of word overlap counts. The pairwise word overlap counts among the candidate follow-up question, the associated passage, and the conversation history are computed, so the overlap-count features have dimension 3. Logistic regression is run on the derived features.
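The three overlap-count features can be sketched as pairwise set intersections. Counting unique word types rather than token occurrences, and the regular-expression tokenizer, are assumptions of this sketch; the function names are hypothetical.

```python
import re


def word_set(text):
    """Lowercased set of alphanumeric word types."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap_features(candidate, passage, history):
    """Return [candidate-passage, candidate-history, passage-history] overlap counts."""
    c, p, h = word_set(candidate), word_set(passage), word_set(history)
    return [len(c & p), len(c & h), len(p & h)]


features = overlap_features(
    candidate="When was his first film released?",
    passage="The first American film by Verhoeven was Flesh and Blood",
    history="What was the first movie Verhoeven made in the United States? | Flesh and Blood",
)
print(features)  # [3, 2, 7]
```

A vector like this, one per instance, would be what the logistic regression model is fitted on.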

5.3 Neural models

Several neural baseline models are also developed. The inputs are first embedded (as described earlier), and the associated passage, the conversation history, and the candidate follow-up question are then concatenated. Sequence-level encoding is applied with either a BiLSTM or a CNN. For the CNN, equal numbers of unigram, bigram, and trigram filters are used, and their outputs are concatenated to obtain the final encoding. Next, either global max-pooling or attentive pooling is applied to obtain an aggregated vector representation, followed by a feed-forward layer that scores the candidate follow-up question. Let the sequence encoding of the concatenated text be $E \in \mathbb{R}^{L \times H}$, where $L$ is the length of the concatenated text and $e_t$ is the $t$-th row of $E$. The aggregated vector $e \in \mathbb{R}^{H}$ for attentive pooling can be obtained as

$$a_t = \frac{\exp(e_t w^{\top})}{\sum_{k=1}^{L} \exp(e_k w^{\top})}, \qquad e = a E$$

where $w \in \mathbb{R}^{H}$ is a learnable vector. A baseline model is also developed with BERT. All inputs are first concatenated, and BERT is applied to derive the contextual vectors. These are aggregated into a single vector with attention, and a feed-forward layer then scores each candidate follow-up question.
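Attentive pooling with a single learnable vector can be sketched in NumPy: each row of the sequence encoding is scored against the vector, the scores are normalized with a softmax, and the rows are averaged with those weights. The random `E` and `w` are stand-ins for the encoder output and the trained parameter, and the sizes are toy values.

```python
import numpy as np


def attention_pool(E, w):
    """E: sequence encoding (L, H); w: scoring vector (H,)."""
    logits = E @ w                       # one score per position, (L,)
    logits = logits - logits.max()       # stabilise before exponentiating
    a = np.exp(logits) / np.exp(logits).sum()
    return a, a @ E                      # attention weights (L,), pooled vector (H,)


rng = np.random.default_rng(0)
L_len, H = 8, 4                          # toy sizes
E = rng.normal(size=(L_len, H))
w = rng.normal(size=H)                   # would be learned during training
weights, pooled = attention_pool(E, w)
print(weights.round(3), pooled.shape)
```

With `w` set to zero the weights become uniform and the pooled vector reduces to the mean of the rows, which is a quick sanity check for the implementation.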

6. Experiments

This section presents the experimental setup, the results, and the performance analysis.

6.1 Experimental setup

GloVe vectors are not updated during training. Character-level embedding vectors have 100 dimensions. The number of hidden units in all LSTMs is 150 (H = 300). Dropout is applied with probability 0.3. The number of factors in the multi-factor attentive encoding is set to 4. The Adam optimizer is used with a learning rate of 0.001 and clipnorm 5. At most the 3 previous question-answer pairs of the conversation history are considered. Since this is a binary classification task, precision, recall, F1, and macro F1 are used as evaluation metrics. All scores reported are percentages.
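The evaluation metrics can be sketched in plain Python. Macro F1 is computed here as the unweighted mean of the F1 scores of the valid and invalid classes, which is the standard definition; that the paper uses exactly this variant is an assumption. The toy labels mimic a skewed set with 75% valid instances and a system that always predicts valid.

```python
def precision_recall_f1(labels, preds, positive):
    """Precision, recall, and F1 for one class treated as positive."""
    tp = sum(1 for y, p in zip(labels, preds) if y == positive and p == positive)
    fp = sum(1 for y, p in zip(labels, preds) if y != positive and p == positive)
    fn = sum(1 for y, p in zip(labels, preds) if y == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def macro_f1(labels, preds):
    """Unweighted mean of the valid-class (1) and invalid-class (0) F1 scores."""
    valid_f1 = precision_recall_f1(labels, preds, positive=1)[2]
    invalid_f1 = precision_recall_f1(labels, preds, positive=0)[2]
    return (valid_f1 + invalid_f1) / 2


labels = [1, 1, 1, 0]          # 75% valid, 25% invalid
always_valid = [1, 1, 1, 1]    # a system that predicts "valid" for everything
valid_p, valid_r, valid_f1 = precision_recall_f1(labels, always_valid, positive=1)
print(valid_f1, macro_f1(labels, always_valid))
```

The valid-class F1 stays high for this degenerate system while macro F1 is halved, which is why macro F1 is the fairer overall measure on skewed test sets.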

6.2 Results

Table 3 shows that the proposed model outperforms the competing baseline models on all test sets. Statistical significance is tested with a paired t-test and bootstrap resampling. The proposed model performs significantly better (p < 0.01) than the best baseline system, that is, the one with the highest Macro-F1 score on Test-I. In most cases, the LSTM-based neural baselines perform better than the rule-based and statistical machine learning models. On Test-III, the statistical models tend to predict "valid", and the number of valid instances is much higher than the number of invalid ones (about 75%:25%), which leads to a very high Valid F1 score. These baseline systems, although they do well on valid questions, perform poorly when measured with macro F1 over both valid and invalid follow-up questions. Macro F1 is the overall evaluation metric used to compare all systems. Overall, identifying follow-up questions from the same conversation (Test-III) is harder than from other conversations (Test-II).

Table 3: Comparison results for the follow-up question identification task. The three-way attentive pooling network is compared with several rule-based, statistical machine learning, and neural models (V: Valid; P: Precision; R: Recall; Psg: Passage; Hist: Conversation history).

The ablation study is shown in Table 4. The proposed model performs worst when the conversation history is not considered. This is because the question-answer pairs in the conversation history help determine topic continuity when identifying a valid follow-up question. Performance also drops when the associated passage (the knowledge) is not considered, because the passage helps capture topic shift. Performance drops as well when A_{c,q} is removed. That variant still performs better than a model that ignores the conversation history entirely, because the conversation history is taken into account in the passage encoding. Removing other components (such as the multi-factor attentive encoding, the joint encoding, or the character embeddings) also lowers performance.

Table 4: Ablation study on the development set.

6.3 qualitative analysis

The proposed model aims to capture topic continuity and topic shift by using a three-way attention pool network . Yes Aq,p and Ac,p Our attention pool is designed to capture topic shifts in subsequent questions of a given conversation history . Consider table 5 The first example in . When not considering paragraphs , It does not correctly identify subsequent problems , The model proposed in this paper correctly identifies the topic transfer to “ The duration of the disturbance after four days and restore order and take back the prison on September 13.”. In the second example , Although the model in this paper can be Schuur Correctly identify topic continuity , But a model without a historical dialogue cannot identify subsequent problems .

The author makes an error analysis , The model proposed in this paper fails to identify the follow-up problems . Randomly selected from the development set 50 An example of this (25 Effective and 25 Invalid ). The author found that 32% The example of requires pronoun parsing for the topic in the subsequent questions .38% An instance of requires action / The characteristics of the subject are verified ( for example did they have any children? vs. gave birth to her daughter).14% The error occurs when you need to match objects or predicates that appear in different forms ( for example ,hatred vs hate, television vs TV). For the rest 16% The case of , It does not properly capture topic transitions .

Table 5: Examples taken from the LIF development set in which the proposed model correctly identifies valid follow-up questions.

7. Related work

Many data-driven machine learning methods have proved effective for dialogue-related tasks, such as dialogue policy learning (Young et al., 2013), dialogue state tracking (Henderson et al., 2013; Williams et al., 2013; Kim et al., 2016), and natural language generation (Sordoni et al., 2015; Li et al., 2016; Bordes et al., 2017). Most recent dialogue systems are either not goal-oriented (for example, simple chatbots) or, if they are goal-oriented, domain-specific (for example, IT help desks). In the last few years there has been a surge of interest in conversational question answering. Saha et al. (2018) released the Complex Sequential Question Answering (CSQA) dataset, for learning to converse through a series of interrelated QA pairs by reasoning over a knowledge graph. Choi et al. (2018) released a large-scale conversational QA dataset, Question Answering in Context (QuAC), which mimics a teacher-student interaction. Reddy et al. (2019) released the CoQA dataset, on which many systems have been evaluated. Zhu et al. (2018) proposed SDNet, which integrates context into a traditional reading comprehension model. Huang et al. (2019) proposed a "flow" mechanism that incorporates the intermediate representations generated while answering previous questions through an alternating parallel processing structure. In a conversational setting, these models treat the previous QA pairs as dialogue history and focus on answering the next question, whereas this paper focuses on identifying follow-up questions. More recently, Saeidi et al. (2018) proposed a dataset over regulatory text that requires a model to ask follow-up clarification questions. However, the answers are restricted to yes or no, which makes the task rather limited. Moreover, while Saeidi et al. (2018) focus on generating clarification questions for a conversational question, this paper focuses on identifying whether a question is a follow-up to the conversation.

8 Conclusion

This paper proposes a new follow-up question identification task in a conversational setting. The authors developed a dataset, LIF, derived from the previously released QuAC dataset. Notably, the proposed dataset supports automatic evaluation. They also propose a novel three-way attention pooling network, which decides whether a candidate follow-up question is valid or invalid by considering the relevant knowledge in the passage together with the dialogue history. In addition, they developed several strong baseline systems and showed that the proposed three-way attention pooling network outperforms all of them. Integrating the three-way attention pooling network into an open-domain conversational QA system would be interesting future work (this paper only judges whether a candidate question is a follow-up to the preceding conversation; it does not answer the follow-up questions it identifies).

9 Reproduction

After downloading the source code, the initial contents are as follows: l2af is the model source code, training_configs holds the model configuration files, download_data.sh is the script for downloading the dataset, download_pretrained_models.sh downloads the models already trained by the authors (optional; you can train them yourself), evaluator.py is the code for evaluating model performance, README.md is the authors' guide to reproducing the models, and requirements lists the libraries needed to run the code (only three are given, so it is incomplete). To reproduce the results you can follow the authors' README.md or the steps below.

9.1 Download datasets (LIF)

The download_data.sh script, shown below, downloads two things: the word embeddings (marked in red) and the LIF dataset (marked in green). In principle you can run the script directly, but for some reason it failed when I did, so I downloaded the files manually from the links below. The red link can be accessed directly; the green dataset is hosted on Google Drive, so you may need a proxy or VPN to reach it.

After downloading, put the word embeddings glove.840B.300d.txt.gz under data/embeddings/, where data sits in the same directory as l2af and training_configs. The downloaded dataset is lif_v1; take the dataset folder inside it and put it under data/.

9.2 Preprocessing
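Before moving on, it may help to confirm that the downloaded files ended up where the later commands look for them. The sketch below is a small helper written for this post, not part of the LIF repository; the file names under data/dataset/ are the ones used by the prediction commands in section 9.4.

```python
from pathlib import Path

# Paths taken from the step above and from the predict commands in section 9.4.
EXPECTED = [
    "data/embeddings/glove.840B.300d.txt.gz",
    "data/dataset/dev.jsonl",
    "data/dataset/test_i.jsonl",
    "data/dataset/test_ii.jsonl",
    "data/dataset/test_iii.jsonl",
]


def missing_paths(repo_root):
    """Return the expected paths that do not exist under repo_root."""
    root = Path(repo_root)
    return [rel for rel in EXPECTED if not (root / rel).exists()]


# Run from the repository root; an empty list means everything is in place.
print(missing_paths("."))
```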

Library version :

allennlp==0.8.4

overrides==1.9

torch==1.0.0

torchvision==1.4.0

spacy==2.1.9
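To see whether the active environment matches these pins, a short check along the following lines can be used. It is only a sketch: the pins are copied verbatim from the list above, and check_pins is a helper name made up for this post.

```python
try:
    from importlib.metadata import PackageNotFoundError, version  # Python 3.8+
except ImportError:
    # Older interpreters: fall back to setuptools' pkg_resources.
    from pkg_resources import DistributionNotFound as PackageNotFoundError
    from pkg_resources import get_distribution

    def version(name):
        return get_distribution(name).version

# Pins copied from the list above.
PINS = {
    "allennlp": "0.8.4",
    "overrides": "1.9",
    "torch": "1.0.0",
    "torchvision": "1.4.0",
    "spacy": "2.1.9",
}


def check_pins(pins, get_version=version):
    """Map each package to (expected, installed); installed is None if missing."""
    report = {}
    for name, expected in pins.items():
        try:
            installed = get_version(name)
        except PackageNotFoundError:
            installed = None
        report[name] = (expected, installed)
    return report


for name, (expected, installed) in check_pins(PINS).items():
    status = "ok" if installed == expected else "MISMATCH"
    print(f"{name}: expected {expected}, installed {installed} [{status}]")
```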

① The following error is reported when installing allennlp:

Cannot uninstall 'greenlet'. It is a distutils installed project and thus we cannot accurately determine which files belong to it which would lead to only a partial uninstall.

Solution: pip install --ignore-installed greenlet

② The following error is reported after running the training command:

requests.exceptions.ConnectionError: HTTPSConnectionPool(host='raw.githubusercontent.com', port=443): Max retries exceeded with url: /explosion/spacy-models/master/shortcuts-v2.json (Caused by NewConnectionError('<urllib3.connection.VerifiedHTTPSConnection object at 0x000002368F92A7B8>: Failed to establish a new connection: [WinError 10061] No connection could be made because the target machine actively refused it'))

Solution: install en_core_web_sm manually.

Link: Baidu Netdisk

Extraction code: 0xim

Put the downloaded en_core_web_sm package in a convenient location and run pip install path/en_core_web_sm-2.2.5.tar.gz

9.3 Training

In training_configs/bert_baseline.json and l2af_3way_ap.json, adjust the GPU index. If you have only one GPU, the original configuration files will not work as they are; taking l2af_3way_ap.json as an example, with a single GPU change the setting to 0. I tried using multiple GPUs by changing it to [0,1,2,3], but the system reported ValueError: Found input variables with inconsistent numbers of samples, which I did not know how to solve, so I used a single GPU. The GPU was a P100.
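The same change can be scripted instead of edited by hand. The sketch below assumes that the GPU setting is the cuda_device key inside the trainer block, as is conventional for AllenNLP 0.8 configurations, and that the file is plain JSON without comments; both are assumptions to check against the actual file. with_cuda_device is a helper name invented here.

```python
import copy
import json


def with_cuda_device(config, device):
    """Return a copy of an AllenNLP-style config whose trainer uses `device`."""
    updated = copy.deepcopy(config)
    # Assumption: the GPU index lives under trainer.cuda_device.
    updated.setdefault("trainer", {})["cuda_device"] = device
    return updated


# Usage sketch (not executed here; assumes the file is plain JSON):
# with open("training_configs/l2af_3way_ap.json") as f:
#     config = json.load(f)
# with open("training_configs/l2af_3way_ap.json", "w") as f:
#     json.dump(with_cuda_device(config, 0), f, indent=2)
```

Returning a modified copy rather than mutating the loaded dictionary makes it easy to compare the old and new settings before writing the file back.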

① Train the three-way attention pooling model:

allennlp train training_configs/l2af_3way_ap.json -s models/3way_ap --include-package l2af

Training ran for 18 hours and then finished on its own.

② Train the BERT baseline:

allennlp train training_configs/bert_baseline.json -s models/bert_baseline --include-package l2af

BERT trained for more than two days with the AUC hovering around 89, so I stopped it.

9.4 Prediction

9.4.1 Three way attention pool

After training, the files of the three-way attention pooling model under models/3way_ap/ are as shown in the figure above. best.th holds the best parameters, which the system automatically packs into model.tar.gz (red box).

Run the following commands one at a time to obtain the files marked in blue above.

allennlp predict models/3way_ap/model.tar.gz data/dataset/dev.jsonl \
--output-file models/3way_ap/dev.jsonl \
--batch-size 32 \
--silent \
--cuda-device 0 \
--predictor l2af_predictor_binary \
--include-package l2af

allennlp predict models/3way_ap/model.tar.gz data/dataset/test_i.jsonl \
--output-file models/3way_ap/test_i.jsonl \
--batch-size 32 \
--silent \
--cuda-device 0 \
--predictor l2af_predictor_binary \
--include-package l2af

allennlp predict models/3way_ap/model.tar.gz data/dataset/test_ii.jsonl \
--output-file models/3way_ap/test_ii.jsonl \
--batch-size 32 \
--silent \
--cuda-device 0 \
--predictor l2af_predictor_binary \
--include-package l2af

allennlp predict models/3way_ap/model.tar.gz data/dataset/test_iii.jsonl \
--output-file models/3way_ap/test_iii.jsonl \
--batch-size 32 \
--silent \
--cuda-device 0 \
--predictor l2af_predictor_binary \
--include-package l2af

You can also use the models trained by the authors, which the script download_pretrained_models.sh downloads. Again, I could not run the script directly and downloaded them manually (links in the red box; a proxy or VPN is needed here too), then put the files in the appropriate folders.

Prediction then works the same way as described above.

9.4.2 BERT

Because I terminated BERT training manually, the system did not automatically pack best.th into model.tar.gz, so it has to be packed by hand. Download the BERT model trained by the authors (see above) and extract it to obtain the files shown below. Copy best.th from models/bert_baseline/ over the original weights.th, renaming it to weights.th. Repacking requires an archive tool; I used 7-Zip, downloaded from the Internet.
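As an alternative to a GUI archive tool, the repacking can be sketched with Python's standard tarfile module. This is not the workflow used above but a possible substitute: it assumes the directory being packed contains config.json, the checkpoint and a vocabulary/ folder, which is the layout an AllenNLP model archive uses (if the training directory lacks any of them, take them from the extracted author archive). pack_archive is a helper name invented for this post.

```python
import tarfile
from pathlib import Path


def pack_archive(serialization_dir, weights_file="best.th", archive_name="model.tar.gz"):
    """Write an AllenNLP-style archive: config.json, weights.th and vocabulary/."""
    root = Path(serialization_dir)
    archive_path = root / archive_name
    with tarfile.open(str(archive_path), "w:gz") as archive:
        archive.add(str(root / "config.json"), arcname="config.json")
        # Store the checkpoint under the name the archive loader expects.
        archive.add(str(root / weights_file), arcname="weights.th")
        archive.add(str(root / "vocabulary"), arcname="vocabulary")
    return archive_path


# Usage sketch (not executed here): pack_archive("models/bert_baseline")
```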

This gives you model.tar.gz. Put it under models/bert_baseline/ and run the following commands one at a time:

allennlp predict models/bert_baseline/model.tar.gz data/dataset/dev.jsonl \
--output-file models/bert_baseline/dev_predictions.jsonl \
--batch-size 32 \
--silent \
--cuda-device 0 \
--predictor l2af_predictor_binary \
--include-package l2af

allennlp predict models/bert_baseline/model.tar.gz data/dataset/test_i.jsonl \
--output-file models/bert_baseline/test_i_predictions.jsonl \
--batch-size 32 \
--silent \
--cuda-device 0 \
--predictor l2af_predictor_binary \
--include-package l2af

allennlp predict models/bert_baseline/model.tar.gz data/dataset/test_ii.jsonl \
--output-file models/bert_baseline/test_ii_predictions.jsonl \
--batch-size 32 \
--silent \
--cuda-device 0 \
--predictor l2af_predictor_binary \
--include-package l2af

allennlp predict models/bert_baseline/model.tar.gz data/dataset/test_iii.jsonl \
--output-file models/bert_baseline/test_iii_predictions.jsonl \
--batch-size 32 \
--silent \
--cuda-device 0 \
--predictor l2af_predictor_binary \
--include-package l2af

This produces the following files:

9.5 Evaluation

Run the following commands to evaluate the trained three-way attention pooling model (the previous prediction step produced a probability for each sample; you can inspect the jsonl files yourself). The results are shown below, taking test_i as an example.

python evaluator.py --dev_pred_file <absolute path>/LIF-master/models/bert_baseline/dev_predictions.jsonl \
--test_pred_file <absolute path>/LIF-master/models/bert_baseline/test_i_predictions.jsonl

python evaluator.py --dev_pred_file <absolute path>/LIF-master/models/bert_baseline/dev_predictions.jsonl \
--test_pred_file <absolute path>/LIF-master/models/bert_baseline/test_ii_predictions.jsonl

python evaluator.py --dev_pred_file <absolute path>/LIF-master/models/bert_baseline/dev_predictions.jsonl \
--test_pred_file <absolute path>/LIF-master/models/bert_baseline/test_iii_predictions.jsonl

In the output, red marks the threshold described at the end of Section 4.2, light blue the macro F1 on the validation set (dev), blue the three metrics on the validation set, orange the macro F1 on the test set test_i, and purple the three metrics on test_i.

Evaluating the BERT model works the same way.

9.6 Summary of results

Here ↑ indicates that the reproduced result is higher than the figure reported in the original paper, and ↓ that it is lower. The reasons for the differences are left for the reader to analyze.
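To make the roles of the threshold and macro F1 more concrete, here is a minimal sketch of how per-sample probabilities can be turned into a macro F1 score, with the threshold chosen on the validation set. It is an illustration under stated assumptions, not the logic of evaluator.py: the function names, the candidate thresholds and the toy inputs are all made up for this example.

```python
def macro_f1(probs, labels, threshold):
    """Macro F1 over the two classes after thresholding the probabilities."""
    preds = [1 if p >= threshold else 0 for p in probs]
    f1_scores = []
    for cls in (0, 1):
        tp = sum(1 for p, y in zip(preds, labels) if p == cls and y == cls)
        fp = sum(1 for p, y in zip(preds, labels) if p == cls and y != cls)
        fn = sum(1 for p, y in zip(preds, labels) if p != cls and y == cls)
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never occurs.
        f1_scores.append(2 * tp / denom if denom else 0.0)
    return sum(f1_scores) / len(f1_scores)


def best_threshold(probs, labels, candidates):
    """Pick the candidate threshold with the highest macro F1 on these samples."""
    return max(candidates, key=lambda t: macro_f1(probs, labels, t))


# Toy validation data, purely illustrative.
dev_probs = [0.9, 0.8, 0.3, 0.1]
dev_labels = [1, 1, 0, 0]
threshold = best_threshold(dev_probs, dev_labels, [0.05, 0.5, 0.95])
print(threshold, macro_f1(dev_probs, dev_labels, threshold))
```

In this kind of setup the threshold is selected once on the validation predictions and then reused unchanged on each test set, which is why the evaluation commands take both a dev and a test prediction file.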

边栏推荐

- CaMKIIa和GCaMP6f是一樣的嘛?

- [OEM special event] in the summer of "core cleaning", there are prize papers

- 11.1.1 overview of Flink_ Flink overview

- 实现异步的方法

- Darkent2ncnn error

- Summary of common terms and knowledge in SMT chip processing industry

- 从进程的角度来解释 输入URL后浏览器会发生什么?

- SMT葡萄球现象解决办法

- EasyConnect连接后显示未分配虚拟地址

- [advanced ROS] Lecture 1 Introduction to common APIs

猜你喜欢

把控元宇宙产业的发展脉络

元宇宙中的法律与自我监管

快手实时数仓保障体系研发实践

【TSP问题】基于Hopfield神经网络求解旅行商问题附Matlab代码

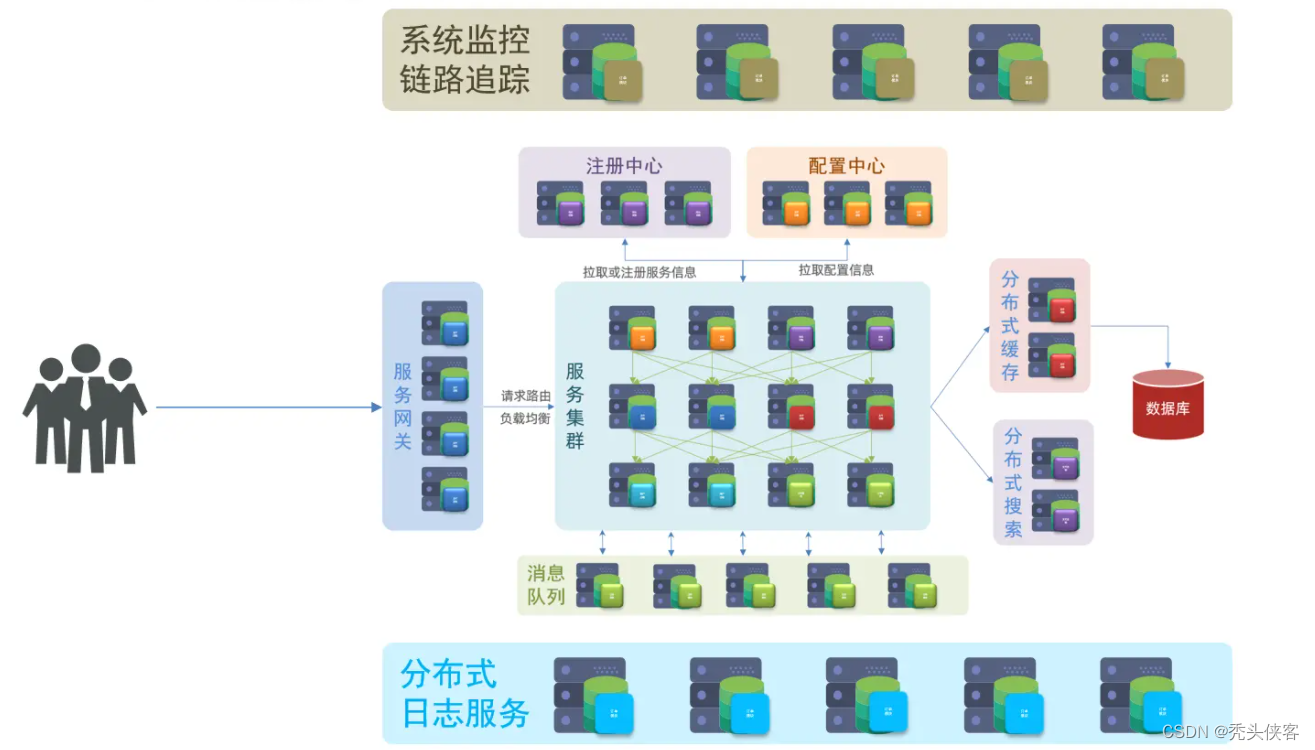

小红书微服务框架及治理等云原生业务架构演进案例

Apache foundation officially announced Apache inlong as a top-level project

mtb13_Perform extract_blend_Super{Candidate(PrimaryAlternate)_Unique(可NULL过滤_Foreign_index_granulari

What is micro service

No executorfactory found to execute the application

Law and self-regulation in the meta universe

随机推荐

SSL unresponsive in postman test

“Method Not Allowed“,405问题分析及解决

如何绕过SSL验证

"Method not allowed", 405 problem analysis and solution

性能领跑云原生数据库市场!英特尔携腾讯共建云上技术生态

Regular expression introduction and some syntax

mtb13_Perform extract_blend_Super{Candidate(PrimaryAlternate)_Unique(可NULL过滤_Foreign_index_granulari

Research and development practice of Kwai real-time data warehouse support system

《SQL优化核心思想》

Multi-Instance Redo Apply

Understanding of prototypes and prototype chains

Servlet response download file

Atlas200dk刷机

1-11Vmware虚拟机常见的问题解决

基于OpenVINOTM开发套件“无缝”部署PaddleNLP模型

flink报错:No ExecutorFactory found to execute the application

After being trapped by the sequelae of the new crown for 15 months, Stanford Xueba was forced to miss the graduation ceremony. Now he still needs to stay in bed for 16 hours every day: I should have e

Use js to obtain the last quarter based on the current quarter

Thrift getting started

快手实时数仓保障体系研发实践