
Mathematics in machine learning -- point estimation (IV): maximum a posteriori probability (MAP)

2022-06-24 16:32:00 【von Neumann】

Catalogue: 《Mathematics in Machine Learning》 general table of contents

Related articles:

· Point estimation (I): Basic concepts

· Point estimation (II): Method of moments

· Point estimation (III): Maximum likelihood estimation (Maximum Likelihood Estimate, MLE)

· Point estimation (IV): Maximum a posteriori estimation (Maximum A Posteriori Probability, MAP)

In the previous articles, we discussed frequentist statistics and approaches based on estimating a single value of $\theta$, then making all predictions based on that one estimate. Another approach is to consider all possible values of $\theta$ when making a prediction. The latter is the domain of Bayesian statistics. From the frequentist perspective, the true parameter value $\theta$ is fixed but unknown, while the point estimate $\hat{\theta}$ is a random variable, because it is a function of the dataset (which is regarded as random).

The Bayesian perspective is quite different. Bayesian statistics uses probability to reflect degrees of certainty in states of knowledge. The dataset is directly observed, so it is not random. The true parameter $\theta$, on the other hand, is unknown or uncertain, and is therefore represented as a random variable.

Before observing the data, we represent our knowledge of $\theta$ with the prior probability distribution $p(\theta)$. Machine learning practitioners generally choose a fairly broad (high-entropy) prior to reflect a high degree of uncertainty about $\theta$ before any data is observed. For example, one might assume a priori that $\theta$ is uniformly distributed over some finite interval. Many priors instead express a preference for "simpler" solutions, such as coefficients of smaller magnitude or a function that is closer to constant.

Now suppose we have a set of data samples $x_1, x_2, \dots, x_n$. Combining the data likelihood $p(x_1, x_2, \dots, x_n \mid \theta)$ with the prior via Bayes' rule recovers the effect of the data on our belief about $\theta$:

$$p(\theta \mid x_1, x_2, \dots, x_n) = \frac{p(x_1, x_2, \dots, x_n \mid \theta)\, p(\theta)}{p(x_1, x_2, \dots, x_n)}$$
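To make Bayes' rule concrete, here is a minimal sketch that evaluates the posterior on a discrete grid of $\theta$ values. It assumes coin-flip data (i.i.d. Bernoulli samples) and a uniform prior over the grid; the function names `grid_posterior` and `entropy` are illustrative, not from the original article. The sum over the grid stands in for the normalizing constant $p(x_1, \dots, x_n)$, and the entropy comparison shows the posterior concentrating relative to the flat prior.

```python
import math


def grid_posterior(data, grid_size=101):
    """Posterior p(theta | data) on a uniform grid over [0, 1].

    Assumes data is a list of 0/1 outcomes drawn i.i.d. from Bernoulli(theta)
    and a uniform prior over the grid points (an illustrative choice).
    """
    thetas = [i / (grid_size - 1) for i in range(grid_size)]
    prior = [1.0 / grid_size] * grid_size
    ones = sum(data)
    zeros = len(data) - ones
    # Likelihood p(x_1, ..., x_n | theta) for i.i.d. Bernoulli samples.
    likelihood = [t ** ones * (1 - t) ** zeros for t in thetas]
    # Numerator of Bayes' rule: likelihood times prior.
    unnormalized = [lik * p for lik, p in zip(likelihood, prior)]
    # Denominator p(x_1, ..., x_n): a sum over the grid replaces the integral.
    evidence = sum(unnormalized)
    return thetas, [u / evidence for u in unnormalized]


def entropy(dist):
    """Shannon entropy (in nats) of a discrete distribution."""
    return -sum(p * math.log(p) for p in dist if p > 0)


_, flat = grid_posterior([])  # no data yet: the posterior equals the prior
thetas, post = grid_posterior([1, 1, 0, 1, 1, 0, 1, 1])
print(thetas[post.index(max(post))])  # 0.75, the most probable grid value
print(entropy(flat) > entropy(post))  # True: observing data lowered the entropy
```

A finer grid approximates the continuous posterior more closely; for this likelihood a conjugate Beta prior would give the same result in closed form.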

In Bayesian estimation, the prior typically starts as a relatively uniform distribution or a high-entropy Gaussian, and observing data usually causes the posterior to lose entropy and concentrate around a few highly likely values of the parameters. Compared with maximum likelihood estimation, Bayesian estimation differs in two important ways:

- Unlike maximum likelihood, which makes predictions using a point estimate of $\theta$, the Bayesian approach makes predictions using the full distribution over $\theta$. For example, after observing $n$ samples, the predicted distribution over the next sample $x_{n+1}$ is:

  $$p(x_{n+1} \mid x_1, x_2, \dots, x_n) = \int p(x_{n+1} \mid \theta)\, p(\theta \mid x_1, x_2, \dots, x_n)\, \mathrm{d}\theta$$

  Every value of $\theta$ with positive probability density contributes to the prediction of the next sample, weighted by the posterior density itself. If, after observing $x_1, x_2, \dots, x_n$, we are still quite uncertain about the value of $\theta$, this uncertainty is incorporated directly into any prediction we make. In the previous articles we saw that the frequentist approach handles the uncertainty of a given point estimate of $\theta$ by evaluating its variance: the variance of the estimator measures how the estimate might change if the observed data were resampled. The Bayesian answer to estimation uncertainty is to integrate over it, which tends to protect well against overfitting. This integral is, of course, just an application of the laws of probability, which makes the Bayesian approach simple to justify. Frequentist machine learning, in contrast, builds an estimator from fairly ad hoc decisions that summarize all the information in the dataset with a single point estimate.

- The prior has an influence by shifting probability mass density toward regions of the parameter space that are preferred a priori. In practice, the prior often expresses a preference for simpler or smoother models. Critics of the Bayesian approach identify the prior as a source of subjective human judgment that affects the predictions.
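The difference between plugging in a point estimate and integrating over $\theta$ is easiest to see on a tiny coin-flip dataset. The sketch below assumes Bernoulli data and a Beta prior (uniform by default); the function names are hypothetical. Because the Beta prior is conjugate to the Bernoulli likelihood, the predictive integral has a closed form, and a midpoint-rule evaluation of the same integral confirms it.

```python
def mle_predict(data):
    """Plug-in prediction P(x_{n+1} = 1) = theta_MLE = (number of ones) / n."""
    return sum(data) / len(data)


def bayes_predict(data, a=1.0, b=1.0):
    """Posterior predictive P(x_{n+1} = 1 | data) under a Beta(a, b) prior.

    With a Bernoulli likelihood the posterior is Beta(a + ones, b + zeros), so
    the integral of theta * p(theta | data) is simply the posterior mean.
    """
    ones = sum(data)
    return (a + ones) / (a + b + len(data))


def bayes_predict_numeric(data, steps=10_000):
    """The same integral under a uniform prior, evaluated with the midpoint rule."""
    ones = sum(data)
    zeros = len(data) - ones
    thetas = [(i + 0.5) / steps for i in range(steps)]
    # Unnormalized posterior: likelihood times a (constant) uniform prior.
    weights = [t ** ones * (1 - t) ** zeros for t in thetas]
    # Integrand p(x_{n+1} = 1 | theta) * p(theta | data), with p(x_{n+1} = 1 | theta) = theta.
    return sum(t * w for t, w in zip(thetas, weights)) / sum(weights)


data = [1, 1, 1]  # three successes, no failures
print(mle_predict(data))                      # 1.0: the MLE rules out a failure entirely
print(bayes_predict(data))                    # 0.8
print(round(bayes_predict_numeric(data), 4))  # 0.8
```

With only three samples the point estimate assigns zero probability to a failure, while the posterior predictive keeps the remaining uncertainty about $\theta$ in the prediction, which is the overfitting protection described above.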

Bayesian methods typically generalize much better when training data is limited, but they usually incur a high computational cost when the number of training examples is large.

In principle, we should make predictions using the full Bayesian posterior distribution over the parameters $\theta$, but a single point estimate is often still needed. One common reason is that, for most interesting models, most operations involving the Bayesian posterior are intractable, and a point estimate offers a tractable approximation. Rather than simply falling back to maximum likelihood estimation, we can still gain some of the benefit of the Bayesian approach by letting the prior influence the choice of point estimate. One reasonable way to do this is maximum a posteriori (MAP) estimation, which chooses the point of maximal posterior probability (or maximal probability density, in the more common case of continuous $\theta$):

$$\theta_{\text{MAP}} = \arg\max_{\theta} \log p(\theta \mid x) = \arg\max_{\theta} \left[\log p(x \mid \theta) + \log p(\theta)\right]$$
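As a small check of the MAP objective (log-likelihood plus log-prior), the sketch below compares MLE and MAP for Bernoulli data under a Beta prior. The Beta(2, 2) prior, the 7-successes/3-failures data, and all function names are illustrative assumptions. A brute-force grid search over the objective agrees with the closed-form mode of the Beta posterior.

```python
import math


def log_likelihood(theta, ones, zeros):
    """log p(x | theta) for i.i.d. Bernoulli data with the given counts."""
    return ones * math.log(theta) + zeros * math.log(1 - theta)


def log_beta_prior(theta, a, b):
    """Unnormalized log density of Beta(a, b); the constant does not move the argmax."""
    return (a - 1) * math.log(theta) + (b - 1) * math.log(1 - theta)


def map_grid(ones, zeros, a, b, grid_size=10_001):
    """argmax over a grid of log p(x | theta) + log p(theta)."""
    # Interior points only, because log(0) is undefined at theta = 0 or 1.
    thetas = [i / (grid_size - 1) for i in range(1, grid_size - 1)]
    return max(thetas, key=lambda t: log_likelihood(t, ones, zeros) + log_beta_prior(t, a, b))


def map_closed_form(ones, zeros, a, b):
    """Mode of the Beta(a + ones, b + zeros) posterior (requires both parameters > 1)."""
    return (a + ones - 1) / (a + b + ones + zeros - 2)


ones, zeros = 7, 3
a, b = 2.0, 2.0  # hypothetical prior centered on 0.5
print(ones / (ones + zeros))                       # 0.7: the MLE
print(round(map_closed_form(ones, zeros, a, b), 4))  # 0.6667: pulled toward the prior mode
print(round(map_grid(ones, zeros, a, b), 4))
```

With a uniform prior ($a = b = 1$) the log-prior term is constant and MAP reduces to MLE; a stronger prior pulls the estimate further toward 0.5.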

On the right-hand side, $\log p(x \mid \theta)$ is the standard log-likelihood term and $\log p(\theta)$ corresponds to the prior distribution; the evidence $p(x)$ is dropped because it does not depend on $\theta$. The advantage of MAP Bayesian inference is that it can use information from the prior that cannot be found in the training data. Compared with maximum likelihood estimation, this additional information helps reduce the variance of the MAP point estimate, but the advantage comes at the price of increased bias.

Many regularized estimation strategies, such as maximum likelihood learning regularized with weight decay, can be interpreted as making the MAP approximation to Bayesian inference. In this view, the extra term added to the objective function during regularization corresponds to $\log p(\theta)$. Not all regularization penalties correspond to MAP Bayesian inference, however. For example, some regularization terms may not be the logarithm of a probability distribution, and others depend on the data, which a prior probability distribution is not allowed to do. MAP Bayesian inference provides a straightforward way to design complicated yet interpretable regularization terms: for example, a more complicated penalty term can be derived by using a Gaussian mixture distribution as the prior instead of a single Gaussian distribution.
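The weight-decay interpretation can be checked on a one-dimensional linear regression. This sketch assumes the model $y = wx + \varepsilon$ with Gaussian noise of variance $\sigma^2$, a prior $w \sim \mathcal{N}(0, 1/\lambda)$, no intercept, and made-up data; the function names are hypothetical. The negative log-prior is then $\tfrac{\lambda}{2} w^2$, i.e. exactly an L2 (weight decay) penalty, and setting the derivative of the negative log-posterior to zero gives $w_{\text{MAP}} = \sum_i x_i y_i \,/\, \left(\sum_i x_i^2 + \lambda\sigma^2\right)$.

```python
def neg_log_posterior(w, xs, ys, sigma2, lam):
    """-log p(y | x, w) - log p(w), dropping constants that do not depend on w."""
    nll = sum((y - w * x) ** 2 for x, y in zip(xs, ys)) / (2 * sigma2)
    weight_decay = 0.5 * lam * w * w  # -log N(w; 0, 1/lam) up to a constant
    return nll + weight_decay


def w_mle(xs, ys):
    """Least-squares (maximum likelihood) slope without intercept."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)


def w_map(xs, ys, sigma2, lam):
    """Closed-form minimizer of neg_log_posterior (ridge / weight-decay solution)."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam * sigma2)


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]   # made-up observations for illustration
sigma2, lam = 1.0, 10.0     # hypothetical noise variance and prior precision
print(w_mle(xs, ys))                # about 1.99
print(w_map(xs, ys, sigma2, lam))   # about 1.4925, shrunk toward the prior mean 0
```

Setting `lam = 0` (an infinitely broad prior) recovers the MLE. Replacing `weight_decay` with the negative log of a Gaussian mixture density would give the more complex penalty mentioned above, although the closed-form solution would no longer apply.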

边栏推荐

- Popular explanation [redirection] and its practice

- Some experiences of K project: global template highlights

- Build go command line program tool chain

- How FEA and FEM work together

- Virtual machine virtual disk recovery case tutorial

- B. Ternary Sequence(思维+贪心)Codeforces Round #665 (Div. 2)

- What is browser fingerprint recognition?

- What is a framework?

- Tencent releases the full platform version of reasoning framework TNN, and supports mobile terminal, desktop terminal and server terminal at the same time

- Object store signature generation

猜你喜欢

B. Terry sequence (thinking + greed) codeforces round 665 (Div. 2)

Ps\ai and other design software pondering notes

One article explains Jackson configuration information in detail

Problems encountered in the work of product manager

![[download attached] installation and simple use of Chinese version of awvs](/img/3b/f26617383690c86edff465c9a1099e.png)

[download attached] installation and simple use of Chinese version of awvs

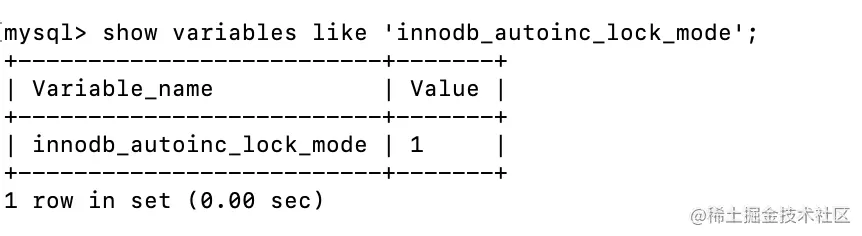

MySQL Advanced Series: Locks - Locks in InnoDB

There are potential safety hazards Land Rover recalls some hybrid vehicles

C. Three displays(动态规划)Codeforces Round #485 (Div. 2)

Wechat official account debugging and natapp environment building

Applet wxss

随机推荐

Siggraph 2022 | truly restore the hand muscles. This time, the digital human hands have bones, muscles and skin

How does easydss, an online classroom / online medical live on demand platform, separate audio and video data?

Product level design of a project in SAP mm

Inter thread communication of embedded development foundation

Cause analysis of the failure of web page live broadcast on demand RTMP streaming platform easydss streaming live broadcast

Embedded Software Engineer written interview guide arm system and architecture

Goby+AWVS 实现攻击面检测

Join in ABAP CDs

Find out the invisible assets -- use hosts collision to break through the boundary

Little red book, hovering on the edge of listing

Istio FAQ: sidecar stop sequence

ZOJ——4104 Sequence in the Pocket(思维问题)

How do HPE servers make RAID5 arrays? Teach you step by step today!

My network relationship with "apifox"

Cognition and difference of service number, subscription number, applet and enterprise number (enterprise wechat)

转置卷积详解

Some adventurer hybrid versions with potential safety hazards will be recalled

[application recommendation] the hands-on experience and model selection suggestions of apifox & apipost in the recent fire

Annual contribution! Tencent cloud middleware product upgrade conference is in hot registration!

Enterprise service growth path (7): what key factors will affect SaaS' sales performance?