
【GCN-RS】Towards Representation Alignment and Uniformity in Collaborative Filtering (KDD'22)

2022-07-25 13:09:00 【chad_ lee】

Towards Representation Alignment and Uniformity in Collaborative Filtering (KDD’22)

This paper studies representation learning in collaborative filtering (CF). Existing work mainly focuses on designing more powerful encoders to learn better representations, but pays little attention to the properties that CF representations are expected to have. Understanding those properties matters both for explaining why existing CF methods work and for designing new learning objectives. The paper uses two metrics, alignment and uniformity, to measure representation quality.

- It theoretically reveals the connection between the BPR loss and these two properties (alignment and uniformity).

- It analyzes the learning process of classic CF methods from the perspective of alignment and uniformity: better alignment or better uniformity helps improve recommendation performance.

- Based on this analysis, it proposes DirectAU, a learning objective that directly optimizes the two metrics.

Alignment and Uniformity

In CF, an encoder $f(\cdot)$ maps users and items to low-dimensional representations $f(u), f(i) \in \mathbb{R}^{d}$. For example, the encoder of a matrix factorization model is an embedding table (LightGCN additionally uses neighborhood information on top of this).

Representation quality is strongly related to two key properties: alignment and uniformity. Given the data distribution $p_{\text{data}}(\cdot)$ and the distribution of positive pairs $p_{\text{pos}}(\cdot, \cdot)$, alignment is defined as the expected distance between the normalized embeddings of positive pairs, where $\tilde{f}(\cdot)$ denotes the L2-normalized representation:

$$
l_{\text{align}} \triangleq \underset{(x, x^{+}) \sim p_{\text{pos}}}{\mathbb{E}} \left\| \tilde{f}(x) - \tilde{f}(x^{+}) \right\|^{2}
$$

Uniformity is defined as the logarithm of the average pairwise Gaussian potential:

$$
l_{\text{uniform}} \triangleq \log \underset{x, y \sim p_{\text{data}}}{\mathbb{E}} e^{-2 \left\| \tilde{f}(x) - \tilde{f}(y) \right\|^{2}}
$$

These two metrics match the goal of representation learning well: positive pairs should be close to each other, while random samples should be spread over the hypersphere as evenly as possible.
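The two metrics can be computed directly from a matrix of embeddings. The following is a minimal NumPy sketch, not the paper's code: the function names, the `eps` guard, and the random toy data are illustrative assumptions, and the uniformity expectation is estimated over distinct pairs of rows only.

```python
import numpy as np

def l2_normalize(x, eps=1e-12):
    """Project each row onto the unit hypersphere (eps avoids division by zero)."""
    return x / np.maximum(np.linalg.norm(x, axis=1, keepdims=True), eps)

def alignment(x, x_pos):
    """Mean squared distance between normalized positive pairs.

    Row k of `x` is assumed to form a positive pair with row k of `x_pos`.
    """
    x, x_pos = l2_normalize(x), l2_normalize(x_pos)
    return float(np.mean(np.sum((x - x_pos) ** 2, axis=1)))

def uniformity(x, t=2.0):
    """Log of the mean Gaussian potential exp(-t * ||a - b||^2) over distinct pairs."""
    x = l2_normalize(x)
    sq_dist = 2.0 - 2.0 * (x @ x.T)     # ||a - b||^2 = 2 - 2 a.b for unit vectors
    iu = np.triu_indices(len(x), k=1)   # each unordered pair once, no self-pairs
    return float(np.log(np.mean(np.exp(-t * sq_dist[iu]))))

rng = np.random.default_rng(0)
emb = rng.normal(size=(512, 64))        # toy embeddings, assumption for the demo
other = rng.normal(size=(512, 64))

print(alignment(emb, emb))     # identical pairs give 0.0
print(alignment(emb, other))   # unrelated random directions: close to 2
print(uniformity(emb))         # negative; lower means more evenly spread
```

Lower is better for both values: alignment is zero when every positive pair coincides, and uniformity approaches zero from below as the embeddings collapse onto a single point.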

The authors first give a theoretical analysis. Perfect alignment means that the encoder $f$ maps every positive pair to the same representation: $\tilde{f}(u) = \tilde{f}(i)$ a.s. over $(u, i) \sim p_{\text{pos}}$. Perfect uniformity means that the representations of all samples produced by $f$ are uniformly distributed on the hypersphere.

Theoretical proof

The authors simplify the BPR loss and prove that, if a perfectly aligned and perfectly uniform encoder exists, it attains the lower bound of the BPR loss.

In the paper's derivation, equality in Equation (4) holds if and only if $f$ is perfectly aligned, and equality in Equation (5) holds if and only if $f$ is perfectly uniform. Therefore $L_{BPR}$ is greater than or equal to a constant independent of $f$, with equality if and only if $f$ is perfectly aligned and uniform.
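For reference, the BPR loss discussed above can be sketched as follows. This is a minimal NumPy illustration that assumes dot-product scores and one sampled negative item per positive pair; the function name `bpr_loss` and the toy vectors are illustrative and do not come from the paper.

```python
import numpy as np

def bpr_loss(user, pos_item, neg_item):
    """Mean of -log(sigmoid(s_ui - s_uj)) over (user, positive, negative) triples.

    Scores are dot products; row k of each array belongs to the same triple.
    """
    pos_score = np.sum(user * pos_item, axis=1)
    neg_score = np.sum(user * neg_item, axis=1)
    # -log(sigmoid(z)) = log(1 + exp(-z)), computed stably with logaddexp
    return float(np.mean(np.logaddexp(0.0, -(pos_score - neg_score))))

# Toy unit vectors: the positive item coincides with the user,
# the negative item points in the opposite direction.
u = np.array([[1.0, 0.0]])
p = np.array([[1.0, 0.0]])
n = np.array([[-1.0, 0.0]])

print(bpr_loss(u, p, n))  # best case for unit vectors: log(1 + exp(-2))
print(bpr_loss(u, p, p))  # positive and negative indistinguishable: log(2)
```

Intuitively, with L2-normalized embeddings each score lies in [-1, 1], so the loss can only decrease by pulling positive pairs together and pushing sampled negatives away, which is where the link to alignment and uniformity comes from.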

Experimental analysis

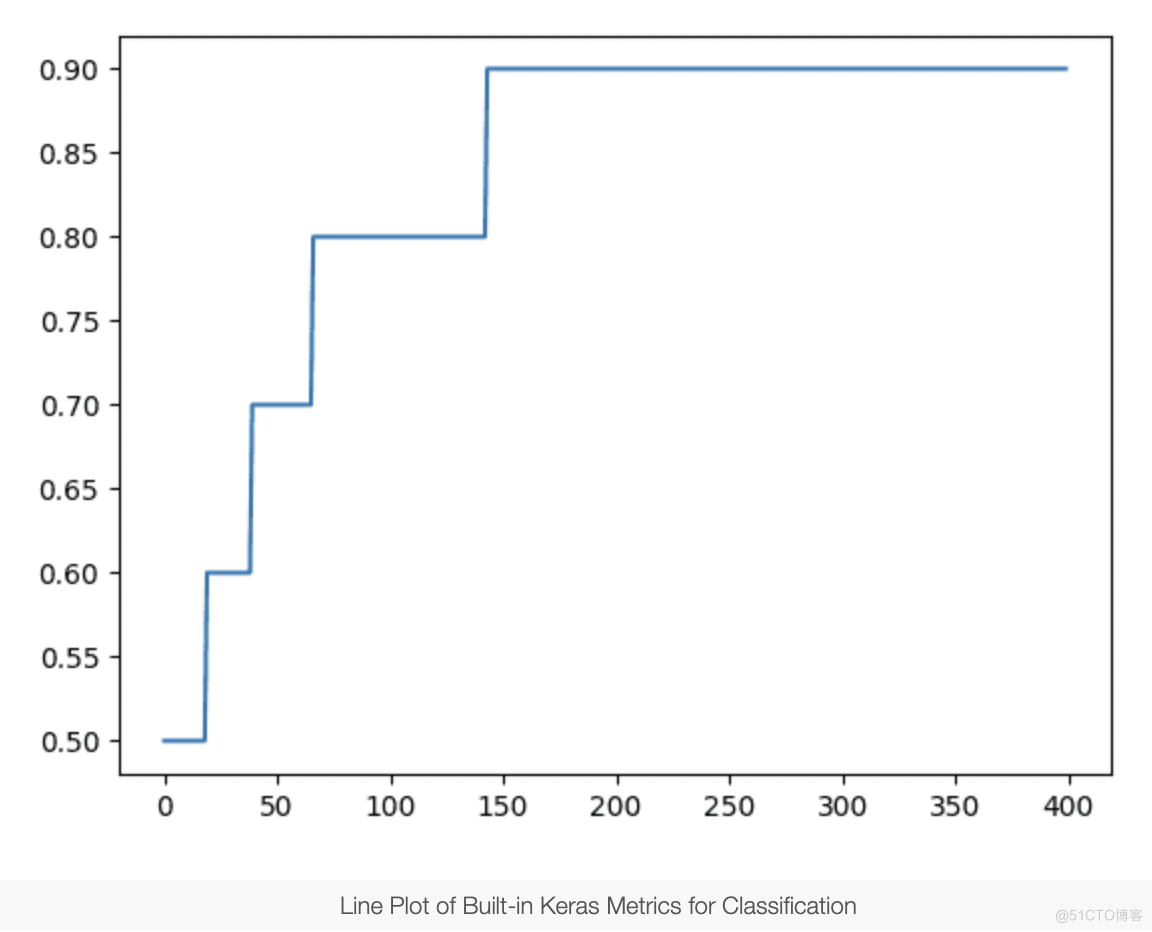

To verify that optimizing BPR and other losses also optimizes alignment and uniformity, the authors run experiments with several methods. As training progresses, alignment and uniformity improve accordingly. This suggests that the quality of user and item representations in CF depends on these two properties: better alignment or better uniformity helps improve recommendation performance, and optimizing both at the same time may be beneficial.

Right after random initialization, uniformity is very good and alignment is very poor. Early training mainly optimizes alignment, while the performance gains in the later stage mainly come from uniformity. This is intuitive: pulling positive pairs together is easy, but doing so while keeping the samples uniformly distributed takes effort.

DirectAU

Since the analysis shows that alignment and uniformity are important for the quality of user and item representations, the authors design a new learning objective that directly optimizes these two properties:

$$
\begin{aligned}
l_{\text{align}} &= \underset{(u, i) \sim p_{\text{pos}}}{\mathbb{E}} \left\| \tilde{f}(u) - \tilde{f}(i) \right\|^{2} \\
l_{\text{uniform}} &= \frac{1}{2} \log \underset{u, u' \sim p_{\text{user}}}{\mathbb{E}} e^{-2 \left\| \tilde{f}(u) - \tilde{f}(u') \right\|^{2}} + \frac{1}{2} \log \underset{i, i' \sim p_{\text{item}}}{\mathbb{E}} e^{-2 \left\| \tilde{f}(i) - \tilde{f}(i') \right\|^{2}} \\
\mathcal{L}_{\text{DirectAU}} &= l_{\text{align}} + \gamma \, l_{\text{uniform}}
\end{aligned}
$$

One advantage is that no negative sampling is needed: $l_{\text{align}}$ is optimized using only positive pairs, and $l_{\text{uniform}}$ is optimized over the samples within the batch, computed between users and users and between items and items.
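The objective above can be evaluated on a single batch of positive (user, item) pairs as in the sketch below. It is a NumPy illustration of the formula only, under stated assumptions: the helper names, the default `gamma=1.0`, and the toy batch are hypothetical, and an actual training loop would need an autodiff framework to backpropagate through the encoder.

```python
import numpy as np

def _normalize(x, eps=1e-12):
    """L2-normalize each row."""
    return x / np.maximum(np.linalg.norm(x, axis=1, keepdims=True), eps)

def _uniformity(x_norm, t=2.0):
    """In-batch uniformity over distinct pairs of already normalized rows."""
    sq_dist = 2.0 - 2.0 * (x_norm @ x_norm.T)
    iu = np.triu_indices(len(x_norm), k=1)
    return np.log(np.mean(np.exp(-t * sq_dist[iu])))

def directau_loss(user_emb, item_emb, gamma=1.0):
    """l_align + gamma * l_uniform for a batch of positive (user, item) pairs.

    Row k of `user_emb` and row k of `item_emb` form one observed interaction.
    No negative items are sampled: uniformity is computed user-user and
    item-item inside the batch. `gamma` is a tunable weight (value assumed here).
    """
    u, i = _normalize(user_emb), _normalize(item_emb)
    align = np.mean(np.sum((u - i) ** 2, axis=1))
    uniform = 0.5 * (_uniformity(u) + _uniformity(i))
    return float(align + gamma * uniform)

rng = np.random.default_rng(0)
batch_users = rng.normal(size=(256, 64))   # toy batch, assumption for the demo
batch_items = rng.normal(size=(256, 64))
print(directau_loss(batch_users, batch_items, gamma=1.0))
```

Setting `gamma=0.0` reduces the value to the alignment term alone, which makes it easy to inspect how much each part contributes.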

Experimental results

With only MF as the encoder, DirectAU already achieves good results; with LightGCN as the encoder, the improvement is also relatively large.

This loss keeps the uniformity of the embeddings close to its randomly initialized state, that is, a uniform distribution.
