
Sampling and decoding strategies for seq2seq-based text generation

2022-06-25 08:29:00 【Happy little yard farmer】


Decoding and sampling strategies for seq2seq text generation

Seq2Seq-based text generation models can use different decoding strategies. Decoding strategies in text generation fall into two categories:

- Argmax decoding: mainly beam search, class-factored softmax, etc.

- Stochastic decoding: mainly temperature sampling, top-k sampling, etc.

In a Seq2Seq model, the RNN encoder encodes the input sentence into a fixed-size hidden state $h_c$. Given $h_c$ and the previously generated words 1 to $t-1$, $x_{1:t-1}$, the RNN decoder produces the hidden state $h_t$ for the $t$-th word, and a softmax function then turns it into the vocabulary probability distribution $P(x \mid x_{1:t-1})$ for the $t$-th word $x_t$.
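One decoder step can be sketched in pure Python as below. This is a minimal illustration under stated assumptions, not the author's model: the vanilla RNN cell, feeding $h_c$ into every step, initializing the decoder with $h_0 = h_c$, the function name `decoder_step`, and all weight values are hypothetical, chosen only to show how $h_t$ becomes $P(x \mid x_{1:t-1})$.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(u - m) for u in logits]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(W, v):
    """Multiply a matrix W (list of rows) by a vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def decoder_step(h_prev, x_prev, h_c, W_h, W_x, W_c, W_out):
    """One hypothetical vanilla-RNN decoder step.

    h_t = tanh(W_h h_{t-1} + W_x x_{t-1} + W_c h_c)
    P(x | x_{1:t-1}) = softmax(W_out h_t)
    """
    pre = [a + b + c for a, b, c in
           zip(matvec(W_h, h_prev), matvec(W_x, x_prev), matvec(W_c, h_c))]
    h_t = [math.tanh(z) for z in pre]
    probs = softmax(matvec(W_out, h_t))  # one probability per vocabulary word
    return h_t, probs

# Toy sizes: hidden size 2, embedding size 2, vocabulary of 3 words (all values made up).
h_c = [0.5, -0.2]        # encoder summary of the input sentence
x_prev = [1.0, 0.0]      # embedding of the previously generated word
W_h = [[0.1, 0.2], [0.0, 0.3]]
W_x = [[0.4, -0.1], [0.2, 0.1]]
W_c = [[0.05, 0.0], [0.0, 0.05]]
W_out = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]

h_t, probs = decoder_step(h_c, x_prev, h_c, W_h, W_x, W_c, W_out)  # h_0 = h_c
print(len(probs), round(sum(probs), 6))  # 3 1.0
```

Real decoders usually use LSTM or GRU cells and learned embeddings; only the last line (softmax over vocabulary logits) matters for the decoding strategies below.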

The main difference between the two types of decoding strategy is how they choose a word $x_t$ from the vocabulary probability distribution $P(x \mid x_{1:t-1})$:

- Argmax decoding picks the word with the highest probability in the vocabulary, i.e. $x_t = \arg\max_x P(x \mid x_{1:t-1})$;

- Stochastic decoding randomly samples a word $x_t$ according to the probability distribution, i.e. $x_t \sim P(x \mid x_{1:t-1})$.
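The two selection rules can be contrasted on a single step with a short sketch. The vocabulary and the distribution `probs` are hypothetical; the sampler uses Python's standard `random.choices`.

```python
import random

def argmax_decode(probs):
    """Argmax decoding: x_t = argmax_x P(x | x_{1:t-1})."""
    return max(range(len(probs)), key=lambda i: probs[i])

def stochastic_decode(probs, rng=random):
    """Stochastic decoding: x_t ~ P(x | x_{1:t-1})."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

vocab = ["the", "a", "cat", "dog"]
probs = [0.5, 0.2, 0.2, 0.1]          # hypothetical P(x | x_{1:t-1})

print(vocab[argmax_decode(probs)])    # the (deterministic)
rng = random.Random(0)                # seeded for reproducibility
draws = [vocab[stochastic_decode(probs, rng)] for _ in range(10)]
print(draws)                          # varies with the seed; "the" about half the time
```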

When doing sequence prediction, the model has to sample a word at every time step from its softmax output probabilities; an appropriate sampling method can produce noticeably better results.

1. Greedy sampling

1.1 Greedy Search

Core idea: at each step, take the most probable word as the output.

Method: compute the probability of every word in the vocab for the next position, take the argmax as the index of the word to generate, then feed that word back in to generate the following one.
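A minimal sketch of the greedy loop follows. `TOY_MODEL` and `next_word_probs` are hypothetical stand-ins for a trained decoder: a tiny bigram table whose next-word probabilities depend only on the previous word.

```python
# Hypothetical toy "model": next-word probabilities that depend only on the previous word.
# A real seq2seq decoder would condition on the whole prefix x_{1:t-1} and on h_c.
TOY_MODEL = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.4, "</s>": 0.1},
    "a":   {"dog": 0.9, "</s>": 0.1},
    "cat": {"sat": 0.7, "</s>": 0.3},
    "dog": {"ran": 0.6, "</s>": 0.4},
    "sat": {"</s>": 1.0},
    "ran": {"</s>": 1.0},
}

def next_word_probs(prefix):
    """Return P(x | x_{1:t-1}) as a {word: probability} dict."""
    return TOY_MODEL[prefix[-1]]

def greedy_search(max_len=10, eos="</s>"):
    prefix = ["<s>"]
    for _ in range(max_len):
        probs = next_word_probs(prefix)
        word = max(probs, key=probs.get)  # argmax over the vocabulary
        prefix.append(word)               # feed it back to generate the next word
        if word == eos:
            break
    return prefix[1:]

print(greedy_search())  # ['the', 'cat', 'sat', '</s>']
```

The greedy result has probability 0.6 × 0.5 × 0.7 = 0.21, yet "a dog ran" has 0.4 × 0.9 × 0.6 = 0.216, so always taking the locally best word can miss a more probable sentence.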

1.2 Beam Search

Core idea: beam search builds on breadth-first search but prunes the search space, keeping only a limited number of candidates, which reduces memory consumption.

Method: at each decoding step we keep the top K candidate sequences. At the next step, we extend each of these K candidates by one decoding step and take its top K continuations, then select the top K sequences out of the K^2 candidates. This repeats until decoding ends. Beam search is still essentially a greedy (approximate) decoding method, so it is not guaranteed to find the optimal decoding result.
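The procedure above can be sketched as follows, scoring sequences by summed log-probabilities. The toy bigram table `TOY_MODEL` is again a hypothetical stand-in for a trained decoder, and the handling of finished hypotheses (carried over unchanged, no length normalization) is one simple choice among several.

```python
import math

# Hypothetical toy "model": next-word probabilities conditioned on the previous word only.
TOY_MODEL = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.4, "</s>": 0.1},
    "a":   {"dog": 0.9, "</s>": 0.1},
    "cat": {"sat": 0.7, "</s>": 0.3},
    "dog": {"ran": 0.6, "</s>": 0.4},
    "sat": {"</s>": 1.0},
    "ran": {"</s>": 1.0},
}

def next_word_probs(prefix):
    return TOY_MODEL[prefix[-1]]

def beam_search(beam_size=2, max_len=10, eos="</s>"):
    beams = [(["<s>"], 0.0)]                      # (prefix, log-probability)
    for _ in range(max_len):
        candidates = []
        for prefix, score in beams:
            if prefix[-1] == eos:                 # finished hypothesis: carry it over unchanged
                candidates.append((prefix, score))
                continue
            probs = next_word_probs(prefix)
            # top-K continuations of this beam -> at most K^2 candidates overall
            best = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:beam_size]
            for word, p in best:
                candidates.append((prefix + [word], score + math.log(p)))
        beams = sorted(candidates, key=lambda c: c[1], reverse=True)[:beam_size]
        if all(prefix[-1] == eos for prefix, _ in beams):
            break
    best_prefix, best_score = beams[0]
    return best_prefix[1:], math.exp(best_score)

print(beam_search(beam_size=1))  # (['the', 'cat', 'sat', '</s>'], ~0.21)
print(beam_search(beam_size=2))  # (['a', 'dog', 'ran', '</s>'], ~0.216)
```

With K = 1 this reduces to greedy search; with K = 2 the partial sequence "a dog" survives step 2 and the search ends with the more probable "a dog ran". Working in log space avoids underflow when multiplying many small probabilities.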

The problems with greedy search and beam search are:

- They tend to produce repetitive, predictable words;

- The generated sentences/language have poor coherence.

2. Random sampling

Core idea: sample words at random according to their probability distribution.

2.1 Temperature Sampling:

Method: introduce a temperature into the softmax to reshape the vocabulary probability distribution, making it more biased toward high-probability words:

$$P(x = l \mid x_{1:t-1}) = \frac{\exp(u_l / \text{temperature})}{\sum_{l'} \exp(u_{l'} / \text{temperature})}, \quad \text{temperature} \in [0, 1)$$

where $u_l$ is the logit (pre-softmax score) of vocabulary word $l$.

Another way to express it: let $p(x)$ be the original distribution output by the model (its softmax output). Given a temperature value, the original distribution is reweighted as follows to obtain a new probability distribution:

$$\pi(x_k) = \frac{e^{\log p(x_k) / \text{temperature}}}{\sum_{i=1}^{n} e^{\log p(x_i) / \text{temperature}}}, \quad \text{temperature} \in [0, 1)$$

As $\text{temperature} \to 0$, this becomes greedy search; as $\text{temperature} \to \infty$, it becomes uniform sampling. See the paper for details: The Curious Case of Neural Text Degeneration.
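Both formulations can be sketched in a few lines. The helper names are hypothetical; `reweight` assumes every input probability is strictly positive (true for softmax outputs) and the temperature is strictly positive, since $\text{temperature} = 0$ would divide by zero and is handled by greedy search instead.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """P_l = exp(u_l / T) / sum_l' exp(u_l' / T); requires temperature > 0."""
    scaled = [u / temperature for u in logits]
    m = max(scaled)                            # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    z = sum(exps)
    return [e / z for e in exps]

def reweight(probs, temperature):
    """pi(x_k) = exp(log p(x_k) / T) / sum_i exp(log p(x_i) / T); assumes every p > 0."""
    return softmax_with_temperature([math.log(p) for p in probs], temperature)

def temperature_sample(probs, temperature, rng=random):
    """Sample a word index from the temperature-reweighted distribution."""
    new_probs = reweight(probs, temperature)
    return rng.choices(range(len(probs)), weights=new_probs, k=1)[0]

probs = [0.5, 0.3, 0.2]
print([round(q, 3) for q in reweight(probs, 1.0)])    # [0.5, 0.3, 0.2]: unchanged
print([round(q, 3) for q in reweight(probs, 0.1)])    # almost all mass on index 0
print([round(q, 3) for q in reweight(probs, 100.0)])  # close to uniform
```

The two formulas agree: if $p = \mathrm{softmax}(u)$ then $\log p(x_k) = u_k - \log Z$, and the constant $\log Z / \text{temperature}$ cancels between numerator and denominator.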

2.2 Top-k Sampling:

Top-k sampling can alleviate the problem of generating rare words. For example, we can sample only among the 50 most probable words: keep only the top-k words by probability, then sample among these words according to their probabilities.

Core idea: sort the probabilities in descending order, then set every probability after the k-th position to 0.

Method: during decoding, select the k tokens with the highest probability from $P(x \mid x_{1:t-1})$ and sum their probabilities to get $p' = \sum_{x \in V^{(k)}} P(x \mid x_{1:t-1})$, where $V^{(k)}$ is the set of top-k tokens. Then rescale the distribution to $P'(x \mid x_{1:t-1}) = P(x \mid x_{1:t-1}) / p'$ for $x \in V^{(k)}$ (and 0 otherwise). Finally, sample one token from $P'(x \mid x_{1:t-1})$ as the output token. See the paper for details: Hierarchical Neural Story Generation.
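The truncate-renormalize-sample steps above map directly onto a short sketch. The function names and the example distribution are hypothetical.

```python
import random

def top_k_filter(probs, k):
    """Keep the k most probable tokens V^(k), zero the rest, renormalize by p'."""
    top = set(sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k])
    p_prime = sum(probs[i] for i in top)
    return [probs[i] / p_prime if i in top else 0.0 for i in range(len(probs))]

def top_k_sample(probs, k, rng=random):
    """Sample one token index from P'(x | x_{1:t-1})."""
    filtered = top_k_filter(probs, k)
    return rng.choices(range(len(probs)), weights=filtered, k=1)[0]

probs = [0.1, 0.4, 0.3, 0.2]                          # hypothetical P(x | x_{1:t-1})
print([round(q, 3) for q in top_k_filter(probs, 2)])  # [0.0, 0.571, 0.429, 0.0]
```

With k = 1 this degenerates to greedy decoding; with k equal to the vocabulary size it is plain sampling from the full distribution.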

The problem with top-k sampling, however, is that the constant k is fixed in advance. For sentences of different lengths and sizes and with different contexts, we may sometimes need more tokens than k.

2.3 Top-p Sampling (Nucleus Sampling):

Core idea: accumulate the probabilities in descending order; once the cumulative value reaches the preset threshold p, set the probabilities of all subsequent words to 0.

Method: top-p sampling was proposed to address this problem of top-k sampling. Building on top-k sampling, it fixes $p' = \sum P(x \mid x_{1:t-1})$ to a predefined constant $p' \in (0, 1)$, so the set of selected tokens varies with the distribution given the sentence history. See the paper for details: The Curious Case of Neural Text Degeneration.

In essence, both top-p and top-k sampling sample tokens from a truncated vocabulary distribution; they differ only in how the confidence interval (the truncated set) is chosen.
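A minimal sketch of nucleus filtering is below, with the threshold `p` playing the role of the fixed $p'$. Function names and example distributions are hypothetical; the rule keeps the smallest set of most-probable tokens whose cumulative probability reaches `p`.

```python
import random

def top_p_filter(probs, p):
    """Keep the smallest set of most-probable tokens whose cumulative probability reaches p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = set(), 0.0
    for i in order:
        kept.add(i)
        cumulative += probs[i]
        if cumulative >= p:  # threshold reached: drop every later token
            break
    return [probs[i] / cumulative if i in kept else 0.0 for i in range(len(probs))]

def top_p_sample(probs, p, rng=random):
    """Sample one token index from the renormalized nucleus."""
    filtered = top_p_filter(probs, p)
    return rng.choices(range(len(probs)), weights=filtered, k=1)[0]

peaked = [0.95, 0.03, 0.02]
flat = [0.25, 0.25, 0.25, 0.25]
print(sum(q > 0 for q in top_p_filter(peaked, 0.9)))  # 1 token kept
print(sum(q > 0 for q in top_p_filter(flat, 0.9)))    # 4 tokens kept
```

Top-k with k = 2 would keep two tokens in both cases; the nucleus shrinks to one token for the peaked distribution and grows to four for the flat one, which is the adaptivity that motivates top-p sampling.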

Problems with random sampling:

- The generated sentences tend to be incoherent, with contradictory context.

- It easily produces odd sentences containing rare words.


边栏推荐

- Rqt command

- Thread + thread problem record

- STM32CubeMX 学习(5)输入捕获实验

- What are the indicators of entropy weight TOPSIS method?

- Jdbc-dao layer implementation

- 使用pytorch搭建MobileNetV2并基于迁移学习训练

- To achieve good software testing results, it is a prerequisite to build a good testing environment

- GPU calculation

- Super simple case: how to do hierarchical chi square test?

- Go language learning tutorial (13)

猜你喜欢

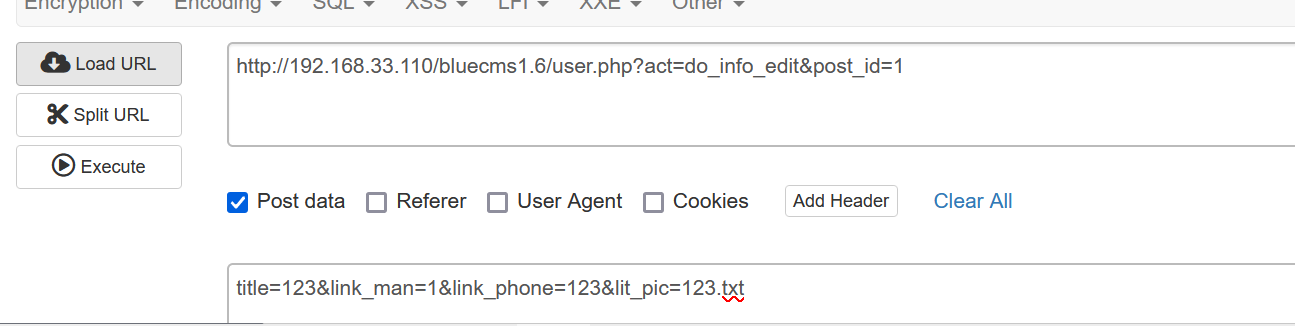

Bluecmsv1.6- code audit

How to calculate the D value and W value of statistics in normality test?

What about the exponential smoothing index?

How to analyze the coupling coordination index?

How to calculate the distance between texts: WMD

Prepare these before the interview. The offer is soft. The general will not fight unprepared battles

How to interpret the information weight index?

Self made ramp, but it really smells good

![[QT] QT 5 procedure: print documents](/img/76/2fce505c43f75360a8ff477aa2d31d.png)

[QT] QT 5 procedure: print documents

What is SKU and SPU? What is the difference between SKU and SPU

随机推荐

4个不可不知的采用“安全左移”的理由

Bat start NET Core

4 reasons for adopting "safe left shift"

Daily question brushing record (III)

Is there any risk in making new bonds

What is the role of software validation testing? What is the price of the confirmation test report?

如何成为一名软件测试高手? 月薪3K到17K,我做了什么?

How to calculate the correlation coefficient and correlation degree in grey correlation analysis?

软件确认测试有什么作用?确认测试报告的价格是多少?

Network model -- OSI model and tcp/ip model

Bluecmsv1.6- code audit

QSS 不同风格的按钮

LeetCode_ Hash table_ Medium_ 454. adding four numbers II

Iframe is simple to use, iframe is obtained, iframe element value is obtained, and iframe information of parent page is obtained

Measure the current temperature

物联网毕设(智能灌溉系统 -- Android端)

【操作教程】TSINGSEE青犀视频平台如何将旧数据库导入到新数据库?

Wechat applet introduction record

linux中的mysql有10061错误怎么解决

417 sequence traversal of binary tree 1 (102. sequence traversal of binary tree, 107. level traversal of binary tree II, 199. right view of binary tree, 637. layer average of binary tree)