
An Illustrated Guide to OneFlow's Learning Rate Scheduling Strategies

2022-06-23 13:34:00 【InfoQ】

1. Background

- https://huggingface.co/spaces/basicv8vc/learning-rate-scheduler-online

- https://share.streamlit.io/basicv8vc/scheduler-online

2. Learning Rate Scheduling Strategies

Base class: LRScheduler
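The schedulers below appear to share one design, judging by their common `last_step=-1` argument. The optimizer's initial learning rate is kept as `base_lr`, and a step counter starts at `last_step`. Each call to `step()` then recomputes the learning rate from `base_lr` and the new step count. The pure-Python sketch below illustrates that pattern without depending on OneFlow. `SimpleLRScheduler` and `HalvingScheduler` are hypothetical names, not OneFlow classes. The initial `step()` call in the constructor is an assumption borrowed from the PyTorch convention that `last_step=-1` means "not started yet".

```python
class SimpleLRScheduler:
    """Hypothetical, minimal analogue of a step-based LR scheduler (not OneFlow's code)."""

    def __init__(self, base_lr: float, last_step: int = -1):
        self.base_lr = base_lr
        self.last_step = last_step  # -1 means no step has been taken yet
        self.lr = base_lr
        self.step()  # assumption: construction performs the first step, moving to step 0

    def get_lr(self, base_lr: float, step: int) -> float:
        """Closed-form learning rate at `step`; subclasses override this."""
        return base_lr

    def step(self) -> float:
        self.last_step += 1
        self.lr = self.get_lr(self.base_lr, self.last_step)
        return self.lr


class HalvingScheduler(SimpleLRScheduler):
    """Hypothetical example subclass: halve the learning rate at every step."""

    def get_lr(self, base_lr: float, step: int) -> float:
        return base_lr * 0.5 ** step


sched = HalvingScheduler(base_lr=0.1)
print(sched.last_step, sched.lr)  # 0 0.1
sched.step()
print(sched.last_step, sched.lr)  # 1 0.05
```

Because every schedule is a function of `(base_lr, step)`, the sketches that follow are written as plain functions of those two values.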

ConstantLR

```python
oneflow.optim.lr_scheduler.ConstantLR(
    optimizer: Optimizer,
    factor: float = 1.0 / 3,
    total_iters: int = 5,
    last_step: int = -1,
    verbose: bool = False,
)
```
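ConstantLR multiplies the learning rate by `factor` for the first `total_iters` steps and then restores the original value. The helper `constant_lr` below is a hypothetical pure-Python model of that rule, not OneFlow code. It is written as a closed-form function of the step:

```python
def constant_lr(base_lr: float, step: int, factor: float = 1.0 / 3, total_iters: int = 5) -> float:
    """Learning rate of ConstantLR at `step` (closed-form sketch)."""
    # Scale by `factor` while step < total_iters, then fall back to base_lr
    return base_lr * factor if step < total_iters else base_lr


print([constant_lr(0.05, s, factor=0.5, total_iters=4) for s in range(6)])
# [0.025, 0.025, 0.025, 0.025, 0.05, 0.05]
```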

LinearLR

```python
oneflow.optim.lr_scheduler.LinearLR(
    optimizer: Optimizer,
    start_factor: float = 1.0 / 3,
    end_factor: float = 1.0,
    total_iters: int = 5,
    last_step: int = -1,
    verbose: bool = False,
)
```
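LinearLR moves the multiplier linearly from `start_factor` to `end_factor` over `total_iters` steps, which makes it a common choice for warmup. Below is a hypothetical closed-form model, `linear_lr`. It assumes the multiplier stays at `end_factor` once `total_iters` is reached:

```python
def linear_lr(base_lr, step, start_factor=1.0 / 3, end_factor=1.0, total_iters=5):
    """Learning rate of LinearLR at `step` (closed-form sketch)."""
    if step >= total_iters:
        return base_lr * end_factor  # interpolation finished
    # Multiplier moves linearly from start_factor toward end_factor
    multiplier = start_factor + (end_factor - start_factor) * step / total_iters
    return base_lr * multiplier


print([round(linear_lr(0.05, s, start_factor=0.5, total_iters=4), 5) for s in range(6)])
# [0.025, 0.03125, 0.0375, 0.04375, 0.05, 0.05]
```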

ExponentialLR

```python
oneflow.optim.lr_scheduler.ExponentialLR(
    optimizer: Optimizer,
    gamma: float,
    last_step: int = -1,
    verbose: bool = False,
)
```
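ExponentialLR multiplies the learning rate by `gamma` at every step, so after t steps `lr = base_lr * gamma ** t`. A minimal sketch follows; `exponential_lr` is a hypothetical helper:

```python
def exponential_lr(base_lr, step, gamma):
    """Learning rate of ExponentialLR at `step`: one multiplication by gamma per step."""
    return base_lr * gamma ** step


print([round(exponential_lr(0.1, s, gamma=0.9), 4) for s in range(4)])
# [0.1, 0.09, 0.081, 0.0729]
```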

StepLR

```python
oneflow.optim.lr_scheduler.StepLR(
    optimizer: Optimizer,
    step_size: int,
    gamma: float = 0.1,
    last_step: int = -1,
    verbose: bool = False,
)
```
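StepLR decays the learning rate by `gamma` once every `step_size` steps, giving `lr = base_lr * gamma ** (step // step_size)`. The hypothetical helper `step_lr` below encodes that formula:

```python
def step_lr(base_lr, step, step_size, gamma=0.1):
    """Learning rate of StepLR at `step`."""
    # step // step_size counts how many full intervals have elapsed
    return base_lr * gamma ** (step // step_size)


print([round(step_lr(0.05, s, step_size=3), 6) for s in range(7)])
# [0.05, 0.05, 0.05, 0.005, 0.005, 0.005, 0.0005]
```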

MultiStepLR

```python
oneflow.optim.lr_scheduler.MultiStepLR(
    optimizer: Optimizer,
    milestones: list,
    gamma: float = 0.1,
    last_step: int = -1,
    verbose: bool = False,
)
```
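MultiStepLR generalizes StepLR to irregular intervals: the learning rate is multiplied by `gamma` each time the step count reaches one of the `milestones`. The hypothetical helper `multistep_lr` counts reached milestones with `bisect_right`. It assumes a milestone takes effect at the step equal to it:

```python
from bisect import bisect_right


def multistep_lr(base_lr, step, milestones, gamma=0.1):
    """Learning rate of MultiStepLR at `step`."""
    # Number of milestones already reached (a milestone counts once step >= milestone)
    reached = bisect_right(sorted(milestones), step)
    return base_lr * gamma ** reached


print([round(multistep_lr(0.05, s, milestones=[2, 5]), 6) for s in range(7)])
# [0.05, 0.05, 0.005, 0.005, 0.005, 0.0005, 0.0005]
```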

PolynomialLR

```python
oneflow.optim.lr_scheduler.PolynomialLR(
    optimizer,
    steps: int,
    end_learning_rate: float = 0.0001,
    power: float = 1.0,
    cycle: bool = False,
    last_step: int = -1,
    verbose: bool = False,
)
```
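PolynomialLR decays the learning rate from `base_lr` to `end_learning_rate` along `(1 - step / steps) ** power`, which is linear when `power=1`. The sketch below follows the polynomial-decay formula popularized by TensorFlow's `polynomial_decay`. We assume OneFlow's PolynomialLR matches it, including the handling of step 0 when `cycle=True`. `polynomial_lr` is a hypothetical helper:

```python
import math


def polynomial_lr(base_lr, step, steps, end_learning_rate=0.0001, power=1.0, cycle=False):
    """Learning rate of a polynomial decay schedule at `step` (closed-form sketch)."""
    if cycle:
        # Stretch the horizon to the next multiple of `steps` (at least one period),
        # so the lr jumps back up and decays again after each period
        decay_steps = steps * max(1, math.ceil(step / steps))
    else:
        # Without cycling, hold the lr at end_learning_rate once `steps` is reached
        decay_steps = steps
        step = min(step, steps)
    factor = (1 - step / decay_steps) ** power
    return (base_lr - end_learning_rate) * factor + end_learning_rate


for s in [0, 2, 4, 6]:
    print(s, round(polynomial_lr(0.1, s, steps=4), 6), round(polynomial_lr(0.1, s, steps=4, cycle=True), 6))
```

The two columns agree until step 4. After that, the cyclic variant restarts from a stretched horizon instead of staying at `end_learning_rate`.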

CosineDecayLR

```python
oneflow.optim.lr_scheduler.CosineDecayLR(
    optimizer: Optimizer,
    decay_steps: int,
    alpha: float = 0.0,
    last_step: int = -1,
    verbose: bool = False,
)
```
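CosineDecayLR follows half a cosine curve from `base_lr` down to `alpha * base_lr` over `decay_steps` steps and then stays there. The closed-form sketch below assumes the formula `decayed = (1 - alpha) * 0.5 * (1 + cos(pi * step / decay_steps)) + alpha`, with `step` clamped to `decay_steps`. `cosine_decay_lr` is a hypothetical helper:

```python
import math


def cosine_decay_lr(base_lr, step, decay_steps, alpha=0.0):
    """Learning rate of CosineDecayLR at `step` (closed-form sketch)."""
    step = min(step, decay_steps)  # clamp: hold alpha * base_lr after decay_steps
    cosine = 0.5 * (1 + math.cos(math.pi * step / decay_steps))
    return base_lr * ((1 - alpha) * cosine + alpha)


print([round(cosine_decay_lr(0.1, s, decay_steps=10, alpha=0.1), 6) for s in (0, 5, 10, 20)])
# [0.1, 0.055, 0.01, 0.01]
```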

CosineAnnealingLR

```python
oneflow.optim.lr_scheduler.CosineAnnealingLR(
    optimizer: Optimizer,
    T_max: int,
    eta_min: float = 0.0,
    last_step: int = -1,
    verbose: bool = False,
)
```
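CosineAnnealingLR anneals from `base_lr` to `eta_min` over `T_max` steps using `eta_min + (base_lr - eta_min) * (1 + cos(pi * step / T_max)) / 2`. We assume OneFlow evaluates this closed form without clamping the step, as the formula in PyTorch's documentation does. Under that assumption, the learning rate climbs back toward `base_lr` after `T_max`, which is the main difference from CosineDecayLR. `cosine_annealing_lr` is a hypothetical helper:

```python
import math


def cosine_annealing_lr(base_lr, step, T_max, eta_min=0.0):
    """Learning rate of CosineAnnealingLR at `step` (closed form, no clamping)."""
    cosine = 0.5 * (1 + math.cos(math.pi * step / T_max))
    return eta_min + (base_lr - eta_min) * cosine


print([round(cosine_annealing_lr(0.1, s, T_max=10, eta_min=0.001), 6) for s in (0, 5, 10, 15, 20)])
# [0.1, 0.0505, 0.001, 0.0505, 0.1]
```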

CosineAnnealingWarmRestarts

```python
oneflow.optim.lr_scheduler.CosineAnnealingWarmRestarts(
    optimizer: Optimizer,
    T_0: int,
    T_mult: int = 1,
    eta_min: float = 0.0,
    decay_rate: float = 1.0,
    restart_limit: int = 0,
    last_step: int = -1,
    verbose: bool = False,
)
```
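CosineAnnealingWarmRestarts repeats the cosine curve. The first cycle lasts `T_0` steps and each later cycle is `T_mult` times longer. At every restart the learning rate jumps back to its peak. The hypothetical helper below models this under two assumptions. First, `decay_rate` scales the peak once per restart. Second, `restart_limit` is left out entirely because its exact semantics are not covered here.

```python
import math


def cosine_warm_restarts_lr(base_lr, step, T_0, T_mult=1, eta_min=0.0, decay_rate=1.0):
    """Learning rate of a cosine schedule with warm restarts at `step` (sketch)."""
    if T_0 < 1 or T_mult < 1:
        raise ValueError("T_0 and T_mult must be >= 1")
    # Walk through the cycles: cycle i lasts T_0 * T_mult**i steps
    cycle, cycle_len, pos = 0, T_0, step
    while pos >= cycle_len:
        pos -= cycle_len
        cycle += 1
        cycle_len *= T_mult
    # Assumption: the peak of each cycle is scaled by decay_rate once per restart
    peak = base_lr * decay_rate ** cycle
    cosine = 0.5 * (1 + math.cos(math.pi * pos / cycle_len))
    return eta_min + (peak - eta_min) * cosine


print([round(cosine_warm_restarts_lr(0.1, s, T_0=4, T_mult=2), 4) for s in range(0, 14, 2)])
# [0.1, 0.05, 0.1, 0.0854, 0.05, 0.0146, 0.1]
```

With `T_0=4, T_mult=2`, restarts happen at steps 4 and 12 (cycles of 4, 8, 16, ... steps).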

3. Composite Scheduling Strategies

LambdaLR

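```python
oneflow.optim.lr_scheduler.LambdaLR(optimizer, lr_lambda, last_step=-1, verbose=False)
```

LambdaLR sets each parameter group's learning rate to its initial value multiplied by `lr_lambda(step)`. With `lr=1.0`, the lambda therefore returns the learning rate itself. For example, the Transformer schedule (linear warmup followed by inverse-square-root decay) can be written as:

```python
import oneflow as flow
from oneflow.optim.lr_scheduler import LambdaLR


def rate(step, model_size, factor, warmup):
    """
    Default step to 1 for LambdaLR: the scheduler starts at step 0,
    and 0 ** (-0.5) would raise ZeroDivisionError.
    """
    if step == 0:
        step = 1
    return factor * (
        model_size ** (-0.5) * min(step ** (-0.5), step * warmup ** (-1.5))
    )


d_model = 512  # model (embedding) dimension; example value, an assumption
model = CustomTransformer(...)  # placeholder for your own Transformer model
# lr=1.0 so that rate(...) is the effective learning rate
optimizer = flow.optim.Adam(
    model.parameters(), lr=1.0, betas=(0.9, 0.98), eps=1e-9
)
lr_scheduler = LambdaLR(
    optimizer=optimizer,
    lr_lambda=lambda step: rate(step, d_model, factor=1, warmup=3000),
)
```

SequentialLR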
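SequentialLR hands control from one scheduler to the next whenever the step count reaches a milestone, so `schedulers[i]` is active between `milestones[i-1]` and `milestones[i]`. The sketch below composes two hypothetical closed-form schedules: a 5-step linear warmup and a 20-step cosine decay. Our reading of `interval_rescaling` is an assumption: we take it to mean that the active schedule counts steps from its own milestone instead of from 0. The sketch also takes a single bool rather than a per-scheduler list.

```python
import math
from bisect import bisect_right


def sequential_lr(step, schedules, milestones, interval_rescaling=False):
    """Learning rate at `step` when schedules[i] is active on [milestones[i-1], milestones[i])."""
    if len(schedules) != len(milestones) + 1:
        raise ValueError("need exactly one more schedule than milestones")
    idx = bisect_right(milestones, step)  # index of the active schedule
    start = milestones[idx - 1] if idx > 0 else 0
    # Assumption: with interval_rescaling, the active schedule counts steps from its own milestone
    local_step = step - start if interval_rescaling else step
    return schedules[idx](local_step)


def warmup(s):
    # hypothetical 5-step linear warmup to a peak of 0.1
    return 0.1 * (s + 1) / 5


def cosine(s):
    # hypothetical 20-step cosine decay from 0.1 to 0
    return 0.1 * 0.5 * (1 + math.cos(math.pi * min(s, 20) / 20))


lrs = [sequential_lr(s, [warmup, cosine], milestones=[5], interval_rescaling=True) for s in range(26)]
print([round(lr, 4) for lr in lrs[:7]])
# [0.02, 0.04, 0.06, 0.08, 0.1, 0.1, 0.0994]
```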

```python
oneflow.optim.lr_scheduler.SequentialLR(
    optimizer: Optimizer,
    schedulers: Sequence[LRScheduler],
    milestones: Sequence[int],
    interval_rescaling: Union[Sequence[bool], bool] = False,
    last_step: int = -1,
    verbose: bool = False,
)
```

WarmupLR
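WarmupLR wraps a scheduler or a bare optimizer; with a bare optimizer, the wrapped learning rate is constant. It scales the wrapped output during the first `warmup_iters` steps. The sketch makes two assumptions: the `"linear"` method ramps the multiplier from `warmup_factor` to 1, and the `"constant"` method holds it at `warmup_factor`. `warmup_prefix` is not modeled. `warmup_lr` and `constant_schedule` are hypothetical helpers:

```python
def warmup_lr(step, inner, warmup_factor=1.0 / 3, warmup_iters=5, warmup_method="linear"):
    """Scale the wrapped schedule `inner(step)` during the first warmup_iters steps (sketch)."""
    if step >= warmup_iters:
        return inner(step)  # warmup finished: the wrapped schedule runs unchanged
    if warmup_method == "linear":
        alpha = step / warmup_iters
        factor = warmup_factor * (1 - alpha) + alpha  # ramps from warmup_factor toward 1
    elif warmup_method == "constant":
        factor = warmup_factor
    else:
        raise ValueError(f"unknown warmup_method: {warmup_method}")
    return inner(step) * factor


def constant_schedule(s):
    # hypothetical wrapped schedule: a fixed base_lr of 0.1 (like wrapping a bare optimizer)
    return 0.1


print([round(warmup_lr(s, constant_schedule, warmup_factor=0.1, warmup_iters=5), 4) for s in range(7)])
# [0.01, 0.028, 0.046, 0.064, 0.082, 0.1, 0.1]
```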

```python
oneflow.optim.lr_scheduler.WarmupLR(
    scheduler_or_optimizer: Union[LRScheduler, Optimizer],
    warmup_factor: float = 1.0 / 3,
    warmup_iters: int = 5,
    warmup_method: str = "linear",
    warmup_prefix: bool = False,
    last_step=-1,
    verbose=False,
)
```

ChainedScheduler
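ChainedScheduler applies several schedulers one after another, each rescaling the learning rate produced by the previous one. For multiplicative schedulers such as ConstantLR and ExponentialLR, this amounts to multiplying their factors. The hypothetical helper `chained_lr` assumes exactly that:

```python
def chained_lr(base_lr, step, multipliers):
    """Apply each scheduler's multiplier in turn, as in lr ==> LRScheduler_1 ==> ... ==> LRScheduler_N."""
    lr = base_lr
    for multiplier in multipliers:
        lr = lr * multiplier(step)  # each stage rescales the output of the previous stage
    return lr


def constant_stage(step):
    # behaves like ConstantLR(factor=0.1, total_iters=2)
    return 0.1 if step < 2 else 1.0


def exponential_stage(step):
    # behaves like ExponentialLR(gamma=0.9)
    return 0.9 ** step


print([round(chained_lr(1.0, s, [constant_stage, exponential_stage]), 4) for s in range(4)])
# [0.1, 0.09, 0.81, 0.729]
```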

```python
oneflow.optim.lr_scheduler.ChainedScheduler(schedulers)
```

```
lr ==> LRScheduler_1 ==> LRScheduler_2 ==> ... ==> LRScheduler_N
```

ReduceLROnPlateau
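Unlike the other schedulers, ReduceLROnPlateau reacts to a monitored metric rather than to the step count. When the metric fails to improve for more than `patience` consecutive calls, the learning rate is multiplied by `factor`, but not below `min_lr`. The class below re-implements that rule for `mode="min"` and `threshold_mode="rel"`. It follows the logic of PyTorch's ReduceLROnPlateau, whose parameter list OneFlow's signature mirrors. `PlateauSketch` is a hypothetical name, and its behavior is an approximation, not OneFlow's code.

```python
import math


class PlateauSketch:
    """Hypothetical re-implementation of the plateau rule for mode="min", threshold_mode="rel"."""

    def __init__(self, lr, factor=0.1, patience=10, threshold=1e-4, cooldown=0, min_lr=0.0, eps=1e-8):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.threshold = threshold
        self.cooldown = cooldown
        self.min_lr = min_lr
        self.eps = eps
        self.best = math.inf
        self.num_bad_epochs = 0
        self.cooldown_counter = 0

    def step(self, metric):
        # "rel" mode: an improvement must beat the best value by a relative margin
        if metric < self.best * (1 - self.threshold):
            self.best = metric
            self.num_bad_epochs = 0
        else:
            self.num_bad_epochs += 1
        if self.cooldown_counter > 0:
            # During cooldown, non-improving epochs are not counted
            self.cooldown_counter -= 1
            self.num_bad_epochs = 0
        if self.num_bad_epochs > self.patience:
            new_lr = max(self.lr * self.factor, self.min_lr)
            if self.lr - new_lr > self.eps:  # skip negligible updates
                self.lr = new_lr
            self.cooldown_counter = self.cooldown
            self.num_bad_epochs = 0
        return self.lr


sched = PlateauSketch(lr=1.0, factor=0.5, patience=2)
print([sched.step(loss) for loss in [1.0] * 7])
# [1.0, 1.0, 1.0, 0.5, 0.5, 0.5, 0.25]
```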

```python
oneflow.optim.lr_scheduler.ReduceLROnPlateau(
    optimizer,
    mode="min",
    factor=0.1,
    patience=10,
    threshold=1e-4,
    threshold_mode="rel",
    cooldown=0,
    min_lr=0,
    eps=1e-8,
    verbose=False,
)
```

Example usage:

```python
import oneflow as flow

# `model`, `train` and `validate` are placeholders for your own code
optimizer = flow.optim.SGD(model.parameters(), lr=0.1, momentum=0.9)
scheduler = flow.optim.lr_scheduler.ReduceLROnPlateau(optimizer, "min")

for epoch in range(10):
    train(...)
    val_loss = validate(...)
    # Note: call scheduler.step() after validate(), passing the monitored metric.
    scheduler.step(val_loss)
```

4. Practice

- https://github.com/basicv8vc/oneflow-cifar100-lr-scheduler


边栏推荐

- Shutter clip clipping component

- [in depth understanding of tcapulusdb technology] how to realize single machine installation of tmonitor

- Detailed explanation of serial port, com, UART, TTL, RS232 (485) differences

- 【深入理解TcaplusDB技术】Tmonitor后台一键安装

- leetcode:42. Rain water connection

- 边缘和物联网学术资源

- Intelligent digital signage solution

- Working for 7 years to develop my brother's career transition test: only by running hard can you get what you want~

- The data value reported by DTU cannot be filled into Tencent cloud database through Tencent cloud rule engine

- Technology sharing | do you understand the requirements of the tested project?

猜你喜欢

通过 OpenVINO Model Server和 TensorFlow Serving简化部署

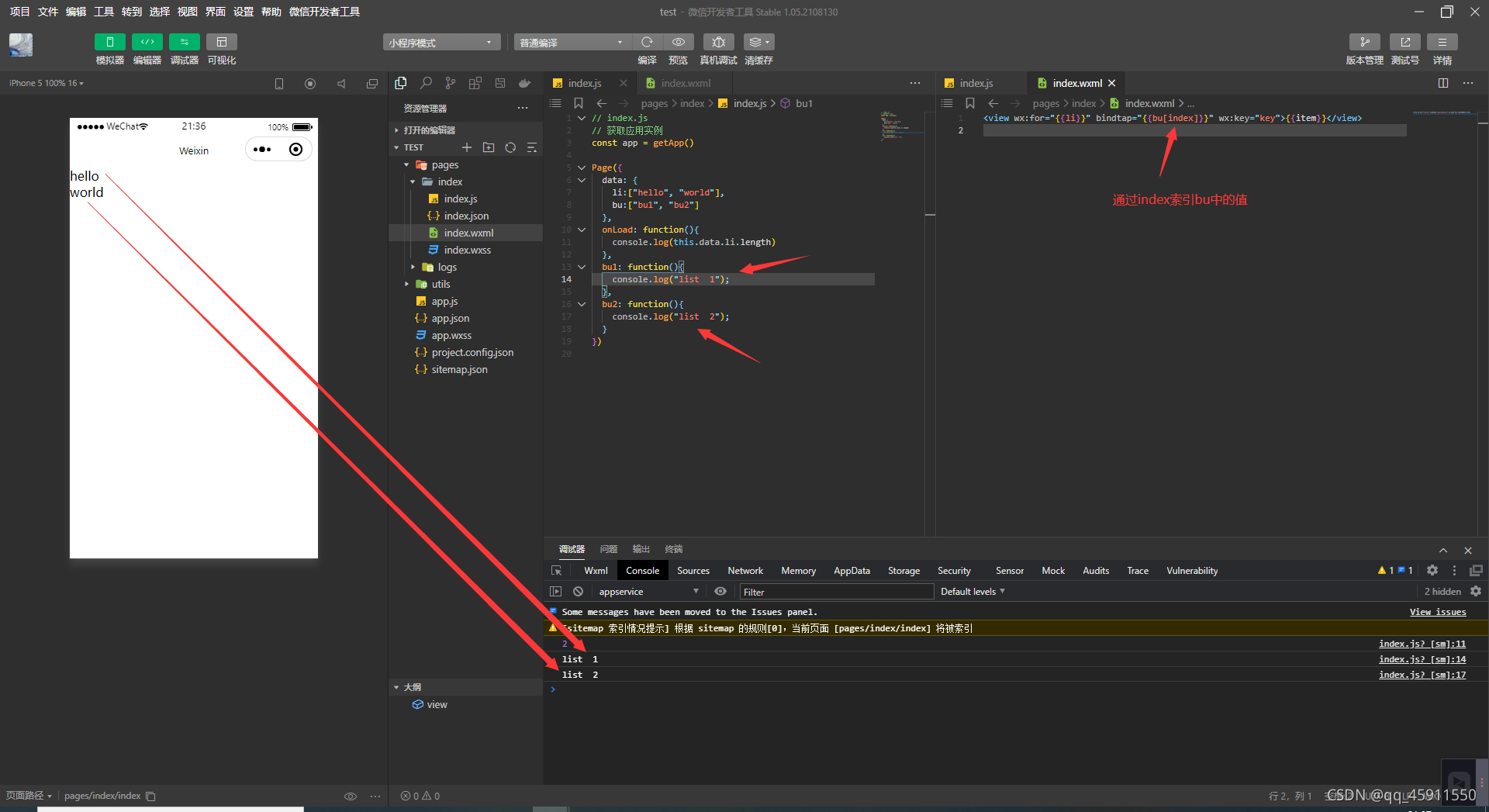

微信小程序之在wx:for中绑定事件

Crmeb second open SMS function tutorial

爱思唯尔-Elsevier期刊的校稿流程记录(Proofs)(海王星Neptune)(遇到问题:latex去掉章节序号)

Quartus call & design D trigger Simulation & timing wave verification

Assembly language interrupt and external device operation --06

PHP receiving and sending data

【深入理解TcaplusDB技术】单据受理之表管理

Technology creates value and teaches you how to collect wool

As a software testing practitioner, do you understand your development direction?

随机推荐

When pandas met SQL, a powerful tool library was born

kali使用

微信小程序之在wx:for中绑定事件

Ks007 realizes personal blog system based on JSP

Basic data types of C language and their printouts

SAP inventory gain / loss movement type 701 & 702 vs 711 & 712

Crmeb second open SMS function tutorial

Pyqt5 designer making tables

KS008基于SSM的新闻发布系统

WPF (c) open source control library: newbeecoder Nbexpander control of UI

父母-子女身高数据集的线性回归分析

[deeply understand tcapulusdb technology] tmonitor system upgrade

Flex attribute of wechat applet

人脸注册,解锁,响应,一网打尽

Vulnhub target os-hacknos-1

使用OpenVINOTM预处理API进一步提升YOLOv5推理性能

微信小程序之获取php后台数据库转化的json

Multi-Camera Detection of Social Distancing Reference Implementation

Flutter Clip剪裁组件

In depth analysis of mobilenet and its variants