当前位置:网站首页>Compare and explain common i/o models

Compare and explain common i/o models

2022-06-25 09:06:00 【wwzroom】

For common use I/O The model is compared and explained

1. The Internet I/O Model

1.1I/O Model related concepts

Sync / asynchronous : Focus on the message communication mechanism , That is, when the caller is waiting for the processing result of an event , Whether the callee provides notification of completion status .

- Sync :synchronous, The callee does not provide notification messages related to the processing results of the event , Need callers Take the initiative to ask whether things have been handled .

- asynchronous :asynchronous, The callee passes through the State 、 The notification or callback mechanism proactively notifies the caller The running state of the callee .

Blocking / Non blocking : Focus on the state of the caller before waiting for the result to return

Blocking :blocking, finger IO Operation requires When it's done Back to user space , Before the call result returns , The caller is suspended , I can't do anything else .

Non blocking :nonblocking, finger IO A status value is returned to the user immediately after the operation is called , and No need to wait IO Operation complete , Before the final call result is returned , The caller will not be suspended , You can do something else .

Sync + Blocking : Equivalent to people boiling water , Always watching , You can't do anything else , See if it's on .

Sync + Non blocking : When boiling water , People don't stare , You can do other things , But you still need to see whether it is open or not .

asynchronous + Blocking : The water heater will give an alarm when boiling water , But people can't do anything else , Keep an eye on .

asynchronous + Non blocking : The water heater will give an alarm when boiling water , People don't have to stay in front of them , You can do other things .

2. The Internet I/O Model

Blocking type 、 Non blocking 、 Reuse type 、 Signal driven 、 asynchronous

2.1 Blocking type I/O Model (blocking IO)

** Applications call the kernel through the system , Let the kernel process disk files , It can't be done right away , The application process has to wait ( Wait state ), The blocking time includes the first stage ( The kernel copies data from the network to the kernel space , Longer time ) And the second stage ( Copy kernel space data to user space ), After the user space receives the data , To do something else .** A user occupies a process , Limited processing capacity .

Blocking IO The model is the simplest I/O Model , User thread in kernel IO Blocked during operation

User thread through system call read launch I/O Read operations , From user space to kernel space . The kernel waits until the packet arrives , Then copy the received data to user space , complete read operation

Users need to wait read Read the data to buffer after , To continue processing received data . Whole I/O During request , User threads are blocked , This causes the user to initiate IO When asked , Can't do anything , Yes CPU The utilization rate of resources is not enough

advantage : The procedure is simple , While blocking waiting for data, the process / Thread hanging , It doesn't take up much CPU resources

shortcoming : Each connection requires a separate process / Thread processing alone , When concurrent requests are large, in order to maintain the program , Memory 、 Thread switching costs a lot ,apache Of preforck This mode is used .

Synchronous blocking : The program sends to the kernel I/O Wait for the kernel to respond after the request , If the kernel handles the request IO Operation cannot return immediately , The process will wait and no longer accept new requests , And check by the process rotation I/O Whether it is completed or not , When finished, the process will I/O The result is returned to Client, stay IO The process cannot accept requests from other clients without returning , And there's a process to check by itself I/O Whether it is completed or not , It's easy , But it's slower , Use less .

2.2 Non blocking I/O Model (nonblocking IO)

Although the process is not suspended , But finishing the task , Will constantly poll to ask if it is complete , This stage is not blocked , And I won't deal with anything else , But increases i/o frequency , The second stage will still be blocked .

User thread initiated IO Return immediately on request . But no data was read , User threads need to be initiated continuously IO request , Until the data arrives , To actually read the data , Carry on . namely “ polling ” There are two problems with the mechanism : If you have a large number of file descriptors, you have to wait , Then one by one read. This will bring a lot of Context Switch(read It's a system call , Every time you call it, you have to switch between user mode and core mode ). The polling time is not easy to grasp . Here is how long it takes to guess how long the data will arrive . The waiting time is set too long , The program response delay is too large ; Set too short , It will cause too many retries , Dry consumption CPU nothing more , It's a waste CPU The way , This model is rarely used directly , But in others IO Use non blocking in the model IO This feature .

Non blocking : The program sends to the kernel, please I/O Wait for the kernel to respond after the request , If the kernel handles the request IO Operation cannot return immediately IO result , Into the

Cheng will no longer wait , And continue to process other requests , But it still takes the process to look at the kernel at intervals I/O Whether it is completed or not .

See the figure above , When setting the connection to non blocking , When the application process system calls recvfrom When no data is returned , The kernel will immediately return a EWOULDBLOCK error , Instead of blocking until the data is ready . As shown in the figure above, a datagram is ready for the fourth call , So the data will be copied to Application process buffer , therefore recvfrom Data returned successfully

When an application process calls in such a loop recvfrom when , Call it polling polling . Doing so often costs a lot CPU Time , It's rarely used in practice

2.3 Multiplexing I/O type (I/O multiplexing)( good , High concurrency )

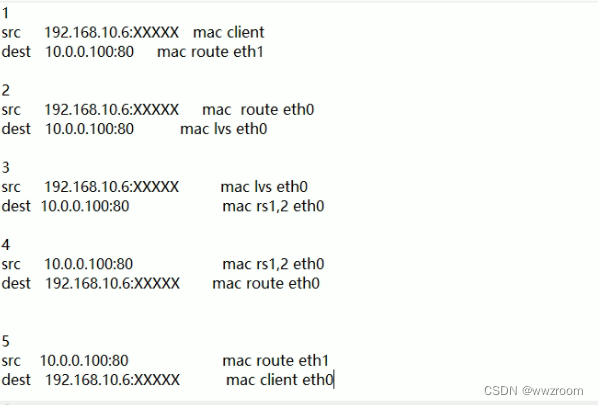

The user initiates a request , To the agent select, In the first phase ( Before the data is sent to the kernel ) It's also blocked , Knowledge is blocked in select On ,select Dealing with clients , Notify the process kernel of the processing status . The second stage ( Copy data from kernel space to the application buffer ) It's also blocked , This phase is process blocking . Reduce the interaction between users and the system , Give Way select Interact with users . Single process You can handle multiple network connections at the same time IO.

I/O multiplexing It mainly includes :select,poll,epoll Three system calls ,select/poll/epoll The good thing about it is that it's a single process You can handle multiple network connections at the same time IO.

Its basic principle is select/poll/epoll This function Will constantly poll all the socket, When a socket There's data coming in , Just inform the user of the process .

When the user process calls select, Then the whole process will be block, At the same time ,kernel Meeting “ monitor ” all select conscientious socket, When any one socket The data in is ready ,select It will return . At this time, the user process calls read operation , Take data from kernel Copy to user process .

Apache prefork This is the of this mode select,work yes poll Pattern .

IO Multiplexing (IO Multiplexing) : It's a mechanism , The program registers a set of socket File descriptor to the operating system , Express “ I want to watch these fd Is there a IO events , When you have it, tell the program to handle ”

IO Multiplexing is generally the same as NIO Used together .NIO and IO Multiplexing is relatively independent .NIO It just means IO API Always return immediately , It won't be Blocking; and IO Multiplexing is just a convenient notification mechanism provided by the operating system . The operating system does not force the two to work together , It can be used only IO Multiplexing + BIO, At this time, the current thread is still stuck .IO Multiplexing and NIO It makes sense to use together

IO Multiplexing refers to one or more processes specified by the kernel once it is found IO Condition ready to read , Notify the process

Multiple connections share a waiting mechanism , This model will block the process , But the process is blocked in select perhaps poll On these two system calls , Instead of blocking in the real IO Operationally

Users will first need to IO Add operations to select in , Wait at the same time select System call return . When data arrives ,IO To be activated ,select The function returns . User thread officially initiated read request , Read data and continue

From the perspective of process , Use select Function IO There is not much difference between request and synchronous blocking model , There's even more to add monitoring IO, And call select Extra operations on functions , Efficiency is even worse. . And blocked twice , But the first jam was select Upper time ,select You can monitor multiple IO Whether there is IO Operational readiness , It can handle multiple threads at the same time in the same thread IO Purpose of request . Not like blocking IO That kind of , Only one... Can be monitored at a time IO

Although the above method allows multiple processing within a single thread IO request , But every one of them IO The request process is still blocked ( stay select Blocking on function ), The average time is even longer than synchronous blocking IO The model is still long . If the user thread just registers what it needs IO request , And then do your own thing , Wait until the data arrives , Can be improved CPU Utilization ratio

IO Multiplexing is the most commonly used IO Model , But it's not asynchronous enough “ thoroughly ”, Because it uses thread blocking select system call . therefore IO Multiplexing can only be called asynchronous blocking IO Model , Not really asynchronous IO

Advantages and disadvantages

- advantage : Can be based on a blocking object , Waiting for ready on multiple descriptors at the same time , Instead of using multiple threads ( One thread per file descriptor ), This can greatly save system resources

- shortcoming : When the number of connections is small, the efficiency is better than multithreading + Blocking I/O The efficiency of the model is low , It could be more delayed , Because single connection processing requires 2 Secondary system call , The time taken up will increase

IO Multiplexing is used in the following situations :

- When the client processes multiple descriptors ( Generally interactive input and network socket interface ), You have to use I/O Reuse

- When a client processes multiple sockets at the same time , This is possible, but rarely

- When a server has to handle both listening sockets , Also deal with connected sockets , In general, it also needs to use I/O Reuse

- When a server is about to process TCP, And deal with UDP, Generally use I/O Reuse

- When a server has to process multiple services or protocols , Generally use I/O Reuse

2.4 Signal driven I/O Model (signal-driven IO)

** The first stage ( Data is copied from the network to kernel space , Longer time ) Don't block , Processes can do other things , A process can handle multiple requests ; The second stage ( Data is copied from kernel space to user space ) In a blocking state .** This can save time , Improve performance .

Signal driven I/O The process doesn't have to wait , You don't have to poll . Instead, let the kernel when the data is ready , Signal the process .

The call steps are , By system call sigaction , And register a callback function for signal processing , The call immediately returns , Then the main program can continue down , When there is I/O Operational readiness , That is, when the kernel data is ready , The kernel will generate a SIGIO The signal , And call back the registered signal callback function , In this way, the system can call... In the signal callback function recvfrom get data , Copy the data required by the user process from kernel space to user space

The advantage of this model is that the process is not blocked while waiting for the datagram to arrive . The user's main program can continue to execute , Just wait for the notification from the signal processing function .

In signal driven mode I/O In the model , The application program uses socket interface to drive signal I/O, And install a signal processing function , The process continues to run without blocking

When the data is ready , The process will receive a SIGIO The signal , It can be called in the signal processing function I/O Operating functions process data . advantage : The thread is not blocked while waiting for data , The kernel directly returns the call to receive the signal , It does not affect the process to continue to process other requests, so it can improve the utilization of resources

shortcoming : The signal I/O In large quantities IO During operation, it may be impossible to notify due to signal queue overflow

Asynchronous blocking : The program process sends... To the kernel IO After calling , Don't wait for the kernel to respond , You can continue to accept other requests , After the kernel receives the process request

On going IO If you can't return immediately , The kernel waits for the result , until IO When it is finished, the kernel notifies the process .

2.5 asynchronous I/O Model (asynchronous IO)( good )

The first and second stages , Processes can continue to execute , The process of complete emancipation .

asynchronous I/O And Signal driven I/O The biggest difference is , Signal driven is the kernel that tells the user when a process starts I/O operation , The asynchronous I/O The kernel notifies the user of the process I/O When is the operation completed , There is an essential difference between the two , It's equivalent to not having to eat in a restaurant , Just order a takeout , It also saves time waiting for dishes

Relative to synchronization I/O, asynchronous I/O Not in sequence . The user process goes on aio_read After the system call , Whether the kernel data is ready or not , Will be returned directly to the user process , Then the user mode process can do something else . wait until socket The data is ready , The kernel copies data directly to the process , It then sends a notification to the process from the kernel .IO Two phases , Processes are non blocking .

Signal driven IO When the kernel notifies the trigger handler , The signal handler also needs to block copying data from the kernel space buffer to the user space buffer , The asynchronous IO Directly after the second stage , The kernel directly informs the user that the thread can perform subsequent operations

advantage : asynchronous I/O Be able to make full use of DMA characteristic , Give Way I/O Operation and calculation overlap

shortcoming : To be truly asynchronous I/O, The operating system needs to do a lot of work . at present Windows Pass through IOCP True asynchrony I/O, stay Linux Under the system ,Linux 2.6 To introduce , at present AIO Is not perfect , So in Linux When implementing high concurrency network programming under IO Reuse model pattern + The architecture of multithreaded tasks can basically meet the requirements

Linux Provides AIO Library functions implement asynchrony , But it's rarely used . There's a lot of open source asynchrony right now IO library , for example libevent、libev、libuv.

Asynchronous non-blocking : The program process sends... To the kernel IO After calling , Don't wait for the kernel to respond , You can continue to accept other requests , Called by the kernel IO If you can't return immediately , The kernel will continue to handle other things , until IO After completion, notify the kernel of the results , The kernel will IO The completed result is returned to the process , During this period, the process can accept new requests , The kernel can also handle new things , So they don't affect each other , It can achieve larger and higher IO Reuse , Therefore, asynchronous non blocking is the most used communication method

3. Five kinds IO contrast

These five I/O In the model , The later , The less congestion , Theoretically, the efficiency is also the best. The first four belong to synchronization I/O, Because the real I/O operation (recvfrom) Will block the process / Threads , Only asynchrony I/O The model is related to POSIX Asynchronous defined I/O Match

4. Commonly used I/O Model comparison

select There was no notice , Active traversal , More processes , The longer it takes to traverse , The less efficient . A process can process at most one time 1024 A mission ,epoll Use callback , Proactive notification mechanism , There is no need to traverse , There is no upper limit to the number of processing tasks .

Select:

POSIX Required by , Currently, it is supported on almost all platforms , Its good cross platform support is also one of its advantages , It's essentially stored by setting or checking fd The data structure of the flag bit is used for the next processing

shortcoming

There is a maximum limit to the number of file descriptors a single process can monitor , stay Linux Generally speaking 1024, You can modify the macro definition

FD_SETSIZE, Then recompile the kernel to realize , But it will also reduce efficiency

A single process can monitor fd The quantity is limited , The default is 1024, Modifying this value requires recompiling the kernel

Yes socket It's a linear scan , That is, the polling method is adopted , Low efficiency

select The memory copy method is adopted to realize that the kernel will FD Message notification to user space , This one is used to store a large number of fd Data structure of , This will make the user space and kernel space to transfer the structure of the replication overhead

poll:

In essence, select There is no difference between , It copies the array passed in by the user into kernel space , And then look up each fd The corresponding device state has no limit on the maximum number of connections , The reason is that it's based on a linked list

a large number of fd The array is copied between user mode and kernel address space , And whether this kind of replication makes sense

poll Characteristic is “ Level trigger ”, If you report fd after , Not handled , So next time poll It will be reported again fd

select It is an edge trigger, that is, it is only notified once

epoll:

stay Linux 2.6 In the kernel select and poll Enhanced version of

Support level triggering LT And edge trigger ET, The biggest feature is edge triggered , It only tells the process what fd It has just become an on demand state , And only once

Use “ event ” Is ready to inform , adopt epoll_ctl register fd, Once it's time to fd be ready , The kernel uses something like callback Call back mechanism to activate the fd,epoll_wait You can receive the notice

advantage :

There is no maximum concurrent connection limit : It can be opened FD The upper limit is much larger than 1024(1G Can monitor about 10 Ten thousand ports ), Specific to see /proc/sys/fs/file-max, This value is related to the size of the system memory

Efficiency improvement : Non polling way , Not as FD The number increases and the efficiency decreases ; Only active is available FD Will call callback function , namely epoll The biggest advantage is that it only manages “ active ” The connection of , It's not about the total number of connections

Memory copy , utilize mmap(Memory Mapping) Speed up messaging with kernel space ; namely epoll Use mmap Reduce replication overhead

summary :

1、epoll It's just a group of API, Compared with select This scans all file descriptors ,epoll Read only ready file descriptors , Then add the event based readiness notification mechanism , So the performance is quite good

2、 be based on epoll Event multiplexing reduces the number of inter process switches , It makes the operating system do less useless work relative to user tasks .

3、epoll Than select In terms of multiplexing , Reduce the workload of traversal loop and memory copy , Because active connections account for only a small part of the total concurrent connections .

边栏推荐

- Summary of hardfault problem in RTOS multithreading

- Unity--Configurable Joint——简单教程,带你入门可配置关节

- (translation) the use of letter spacing to improve the readability of all capital text

- [MySQL] understanding and use of indexes

- 3 big questions! Redis cache exceptions and handling scheme summary

- Object. Can defineproperty also listen for array changes?

- 【OpenCV】—离散傅里叶变换

- 《JVM》对象内存分配的TLAB机制与G1中的TLAB流程

- 首期Techo Day腾讯技术开放日,628等你!

- Le labyrinthe des huit diagrammes de la bataille de cazy Chang'an

猜你喜欢

cazy長安戰役八卦迷宮

Mapping mode of cache

Analysis on the bottom calling process of micro service calling component ribbon

Where are the hotel enterprises that have been under pressure since the industry has warmed up in spring?

声纹技术(六):声纹技术的其他应用

![[opencv] - Discrete Fourier transform](/img/03/10ce3d7c5d99ead944b2cae8d0cec0.png)

[opencv] - Discrete Fourier transform

The meshgrid() function in numpy

Matplotlib plt Axis() usage

Cazy eight trigrams maze of Chang'an campaign

LVS-DR模式单网段案例

随机推荐

关掉一个线程

C language: count the number of words in a paragraph

第十五周作业

Jmeter中的断言使用讲解

How safe is the new bond

声纹技术(六):声纹技术的其他应用

Matplotlib plt Axis() usage

Oracle one line function Encyclopedia

matplotlib matplotlib中plt.axis()用法

Unity--Configurable Joint——简单教程,带你入门可配置关节

The meshgrid() function in numpy

Is it safe to buy stocks and open accounts through the account QR code of the account manager? Want to open an account for stock trading

获取扫码的客户端是微信还是支付宝

¥3000 | 录「TBtools」视频,交个朋友&拿现金奖!

cazy长安战役八卦迷宫

C language: find all integers that can divide y and are odd numbers, and put them in the array indicated by B in the order from small to large

Oracle-单行函数大全

3、 Automatically terminate training

Unknown table 'column of MySQL_ STATISTICS‘ in information_ schema (1109)

106. simple chat room 9: use socket to transfer audio