
Kubernetes GPUs: The Dilemma and the Breakthrough

2022-07-24 15:37:00 【InfoQ】


Kubernetes GPU Scheduling: Usage

To use a GPU, a container needs access to:

- the GPU device, such as /dev/nvidia0;
- the GPU driver directory, such as /usr/local/nvidia/*.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: cuda-vector-add
spec:
  restartPolicy: OnFailure
  containers:
    - name: cuda-vector-add
      image: "k8s.gcr.io/cuda-vector-add:v0.1"
      resources:
        limits:
          nvidia.com/gpu: 1
```

Kubernetes manages GPUs such as nvidia.com/gpu through Device Plugins. Supported GPU vendors:

- AMD
- NVIDIA

Once a vendor's plugin is installed, the cluster exposes a custom schedulable resource such as amd.com/gpu or nvidia.com/gpu (in general, <vendor>.com/gpu). GPUs can only be specified in the limits section, which means:

- You can specify GPU limits without specifying requests; Kubernetes uses the limit as the default request value.
- You can specify both limits and requests, but the two values must be equal.
- You cannot specify requests without also specifying limits.
- Containers (and Pods) do not share GPUs, and GPUs cannot be overcommitted.
- Each container can request one or more GPUs, but requesting a fraction of a GPU (a decimal value) is not allowed.
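The rules above can be expressed as a small validation function. This is a minimal sketch, not the API server's actual validation code: the function names `gpu_spec_error` and `effective_gpu_request`, and the plain-dict shape of the resources field, are assumptions made for illustration.

```python
from typing import Optional

GPU = "nvidia.com/gpu"


def gpu_spec_error(resources: dict, name: str = GPU) -> Optional[str]:
    """Return why a container's GPU resources break the rules, or None if they are valid."""
    limit = resources.get("limits", {}).get(name)
    request = resources.get("requests", {}).get(name)
    if limit is None:
        # Requests alone are rejected; no GPU fields at all simply means "no GPU".
        return "requests without limits" if request is not None else None
    for value in (limit, request):
        # Only whole GPUs: reject fractions, negatives, and booleans.
        if value is not None and (isinstance(value, bool) or not isinstance(value, int) or value < 0):
            return "GPU quantity must be a non-negative integer"
    if request is not None and request != limit:
        return "requests must equal limits"
    return None


def effective_gpu_request(resources: dict, name: str = GPU) -> int:
    """GPUs the scheduler accounts for: the limit doubles as the request."""
    error = gpu_spec_error(resources, name)
    if error is not None:
        raise ValueError(error)
    return resources.get("limits", {}).get(name, 0)


print(effective_gpu_request({"limits": {GPU: 1}}))  # 1
print(gpu_spec_error({"requests": {GPU: 1}}))       # requests without limits
print(gpu_spec_error({"limits": {GPU: 0.5}}))       # GPU quantity must be a non-negative integer
```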

GPUs are requested as extended resources. The same example with comments:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: cuda-vector-add
spec:
  restartPolicy: OnFailure
  containers:
    - name: cuda-vector-add
      # https://github.com/kubernetes/kubernetes/blob/v1.7.11/test/images/nvidia-cuda/Dockerfile
      image: "k8s.gcr.io/cuda-vector-add:v0.1"
      resources:
        limits:
          nvidia.com/gpu: 1 # requesting 1 GPU
```

Device Plugins

Deploying the AMD GPU device plugin

- Kubernetes nodes must have the AMD GPU Linux driver preinstalled.

```shell
kubectl create -f https://raw.githubusercontent.com/RadeonOpenCompute/k8s-device-plugin/r1.10/k8s-ds-amdgpu-dp.yaml
```

Deploying the NVIDIA GPU device plugin

- Kubernetes nodes must have the NVIDIA driver preinstalled.
- Kubernetes nodes must have nvidia-docker 2.0 preinstalled.
- Docker's default runtime must be set to nvidia-container-runtime instead of runc.
- NVIDIA driver version ~= 384.81.
- Kubernetes version >= 1.10.

Preparing the GPU node

Install the nvidia-docker2 package rather than only nvidia-container-toolkit: Kubernetes does not use Docker's --gpus option, so it relies on the nvidia runtime being configured in Docker.

```shell
# Add the package repositories
$ distribution=$(. /etc/os-release;echo $ID$VERSION_ID)
$ curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | sudo apt-key add -
$ curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list

$ sudo apt-get update && sudo apt-get install -y nvidia-docker2
$ sudo systemctl restart docker
```

Then make nvidia the default runtime in /etc/docker/daemon.json:

```json
{
    "default-runtime": "nvidia",
    "runtimes": {
        "nvidia": {
            "path": "/usr/bin/nvidia-container-runtime",
            "runtimeArgs": []
        }
    }
}
```
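If daemon.json already contains other settings, overwriting it with the snippet above would drop them. A minimal sketch of merging the nvidia runtime into existing content instead, assuming the file is plain JSON; the function name `enable_nvidia_runtime` and the sample mirror URL are hypothetical.

```python
import json


def enable_nvidia_runtime(daemon_json: str) -> str:
    """Return daemon.json content with the nvidia runtime registered and made the default.

    Keys already in the file (log settings, registry mirrors, other runtimes) are kept.
    """
    config = json.loads(daemon_json) if daemon_json.strip() else {}
    config.setdefault("runtimes", {})["nvidia"] = {
        "path": "/usr/bin/nvidia-container-runtime",
        "runtimeArgs": [],
    }
    config["default-runtime"] = "nvidia"
    return json.dumps(config, indent=4)


existing = '{"log-driver": "json-file", "registry-mirrors": ["https://mirror.example.com"]}'
print(enable_nvidia_runtime(existing))
```

To apply it, read /etc/docker/daemon.json, write the result back with root privileges, and restart Docker.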

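The runtimes entry registers nvidia-container-runtime with Docker, and default-runtime makes it the runtime for every container, including the ones Kubernetes creates. Restart Docker after editing the file.

Enabling GPU support in Kubernetes

Once all GPU nodes are configured, deploy the device plugin DaemonSet:

```shell
$ kubectl create -f https://raw.githubusercontent.com/NVIDIA/k8s-device-plugin/v0.11.0/nvidia-device-plugin.yml
```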

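The nvidia-device-plugin can also be deployed with Helm.

Clusters with different types of GPUs

If nodes carry different GPU models, label each node with its accelerator type and use node selectors to schedule Pods onto the right nodes:

```shell
# Label your nodes with the type of accelerator they have
kubectl label nodes <node-with-k80> accelerator=nvidia-tesla-k80
kubectl label nodes <node-with-p100> accelerator=nvidia-tesla-p100
```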
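With the labels in place, a Pod picks a GPU model through a nodeSelector such as accelerator: nvidia-tesla-p100, and the scheduler also checks that the node still has enough GPUs. A simplified sketch of that two-part filter using plain Python objects instead of Kubernetes API types; `Node`, `free_gpus`, and `feasible_nodes` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    labels: dict = field(default_factory=dict)
    free_gpus: int = 0  # allocatable nvidia.com/gpu minus GPUs already requested


def feasible_nodes(nodes, node_selector: dict, gpus: int):
    """Names of nodes that match every selector label and still have enough GPUs."""
    return [
        n.name
        for n in nodes
        if all(n.labels.get(k) == v for k, v in node_selector.items())
        and n.free_gpus >= gpus
    ]


nodes = [
    Node("node-a", {"accelerator": "nvidia-tesla-k80"}, free_gpus=4),
    Node("node-b", {"accelerator": "nvidia-tesla-p100"}, free_gpus=1),
    Node("node-c", {"accelerator": "nvidia-tesla-p100"}, free_gpus=0),
]
print(feasible_nodes(nodes, {"accelerator": "nvidia-tesla-p100"}, gpus=1))  # ['node-b']
```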

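Without the Device Plugin

Without a device plugin, GPU access can be configured through environment variables that the NVIDIA container runtime reads, for example NVIDIA_DRIVER_CAPABILITIES. Reference: https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/user-guide.html

```yaml
containers:
  - env:
      - name: NVIDIA_DRIVER_CAPABILITIES
        value: compute,utility,video
```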

Kubernetes GPU Scheduling: How It Works

Kubernetes supports GPUs through two mechanisms:

- Extended Resources, which let users define custom resource names. Resources are measured in whole integers, and the goal is to support different heterogeneous devices (RDMA, FPGA, AMD GPU, and so on) through a common pattern rather than a design specific to NVIDIA GPUs.
- The Device Plugin Framework, which lets third-party device vendors manage the full device lifecycle outside Kubernetes. The framework is the bridge between Kubernetes and the Device Plugin modules: on one side it reports device information to Kubernetes, and on the other it handles device scheduling and selection.

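Reporting an Extended Resource

Extended Resources are a node-level API and can be used independently of Device Plugins. Reporting one only requires a PATCH to the status of the Node object, which a curl command can do. Note that the "/" in nvidia.com/gpu must be written as "~1" in the JSON Patch path; otherwise it is read as a nested path.

```shell
# Start a kubectl proxy (it stays in the foreground) so that curl can talk to the Kubernetes API server directly
$ kubectl proxy

# Perform the PATCH operation
$ curl --header "Content-Type: application/json-patch+json" \
  --request PATCH \
  --data '[{"op": "add", "path": "/status/capacity/nvidia.com~1gpu", "value": "1"}]' \
  http://localhost:8001/api/v1/nodes/<your-node-name>/status
```

The node's status then records one nvidia.com/gpu:

```yaml
apiVersion: v1
kind: Node
...
status:
  capacity:
    cpu: 2
    memory: 2049008Ki
    nvidia.com/gpu: 1
```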
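The escaping rule comes from JSON Pointer (RFC 6901), which JSON Patch uses for paths: "~" becomes "~0" and "/" becomes "~1", in that order. A small sketch that builds the same PATCH request with the standard library; it only constructs the request and does not send it. The helper names and the node name my-node are illustrative, and the URL assumes kubectl proxy on its default port 8001, as above.

```python
import json
import urllib.request


def json_pointer_escape(token: str) -> str:
    """Escape one JSON Pointer reference token (RFC 6901): '~' -> '~0', then '/' -> '~1'."""
    return token.replace("~", "~0").replace("/", "~1")


def capacity_patch(resource: str, quantity: int) -> str:
    """JSON Patch body that adds an extended resource to a node's status.capacity."""
    path = "/status/capacity/" + json_pointer_escape(resource)
    # Quantities are sent as strings, matching the curl example.
    return json.dumps([{"op": "add", "path": path, "value": str(quantity)}])


def build_request(node: str, body: str) -> urllib.request.Request:
    """PATCH request against the API server exposed by `kubectl proxy`."""
    return urllib.request.Request(
        f"http://localhost:8001/api/v1/nodes/{node}/status",
        data=body.encode(),
        headers={"Content-Type": "application/json-patch+json"},
        method="PATCH",
    )


body = capacity_patch("nvidia.com/gpu", 1)
print(body)  # [{"op": "add", "path": "/status/capacity/nvidia.com~1gpu", "value": "1"}]
req = build_request("my-node", body)  # send with urllib.request.urlopen(req) while the proxy runs
```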

With a Device Plugin, this manual PATCH is unnecessary: the plugin reports nvidia.com/gpu itself.

Device Plugin programming model and working mechanism

The Device Plugin workflow has two parts:

- resource reporting at startup;
- scheduling and running when a user's Pod uses the device.

A plugin is built around two methods:

- ListAndWatch reports the resources and also provides a health-check mechanism. When a device becomes unhealthy, the plugin reports the unhealthy device ID to Kubernetes so that the Device Plugin Framework removes it from the schedulable devices.
- Allocate is called by kubelet when a container is being created. Its main input is the list of device IDs the container will use; it returns what the container needs at startup: devices, volumes, and environment variables.
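To make the two methods concrete, here is an in-memory mock of a GPU device plugin. The real interface is a gRPC service in the kubelet device plugin API, where ListAndWatch streams device lists and Allocate returns devices, mounts, and environment variables per container; the Python classes, field names, and the GPU-N to /dev/nvidiaN mapping below are simplified assumptions, not that API.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterator, List


@dataclass
class Device:
    id: str
    healthy: bool = True


@dataclass
class ContainerAllocation:
    devices: List[str] = field(default_factory=list)    # device nodes exposed to the container
    mounts: List[str] = field(default_factory=list)     # host directories mounted into it
    envs: Dict[str, str] = field(default_factory=dict)  # environment variables set on it


class MockGpuPlugin:
    """In-memory stand-in for a GPU device plugin (no gRPC, no real hardware)."""

    resource_name = "nvidia.com/gpu"

    def __init__(self, count: int) -> None:
        self.devices = {f"GPU-{i}": Device(f"GPU-{i}") for i in range(count)}
        self._updates: List[List[Device]] = [self._snapshot()]

    def _snapshot(self) -> List[Device]:
        return [Device(d.id, d.healthy) for d in self.devices.values()]

    def mark_unhealthy(self, device_id: str) -> None:
        """A failed health check queues a fresh device list for ListAndWatch."""
        self.devices[device_id].healthy = False
        self._updates.append(self._snapshot())

    def list_and_watch(self) -> Iterator[List[Device]]:
        """Yield the full device list each time it changes.

        The real RPC is a long-lived stream; this mock simply drains pending updates.
        """
        while self._updates:
            yield self._updates.pop(0)

    def allocate(self, device_ids: List[str]) -> ContainerAllocation:
        """Translate the device IDs chosen by kubelet into what the container needs."""
        indices = [dev_id.split("-")[1] for dev_id in device_ids]
        return ContainerAllocation(
            devices=[f"/dev/nvidia{i}" for i in indices],
            mounts=["/usr/local/nvidia"],
            envs={"NVIDIA_VISIBLE_DEVICES": ",".join(indices)},
        )


plugin = MockGpuPlugin(2)
plugin.mark_unhealthy("GPU-1")
updates = list(plugin.list_and_watch())
print([[d.healthy for d in u] for u in updates])  # [[True, True], [True, False]]
print(plugin.allocate(["GPU-0"]).devices)          # ['/dev/nvidia0']
```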

Resource reporting and monitoring

- Step 1 is Device Plugin registration. Kubernetes must know which Device Plugin to talk to, because a node may have several kinds of devices. Acting as a client, the Device Plugin reports three things to kubelet:
  - **Who am I?** The name of the device resource it manages, such as GPU or RDMA;
  - **Where am I?** The location of the unix socket file the plugin listens on, so that kubelet can call it;
  - The interaction protocol, that is, the API version.
- Step 2 is starting the service. The Device Plugin starts a gRPC server and from then on serves kubelet through it; the listening address and the API version were already provided in step 1.
- Step 3: once the gRPC server is running, kubelet opens a long-lived ListAndWatch connection to the Device Plugin to discover device IDs and device health. When the Device Plugin detects an unhealthy device, it proactively notifies kubelet. If the device is idle, kubelet removes it from the allocatable list; if a Pod is already using it, kubelet does nothing, because killing that Pod would be too risky.
- Step 4: kubelet exposes these devices in the Node status and sends the device count to the Kubernetes api-server. The scheduler then makes decisions based on this information.
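The kubelet side of these four steps can be modeled as bookkeeping over the device lists it receives. This is a toy model under stated assumptions: the `Registration` fields mirror the three things a plugin reports (resource name, socket endpoint, API version), the socket name nvidia-gpu.sock is only an example, and `DeviceManager` is a hypothetical class rather than kubelet's actual Device Plugin Manager.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass(frozen=True)
class Registration:
    resource_name: str  # "who am I", e.g. nvidia.com/gpu
    endpoint: str       # "where am I": socket file name under /var/lib/kubelet/device-plugins/
    version: str        # device plugin API version spoken by the plugin


@dataclass
class ResourceState:
    healthy: Set[str] = field(default_factory=set)
    in_use: Set[str] = field(default_factory=set)


class DeviceManager:
    """Toy model of kubelet's per-resource device bookkeeping."""

    def __init__(self) -> None:
        self.plugins: Dict[str, Registration] = {}
        self.state: Dict[str, ResourceState] = {}

    def register(self, reg: Registration) -> None:
        """Step 1: remember which plugin endpoint serves which resource."""
        self.plugins[reg.resource_name] = reg
        self.state.setdefault(reg.resource_name, ResourceState())

    def on_list_and_watch(self, resource: str, health: Dict[str, bool]) -> None:
        """Step 3: apply the latest device list streamed by the plugin."""
        self.state[resource].healthy = {dev for dev, ok in health.items() if ok}
        # in_use is deliberately untouched: a Pod on a now-unhealthy device keeps running.

    def free_devices(self, resource: str) -> Set[str]:
        """Devices kubelet may still hand to new containers."""
        s = self.state[resource]
        return s.healthy - s.in_use

    def reported_count(self, resource: str) -> int:
        """Step 4: only this number (healthy devices) reaches the API server."""
        return len(self.state[resource].healthy)


mgr = DeviceManager()
mgr.register(Registration("nvidia.com/gpu", "nvidia-gpu.sock", "v1beta1"))
mgr.on_list_and_watch("nvidia.com/gpu", {"GPU-0": True, "GPU-1": True})
mgr.state["nvidia.com/gpu"].in_use.add("GPU-1")  # a running Pod holds GPU-1
mgr.on_list_and_watch("nvidia.com/gpu", {"GPU-0": True, "GPU-1": False})
print(mgr.free_devices("nvidia.com/gpu"), mgr.reported_count("nvidia.com/gpu"))  # {'GPU-0'} 1
```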

Note that kubelet reports only the number of GPUs to the api-server. The device IDs stay with kubelet, and topology such as NVLink or PCIe connectivity is not reported at all.

Pod scheduling and running process

- Step 1: to use a GPU, a Pod only needs to declare the GPU resource and quantity under limits in its resources, as in the earlier example (for instance nvidia.com/gpu: 1). Kubernetes finds a node that satisfies the quantity, decreases that node's available GPU count by 1, and binds the Pod to the Node.
- Step 2: after binding, the kubelet on that node creates the container. When kubelet sees that the container requests a GPU, **it delegates to its internal Device Plugin Manager, which picks an available GPU from the GPU IDs it holds and assigns it to the container.** kubelet then sends an Allocate request to the local Device Plugin, carrying the list of device IDs to assign to the container.
- Step 3: on receiving the AllocateRequest, the Device Plugin looks up the device paths, driver directories, and environment variables for those device IDs and returns them to kubelet as an AllocateResponse.
- Step 4: once kubelet receives the device paths and driver directories in the AllocateResponse, it assigns the GPU to the container: Docker creates the container as instructed by kubelet, with the GPU device present and the required driver directory mounted. This completes the process of Kubernetes allocating a GPU to a Pod.
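The split of responsibilities in these steps, with the scheduler seeing only counts and kubelet choosing concrete device IDs, can be simulated in a few lines. This is an illustrative sketch: `schedule`, `kubelet_start`, the two-node inventory, and the ID-to-path mapping are all hypothetical, and the container config is a plain dict rather than a real runtime spec.

```python
from typing import Dict, List

# Scheduler view: per-node GPU counts only (what kubelet reported).
allocatable = {"node-a": 2, "node-b": 1}
requested = {"node-a": 0, "node-b": 0}

# Kubelet view on each node: concrete device IDs still free.
free_ids: Dict[str, List[str]] = {"node-a": ["GPU-0", "GPU-1"], "node-b": ["GPU-0"]}


def schedule(gpus: int) -> str:
    """Step 1: pick the first node with enough spare GPUs and charge the count."""
    for node, total in allocatable.items():
        if total - requested[node] >= gpus:
            requested[node] += gpus
            return node
    raise RuntimeError("no node has enough GPUs")


def kubelet_start(node: str, gpus: int) -> Dict[str, List[str]]:
    """Steps 2-4: choose device IDs, 'Allocate' them, and build the container config."""
    ids = [free_ids[node].pop(0) for _ in range(gpus)]
    # The device plugin would translate IDs into device paths and driver mounts.
    devices = ["/dev/nvidia" + dev_id.split("-")[1] for dev_id in ids]
    return {"device_ids": ids, "devices": devices, "mounts": ["/usr/local/nvidia"]}


node = schedule(1)
print(node)                    # node-a
print(kubelet_start(node, 1))  # {'device_ids': ['GPU-0'], 'devices': ['/dev/nvidia0'], 'mounts': ['/usr/local/nvidia']}
```

The scheduler never learns which card was chosen; that knowledge lives only in kubelet, which is the root of the limitations discussed next.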

In summary:

- At startup, the Device Plugin registers its resource name (such as nvidia.com/gpu) with kubelet over gRPC through /var/lib/kubelet/device-plugins/kubelet.sock.
- Kubelet reports the device count in the Node status to the API server, and the scheduler schedules based on this information.
- Kubelet also keeps a long-lived ListAndWatch connection to the Device Plugin; when the plugin detects an unhealthy device, it notifies kubelet.
- When an application requests GPU resources, the Device Plugin locates the device paths and driver files for the device IDs that kubelet assigned in the Allocate request.
- Kubelet creates the container using the information provided by the Device Plugin.

Kubernetes GPU Scheduling: Community Enhancements

Device Plugin: usable, but not easy to use

1. Coarse GPU scheduling granularity

- GPUs can only be set in limits, so requests cannot be used on their own: set either only limits or both, with equal values; you cannot set requests without limits.
- Requesting a fraction of a GPU is not allowed.

2. Limited GPU sharing

- GPUs cannot be shared between Pods or containers, and GPUs cannot be overcommitted (which is why our production services run as a DaemonSet).

3. No cluster-wide view of GPU resources

- There is no straightforward way to get cluster-level GPU information, such as which Pod or container is bound to which GPU card, or how many GPU cards are in use.
- Kubernetes allocates and schedules GPUs through extended resources, which amounts to adding and subtracting card counts on each node. To see how GPU cards are allocated, a user has to walk all nodes and compute it. And because the resource is a scalar, the binding between Pods/containers and cards cannot be obtained at all. These problems are tolerable in whole-card mode but become severe in fine-grained sharing mode.
- The root cause is that GPU resource scheduling is actually done by kubelet.
- The global scheduler plays a very limited role: as a traditional Kubernetes scheduler, it can only handle GPU counts. Once devices are heterogeneous and requirements cannot be described by a simple number, for example a Pod that wants to run on two GPUs connected by NVLink, the Device Plugin cannot handle it at all.

4. No support for multiple GPU backends

- Each GPU technology (nvidia-docker, qGPU, vCUDA, GPU share, GPU pooling) needs its own components deployed separately, so they cannot be scheduled and managed uniformly.
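The NVLink example in point 3 is easy to reproduce with toy data: two nodes report the same GPU count, but only one has NVLink-connected pairs. The topology below is invented for illustration; a count-based filter accepts both nodes, while a topology-aware check, which the default Device Plugin flow has no way to express to the scheduler, accepts only one.

```python
from itertools import combinations
from typing import Dict, Set

# Hypothetical topology: which GPU pairs on each node are linked by NVLink.
nodes: Dict[str, Dict] = {
    "node-a": {"gpus": 4, "nvlink": {frozenset({0, 1}), frozenset({2, 3})}},
    "node-b": {"gpus": 4, "nvlink": set()},  # GPUs connected only over PCIe
}


def count_filter(needed: int) -> Set[str]:
    """What the default scheduler can check: the scalar GPU count."""
    return {name for name, n in nodes.items() if n["gpus"] >= needed}


def nvlink_pair_filter() -> Set[str]:
    """Nodes that can offer two GPUs joined by NVLink."""
    return {
        name
        for name, n in nodes.items()
        if any(frozenset(pair) in n["nvlink"] for pair in combinations(range(n["gpus"]), 2))
    }


print(sorted(count_filter(2)))       # ['node-a', 'node-b']
print(sorted(nvlink_pair_filter()))  # ['node-a']
```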

Community GPU sharing practices

Resource isolation mainly relies on virtualization, while most sharing solutions focus on GPU resource scheduling. NVIDIA offers two approaches:

GRID: used mostly in virtual machine scenarios and implemented in the driver. Isolation is better and performance is good, but it is **not open source**.

MPS: suited to container scenarios and implemented in software. Isolation is relatively weak, and it is **not open source** either.

1. Alibaba's GPU sharing practice

- **Let more inference services share the same GPU card:** users describe requests for a shareable resource through the API, and such resources can be scheduled.
- Isolation of the shared resource is not supported.
- It still uses the Kubernetes Extended Resource definition, but the smallest unit of measurement changes from one GPU card to one MiB of GPU memory.
- A user's GPU request cannot exceed one card; that is, the upper limit of a request is a single card.

The design builds on three mechanisms: the Extended Resource definition, the Scheduler Extender mechanism, and the Device Plugin mechanism.

- Use the Kubernetes Extended Resource mechanism to redefine GPU resources, mainly in terms of GPU memory and GPU count.
- Use the Device Plugin mechanism to report the node's total GPU resources to kubelet, which in turn reports them to the Kubernetes API Server.
- Use the Kubernetes Scheduler Extender mechanism to extend the scheduler: during the global scheduler's Filter and Bind phases, it checks whether a single GPU card on the node can provide enough GPU memory, and at Bind time it records the GPU allocation result in the Pod spec as an annotation so that later Filter passes can check the allocation.
- Node operation: when kubelet receives the event that a Pod was bound to its node, it creates the actual Pod. In the process, kubelet calls the Allocate method of the GPU Share Device Plugin, with the Pod's requested gpu-mem as the parameter. Inside Allocate, the plugin sets up the Pod according to the GPU Share Scheduler Extender's scheduling decision.

Roadmap:

- Integrate NVIDIA MPS as an isolation option
- Automated deployment for Kubernetes clusters deployed with kubeadm
- High availability for the scheduler extender
- A general solution for GPU, RDMA, and other devices
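The Filter and Bind checks in Alibaba's design reduce to per-card arithmetic on GPU memory. The sketch below is a model under assumptions, not the project's code: card sizes are made up, the annotation key example.com/gpu-index is hypothetical, and the binpack policy (choose the fitting card with the least free memory) is one reasonable choice rather than the documented one.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class GpuCard:
    index: int
    total_mib: int
    used_mib: int = 0

    @property
    def free_mib(self) -> int:
        return self.total_mib - self.used_mib


def filter_node(cards: List[GpuCard], request_mib: int) -> bool:
    """Filter: the node qualifies only if ONE card can hold the whole request.

    Summing free memory across cards would be wrong, because a request
    never spans more than a single card.
    """
    return any(c.free_mib >= request_mib for c in cards)


def bind(cards: List[GpuCard], request_mib: int, annotations: Dict[str, str]) -> Optional[int]:
    """Bind: choose a card (binpack), charge it, and record the choice on the Pod."""
    fitting = [c for c in cards if c.free_mib >= request_mib]
    if not fitting:
        return None
    card = min(fitting, key=lambda c: c.free_mib)
    card.used_mib += request_mib
    annotations["example.com/gpu-index"] = str(card.index)  # hypothetical key
    return card.index


node = [GpuCard(0, 16384, used_mib=12288), GpuCard(1, 16384, used_mib=2048)]
pod_annotations: Dict[str, str] = {}
print(filter_node(node, 8192))   # True: card 1 has 14336 MiB free
print(filter_node(node, 16000))  # False: 18432 MiB free in total, but no single card fits
print(bind(node, 4096, pod_annotations), pod_annotations)  # 0 {'example.com/gpu-index': '0'}
```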

2. Huawei's GPU sharing practice

- At the scheduling level, multiple containers can share one GPU card.
- Currently a request is expressed as an amount of GPU memory, which can be part of one GPU's memory or more than one GPU card's memory capacity.
- Isolation of GPU memory and compute was originally planned, but it was not pursued because hacking the CUDA API or the driver might involve legal risks.
- Directly specifying a GPU count is not supported yet; this device plugin was developed specifically for GPU sharing.

- Create a pod that requests the volcano.sh/gpu-memory resource.
- Volcano allocates GPU resources for the pod and adds the following annotations:

```yaml
annotations:
  volcano.sh/gpu-index: "0"
  volcano.sh/predicate-time: "1593764466550835304"
```

- kubelet watches the pods bound to its node and, before running the container, calls the Allocate API to set the environment variables:

```yaml
env:
  NVIDIA_VISIBLE_DEVICES: "0" # GPU card index
  VOLCANO_GPU_ALLOCATED: "1024" # GPU allocated
  VOLCANO_GPU_TOTAL: "11178" # GPU memory of the card
```
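The last step can be pictured as a function that turns the scheduler's annotation into the environment variables shown above. This is a hypothetical reconstruction from the example values only: the annotation and variable names come from the text, while the function `allocate_env`, its inputs, and the per-card memory table are assumptions, not Volcano's device plugin code.

```python
from typing import Dict


def allocate_env(annotations: Dict[str, str], requested_memory: int,
                 card_memory: Dict[int, int]) -> Dict[str, str]:
    """Build the container env from the GPU index Volcano wrote into the Pod."""
    index = int(annotations["volcano.sh/gpu-index"])
    return {
        "NVIDIA_VISIBLE_DEVICES": str(index),            # GPU card index
        "VOLCANO_GPU_ALLOCATED": str(requested_memory),  # GPU memory allocated to this Pod
        "VOLCANO_GPU_TOTAL": str(card_memory[index]),    # GPU memory of the whole card
    }


env = allocate_env(
    {"volcano.sh/gpu-index": "0", "volcano.sh/predicate-time": "1593764466550835304"},
    requested_memory=1024,
    card_memory={0: 11178},
)
print(env)
```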

The Volcano device plugin reports two resources, volcano.sh/gpu-memory and volcano.sh/gpu-number, and is responsible for:

- collecting gpu-number and gpu-memory data for the nodes in the cluster;
- monitoring GPU health;
- mounting GPU resources for GPU workloads in the cluster.

- GPU virtualization: in inference and GPU development scenarios, GPU utilization is generally low. Volcano already supports multiple containers sharing a GPU and plans to strengthen compute and GPU memory isolation, so that utilization improves while interference between services is reduced.
- Proportional partitioning of multi-dimensional resources on GPU nodes

- "Proportional partitioning of multi-dimensional resources on GPU nodes" is one of the headline features of this release. It addresses a common problem: CPU and other resources on a GPU node get used up by other jobs, so GPU jobs starve while GPUs sit idle and are wasted. The feature was contributed by Volcano community partner Zhongke Leinao. In traditional schedulers, scarce resources such as GPUs are allocated independently of CPU and other resources, so CPU-only jobs can be placed directly on GPU nodes without considering the CPU and memory needs of GPU jobs, and nothing is reserved for them. This feature lets users set a dominant resource (usually GPU) and configure reservation ratios for the supporting resources (for example GPU:CPU:Memory=1:4:32). The scheduler always keeps the idle GPU, CPU, and memory on a GPU node at or above this ratio, so any GPU job that fits the ratio can be scheduled onto the node without leaving GPUs wasted. Compared with other approaches in the industry, such as a dedicated scheduler for GPU nodes or forbidding CPU jobs from GPU nodes, this improves node resource utilization and is more flexible to use.
- For design and usage details, see: https://github.com/volcano-sh/volcano/blob/master/docs/design/proportional.md
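The reservation rule can be stated as a simple admission check for jobs that request no GPU. This sketch assumes CPU is counted in cores and memory in GiB, so that 1:4:32 reads as 1 GPU : 4 cores : 32 GiB; `Idle` and `can_place_cpu_job` are hypothetical names, and the design document linked above describes Volcano's actual behavior.

```python
from dataclasses import dataclass


@dataclass
class Idle:
    gpu: int
    cpu: float      # idle cores
    mem_gib: float  # idle memory in GiB


RATIO_CPU, RATIO_MEM = 4, 32  # GPU:CPU:Memory = 1:4:32


def can_place_cpu_job(idle: Idle, cpu: float, mem_gib: float) -> bool:
    """Admit a GPU-less job only if the node keeps enough CPU and memory for its idle GPUs."""
    cpu_left = idle.cpu - cpu
    mem_left = idle.mem_gib - mem_gib
    return cpu_left >= idle.gpu * RATIO_CPU and mem_left >= idle.gpu * RATIO_MEM


node = Idle(gpu=2, cpu=16, mem_gib=128)              # must keep >= 8 cores and 64 GiB
print(can_place_cpu_job(node, cpu=8, mem_gib=32))    # True: 8 cores and 96 GiB remain
print(can_place_cpu_job(node, cpu=10, mem_gib=32))   # False: only 6 cores would remain
```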

3. Tencent's GPU sharing practice

- Conventional GPU use: scheduled by whole card.
- Uses the virtualization technology provided by NVIDIA.
- In-house GPU paravirtualization: built on driver functionality, it modifies the functions for GPU memory allocation, memory release, and thread launch.

Summary

- Judging from the community, Stack Overflow, and the practices of the companies above, **GPU sharing today mainly means sharing GPU memory**. Resource isolation is poor and resources can be preempted, so whether to invest in GPU sharing development should depend on the company's machine learning GPU usage scenarios.
- GPU sharing has a restriction: **each user request is limited to a single card**.
- From the **GPU memory sharing** perspective, **sharing a single GPU card is feasible**. The main implementation steps are:
  - **Extend the resource definitions**: redefine GPU resources, mainly in terms of GPU memory and GPU count.
  - **Extend the scheduler**: during the global scheduler's Filter and Bind phases, check whether a single GPU card on the node can provide enough GPU memory.
  - Use the NVIDIA Device Plugin mechanism to handle resource reporting and allocation.

Reference material

边栏推荐

- 遭受DDoS时,高防IP和高防CDN的选择

- C - partial keyword

- 2022 robocom world robot developer competition - undergraduate group (provincial competition) -- question 2: intelligent medication assistant (finished)

- SQL row to column, column to row

- 中信证券账户开通流程,手机上开户安全吗

- [Luogu] p1908 reverse sequence pair

- 【TA-霜狼_may-《百人计划》】图形3.4 延迟渲染管线介绍

- Pattern water flow lamp 1: check the table and display the LED lamp

- C# 无操作则退出登陆

- kubernetes多网卡方案之Multus_CNI部署和基本使用

猜你喜欢

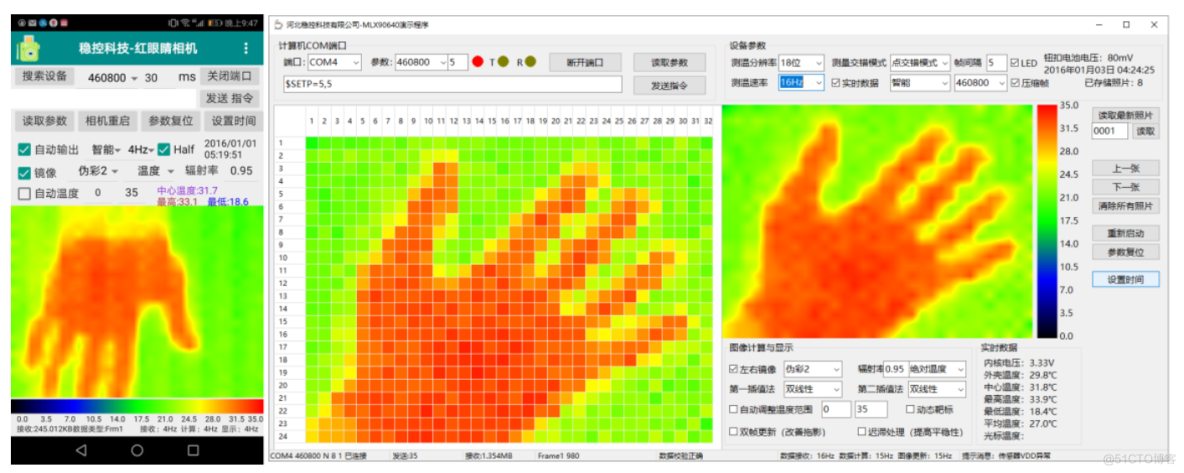

Mlx90640 infrared thermal imager temperature measurement module development notes (III)

Istio1.12:安装和快速入门

【OpenCV 例程300篇】238. OpenCV 中的 Harris 角点检测

2022 RoboCom 世界机器人开发者大赛-本科组(省赛)-- 第三题 跑团机器人 (已完结)

Android section 13 detailed explanation of 03sqlite database

JSON file editor

Cloud development standalone image Jiugongge traffic main source code

celery 启动beat出现报错ERROR: Pidfile (celerybeat.pid) already exists.

Still using listview? Use animatedlist to make list elements move

![[fluent -- layout] flow layout (flow and wrap)](/img/01/c588f75313580063cf32cc01677600.jpg)

[fluent -- layout] flow layout (flow and wrap)

随机推荐

mysql源码分析——索引的数据结构

Configuring WAPI certificate security policy for Huawei wireless devices

[tf.keras]: a problem encountered in upgrading the version from 1.x to 2.x: invalidargumenterror: cannot assign a device for operation embedding_

[bug solution] error in installing pycocotools in win10

徽商期货平台安全吗?办理期货开户没问题吧?

[acwing] 909. Chess game

4279. 笛卡尔树

25.从生磁盘到文件

Leetcode 1288. delete the covered interval (yes, solved)

Arduino ide esp32 firmware installation and upgrade tutorial

[fluent -- layout] flow layout (flow and wrap)

在LAMP架构中部署Zabbix监控系统及邮件报警机制

MySQL source code analysis -- data structure of index

Citic securities account opening process, is it safe to open an account on your mobile phone

27.目录与文件系统

Analysys analysis "2022 China data security market data monitoring report" was officially launched

云开发单机版图片九宫格流量主源码

2022 RoboCom 世界机器人开发者大赛-本科组(省赛)-- 第三题 跑团机器人 (已完结)

【机器学习基础】——另一个视角解释SVM

华为无线设备配置WAPI-证书安全策略