Building the CenterNet network structure

2022-07-25 08:28:00 【m0_ fifty-six million two hundred and forty-seven thousand and 】

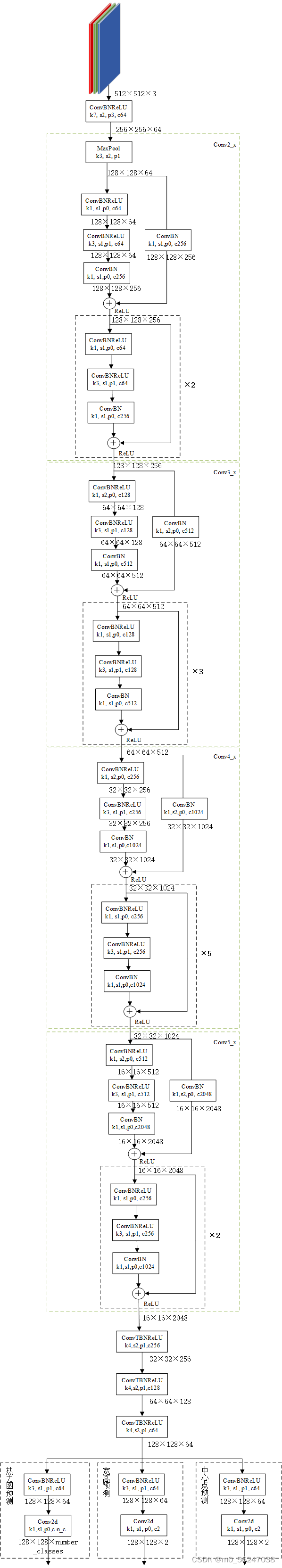

I. ResNet50 as the backbone network

1. The backbone network

import math

import torch.nn as nn
from torch.hub import load_state_dict_from_url


class Bottleneck(nn.Module):
    expansion = 4  # output channels = planes * expansion

    def __init__(self, inplanes, planes, stride=1, downsample=None):
        super(Bottleneck, self).__init__()
        # change: the stride sits on the first 1x1 conv (torchvision puts it on the 3x3 conv)
        self.conv1 = nn.Conv2d(inplanes, planes, kernel_size=1, stride=stride, bias=False)
        self.bn1 = nn.BatchNorm2d(planes)
        # change: the 3x3 conv therefore always uses stride 1
        self.conv2 = nn.Conv2d(planes, planes, kernel_size=3, stride=1,
                               padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(planes)
        # 1x1 conv expands the channels to planes * 4
        self.conv3 = nn.Conv2d(planes, planes * 4, kernel_size=1, bias=False)
        self.bn3 = nn.BatchNorm2d(planes * 4)
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x):
        residual = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)

        out = self.conv3(out)
        out = self.bn3(out)

        # If a downsample branch was given, project the input so its shape matches `out`
        if self.downsample is not None:
            residual = self.downsample(x)

        out += residual
        out = self.relu(out)
        return out
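To make the cost of one block concrete, the sketch below counts the learnable parameters of a `Bottleneck` from its constructor arguments. It is pure Python and does not need PyTorch; the helper name `bottleneck_params` is hypothetical and not part of the original code. It assumes bias-free convolutions and BatchNorm layers with one weight and one bias per channel, as in the class above.

```python
def bottleneck_params(inplanes, planes, expansion=4, with_downsample=False):
    """Count learnable parameters of one Bottleneck block (hypothetical helper)."""
    out_planes = planes * expansion
    conv1 = inplanes * planes            # 1x1 conv, no bias
    conv2 = planes * planes * 3 * 3      # 3x3 conv, no bias
    conv3 = planes * out_planes          # 1x1 conv, no bias
    # Each BatchNorm2d has a weight and a bias per channel
    bn = 2 * planes + 2 * planes + 2 * out_planes
    total = conv1 + conv2 + conv3 + bn
    if with_downsample:
        # Residual branch: 1x1 conv plus BatchNorm2d
        total += inplanes * out_planes + 2 * out_planes
    return total


# First block of layer1 (64 -> 256 channels, needs the downsample branch)
first = bottleneck_params(64, 64, with_downsample=True)
# Remaining blocks of layer1 (256 -> 256 channels, identity residual)
later = bottleneck_params(256, 64)
print(first, later)
```

The first block of a stage is slightly larger because the residual branch needs its own 1x1 convolution to match the channel count.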

#-----------------------------------------------------------------#
#   ResNet50 is used as the backbone feature extraction network.
#   For a 512x512x3 input it produces a 16x16x2048 effective feature layer.
#-----------------------------------------------------------------#
class ResNet(nn.Module):
    def __init__(self, block, layers, num_classes=1000):
        super(ResNet, self).__init__()
        self.inplanes = 64
        # 512,512,3 -> 256,256,64
        self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=False)
        self.bn1 = nn.BatchNorm2d(64)
        self.relu = nn.ReLU(inplace=True)
        # 256x256x64 -> 128x128x64
        # change: padding=0 with ceil_mode=True (torchvision uses padding=1)
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=0, ceil_mode=True)

        # 128x128x64 -> 128x128x256
        self.layer1 = self._make_layer(block, 64, layers[0])
        # 128x128x256 -> 64x64x512
        self.layer2 = self._make_layer(block, 128, layers[1], stride=2)
        # 64x64x512 -> 32x32x1024
        self.layer3 = self._make_layer(block, 256, layers[2], stride=2)
        # 32x32x1024 -> 16x16x2048
        self.layer4 = self._make_layer(block, 512, layers[3], stride=2)

        # Classification tail; CenterNet does not use it, but it is needed
        # so that the pretrained state dict loads without missing keys
        self.avgpool = nn.AvgPool2d(7)
        self.fc = nn.Linear(512 * block.expansion, num_classes)

        # Weight initialization: normal(0, sqrt(2 / n)) for convs, identity for BatchNorm
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels
                m.weight.data.normal_(0, math.sqrt(2. / n))
            elif isinstance(m, nn.BatchNorm2d):
                m.weight.data.fill_(1)
                m.bias.data.zero_()

    def _make_layer(self, block, planes, blocks, stride=1):
        downsample = None
        if stride != 1 or self.inplanes != planes * block.expansion:
            # Build downsample: one Conv2d followed by one BatchNorm2d
            downsample = nn.Sequential(
                nn.Conv2d(self.inplanes, planes * block.expansion,
                          kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(planes * block.expansion),
            )

        layers = []
        # downsample is passed into the first block and used as its residual branch
        layers.append(block(self.inplanes, planes, stride, downsample))
        self.inplanes = planes * block.expansion
        for i in range(1, blocks):
            layers.append(block(self.inplanes, planes))

        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)

        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)

        x = self.avgpool(x)
        x = x.view(x.size(0), -1)
        x = self.fc(x)
        return x
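The initialization loop in `ResNet.__init__` draws each convolution weight from a normal distribution with standard deviation `sqrt(2 / n)`, where `n = kernel_h * kernel_w * out_channels`. The small sketch below evaluates that formula for two layers so the scale is easier to picture. The helper name `he_normal_std` is hypothetical and only mirrors the arithmetic in the loop.

```python
import math


def he_normal_std(kernel_h, kernel_w, out_channels):
    """Standard deviation used by the init loop: sqrt(2 / n) (hypothetical helper)."""
    n = kernel_h * kernel_w * out_channels
    return math.sqrt(2.0 / n)


# Stem conv: 7x7 kernel, 64 output channels -> n = 3136
stem_std = he_normal_std(7, 7, 64)
# Last 1x1 conv of a layer4 block: 2048 output channels -> n = 2048
last_1x1_std = he_normal_std(1, 1, 2048)
print(round(stem_std, 5), last_1x1_std)
```

When `pretrained=True` these random values are overwritten by the loaded checkpoint, so the initialization only matters when training from scratch.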

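# model_urls is not shown in the original post. The entry below is assumed to be
# the standard torchvision ImageNet checkpoint for ResNet-50.
model_urls = {
    'resnet50': 'https://download.pytorch.org/models/resnet50-19c8e357.pth',
}


def resnet50(pretrained=True):
    model = ResNet(Bottleneck, [3, 4, 6, 3])
    if pretrained:
        state_dict = load_state_dict_from_url(model_urls['resnet50'], model_dir='model_data/')
        model.load_state_dict(state_dict)
    #----------------------------------------------------------#
    #   Keep only the feature extraction part:
    #   everything up to and including layer4
    #----------------------------------------------------------#
    features = [model.conv1, model.bn1, model.relu, model.maxpool,
                model.layer1, model.layer2, model.layer3, model.layer4]
    features = nn.Sequential(*features)
    return features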
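The shape comments in the backbone (512 -> 256 -> 128 -> 128 -> 64 -> 32 -> 16) can be checked with the usual output-size formula, `floor((size + 2*padding - kernel) / stride) + 1`, with `ceil` instead of `floor` for the max pool. The sketch below is pure Python; `conv_out` and `backbone_sizes` are hypothetical helper names. It models each stage only by its strided layer and ignores the extra PyTorch rule that clips a `ceil_mode` window starting outside the input, which does not apply for these sizes.

```python
import math


def conv_out(size, kernel, stride, padding, ceil_mode=False):
    """Spatial output size of a conv or pooling layer (dilation 1)."""
    span = (size + 2 * padding - kernel) / stride
    steps = math.ceil(span) if ceil_mode else math.floor(span)
    return steps + 1


def backbone_sizes(size=512):
    """Trace the feature-map side length through the backbone stages."""
    trace = [("input", size)]
    size = conv_out(size, kernel=7, stride=2, padding=3)
    trace.append(("conv1", size))
    size = conv_out(size, kernel=3, stride=2, padding=0, ceil_mode=True)
    trace.append(("maxpool", size))
    # layer1 uses stride 1; layer2 to layer4 halve the size via the strided 1x1 conv
    for name, stride in [("layer1", 1), ("layer2", 2), ("layer3", 2), ("layer4", 2)]:
        size = conv_out(size, kernel=1, stride=stride, padding=0)
        trace.append((name, size))
    return trace


for name, size in backbone_sizes(512):
    print(f"{name:8s} {size}x{size}")
```

The overall stride of the backbone is 32, so a 512x512 input ends at 16x16.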

2. Three deconvolutions after the backbone for upsampling

# After the backbone, three ConvTranspose2d layers perform the upsampling
class resnet50_Decoder(nn.Module):
    def __init__(self, inplanes, bn_momentum=0.1):
        super(resnet50_Decoder, self).__init__()
        self.bn_momentum = bn_momentum
        self.inplanes = inplanes
        self.deconv_with_bias = False

        #----------------------------------------------------------#
        #   16,16,2048 -> 32,32,256 -> 64,64,128 -> 128,128,64
        #   Use ConvTranspose2d to upsample three times.
        #   Each step doubles the width and height of the feature layer.
        #----------------------------------------------------------#
        self.deconv_layers = self._make_deconv_layer(
            num_layers=3,
            num_filters=[256, 128, 64],
            num_kernels=[4, 4, 4],
        )

    def _make_deconv_layer(self, num_layers, num_filters, num_kernels):
        layers = []
        for i in range(num_layers):
            kernel = num_kernels[i]
            planes = num_filters[i]

            layers.append(
                nn.ConvTranspose2d(
                    in_channels=self.inplanes,   # 2048, 256, 128
                    out_channels=planes,         # 256, 128, 64
                    kernel_size=kernel,          # 4, 4, 4
                    stride=2,
                    padding=1,
                    output_padding=0,
                    bias=self.deconv_with_bias))
            layers.append(nn.BatchNorm2d(planes, momentum=self.bn_momentum))
            layers.append(nn.ReLU(inplace=True))
            self.inplanes = planes
        return nn.Sequential(*layers)

    def forward(self, x):  # forward pass
        return self.deconv_layers(x)
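Why kernel 4, stride 2, padding 1 doubles the feature map exactly follows from the transposed-convolution output-size formula, `(size - 1) * stride - 2 * padding + kernel + output_padding` (dilation 1). The sketch below applies it three times. The helper names `deconv_out` and `decoder_shapes` are hypothetical.

```python
def deconv_out(size, kernel=4, stride=2, padding=1, output_padding=0):
    """Spatial output size of a ConvTranspose2d layer (dilation 1)."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


def decoder_shapes(size=16, in_channels=2048, filters=(256, 128, 64)):
    """Return (channels, height, width) before and after each deconvolution."""
    shapes = [(in_channels, size, size)]
    for out_channels in filters:
        size = deconv_out(size)
        shapes.append((out_channels, size, size))
    return shapes


print(decoder_shapes())
```

With these settings the formula reduces to `2 * size`, so the decoder output has one quarter of the input resolution (128x128 for a 512x512 image).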

3. The detection head

class resnet50_Head(nn.Module):
    def __init__(self, num_classes=80, channel=64, bn_momentum=0.1):
        super(resnet50_Head, self).__init__()
        #-----------------------------------------------------------------#
        #   Use the upsampled features for classification and regression prediction
        #   128, 128, 64 -> 128, 128, 64 -> 128, 128, num_classes
        #                -> 128, 128, 64 -> 128, 128, 2
        #                -> 128, 128, 64 -> 128, 128, 2
        #-----------------------------------------------------------------#
        # Heatmap branch
        # The predicted heatmap doubles as the network's classification prediction
        self.cls_head = nn.Sequential(
            nn.Conv2d(64, channel,
                      kernel_size=3, stride=1, padding=1, bias=False),
            nn.BatchNorm2d(channel, momentum=bn_momentum),
            nn.ReLU(inplace=True),   # Conv-BN-ReLU integrates the features
            nn.Conv2d(channel, num_classes,
                      kernel_size=1, stride=1, padding=0))   # 1x1 conv adjusts the channel count

        # Width and height branch
        # The 2 output channels are the object's width and height at each feature point
        self.wh_head = nn.Sequential(
            nn.Conv2d(64, channel,
                      kernel_size=3, stride=1, padding=1, bias=False),
            nn.BatchNorm2d(channel, momentum=bn_momentum),
            nn.ReLU(inplace=True),   # Conv-BN-ReLU integrates the features
            nn.Conv2d(channel, 2,
                      kernel_size=1, stride=1, padding=0))   # 1x1 conv adjusts the channel count

        # Center offset branch
        # The 2 output channels are the center point's offsets along the x and y axes
        self.reg_head = nn.Sequential(
            nn.Conv2d(64, channel,
                      kernel_size=3, stride=1, padding=1, bias=False),
            nn.BatchNorm2d(channel, momentum=bn_momentum),
            nn.ReLU(inplace=True),   # Conv-BN-ReLU integrates the features
            nn.Conv2d(channel, 2,
                      kernel_size=1, stride=1, padding=0))   # 1x1 conv adjusts the channel count

    def forward(self, x):
        # Sigmoid squashes the heatmap into per-class scores in (0, 1)
        hm = self.cls_head(x).sigmoid_()
        wh = self.wh_head(x)
        offset = self.reg_head(x)
        return hm, wh, offset
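The post does not show how the three outputs are turned into boxes. As a rough sketch of how such outputs are commonly combined, the function below decodes a single heatmap peak. It assumes that `wh` and `offset` are expressed in feature-map cells and that the output stride is 4 (512 / 128); both are assumptions, and the name `decode_peak` is hypothetical.

```python
def decode_peak(x, y, score, wh, offset, stride=4):
    """Decode one heatmap peak at integer cell (x, y) into a box in input pixels.

    Assumes wh and offset are in feature-map cells (an assumption, not
    confirmed by the original post). Returns (x1, y1, x2, y2, score).
    """
    # Refine the integer cell position with the predicted sub-cell offset
    cx = x + offset[0]
    cy = y + offset[1]
    w, h = wh
    # Convert center and size to corner coordinates, then scale to input pixels
    x1 = (cx - w / 2) * stride
    y1 = (cy - h / 2) * stride
    x2 = (cx + w / 2) * stride
    y2 = (cy + h / 2) * stride
    return (x1, y1, x2, y2, score)


# A peak at cell (40, 60) with a 10x20-cell object and a small offset
box = decode_peak(40, 60, 0.9, wh=(10, 20), offset=(0.25, 0.5))
print(box)
```

In a full pipeline the peaks themselves would first be selected from `hm`, for example by keeping local maxima above a score threshold.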

4. Overall structure of CenterNet_Resnet50

class CenterNet_Resnet50(nn.Module):
    def __init__(self, num_classes=20, pretrained=False):
        super(CenterNet_Resnet50, self).__init__()
        # 512,512,3 -> 16,16,2048
        self.backbone = resnet50(pretrained=pretrained)
        # 16,16,2048 -> 128,128,64
        self.decoder = resnet50_Decoder(2048)
        #-----------------------------------------------------------------#
        #   Use the upsampled features for classification and regression prediction
        #   128, 128, 64 -> 128, 128, 64 -> 128, 128, num_classes
        #                -> 128, 128, 64 -> 128, 128, 2
        #                -> 128, 128, 64 -> 128, 128, 2
        #-----------------------------------------------------------------#
        self.head = resnet50_Head(channel=64, num_classes=num_classes)

    def forward(self, x):
        feat = self.backbone(x)
        # Returns the tuple (hm, wh, offset)
        return self.head(self.decoder(feat))
