An In-Depth Analysis of Redis Cache Consistency

2022-06-23 14:39:00 【Liu Java】

This article looks in detail at three ways to keep a Redis cache consistent with the database, along with the advantages and disadvantages of each.

First, understand that the cache and the database can never be kept absolutely consistent. If you need absolute consistency, you cannot use a cache at all. What we can guarantee is eventual consistency, while keeping the window of inconsistency as short as possible.

In addition, to guard against inconsistencies between the cache and the database caused by extreme conditions, every cache entry should be given an expiration time. Once it expires, the entry is cleaned up automatically, and this is what ultimately makes the cache and the database "eventually consistent".
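
As a minimal sketch of expiration acting as a safety net, the class below is a dict-backed stand-in for a cache with per-entry expiration. `TtlCache` and its injectable `clock` are hypothetical names used only for illustration; they are not part of Redis or of any client library.

```python
class TtlCache:
    """Dict-backed stand-in for a cache whose entries expire (illustration only)."""

    def __init__(self, clock):
        self._clock = clock      # function returning the current time in seconds
        self._entries = {}       # key -> (value, expires_at)

    def set(self, key, value, ttl_seconds):
        self._entries[key] = (value, self._clock() + ttl_seconds)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            # The entry has expired: drop it so the next read reloads from the database.
            del self._entries[key]
            return None
        return value


now = [0.0]                                # a fake clock keeps the example deterministic
cache = TtlCache(clock=lambda: now[0])
cache.set("stock", 10, ttl_seconds=60)
before = cache.get("stock")
now[0] = 61.0                              # 61 seconds later
after = cache.get("stock")
print(before, after)  # 10 None
```

Even if a stale value slips into the cache, it can survive for at most the expiration time before a read is forced back to the database.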

If concurrency is not very high, it rarely causes problems whether you delete the cache before or after updating the database.

1 Update the database first, then delete the cache

If the database update succeeds but the cache deletion fails, the database holds the new data while the cache still holds the old data, and the two are inconsistent.

Under high concurrency, there is also a more extreme case that leaves the database and the cache inconsistent:

- The cache entry has just expired;

- Request A queries the database and gets the old value;

- Request B writes the new value to the database;

- Request B deletes the cache;

- Request A writes the old value it read into the cache;

This leaves the cache inconsistent with the database. Moreover, if no cache expiration policy is used, the data stays dirty until the next time the database is updated.
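
The interleaving above can be replayed step by step. This is only a sketch: plain dicts stand in for the database and for Redis, the key `stock` and the values 10 and 9 are made up, and the two "requests" are executed in a fixed order rather than as real threads.

```python
def replay_update_then_delete():
    """Replay the five steps in order; return (database value, cached value)."""
    db = {"stock": 10}   # stand-in for the database
    cache = {}           # stand-in for Redis; the entry has just expired

    # Request A misses the cache and reads the old value from the database.
    value_read_by_a = db["stock"]
    # Request B writes the new value to the database.
    db["stock"] = 9
    # Request B deletes the cache (nothing to remove, the key is already gone).
    cache.pop("stock", None)
    # Request A back-fills the cache with the old value it read earlier.
    cache["stock"] = value_read_by_a

    return db["stock"], cache["stock"]


print(replay_update_then_delete())  # (9, 10)
```

The database ends with the new value 9 while the cache keeps serving the stale 10.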

2 Delete the cache first, then update the database

If the cache is deleted first and the database is updated afterwards, then even if the database update fails, the cache is simply empty. The next read reloads from the database, and although the value is old, the cache and the database agree.

Under high concurrency, there is also a more extreme case that leaves the database and the cache inconsistent:

- Request A performs a write and deletes the cache;

- Request B queries the cache and finds that the entry does not exist;

- Request B queries the database and gets the old value;

- Request B writes the old value it read into the cache;

- Request A writes the new value to the database;
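
The same kind of step-by-step replay shows the result of this interleaving. As before, this is a sketch with plain dicts standing in for the database and Redis, and the key and values are made up.

```python
def replay_delete_then_update():
    """Replay the five steps in order; return (cache hit?, database value, cached value)."""
    db = {"stock": 10}      # stand-in for the database
    cache = {"stock": 10}   # stand-in for Redis, initially in sync

    # Request A (a write) deletes the cache first.
    cache.pop("stock", None)
    # Request B (a read) finds that the entry does not exist.
    cache_hit = "stock" in cache
    # Request B reads the old value from the database.
    value_read_by_b = db["stock"]
    # Request B writes that old value into the cache.
    cache["stock"] = value_read_by_b
    # Request A finally writes the new value to the database.
    db["stock"] = 9

    return cache_hit, db["stock"], cache["stock"]


print(replay_delete_then_update())  # (False, 9, 10)
```

Again the database holds 9 while the cache holds the stale 10.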

3 Adopt the delayed double delete strategy

With either of the two methods above, whether you write the database first and then delete the cache, or delete the cache first and then write the database, data inconsistency is still possible. Relatively speaking, the second is safer, because a failure in its second step still leaves the cache and the database in agreement. So if you can only choose between these two simple methods, the second is recommended.

A better approach is the "delayed double delete" strategy: run redis.del(key) both before and after writing the database. The risk being addressed is that, while the database is being updated, another thread misses the cache, reads the old data from the database, and writes it back into the cache. To cover that, after updating the database, sleep for a short period and then delete the cache again.

The sleep time should be longer than the time another request takes to read the old data from the database plus the time to write it to the cache. If Redis master-slave replication or database sharding (splitting databases and tables) is in use, the time spent on data synchronization must be considered as well. After the sleep, delete the cache again, regardless of whether it holds new or old data. This does not guarantee that cache inconsistency never occurs, but it limits the inconsistency to the sleep window and so shortens it.
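
A minimal sketch of delayed double deletion follows. Plain dicts stand in for the database and Redis, `update_with_delayed_double_delete` is a hypothetical helper name, and the delays are arbitrary values chosen for the demo. A timer thread plays the part of a slow reader that fetched the old value before the update and back-fills the cache shortly afterwards.

```python
import threading
import time


def update_with_delayed_double_delete(db, cache, key, new_value, delay_seconds):
    cache.pop(key, None)        # first delete, before writing the database
    db[key] = new_value         # write the database
    time.sleep(delay_seconds)   # wait for in-flight readers to finish back-filling
    cache.pop(key, None)        # second delete removes any stale back-fill


db = {"stock": 10}
cache = {"stock": 10}

# Simulated slow reader: it read the old value 10 before the update and
# writes it to the cache 0.02 s later, inside the 0.2 s sleep window.
stale_reader = threading.Timer(0.02, lambda: cache.__setitem__("stock", 10))
stale_reader.start()

update_with_delayed_double_delete(db, cache, "stock", 9, delay_seconds=0.2)
stale_reader.join()
print(db["stock"], cache.get("stock"))  # 9 None
```

The stale back-fill is visible only during the sleep window; once the second delete runs, the next read reloads the new value from the database.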

Of course, this strategy has to sleep for a period of time, which lengthens every write request and reduces the server's throughput. That is a problem too. To address it, perform the second deletion asynchronously. The business thread then returns without sleeping, which increases throughput.
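
One way to sketch the asynchronous variant is to schedule the second deletion on a timer thread, so that the write path returns immediately. This uses Python's standard `threading.Timer` purely for illustration; `update_with_async_second_delete` is a hypothetical helper, dicts stand in for the database and Redis, and a real service would more likely use a scheduler or a delay queue.

```python
import threading


def update_with_async_second_delete(db, cache, key, new_value, delay_seconds):
    cache.pop(key, None)   # first delete
    db[key] = new_value    # write the database
    # Schedule the second delete instead of sleeping in the business thread.
    timer = threading.Timer(delay_seconds, cache.pop, args=(key, None))
    timer.start()
    return timer           # returned only so the demo can wait for it


db = {"stock": 10}
cache = {"stock": 10}

timer = update_with_async_second_delete(db, cache, "stock", 9, delay_seconds=0.1)
cache["stock"] = 10   # a stale back-fill landing right after the write returns
timer.join()          # the demo waits here; the business thread would not
print(db["stock"], cache.get("stock"))  # 9 None
```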

What if deleting the cache fails? A retry mechanism is needed. You can use a message queue: send the key that needs to be deleted to the queue and consume the messages asynchronously. The consumer takes the key, compares the cached value with the value in the database, and deletes the cache entry if they differ. If the deletion fails, the message is consumed again until it succeeds. If it still fails after a certain number of attempts, the Redis server itself usually has a problem.
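
The retry loop can be sketched with an in-process queue. Everything here is a stand-in: `queue.Queue` replaces a real message queue, `FlakyCache` is a hypothetical dict-backed cache whose delete fails a fixed number of times, and the comparison against the database value is left out to keep the example short.

```python
import queue


class FlakyCache:
    """Dict-backed stand-in for Redis whose delete fails a set number of times."""

    def __init__(self, data, failures):
        self.data = dict(data)
        self.failures = failures

    def delete(self, key):
        if self.failures > 0:
            self.failures -= 1
            raise ConnectionError("simulated Redis failure")
        self.data.pop(key, None)


def consume_deletions(messages, cache, max_attempts):
    """Drain the queue, re-enqueueing failed deletions until max_attempts is reached."""
    gave_up = []
    while not messages.empty():
        key, attempt = messages.get()
        try:
            cache.delete(key)
        except ConnectionError:
            if attempt + 1 < max_attempts:
                messages.put((key, attempt + 1))   # retry later
            else:
                gave_up.append(key)                # probably a Redis outage: stop retrying
    return gave_up


messages = queue.Queue()
cache = FlakyCache({"stock": 10}, failures=2)
messages.put(("stock", 0))

gave_up = consume_deletions(messages, cache, max_attempts=5)
print(cache.data, gave_up)  # {} []
```

The deletion fails twice, is re-enqueued each time, and succeeds on the third attempt. Keys that exhaust `max_attempts` are returned so that the caller can raise an alert instead of retrying forever.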

In fact, introducing message middleware makes the problem more complicated, because we now have to make sure the middleware itself works correctly, for example whether the producer's message was actually sent. An alternative is to listen to the database binlog and use a message queue to delete the cache. The advantage is that the application no longer puts messages into the queue itself: a middleware component listens to the database binlog and publishes messages to the queue automatically, so we only need to write the consumer code, not the producer code. This "binlog listening + message queue" approach is also popular at present.

4 Why delete the cache

Why do the methods above delete the cache rather than update it?

Suppose you update the database first and then update the cache. If the database is updated 1000 times within 1 hour, the cache must also be updated 1000 times, yet that cache entry may be read only once in that hour. With deletion, even if the database is updated 1000 times, only 1 effective cache deletion takes place; the subsequent deletions return immediately, and the cache is loaded from the database only when it is actually read. This reduces the load on Redis.
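
The arithmetic above can be checked with a small counting sketch. It assumes the entry is already cached before the updates begin and that the single read comes after all of them; `count_cache_work` is a hypothetical helper, and dicts stand in for the database and Redis.

```python
def count_cache_work(updates, delete_on_write):
    """Return (cache writes, deletions that actually removed an entry)."""
    db = {"k": 0}
    cache = {"k": 0}   # the entry is cached before the updates begin
    cache_writes = 0
    effective_deletes = 0

    for i in range(1, updates + 1):
        db["k"] = i
        if delete_on_write:
            # Only the first delete finds an entry; the rest are no-ops.
            if cache.pop("k", None) is not None:
                effective_deletes += 1
        else:
            cache["k"] = i
            cache_writes += 1

    # The single read: load the cache from the database only on a miss.
    if "k" not in cache:
        cache["k"] = db["k"]
        cache_writes += 1

    return cache_writes, effective_deletes


print(count_cache_work(1000, delete_on_write=False))  # (1000, 0)
print(count_cache_work(1000, delete_on_write=True))   # (1, 1)
```

Updating the cache on every write costs 1000 cache writes for a value that is read once, while deleting costs one effective deletion plus one load on the read.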

If you would like to discuss anything, or you find an error in the article, please leave a comment. I also hope you will like, bookmark, and follow. I will keep updating all kinds of Java learning blog posts!

边栏推荐

- ai智能机器人让我们工作省时省力

- 2021-06-07

- php接收和发送数据

- How to install the DTS component of SQL server2008r2 on win10 64 bit systems?

- Uniswap 收购 NFT交易聚合器 Genie,NFT 交易市场将生变局?

- 掌舵9年,艾伦研究所创始CEO光荣退休!他曾预言中国AI将领跑世界

- KS003基于JSP和Servlet实现的商城系统

- 阿里 Seata 新版本终于解决了 TCC 模式的幂等、悬挂和空回滚问题

- 今年英语高考,CMU用重构预训练交出134高分,大幅超越GPT3

- Cause analysis and intelligent solution of information system row lock waiting

猜你喜欢

The data value reported by DTU cannot be filled into Tencent cloud database through Tencent cloud rule engine

【DataHub】LinkedIn DataHub学习笔记

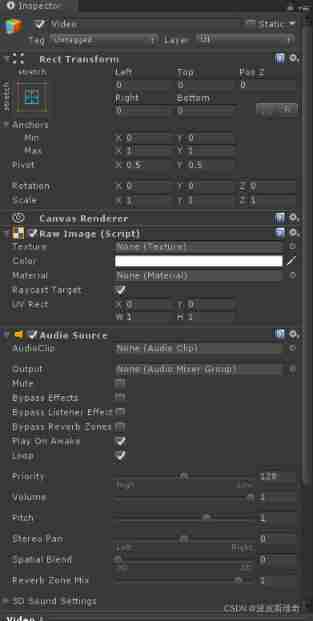

Unity realizes the function of playing Ogg format video

![[compréhension approfondie de la technologie tcaplusdb] données de construction tcaplusdb](/img/0c/33d9387cd7733a0c6706a73f9535f7.png)

[compréhension approfondie de la technologie tcaplusdb] données de construction tcaplusdb

![Web technology sharing | [Gaode map] to realize customized track playback](/img/b2/25677ca08d1fb83290dd825a242f06.png)

Web technology sharing | [Gaode map] to realize customized track playback

用OBS做直播推流简易教程

Flutter Clip剪裁组件

【深入理解TcaplusDB技术】TcaplusDB导入数据

As a software testing practitioner, do you understand your development direction?

Soaring 2000+? The average salary of software testing in 2021 has come out, and I can't sit still

随机推荐

The well-known face search engine provokes public anger: just one photo will strip you of your pants in a few seconds

Gold three silver four, busy job hopping? Don't be careless. Figure out these 12 details so that you won't be fooled~

What is the working status of software testing with a monthly salary of 7500

Flutter Clip剪裁组件

Teach you how to build Tencent cloud server (explanation with pictures and pictures)

Pyqt5 工具盒使用

golang--判断字符串是否相等

知名人脸搜索引擎惹众怒:仅需一张照片,几秒钟把你扒得底裤不剩

golang--文件的多个处理场景

k8s--部署单机版MySQL,并持久化

Hexiaopeng: if you can go back to starting a business, you won't name the product in your own name

Working for 7 years to develop my brother's career transition test: only by running hard can you get what you want~

渗透测试-提权专题

【DataHub】LinkedIn DataHub学习笔记

今年英语高考,CMU用重构预训练交出134高分,大幅超越GPT3

SAP inventory gain / loss movement type 701 & 702 vs 711 & 712

Xmake v2.6.8 release, compilation cache improvement

Input adjustment of wechat applet

Ks008 SSM based press release system

kali使用