Interpreting forward_algorithm in BiLSTM-CRF for NER

2022-06-28 02:41:00 【365JHWZGo】

If anything below is unclear, you may want to read my previous article explaining BiLSTM-CRF first:

CRF+BiLSTM code explained step by step

Explanation

As mentioned in the previous article, forward_algorithm is the function that computes the total score over all paths (the log of the summed exponentiated path scores). Below, a concrete example is used to walk through how the function works.

First, randomly initialize an emission matrix e_score with shape (batch_size, seq_len, tags_size).

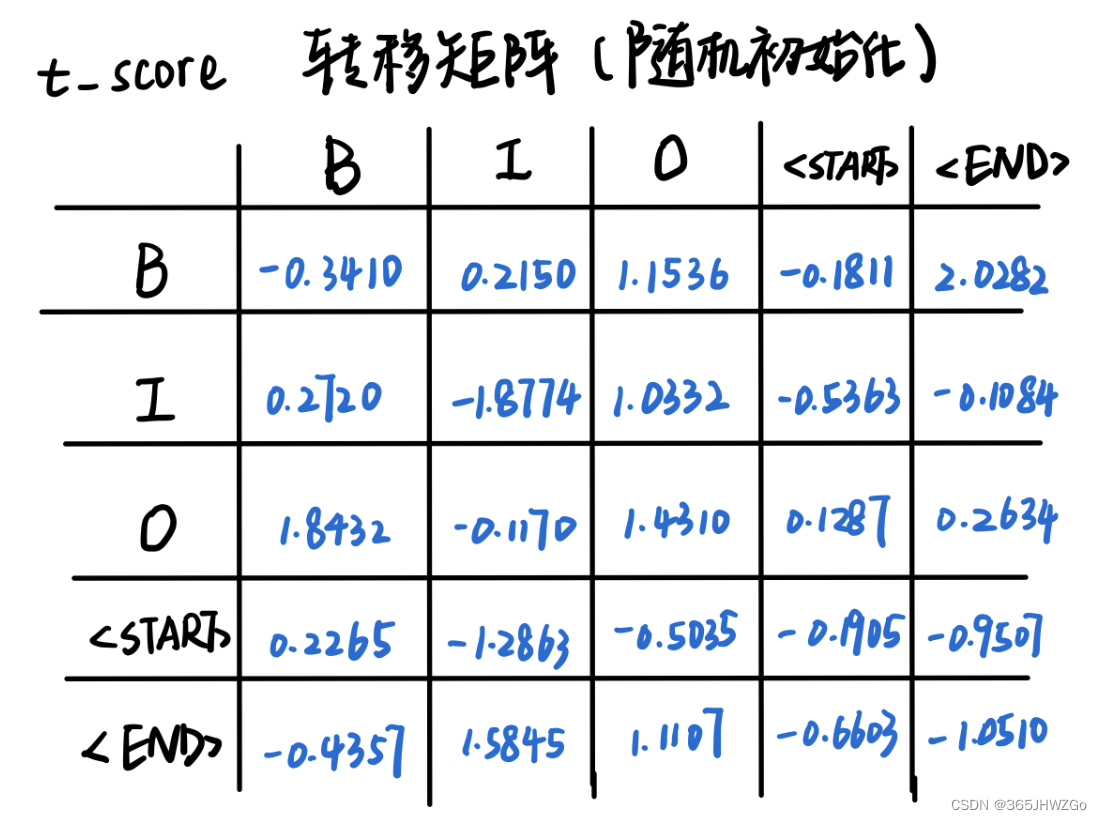

Then randomly initialize a transition matrix t_score with shape (tags_size, tags_size).

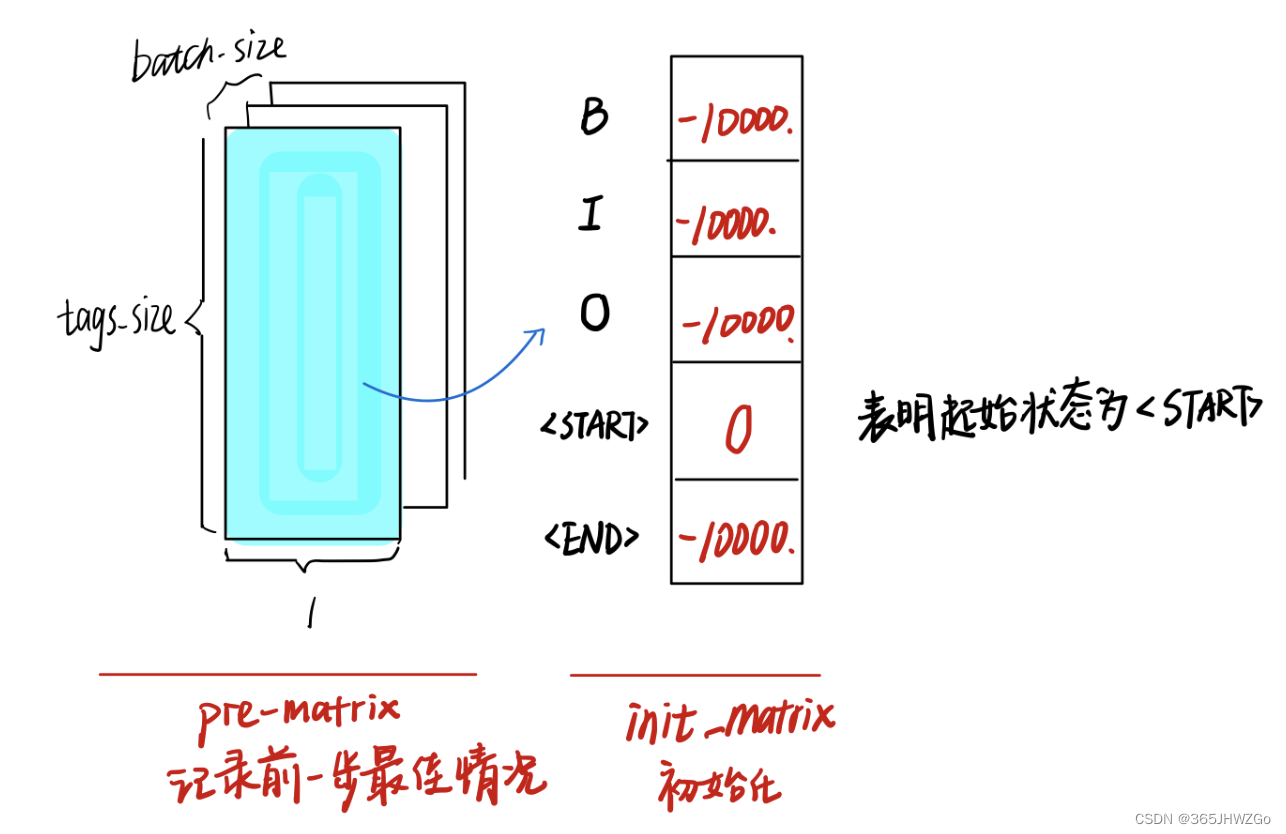

Create init_matrix, then copy it to pre_matrix. For ease of understanding, the matrix is drawn vertically here; its shape is (batch_size, 1, tags_size).

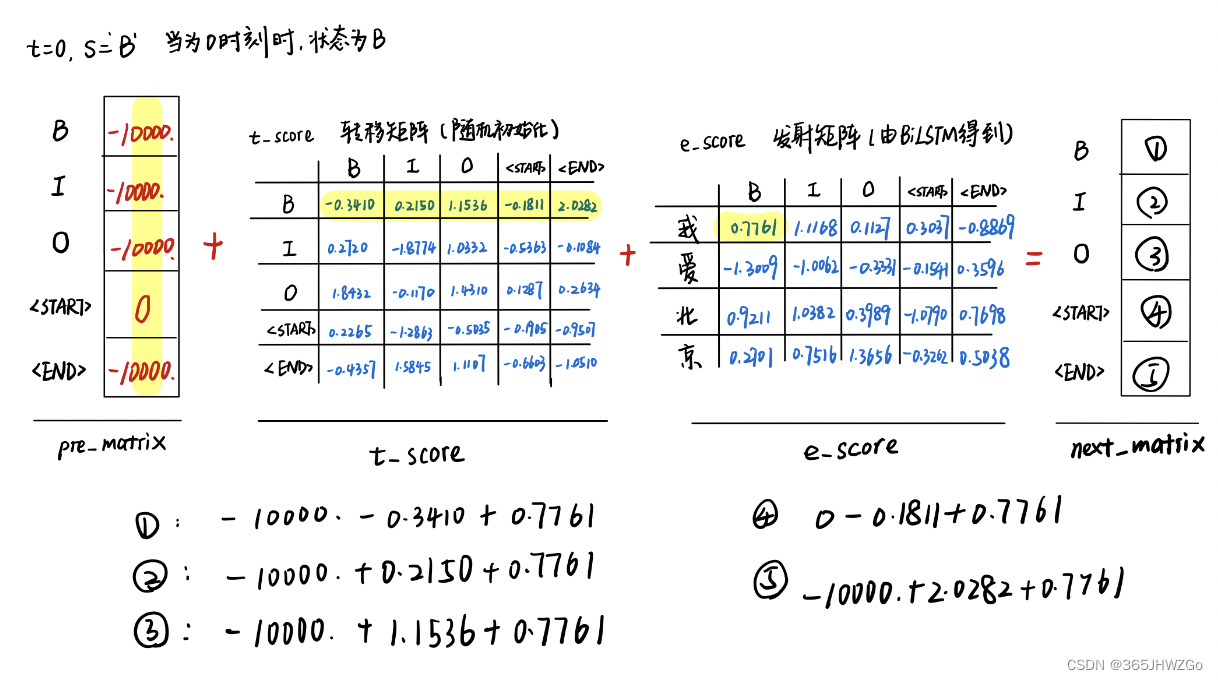
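A minimal sketch of this setup in PyTorch. The sizes and the tag set (`BATCH_SIZE`, `SEQ_LEN`, the five entries of `s2i`) are assumptions chosen for illustration, not the values shown in the figures.

```python
import torch

torch.manual_seed(0)

# Hypothetical sizes and tag set, for illustration only.
BATCH_SIZE, SEQ_LEN = 2, 3
START_TAG, STOP_TAG = "<START>", "<STOP>"
s2i = {"B": 0, "I": 1, "O": 2, START_TAG: 3, STOP_TAG: 4}
tags_size = len(s2i)

# Random emission scores: (batch_size, seq_len, tags_size)
e_score = torch.randn(BATCH_SIZE, SEQ_LEN, tags_size)
# Random transition scores: (tags_size, tags_size)
t_score = torch.randn(tags_size, tags_size)

# Every state starts at -10000 except START_TAG, which starts at 0:
# (batch_size, 1, tags_size)
init_matrix = torch.full((BATCH_SIZE, 1, tags_size), -10000.0)
init_matrix[:, 0, s2i[START_TAG]] = 0.0
pre_matrix = init_matrix

print(e_score.shape, t_score.shape, pre_matrix.shape)
```

Filling the non-start states with -10000 means their contribution vanishes once the scores are exponentiated, so every path effectively begins at `START_TAG`.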

Only the computation for time step 0 and state 'B' is demonstrated here.

Everything shown below is a temporary variable used inside log_sum_exp.
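The article does not show `log_sum_exp` itself. The sketch below assumes it reduces over the last (tag) dimension and returns shape `(BATCH_SIZE, 1)`, as the shape comments in the article's code indicate. It then reproduces the temporary variables for time step 0 and state 'B'. The sizes, the tag indices `B` and `START`, the name `t_matrix`, and the random tensors are all hypothetical.

```python
import torch

def log_sum_exp(x):
    # Assumed behavior: numerically stable log(sum(exp(x))) over the last
    # dimension, subtracting the per-row maximum before exponentiating.
    max_val, _ = x.max(dim=-1, keepdim=True)
    return max_val.squeeze(-1) + torch.log(torch.exp(x - max_val).sum(dim=-1))

torch.manual_seed(0)
BATCH_SIZE, SEQ_LEN, tags_size = 2, 3, 5   # hypothetical sizes
B, START = 0, 3                            # hypothetical tag indices

e_matrix = torch.randn(BATCH_SIZE, SEQ_LEN, tags_size)
t_matrix = torch.randn(tags_size, tags_size)
pre_matrix = torch.full((BATCH_SIZE, 1, tags_size), -10000.0)
pre_matrix[:, 0, START] = 0.0

# Temporary variables for time step 0, state 'B'
e_score = e_matrix[:, 0, B].view(BATCH_SIZE, 1, -1).expand(BATCH_SIZE, 1, tags_size)
t_score = t_matrix[B, :].view(1, -1)
next_matrix = pre_matrix + e_score + t_score   # (BATCH_SIZE, 1, tags_size)
score_B = log_sum_exp(next_matrix)             # (BATCH_SIZE, 1)

# At step 0 only the START column is not near -10000, so the result
# reduces to emission('B') + transition(START -> 'B').
expected = e_matrix[:, 0, B] + t_matrix[B, START]
print(score_B.squeeze(-1), expected)
```

PyTorch also ships `torch.logsumexp(x, dim=-1)`, which computes the same reduction and could stand in for the hand-written helper.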

Code

    def forward_algorithm(self, e_matrix):
        # init_matrix holds, for each state, the log-sum of the scores of all paths ending in that state
        init_matrix = torch.full((BATCH_SIZE, 1, tags_size), -10000.0)
        init_matrix[:, 0, self.s2i[START_TAG]] = 0.

        # Accumulated scores from the previous time step
        pre_matrix = init_matrix

        # Loop over time steps
        for i in range(SEQ_LEN):
            # Path scores for the current time step
            matrix_value = []
            # Loop over states
            for s in range(tags_size):
                # Emission score, (BATCH_SIZE, 1, tags_size)
                e_score = e_matrix[:, i, s].view(BATCH_SIZE, 1, -1).expand(BATCH_SIZE, 1, tags_size)
                # Transition score, (1, tags_size)
                t_score = self.t_score[s, :].view(1, -1)
                # Candidate scores for the next step, (BATCH_SIZE, 1, tags_size)
                next_matrix = pre_matrix + e_score + t_score
                # self.log_sum_exp(next_matrix) has shape (BATCH_SIZE, 1)
                matrix_value.append(self.log_sum_exp(next_matrix))
            # Store the results in pre_matrix
            pre_matrix = torch.cat(matrix_value, dim=-1).view(BATCH_SIZE, 1, -1)

        # Final variable: previous scores + transition score to STOP_TAG, (BATCH_SIZE, 1, tags_size)
        terminal_var = pre_matrix + self.t_score[self.s2i[STOP_TAG], :]
        alpha = self.log_sum_exp(terminal_var)
        # (BATCH_SIZE, 1)
        return alpha

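At first glance it may not be obvious why this procedure yields the sum over all paths. The recursion is simply a way to simplify the calculation: instead of enumerating every path, each step folds the previous step's result into the next one. The drawback is that logsumexp is applied many times, so the computed value can differ slightly from the directly computed one. The nested form is algebraically identical to the direct form, so any such difference comes from floating-point rounding.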
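One way to check that the recursion really covers all paths is to compare it with brute-force enumeration on a tiny example. The sketch below uses plain Python rather than PyTorch to stay small. The sizes, the random scores, and the helper names (`logsumexp`, `path_score`) are assumptions for illustration; `trans[cur][prev]` follows the same indexing as `self.t_score[s, :]` in the code above, and, like that code, every tag is allowed at every time step. As a simplification, the recursion starts from `START` alone instead of filling the other states with -10000.

```python
import itertools
import math
import random

random.seed(0)
N = 5                  # number of tags, including the two special ones
START, STOP = 3, 4     # hypothetical indices of <START> and <STOP>
SEQ_LEN = 4

emit = [[random.uniform(-1, 1) for _ in range(N)] for _ in range(SEQ_LEN)]
trans = [[random.uniform(-1, 1) for _ in range(N)] for _ in range(N)]  # trans[cur][prev]

def logsumexp(values):
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))

def path_score(path):
    # Score of one complete path: START -> path -> STOP
    score, prev = 0.0, START
    for i, cur in enumerate(path):
        score += trans[cur][prev] + emit[i][cur]
        prev = cur
    return score + trans[STOP][prev]

# Ideal value: enumerate all N**SEQ_LEN paths explicitly.
ideal = logsumexp([path_score(p) for p in itertools.product(range(N), repeat=SEQ_LEN)])

# Forward recursion: one logsumexp per (time step, state).
pre = {START: 0.0}
for i in range(SEQ_LEN):
    pre = {s: logsumexp([pre[p] + trans[s][p] + emit[i][s] for p in pre])
           for s in range(N)}
recursive = logsumexp([pre[p] + trans[STOP][p] for p in pre])

print(ideal, recursive)
```

The enumeration visits 625 paths here, while the recursion needs only `SEQ_LEN * N` reductions over `N` values each, which is why the step-by-step form is used in practice.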

Ideal value

$$
score_{ideal} = \log\left(e^{S_1} + e^{S_2} + \dots + e^{S_N}\right) \tag{1}
$$

Realistic value

$$
\begin{aligned}
score_{reality} &= \log\left(\sum e^{pre + t}\right)\\
&= \log\left(\sum e^{\log\left(\sum e^{pre + t + es}\right) + t}\right)\\
&= \dots
\end{aligned} \tag{2}
$$

Here t stands for t_score, es for e_score, and pre for pre_matrix.
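The step that makes the nesting work can be written out for a single level. In this sketch, $a_i$ and $b_j$ are placeholder symbols (not from the article) standing for the scores accumulated before and after one merge point:

```latex
\begin{aligned}
\log \sum_j \exp\Big( \log\Big( \sum_i e^{a_i} \Big) + b_j \Big)
  &= \log \sum_j \Big( \sum_i e^{a_i} \Big)\, e^{b_j} \\
  &= \log \sum_j \sum_i e^{a_i + b_j}
\end{aligned}
```

Each inner logsumexp therefore carries the full sum of exponentiated path scores forward, so in exact arithmetic the nested expression in (2) equals the flat expression in (1).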

As shown in the figure above, the first ball (marked *) holds the result of the step from <START> to "I": the total score S1 of all paths in which every state of the previous step transitions to B. logsumexp(S1) is computed and recorded in that ball. Similarly, the next ball covers all paths through the first two steps that arrive at "love" with every state transitioning to B. Their total path score is S2, and logsumexp(S2) is recorded in that ball.

So, did you get it?
