
BigGAN: Large Scale GAN Training for High Fidelity Natural Image Synthesis

2022-06-26 01:45:00 【Kun Li】

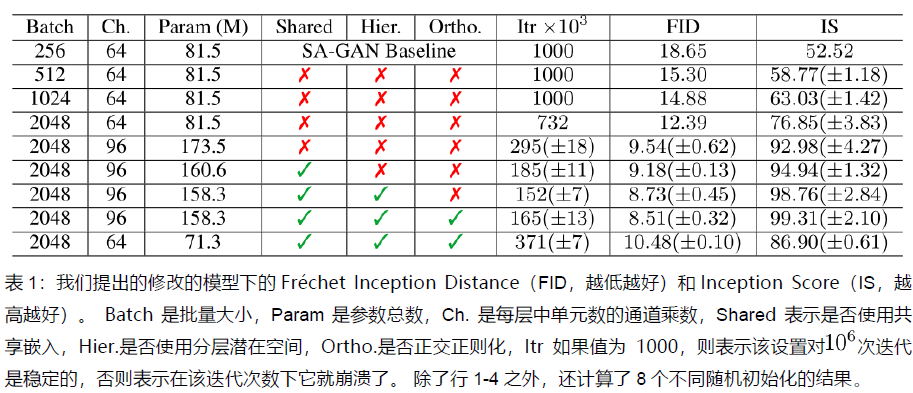

Recommended reading: "In-depth reading of DeepMind's new work: BigGAN, the strongest GAN image generator ever" (Zhihu; paper notes by PaperWeekly community user @TwistedW): https://zhuanlan.zhihu.com/p/46581611

That article is easy to understand. Compared with earlier papers that pile up formulas at length, BigGAN is much easier to follow, although the model architecture diagrams were moved to the appendix. BigGAN has three core points. The first is scale: a large batch size and a large number of parameters. The second is a set of architectural adjustments, such as feeding the latent into the generator hierarchically and sharing the class embedding c. The third is truncated sampling of the latent z; to make the model amenable to this truncation, orthogonal regularization is proposed. Finally, based on an analysis of training instability, the paper puts forward some improved training techniques.

1. Introduction

GANs benefit from scaling. Compared with prior art, BigGAN trains models with two to four times as many parameters and eight times the batch size, reaching a batch size of 2048; this is what "large scale" in the title refers to. BigGAN also finds that a few simple architectural adjustments are enough to make GANs perform noticeably better. As a side effect of these modifications, the model becomes amenable to the truncation trick, a simple sampling technique that allows explicit, fine-grained control over the trade-off between sample variety and fidelity.

2. Background

3. Scaling Up GANs

This section explores how to scale up GAN training so as to benefit from larger models and larger batches. The baseline uses the SAGAN architecture with the hinge loss, a generator G with class-conditional BatchNorm, a discriminator D with projection, spectral normalization, moving averages of G's weights, and orthogonal initialization.
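Two items in the list above, the hinge loss and the moving average of G's weights, are short formulas. Below is a minimal pure-Python sketch on plain lists of discriminator logits and flattened weights; it is an illustration, not the authors' code, and the function names and the decay value are assumptions.

```python
from typing import List, Sequence


def d_hinge_loss(real_logits: Sequence[float], fake_logits: Sequence[float]) -> float:
    """Discriminator hinge loss: mean(max(0, 1 - D(x))) + mean(max(0, 1 + D(G(z))))."""
    real_term = sum(max(0.0, 1.0 - d) for d in real_logits) / len(real_logits)
    fake_term = sum(max(0.0, 1.0 + d) for d in fake_logits) / len(fake_logits)
    return real_term + fake_term


def g_hinge_loss(fake_logits: Sequence[float]) -> float:
    """Generator hinge loss: -mean(D(G(z)))."""
    return -sum(fake_logits) / len(fake_logits)


def ema_update(ema_weights: Sequence[float], weights: Sequence[float], decay: float) -> List[float]:
    """Exponential moving average of G's weights; the averaged copy is the one used for sampling."""
    return [decay * e + (1.0 - decay) * w for e, w in zip(ema_weights, weights)]


if __name__ == "__main__":
    print(d_hinge_loss([2.0, 0.5], [-2.0, 0.0]))  # 0.75
    print(g_hinge_loss([1.0, -3.0]))  # 1.0
    print(ema_update([0.0, 1.0], [1.0, 1.0], decay=0.9))  # decay 0.9 is illustrative only
```

The discriminator only pays a penalty for real logits below +1 and fake logits above -1, which is what makes the hinge loss saturate once D is confident.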

First, increasing the batch size of the baseline model improves IS quickly. The side effect is that the model reaches better performance in fewer iterations, but training becomes unstable and prone to mode collapse.

Increasing the width (the number of channels in each layer) by 50% improves performance further.

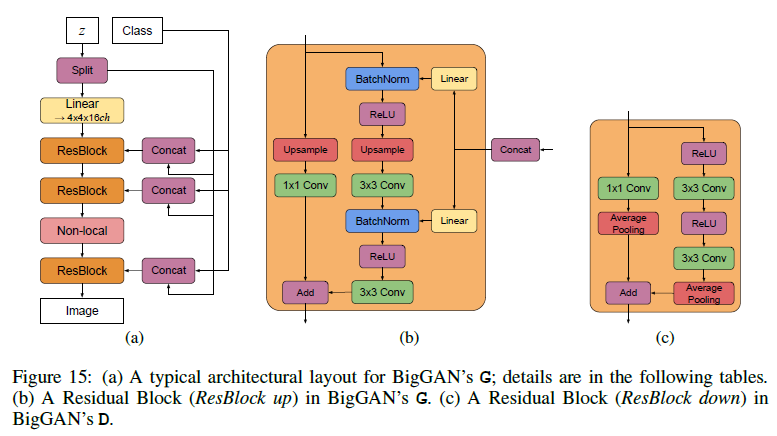

In the figure above, c is shared. The latent z is split, and the noise vector z is fed into multiple layers rather than only the initial layer. This is a meaningful design, and StyleGAN does something similar: the latent z is used by every convolutional block in the generator. The convolutional layers effectively work from coarse to fine, and the intuition behind this design is to let the generator use the latent space to directly influence features at different resolutions and levels of the hierarchy. In BigGAN, z is split into one chunk per resolution, each chunk is concatenated with the conditional vector c, and the result is projected to the BatchNorm gains and biases.
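The hierarchical latent and the conditional BatchNorm described above can be sketched in pure Python as follows. This is a simplified illustration under stated assumptions, not the paper's implementation: every chunk is concatenated with c (ignoring any special treatment of the first chunk), features are a flat `x[n][c]` batch without spatial dimensions, the gain is parameterized as `1 + projection`, and all helper names are hypothetical.

```python
import math
from typing import List, Sequence


def split_latent(z: Sequence[float], num_chunks: int) -> List[List[float]]:
    """Split z into equal chunks, one per generator block (hypothetical helper)."""
    if len(z) % num_chunks != 0:
        raise ValueError("len(z) must be divisible by num_chunks")
    size = len(z) // num_chunks
    return [list(z[i * size:(i + 1) * size]) for i in range(num_chunks)]


def block_conditions(z: Sequence[float], shared_c: Sequence[float], num_blocks: int) -> List[List[float]]:
    """Concatenate each z chunk with the shared class embedding c (simplification: every chunk gets c)."""
    return [chunk + list(shared_c) for chunk in split_latent(z, num_blocks)]


def linear(weights: Sequence[Sequence[float]], v: Sequence[float]) -> List[float]:
    """Matrix-vector product: one output per weight row."""
    return [sum(w * x for w, x in zip(row, v)) for row in weights]


def conditional_batch_norm(x, conds, gain_w, bias_w, eps=1e-5):
    """x[n][c]: feature of sample n, channel c; conds[n]: conditioning vector of sample n.

    Mean and variance are computed per channel over the batch; the gain and bias are
    per sample, linearly projected from that sample's conditioning vector."""
    n, channels = len(x), len(x[0])
    means = [sum(x[i][j] for i in range(n)) / n for j in range(channels)]
    variances = [sum((x[i][j] - means[j]) ** 2 for i in range(n)) / n for j in range(channels)]
    out = []
    for i in range(n):
        gain = [1.0 + g for g in linear(gain_w, conds[i])]  # assumption: gain centered at 1
        bias = linear(bias_w, conds[i])
        out.append([gain[j] * (x[i][j] - means[j]) / math.sqrt(variances[j] + eps) + bias[j]
                    for j in range(channels)])
    return out
```

Because the gain and bias depend on both the class embedding and a slice of z, each resolution level of the generator receives its own piece of the latent directly.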

3.1 Trading Off Variety and Fidelity with the Truncation Trick

A GAN can use any prior, but most use a Gaussian or uniform distribution, and better alternatives may exist. The truncation trick trains with z sampled from the prior as usual, but at sampling time truncates z with a threshold: values whose magnitude falls outside the range are resampled until they fall inside it. The threshold can be chosen according to the quality metrics IS and FID. Experiments with different thresholds show that as the threshold decreases, sample quality improves; but because the sampling range narrows, the generated samples become more uniform, so sample diversity suffers.
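The resampling rule above can be written as a short rejection sampler. This is a minimal sketch assuming a standard normal prior; the function name, latent size, and thresholds are illustrative assumptions.

```python
import random
from typing import List


def truncated_normal(dim: int, threshold: float, rng: random.Random) -> List[float]:
    """Sample z ~ N(0, I) of size dim, resampling any entry whose magnitude exceeds threshold."""
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    z = []
    for _ in range(dim):
        value = rng.gauss(0.0, 1.0)
        while abs(value) > threshold:  # out of range: redraw until it falls inside
            value = rng.gauss(0.0, 1.0)
        z.append(value)
    return z


if __name__ == "__main__":
    rng = random.Random(0)
    for threshold in (2.0, 1.0, 0.5):  # lower threshold: higher fidelity, less variety
        z = truncated_normal(128, threshold, rng)
        print(threshold, max(abs(v) for v in z))
```

Rejection sampling is simple but slows down as the threshold shrinks, since fewer draws are accepted.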

In the figure, (a) shows that as the threshold gets lower, the samples get better but also increasingly uniform. (b) shows that some larger models are not amenable to truncation and produce saturation artifacts. To counter this, the authors enforce amenability to truncation by conditioning G to be smooth, so that the entire space of z maps to good output samples. For this they adopt orthogonal regularization.
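The BigGAN paper uses a relaxed orthogonal regularization, R_β(W) = β‖WᵀW ⊙ (1 − I)‖²_F, where 1 is the all-ones matrix: it penalizes only the off-diagonal entries of the Gram matrix, so filters are pushed toward mutual orthogonality without constraining their norms. Below is a minimal pure-Python sketch of that penalty value (no gradient). It assumes each row of `weight_rows` is one flattened filter, so the Gram matrix of the rows equals WᵀW with the filters stored as columns of W; the function name and the default `beta` are assumptions.

```python
from typing import Sequence


def ortho_penalty(weight_rows: Sequence[Sequence[float]], beta: float = 1e-4) -> float:
    """beta * sum of squared off-diagonal entries of the filters' Gram matrix.

    The diagonal (each filter's squared norm) is excluded, so only the overlap
    between different filters is penalized. The default beta is an assumption."""
    penalty = 0.0
    n = len(weight_rows)
    for i in range(n):
        for j in range(n):
            if i != j:
                dot = sum(a * b for a, b in zip(weight_rows[i], weight_rows[j]))
                penalty += dot * dot
    return beta * penalty
```

Orthogonal filters of any length give zero penalty, which is the point of dropping the diagonal from the full ‖WᵀW − I‖ form.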

4. Analysis

This section analyzes the causes of instability during GAN training and imposes some constraints on the generator and the discriminator.
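Both spectral normalization (listed among the baseline techniques in Section 3) and the paper's instability analysis, which inspects the top singular values σ₀ of the layers' weight matrices, rely on estimating a matrix's largest singular value. One standard way is power iteration. The pure-Python sketch below is a generic illustration, not the paper's code; the helper names, iteration count, and seed are assumptions.

```python
import math
import random
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]


def _normalize(v: List[float]) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v)) + 1e-12  # small epsilon avoids division by zero
    return [x / norm for x in v]


def _matvec(w: Matrix, v: Sequence[float]) -> List[float]:
    return [sum(a * b for a, b in zip(row, v)) for row in w]


def _matvec_t(w: Matrix, u: Sequence[float]) -> List[float]:
    return [sum(w[i][j] * u[i] for i in range(len(w))) for j in range(len(w[0]))]


def top_singular_value(w: Matrix, iters: int = 50, seed: int = 0) -> float:
    """Estimate sigma_0, the largest singular value of w, by power iteration."""
    rng = random.Random(seed)
    v = _normalize([rng.gauss(0.0, 1.0) for _ in range(len(w[0]))])
    u = _normalize(_matvec(w, v))
    for _ in range(iters):
        v = _normalize(_matvec_t(w, u))  # right singular vector estimate
        u = _normalize(_matvec(w, v))    # left singular vector estimate
    return sum(a * b for a, b in zip(u, _matvec(w, v)))  # sigma ~ u^T W v


def spectral_normalize(w: Matrix, iters: int = 50) -> List[List[float]]:
    """Divide w by its estimated top singular value, as spectral normalization does."""
    sigma = top_singular_value(w, iters)
    return [[x / sigma for x in row] for row in w]
```

In practice spectral normalization keeps u between training steps and runs only one iteration per step; the fixed 50 iterations here just make the standalone estimate converge.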
