How to solve the sample imbalance problem in machine learning?

2022-06-26 09:19:00 【Merrill Lynch data tempodata】

In recent years, intelligent applications have spread across industries and many intelligent application projects have been implemented. Machine learning, the foundational technology behind these applications, has been widely adopted with good results. Real projects have also run into many difficulties, such as scattered data that is hard to unify, lagging outputs, and inaccurate data. Sample imbalance is a typical data problem of this kind.

The sample imbalance problem arises in pattern classification when one class has far more samples than the others, so the minority classes are classified inaccurately. Yet in practice the few minority samples often carry the key information. Examples include fault samples in equipment failure prediction, nonconforming samples in product quality analysis, churned users in churn early warning, electricity-theft users in theft detection, and positive cases in medical diagnosis. These are exactly the objects an intelligent application needs to focus on.

To address imbalanced classification, we have so far tried five directions:

1. Change the data distribution to reduce the imbalance, using sampling methods (oversampling and undersampling algorithms) and data synthesis;

2. Optimize the algorithm: analyze the weaknesses of existing algorithms on imbalanced data, then improve them or propose new ones to raise accuracy on the minority classes, mainly through cost-sensitive learning and ensemble learning;

3. Introduce prior knowledge into sample generation, model design, and model training to improve model accuracy;

4. Transfer learning: use similar data and knowledge from other domains to optimize the model in this domain;

5. Adjust the business objective: change perspective and redefine the objective or reframe the business problem.

01. Changing the data distribution

► Sampling

Sampling methods process the training set so that an imbalanced data set becomes a balanced one, which in most cases improves the final result. Sampling comes in two forms: oversampling duplicates minority-class samples, while undersampling removes some samples from the majority class, or keeps only a subset of the majority class.
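A minimal NumPy sketch of both forms for the binary case, assuming the minority class is strictly smaller than the majority class. The function names are illustrative; in practice a library such as imbalanced-learn offers tested implementations.

```python
import numpy as np

def random_oversample(X, y, minority_label, seed=0):
    """Duplicate randomly chosen minority rows until both classes have equal counts."""
    rng = np.random.default_rng(seed)
    minority = np.flatnonzero(y == minority_label)
    majority = np.flatnonzero(y != minority_label)
    # Draw extra copies (with replacement) to close the gap between the classes
    extra = rng.choice(minority, size=len(majority) - len(minority), replace=True)
    keep = np.concatenate([majority, minority, extra])
    return X[keep], y[keep]

def random_undersample(X, y, minority_label, seed=0):
    """Keep all minority rows and an equally sized random subset of majority rows."""
    rng = np.random.default_rng(seed)
    minority = np.flatnonzero(y == minority_label)
    majority = np.flatnonzero(y != minority_label)
    kept_majority = rng.choice(majority, size=len(minority), replace=False)
    keep = np.concatenate([kept_majority, minority])
    return X[keep], y[keep]

X = np.arange(20, dtype=float).reshape(-1, 1)  # 20 rows, one feature
y = np.array([0] * 16 + [1] * 4)
Xo, yo = random_oversample(X, y, minority_label=1)
Xu, yu = random_undersample(X, y, minority_label=1)
print(np.bincount(yo), np.bincount(yu))  # [16 16] [4 4]
```

Oversampling keeps every majority row but repeats minority rows, which can encourage overfitting to them; undersampling avoids repetition but discards majority information.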

► Data synthesis

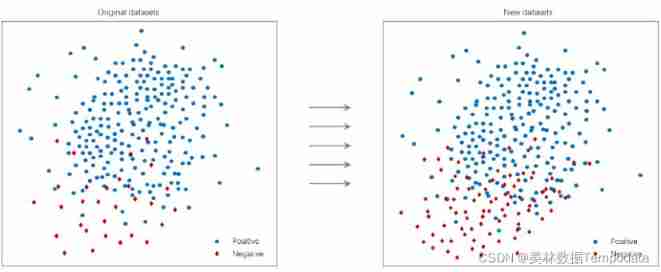

Data synthesis generates additional samples from the few available ones. Starting from the distribution of the raw data, it produces data whose distribution resembles the real data, thereby augmenting the sample. The main augmentation methods include SMOTE and generative adversarial networks (GANs).

SMOTE (Synthetic Minority Over-sampling Technique) is mainly used to create new samples when the minority class is small. It randomly picks a minority sample, computes its distance to the other minority samples to find its K nearest neighbors, and then builds a new sample at a random point on the line segment between the chosen sample and a randomly selected neighbor. Repeating this produces as many synthetic samples as needed.
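A minimal sketch of the SMOTE interpolation step in NumPy, assuming `X_min` contains only minority samples and has more than `k` rows. The brute-force distance matrix is fine for small minority classes; for production use, imbalanced-learn provides `imblearn.over_sampling.SMOTE`.

```python
import numpy as np

def smote(X_min, n_new, k=5, seed=0):
    """Generate n_new synthetic minority samples by SMOTE-style interpolation."""
    rng = np.random.default_rng(seed)
    X_min = np.asarray(X_min, dtype=float)
    # Pairwise Euclidean distances between minority samples
    diff = X_min[:, None, :] - X_min[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    np.fill_diagonal(dist, np.inf)                 # a sample is not its own neighbour
    neighbours = np.argsort(dist, axis=1)[:, :k]   # k nearest minority samples per row

    synthetic = np.empty((n_new, X_min.shape[1]))
    for i in range(n_new):
        base = rng.integers(len(X_min))            # random minority sample
        nn = neighbours[base, rng.integers(k)]     # one of its k nearest neighbours
        gap = rng.random()                         # interpolation factor in [0, 1)
        synthetic[i] = X_min[base] + gap * (X_min[nn] - X_min[base])
    return synthetic

rng = np.random.default_rng(42)
X_minority = rng.random((10, 2))
new_samples = smote(X_minority, n_new=50, k=3)
print(new_samples.shape)  # (50, 2)
```

Because each new point lies between two real minority samples, SMOTE only fills in the region the minority class already occupies; it cannot invent patterns absent from the data.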

A GAN consists of two parts: a generator and a discriminator. The generator learns the distribution of the real data so that the data it produces look increasingly real and can fool the discriminator; the discriminator judges whether each input is real or generated. During training the generator tries to make its output more realistic while the discriminator tries to tell real from fake, which amounts to a two-player game. Over time the two networks reach a dynamic equilibrium: the generator's output approaches the real data distribution and the discriminator can no longer tell real from generated data. The generator can then be used to produce new samples.
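The two-player game is usually written as the minimax objective of the original GAN formulation (Goodfellow et al., 2014). In this notation, which is not the article's own, $D(x)$ is the discriminator's estimated probability that $x$ is real, $G(z)$ maps random noise $z \sim p_z$ to a synthetic sample, and $p_g$ is the distribution of generated samples. For a fixed generator the optimal discriminator is $D^*_G$, so at the equilibrium $p_g = p_{\text{data}}$ it outputs $1/2$ everywhere, which is the "cannot tell real from fake" state described above.

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\!\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\!\left[\log\bigl(1 - D(G(z))\bigr)\right],
\qquad
D^*_G(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}
```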

For example, in a model for identifying electricity-theft users, the database contains relatively few verified theft users. Building the model directly on these data leads to poor generalization and overfitting, and the model cannot learn the characteristics of theft users well. To keep the model accurate, we took the verified theft users, grouped them by theft method, and applied SMOTE to each group to augment the data, obtaining more samples consistent with the original distribution. This let the model learn the characteristics of theft users thoroughly and effectively improved its generalization and robustness to interference. With sample augmentation, the accuracy of the theft identification model rose from 70% to 86%, a substantial improvement for the project.

Note that adding samples by sampling works only when the minority class already has a reasonable base size. If the data set itself is very small, or the imbalance is extreme (for example, only a single-digit number of abnormal samples), these methods add little. Data synthesis is widely used in some fields, such as churn prediction in telecommunications, user profiling in the power grid, and image recognition. In industrial settings such as product machining or equipment failure, however, the data are strongly correlated and governed by underlying physical relationships. Data synthesis looks only at the distribution of the data and ignores these strong correlations, so the generated data often deviate from reality.

02. Algorithm optimization

At the algorithm level, biased strategies in model design and training are used to mitigate sample imbalance, mainly cost-sensitive learning and ensemble learning. Cost-sensitive learning modifies the loss function so the model pays more attention to the minority classes; ensemble learning combines the results of multiple classifiers to improve overall classification accuracy.

► Evaluation metrics

When the data are extremely imbalanced, accuracy is no longer a meaningful metric: a model that always predicts the majority class can still score very high. Instead, examine the precision and recall of the training results. This has two benefits: it reveals how sensitive the algorithm is to the data, and it clarifies which evaluation metric is more appropriate. For imbalanced problems we recommend the PR (precision-recall) curve over the ROC curve, because with ROC as the metric a high AUC can easily mask poor performance on the minority class.
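A small NumPy sketch of both points on a hypothetical 990:10 data set. The all-negative classifier scores 99% accuracy with zero recall, and a scorer that ranks 50 negatives above every positive still gets a ROC AUC near 0.95 while its average precision (a summary of the PR curve) is under 0.1. The helper names are illustrative, and `average_precision` assumes no ties between positive and negative scores.

```python
import numpy as np

def precision_recall_accuracy(y_true, y_pred):
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    tp = int(np.sum((y_pred == 1) & (y_true == 1)))
    fp = int(np.sum((y_pred == 1) & (y_true == 0)))
    fn = int(np.sum((y_pred == 0) & (y_true == 1)))
    tn = int(np.sum((y_pred == 0) & (y_true == 0)))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return precision, recall, accuracy

def roc_auc(y_true, scores):
    """Probability that a random positive outscores a random negative (ties count 1/2)."""
    y_true, scores = np.asarray(y_true), np.asarray(scores, dtype=float)
    pos, neg = scores[y_true == 1], scores[y_true == 0]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (len(pos) * len(neg))

def average_precision(y_true, scores):
    """Mean of the precision measured at the rank of each positive sample."""
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    hits = np.asarray(y_true)[order] == 1
    precision_at_k = np.cumsum(hits) / np.arange(1, len(hits) + 1)
    return precision_at_k[hits].mean()

y = np.array([0] * 990 + [1] * 10)
print(precision_recall_accuracy(y, np.zeros_like(y)))  # (0.0, 0.0, 0.99)
scores = np.array([0.9] * 50 + [0.1] * 940 + [0.5] * 10)
print(round(roc_auc(y, scores), 3), round(average_precision(y, scores), 3))  # 0.949 0.097
```

ROC AUC is dominated by the 940 easy negatives, while average precision is dragged down by the 50 false alarms ranked above the positives, which is the failure mode the PR curve exposes.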

In practice, the choice of metric should also follow the business need. In churn early warning, for instance, whether recall or precision is the primary metric should depend on how many field maintenance staff are available to follow up on alerts. In industrial applications, when the model serves as an auxiliary tool for quality early warning, precision is the main consideration, since every alert must be reliable; when judging equipment faults, recall must be guaranteed, since no fault can be missed.
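One way to turn such a business requirement into a decision rule is to pick the score threshold from a recall or precision target. A minimal sketch, assuming the model outputs a score and predicts positive when `score >= threshold`; the targets and data are hypothetical.

```python
import numpy as np

def threshold_for_min_recall(y_true, scores, min_recall):
    """Highest threshold whose recall >= min_recall (fewest alerts that still meet the target).

    Recall can only fall as the threshold rises, so scanning from the top and
    stopping at the first admissible threshold gives the highest one.
    """
    y_true = np.asarray(y_true)
    scores = np.asarray(scores, dtype=float)
    n_pos = (y_true == 1).sum()
    for t in np.unique(scores)[::-1]:
        recall = ((scores >= t) & (y_true == 1)).sum() / n_pos
        if recall >= min_recall:
            return float(t)
    return None

def threshold_for_min_precision(y_true, scores, min_precision):
    """Lowest threshold whose precision >= min_precision (most recall at that precision)."""
    y_true = np.asarray(y_true)
    scores = np.asarray(scores, dtype=float)
    for t in np.unique(scores):
        flagged = scores >= t
        precision = (flagged & (y_true == 1)).sum() / flagged.sum()
        if precision >= min_precision:
            return float(t)
    return None

y = [0, 0, 0, 0, 1, 0, 1, 1]
s = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
print(threshold_for_min_recall(y, s, 1.0))     # 0.5  (fault detection: miss nothing)
print(threshold_for_min_precision(y, s, 0.9))  # 0.7  (quality alert: alerts must be right)
```

The thresholds should be chosen on held-out validation data rather than the training set, since scores on training data are usually optimistic.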

► Cost-sensitive methods

The core idea of cost-sensitive methods is to assign different weights to classes with different sample sizes during training, typically a high weight for the small classes and a low weight for the large ones. The model then pays more attention to the small classes when the loss is computed and the model is fitted.
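A minimal sketch of one common weighting scheme, `n_samples / (n_classes * n_samples_in_class)`, which is the formula scikit-learn uses for `class_weight="balanced"`, plugged into a weighted binary cross-entropy. Normalizing by the total weight is a design choice of this sketch.

```python
import numpy as np

def balanced_class_weights(y):
    """Weight each class by n_samples / (n_classes * n_samples_in_class)."""
    classes, counts = np.unique(y, return_counts=True)
    weights = len(y) / (len(classes) * counts)
    return dict(zip(classes.tolist(), weights.tolist()))

def weighted_log_loss(y_true, p_pred, class_weights, eps=1e-12):
    """Binary cross-entropy in which every sample is scaled by its class weight."""
    y_true = np.asarray(y_true)
    p = np.clip(np.asarray(p_pred, dtype=float), eps, 1 - eps)
    w = np.array([class_weights[c] for c in y_true.tolist()])
    losses = -(y_true * np.log(p) + (1 - y_true) * np.log(1 - p))
    return float(np.sum(w * losses) / np.sum(w))

y = np.array([0] * 90 + [1] * 10)
weights = balanced_class_weights(y)
print({c: round(w, 3) for c, w in weights.items()})  # {0: 0.556, 1: 5.0}

# A lazy model that gives every sample a 10% positive score
p_all_low = np.full(100, 0.1)
print(weighted_log_loss(y, p_all_low, {0: 1.0, 1: 1.0}) < weighted_log_loss(y, p_all_low, weights))  # True
```

With balanced weights the 10 minority samples carry as much total weight as the 90 majority samples, so ignoring the minority class becomes expensive for the model.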

► Ensemble learning

In the ensemble approach, each training set is built from all samples of the small class plus a random sample drawn from the large class. Repeating this many times yields many training sets and many trained models. At prediction time their outputs are combined, for example by voting or weighted voting, to produce the classification. This method suits cases where computing resources are ample and the model does not need to respond quickly.
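A minimal sketch of this scheme, close in spirit to balanced bagging, for the binary case. A nearest-centroid classifier stands in as a deliberately simple base learner (an assumption; any classifier could be used), and an odd number of models avoids voting ties.

```python
import numpy as np

class NearestCentroid:
    """Tiny base learner: predict the class whose training mean is closest."""
    def fit(self, X, y):
        self.classes_ = np.unique(y)
        self.centroids_ = np.stack([X[y == c].mean(axis=0) for c in self.classes_])
        return self

    def predict(self, X):
        d = ((X[:, None, :] - self.centroids_[None, :, :]) ** 2).sum(axis=-1)
        return self.classes_[d.argmin(axis=1)]

def balanced_ensemble(X, y, minority_label, n_models=11, seed=0):
    """One model per training set: all minority rows + an equal-sized random majority draw."""
    rng = np.random.default_rng(seed)
    minority = np.flatnonzero(y == minority_label)
    majority = np.flatnonzero(y != minority_label)
    models = []
    for _ in range(n_models):
        sampled = rng.choice(majority, size=len(minority), replace=False)
        idx = np.concatenate([minority, sampled])
        models.append(NearestCentroid().fit(X[idx], y[idx]))
    return models

def vote(models, X, minority_label):
    """Majority vote: True where more than half of the models predict the minority class."""
    votes = np.stack([m.predict(X) == minority_label for m in models])
    return votes.mean(axis=0) > 0.5

rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, (1000, 2)), rng.normal(3.0, 1.0, (30, 2))])
y = np.array([0] * 1000 + [1] * 30)
models = balanced_ensemble(X, y, minority_label=1)
print(vote(models, np.array([[3.0, 3.0], [0.0, 0.0]]), minority_label=1))  # [ True False]
```

Every model sees a balanced training set, and across the ensemble most of the majority data gets used, which is the advantage over a single undersampled model.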

03. Introducing prior knowledge

Another way to tackle sample imbalance is to bring prior knowledge, such as business knowledge and mechanistic rules, into sample generation, model design, and model training. Prior knowledge can be quickly extended to new tasks that have only a few supervised samples. When building data mining models, making full use of rules derived from prior knowledge can improve the model. Prior knowledge adds value in two main ways:

Data: use prior knowledge to strengthen the supervised signal. For example, prior knowledge can fix the valid range of a sensor's readings; readings outside that range are removed so that acquisition errors do not disturb model training.

Model: use prior knowledge to shrink the hypothesis space. For local gear faults such as pitting, spalling, and broken teeth, the faulty section delivers an impact that excites the system each time it enters mesh. The system is therefore struck once per revolution of the faulty gear, a periodic phenomenon on which the model can be designed.
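A minimal sketch of both uses, with hypothetical names and values. On the data side, readings outside a known sensor range are dropped. On the model side, one impact per revolution implies an impact period of 60 / rpm seconds, so a feature can measure how strongly the signal repeats at that lag. The sketch assumes the input is already an impulse-like signal (for example, an envelope); raw vibration would normally need that preprocessing first.

```python
import numpy as np

def drop_out_of_range(values, low, high):
    """Data side: discard readings outside the sensor's physically possible range."""
    values = np.asarray(values, dtype=float)
    return values[(values >= low) & (values <= high)]

def impact_lag_samples(rpm, fs):
    """Model side: one impact per revolution means a period of 60 / rpm seconds."""
    return int(round(fs * 60.0 / rpm))

def per_revolution_periodicity(signal, rpm, fs):
    """Normalised autocorrelation at the one-revolution lag (close to 1 for periodic impacts)."""
    x = np.asarray(signal, dtype=float)
    lag = impact_lag_samples(rpm, fs)
    return float(np.dot(x[:-lag], x[lag:]) / np.dot(x, x))

print(drop_out_of_range([-5.0, 20.0, 35.0, 999.0], low=0.0, high=100.0))  # [20. 35.]

fs = 1000                      # hypothetical sampling rate in Hz
impulses = np.zeros(2000)
impulses[::100] = 1.0          # one impact every 0.1 s, i.e. a shaft at 600 rpm
print(per_revolution_periodicity(impulses, rpm=600, fs=fs))  # 0.95
print(per_revolution_periodicity(impulses, rpm=800, fs=fs))  # 0.0
```

The feature is only meaningful at the correct shaft speed, which is exactly the kind of constraint prior knowledge supplies and a purely data-driven model would have to learn from scarce fault samples.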

For example, when building a heavy-overload prediction model for distribution transformers, we used prior knowledge at the modeling stage to shrink the prediction space. We examined each distribution transformer's load rate over the past two years, used the Pearson correlation coefficient to judge the trend across those two years, and selected the variables with high correlation coefficients. We then used prior knowledge to predict the load rate for the coming cycle and removed equipment whose load rate was clearly low. This reduced the sample imbalance and improved the model's generalization.
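One possible reading of this pre-filter, sketched in NumPy with hypothetical inputs: for each transformer, a high Pearson correlation between the two years' load-rate series is taken to mean the pattern repeats, last year's series serves as a naive forecast for the next cycle, and transformers whose forecast peak is clearly below overload territory are removed before training. The thresholds `min_r` and `low_peak` and the monthly granularity are assumptions, not values from the project.

```python
import numpy as np

def pearson_r(a, b):
    return float(np.corrcoef(np.asarray(a, dtype=float), np.asarray(b, dtype=float))[0, 1])

def prefilter_transformers(load_by_id, min_r=0.8, low_peak=0.5):
    """Drop transformers whose load pattern is stable and whose forecast load is clearly low.

    load_by_id maps a transformer id to (previous_year, last_year) load-rate series
    of equal length (e.g. 12 monthly values, where 1.0 = rated capacity).
    """
    kept, dropped = [], []
    for tid, (prev_year, last_year) in load_by_id.items():
        stable = pearson_r(prev_year, last_year) >= min_r
        forecast_peak = max(last_year)  # naive seasonal forecast: next year repeats last year
        if stable and forecast_peak < low_peak:
            dropped.append(tid)         # cannot plausibly reach heavy overload next cycle
        else:
            kept.append(tid)
    return kept, dropped

months = np.arange(12)
shape = np.sin(2 * np.pi * months / 12)
loads = {
    "T1": (0.20 + 0.05 * shape, 0.22 + 0.05 * shape),              # stable and lightly loaded
    "T2": (0.60 + 0.20 * shape, 0.70 + 0.20 * shape),              # stable but heavily loaded
    "T3": (np.linspace(0.1, 0.3, 12), np.linspace(0.3, 0.1, 12)),  # pattern changed
}
print(prefilter_transformers(loads))  # (['T2', 'T3'], ['T1'])
```

Removing only the cases that are both predictable and clearly safe keeps uncertain equipment in the training set, so the filter shrinks the majority class without discarding borderline examples.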

Note that the correctness of the prior knowledge must be ensured: errors in the prior knowledge will inevitably increase the model's error and reduce its accuracy.

04. Transfer learning

Since sample data are hard to obtain in the current domain, can data and knowledge from similar domains be used instead? Everyday life offers many examples: someone who can play the flute finds it easier to learn other wind instruments such as the hulusi, and someone who has learned C finds other programming languages much easier to pick up. This is the idea behind transfer learning: transfer labeled data or knowledge structures from related domains to complete or improve learning in the target domain.

In general, there are three common transfer methods:

The first is instance-based transfer, which assigns suitable weights to the abundant samples from other domains so that their distribution approaches that of the target domain;

The second is feature-based transfer, which divides into feature-selection-based and feature-mapping-based transfer. Feature-selection-based algorithms focus on finding a feature representation shared by the source and target domains and then use those features to transfer knowledge. Feature-mapping-based algorithms map source-domain and target-domain data from the original feature space into a new feature space in which the two domains have the same data distribution;

The third is parameter-based transfer, which studies how to find parameters or prior distributions shared between the models of the source data and the target data.
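A minimal sketch of instance-based transfer for a single feature: each source sample is weighted by an estimate of p_target(x) / p_source(x), so the weighted source data mimic the target distribution. The shared-histogram density estimate and the function name are assumptions of this sketch; multivariate features usually call for a better density-ratio estimator.

```python
import numpy as np

def density_ratio_weights(source, target, bins=20):
    """Weight each source sample by p_target(x) / p_source(x), estimated on shared histogram bins."""
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    edges = np.histogram_bin_edges(np.concatenate([source, target]), bins=bins)
    p_s, _ = np.histogram(source, bins=edges, density=True)
    p_t, _ = np.histogram(target, bins=edges, density=True)
    # Bin index of every source sample (clip so the right-most edge falls in the last bin)
    idx = np.clip(np.digitize(source, edges) - 1, 0, bins - 1)
    ratio = np.divide(p_t, p_s, out=np.zeros_like(p_t), where=p_s > 0)
    return ratio[idx]

rng = np.random.default_rng(0)
source = rng.normal(0.0, 1.0, 20000)   # abundant data from a related domain
target = rng.normal(1.0, 1.0, 5000)    # scarcer data from the target domain
w = density_ratio_weights(source, target)
print(source.mean(), np.average(source, weights=w), target.mean())
```

The weighted source mean moves from about 0 toward the target mean of about 1; the same weights can be passed as per-sample weights to a model trained on the source data.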

In fault prediction for mechanical equipment such as gears and bearings, equipment under real operating conditions spends most of its life in normal service. Normal samples are therefore abundant while failure samples are very scarce, and we often face severe data imbalance. For simple components such as gears and bearings, components of the same type tend to show very similar characteristics when they fail, so transfer learning can be applied. In practice this is done in two ways. First, a data distribution transformation converts the distribution of the data to be transferred into the distribution of the target data.

Second, the features of the two domains are transformed so that they share the same feature distribution. For collected vibration signals, for example, general vibration signals are converted into signals resembling the target distribution according to the target data's amplitude distribution in the time domain. A time-frequency transform then maps the time-domain features into the frequency domain (use relative rather than absolute indicators wherever possible), and the component fault prediction model is built on these features.
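A minimal sketch of the two steps, under stated assumptions: amplitude-distribution matching is done here by rank-preserving quantile mapping (one common way to match a distribution, not necessarily the project's), and the frequency-domain features are band energies expressed as fractions of total spectral energy, so they are relative rather than absolute. Band edges, sampling rate, and signals are hypothetical.

```python
import numpy as np

def quantile_map(source, target):
    """Map source amplitudes onto the target's empirical amplitude distribution, keeping ranks."""
    source = np.asarray(source, dtype=float)
    ranks = np.argsort(np.argsort(source))     # rank of each source sample, 0..n-1
    levels = (ranks + 0.5) / len(source)       # mid-rank quantile levels in (0, 1)
    return np.quantile(np.asarray(target, dtype=float), levels)

def relative_band_energy(signal, fs, bands):
    """Fraction of total spectral energy in each [low, high) band, in Hz."""
    x = np.asarray(signal, dtype=float)
    spectrum = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    total = spectrum.sum()
    return np.array([spectrum[(freqs >= lo) & (freqs < hi)].sum() / total for lo, hi in bands])

rng = np.random.default_rng(0)
generic = rng.normal(size=1000)             # stand-in for a generic vibration record
target = 2.0 * rng.laplace(size=1000)       # stand-in for the target-domain record
mapped = quantile_map(generic, target)      # same ordering as generic, target's amplitudes

fs = 1000
t = np.arange(1000) / fs
tone = np.sin(2 * np.pi * 50 * t)           # a pure 50 Hz component
bands = [(0, 40), (40, 60), (60, 500)]
print(np.round(relative_band_energy(tone, fs, bands), 3))  # [0. 1. 0.]
print(np.allclose(relative_band_energy(tone, fs, bands), relative_band_energy(10 * tone, fs, bands)))  # True
```

The second print shows why relative indicators transfer better: scaling the whole signal, as a different sensor gain or machine size might, leaves the features unchanged.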

The application of transfer learning is also limited in several ways. First, the relationships among data differ greatly across application scenarios and tend to change, so knowledge cannot always be transferred completely. Second, when the target has many feature variables, mapping the features is a large project in itself and far from easy, so the approach suits scenarios with few features.

05. Adjusting business objectives

What if none of the above methods apply? Then a bigger move is needed: adjusting the business objective. Typically, a classification problem with extreme sample imbalance can be turned into a regression problem or an anomaly detection problem.

► Turning classification into regression

In real projects, the prediction goal can also be reached another way: turn the classification problem into a regression problem and combine the regression predictions with business rules. For example, in a product quality prediction project, the original goal was to build a model predicting whether a product is qualified. During modeling, however, we found that nonconforming products made up a tiny fraction, only a few samples, which was not enough to build a nonconformity model.

In that case the task can be reframed as predicting the product's inspection indicators, that is, the evaluation metrics measured during product inspection, which works even when nonconforming products are scarce. Samples of inspection indicators accumulate far more easily during testing than samples of nonconforming products. Predicting inspection indicators is a regression problem and can be solved with machine learning regression methods. The regression model's predictions are then checked against existing business rules to determine whether the product is qualified.
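A minimal sketch of "regression + business rules": an ordinary least-squares model predicts the inspection indicator from process parameters, and a specification rule turns the prediction into a pass/fail decision. The data, the linear model, and the spec limits are all hypothetical.

```python
import numpy as np

def fit_linear(X, y):
    """Least-squares linear regression with an intercept column."""
    A = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef

def predict_linear(coef, X):
    return np.column_stack([np.ones(len(X)), X]) @ coef

def is_qualified(indicator, low, high):
    """Business rule: a product passes when the predicted indicator is within spec."""
    return (indicator >= low) & (indicator <= high)

rng = np.random.default_rng(0)
X = rng.uniform(0, 5, size=(200, 2))                            # hypothetical process parameters
y = 5 + 2 * X[:, 0] - 3 * X[:, 1] + rng.normal(0, 0.01, 200)    # hypothetical inspection indicator
coef = fit_linear(X, y)
pred = predict_linear(coef, np.array([[1.0, 0.0], [4.0, 0.0]]))  # roughly 7 and 13
print(is_qualified(pred, low=0.0, high=10.0))  # [ True False]
```

The regression model is trained on every inspected product, qualified or not, so it no longer depends on the handful of nonconforming samples.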

► Turning classification into anomaly detection

The second approach uses unsupervised learning and treats the problem as one-class classification or anomaly detection. Rather than looking for differences between classes, these methods model a single class. For example, in an equipment fault diagnosis project with no abnormal data, we define a range for normal operation: new data inside the range are considered normal, and data outside it are considered abnormal and flagged for attention. In practice the model is also updated iteratively, so its judgments become increasingly accurate.
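A minimal sketch of the "normal range" idea: learn a per-feature band of mean ± k standard deviations from normal data only, and flag any sample with a feature outside its band. The class name and the default `k=3.0` are hypothetical choices, and real projects often use richer one-class models.

```python
import numpy as np

class NormalRangeDetector:
    """Learn a per-feature 'normal' band from normal data only; flag anything outside it."""
    def __init__(self, k=3.0):
        self.k = k  # band half-width in standard deviations (hypothetical default)

    def fit(self, X_normal):
        X = np.asarray(X_normal, dtype=float)
        self.mean_ = X.mean(axis=0)
        self.std_ = X.std(axis=0) + 1e-12   # avoid division by zero on constant features
        return self

    def is_anomaly(self, X):
        z = np.abs((np.asarray(X, dtype=float) - self.mean_) / self.std_)
        return (z > self.k).any(axis=1)     # abnormal if any feature leaves its band

rng = np.random.default_rng(0)
detector = NormalRangeDetector(k=3.0).fit(rng.normal(size=(500, 3)))
print(detector.is_anomaly([[0.0, 0.0, 0.0], [0.0, 10.0, 0.0]]))  # [False  True]
```

The iterative update described above can be as simple as calling `fit` again on the enlarged set of readings confirmed as normal.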

This article has introduced several solutions to sample imbalance that we have tried in real projects. We hope some of them help you solve the problems you currently face, or give you ideas for approaching them.

These methods are only the tip of the iceberg among solutions to sample imbalance; we suggest reading further on the subject, where you may find more interesting and effective methods. Solving sample imbalance should always take the on-site environment and the specific problem into account, with methods chosen flexibly. No single solution solves every problem.
