Self-Taught Neural Network Series 9: Convolutional Neural Networks (CNN)

2022-06-26 09:10:00 【ML_ python_ get√】

Convolutional neural networks

1 Convolutional neural network background

- A CNN (Convolutional Neural Network) is a feedforward neural network built from convolutional layers and pooling layers. It is mainly used to process image and text data.

- Convolutional neural networks are used for image processing to address the excessive number of parameters in fully connected networks. For example, a 1000×1000-pixel color image flattens into a vector of 1000×1000×3 = 3M dimensions. If the hidden layer has 1000 units, the first fully connected layer alone has 3B parameters to train, which makes the network very hard to train. Convolution kernels are therefore introduced so that the layers are only locally connected, which reduces the number of parameters.

- Images have translation invariance: a human can recognize the target wherever it appears in the picture, so position should not affect recognition. It is therefore reasonable to use the same convolution kernel to extract information at every position in the image.

- A convolutional layer consists of multiple convolution kernels and involves the concepts of padding, stride, and channels. Common convolutional neural networks include LeNet, AlexNet, VGG, GoogleNet, ResNet, and DenseNet. This article is a personal study note: it only reviews the concepts behind convolutional neural networks and does not go into the characteristics of the individual architectures.
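The parameter counts in the example above can be checked with a few lines of arithmetic. This is only a sketch: the convolutional layer used for comparison (64 kernels of size 3×3) is a hypothetical choice for illustration and does not come from the original notes, and biases are ignored.

```python
# Parameter count: fully connected layer vs. one convolutional layer.
height, width, channels = 1000, 1000, 3
hidden_units = 1000

input_dim = height * width * channels   # 3,000,000 inputs after flattening
fc_weights = input_dim * hidden_units   # 3,000,000,000 weights (biases ignored)

# Hypothetical convolutional layer (assumption): 64 kernels, each 3x3 over 3 channels.
kernel_size, out_channels = 3, 64
conv_weights = kernel_size * kernel_size * channels * out_channels

print(f"fully connected: {fc_weights:,}")
print(f"convolutional:   {conv_weights:,}")
```

Because the kernel weights are shared across all positions, the convolutional count does not depend on the image size at all.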

2 Basic knowledge of convolutional neural network

2.1 Convolution

- Convolution can be viewed as a custom operator whose result is a function of $x$. It generally refers to the following integral:

$$\int_\tau f(\tau)\,g(x-\tau)\,d\tau$$

- In signal processing, convolution is usually used to compute the accumulated delayed effect of a signal, where $f(\tau)$ is the signal generating function and $g(\cdot)$ is the signal attenuation function, also called the filter or convolution kernel. The farther $x$ is from $\tau$, the stronger the attenuation. When the signal function meets a filter, the signal is filtered, and the result measures how much of the real signal remains.

- Unlike traditional one-dimensional convolution, the kernels in a convolutional neural network use two-dimensional convolution to extract information from the image. Two-dimensional convolution is defined as follows:

$$y_{ij} = \sum_{u=1}^{U}\sum_{v=1}^{V} w_{uv}\,x_{i+1-u,\,j+1-v}$$

- Figure 1: Working through the two-dimensional convolution shows that true convolution sweeps the data from bottom to top and from right to left, which is the same as flipping the kernel. The operation used in convolutional neural networks applies the kernel without this flip. It is called cross-correlation and is also common in time series analysis, where it measures the correlation between variables at different time offsets. In particular, when the kernel parameters are learnable, it does not matter whether two-dimensional convolution or cross-correlation is used: the network learns suitable parameters either way.

- Feature extraction: the main role of a convolution kernel (filter) is feature extraction

- Gaussian filter: denoises and smooths the image

- Edge filter: extracts edge features

- etc.
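To make the difference between convolution and cross-correlation concrete, here is a minimal pure-Python sketch. The function names and the tiny 1×2 edge kernel are illustrative assumptions, not part of the original notes; only "valid" positions (no padding, stride 1) are computed.

```python
def cross_correlate2d(x, w):
    """Slide w over x without flipping it (the operation CNN layers use)."""
    k_h, k_w = len(w), len(w[0])
    out_h, out_w = len(x) - k_h + 1, len(x[0]) - k_w + 1
    return [[sum(w[u][v] * x[i + u][j + v]
                 for u in range(k_h) for v in range(k_w))
             for j in range(out_w)]
            for i in range(out_h)]


def flip(w):
    """Rotate the kernel by 180 degrees (reverse rows and columns)."""
    return [row[::-1] for row in w[::-1]]


def convolve2d(x, w):
    """True 2D convolution: cross-correlation with the flipped kernel."""
    return cross_correlate2d(x, flip(w))


# A small image with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1]]
edge_kernel = [[-1, 1]]  # responds to a left-to-right increase in intensity

print(cross_correlate2d(image, edge_kernel))  # [[0, 1, 0], [0, 1, 0], [0, 1, 0]]
print(convolve2d(image, edge_kernel))         # [[0, -1, 0], [0, -1, 0], [0, -1, 0]]
```

Both operations locate the edge in the same column; only the sign differs, which a learnable kernel can absorb.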

2.2 Convolutional neural network structure

- Convolutional layer

- Applies the convolution operation using convolution kernels

- Input: a 36×36 grayscale image, or the output of a previous convolution kernel

- Convolution kernel: a 9×9 kernel (the size can differ between convolutional layers and even within the same layer)

- Output: the output of a convolution kernel is called a feature map; different kernels extract different features of the image

- Pooling layer

- Performs feature selection on the output of the convolution kernels

- A convolution kernel is much smaller than the image, so the convolutional layer still has a very large number of neurons and overfits easily

- Feature selection is done with a max pooling layer or an average pooling layer, which reduces the risk of overfitting

- The windows with which the pooling layer scans the feature map may or may not overlap

- The pooling layer reduces dimensionality by downsampling with max() or mean(); dimensionality reduction can also be achieved through a nonlinear activation function

- Receptive field: the set of input elements that affect a given element of a feature map or output is called its receptive field. As the forward pass goes deeper, each element covers a larger receptive field, that is, it sees a larger region of the original image.

- The output of each convolution kernel becomes smaller as the network gets deeper, so deeper layers use more kernels to prevent information loss

- Convolutional neural networks therefore become wider and wider while their outputs become smaller and smaller

- The final output of a convolutional neural network is generally flattened into a one-dimensional vector
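The pooling behaviour described above can be sketched in plain Python. The helper `pool2d` and the 4×4 feature map are illustrative assumptions; the same function covers max and average pooling as well as overlapping and non-overlapping windows.

```python
def pool2d(x, size, stride, reduce_fn):
    """Apply reduce_fn to every size x size window of x, moving by stride."""
    out_h = (len(x) - size) // stride + 1
    out_w = (len(x[0]) - size) // stride + 1
    return [[reduce_fn([x[i * stride + a][j * stride + b]
                        for a in range(size) for b in range(size)])
             for j in range(out_w)]
            for i in range(out_h)]


def mean(values):
    return sum(values) / len(values)


feature_map = [[1, 3, 2, 4],
               [5, 7, 6, 8],
               [9, 2, 1, 0],
               [3, 4, 5, 6]]

max_pooled = pool2d(feature_map, size=2, stride=2, reduce_fn=max)   # non-overlapping
avg_pooled = pool2d(feature_map, size=2, stride=2, reduce_fn=mean)  # non-overlapping
overlapping = pool2d(feature_map, size=2, stride=1, reduce_fn=max)  # windows overlap

print(max_pooled)   # [[7, 8], [9, 6]]
print(avg_pooled)   # [[4.0, 5.0], [4.5, 3.0]]
print(overlapping)  # [[7, 7, 8], [9, 7, 8], [9, 5, 6]]
```

With stride equal to the window size, the 4×4 map shrinks to 2×2; with stride 1 the windows overlap and the output is 3×3.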

2.3 Padding and stride of the convolution kernel

Padding: to keep the output the same size as the input, the input matrix is often padded.

Stride: the number of rows and columns by which the convolution kernel slides over the input matrix at each step is called the stride. The height and width strides are usually equal.

Computing the output size of a convolution requires the padding and the stride. Suppose the input size is $n_h \times n_w$, the kernel size is $k_h \times k_w$, a total of $p_h$ rows of padding is added across the top and bottom, a total of $p_w$ columns of padding is added across the left and right, the vertical stride is $s_h$, and the horizontal stride is $s_w$. The output shape is:

$$\text{shape} = \lfloor (n_h-k_h+p_h)/s_h+1 \rfloor \times \lfloor (n_w-k_w+p_w)/s_w+1 \rfloor$$

Doubling the stride roughly halves the output size in that dimension.
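The output-shape formula can be turned into a small helper. This is a sketch under the assumption that $p_h$ and $p_w$ are the total padding added along each dimension and that fractional results are rounded down; the function name is hypothetical.

```python
def conv_output_shape(n_h, n_w, k_h, k_w, p_h=0, p_w=0, s_h=1, s_w=1):
    """Output height and width of a convolution.

    p_h / p_w are the TOTAL rows / columns of padding (both sides combined).
    """
    out_h = (n_h - k_h + p_h) // s_h + 1
    out_w = (n_w - k_w + p_w) // s_w + 1
    return out_h, out_w


# 36x36 input with a 9x9 kernel, as in section 2.2.
print(conv_output_shape(36, 36, 9, 9))                              # (28, 28)
# Padding k - 1 = 8 in total keeps the output the same size as the input.
print(conv_output_shape(36, 36, 9, 9, p_h=8, p_w=8))                # (36, 36)
# Doubling the stride halves the output size.
print(conv_output_shape(36, 36, 9, 9, p_h=8, p_w=8, s_h=2, s_w=2))  # (18, 18)
```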

3 Learning in convolutional neural networks

3.1 Derivative of convolution

- The derivative of the loss function with respect to the kernel parameters equals the convolution of the input with the derivative of the loss function with respect to the output.

- The derivative mirrors the forward operation: multiplication corresponds to multiplication, and a convolution operation corresponds to a convolution operation.

$$\frac{\partial L(y,\hat y)}{\partial w_{uv}} = \sum_{i=1}^{n_h-k_h+1}\sum_{j=1}^{n_w-k_w+1}\frac{\partial L(y,\hat y)}{\partial y_{ij}}\frac{\partial y_{ij}}{\partial w_{uv}} = \sum_{i=1}^{n_h-k_h+1}\sum_{j=1}^{n_w-k_w+1}\frac{\partial L(y,\hat y)}{\partial y_{ij}}\,x_{u+i-1,\,v+j-1}$$

- Here $(u, v)$ indexes an entry of the kernel, the output is computed by cross-correlation as $y_{ij} = \sum_{u}\sum_{v} w_{uv}\,x_{i+u-1,\,j+v-1}$, the input size is $n_h \times n_w$, and the kernel size is $k_h \times k_w$.

- In other words, the convolution of the input with the kernel in the forward pass becomes the convolution of the input with the layer's error term in the backward pass.
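The claim that the kernel gradient is the input convolved with the error term can be checked numerically. The sketch below assumes a cross-correlation forward pass without padding and an illustrative loss $L = \frac{1}{2}\sum_{ij} y_{ij}^2$ (chosen only because its derivative with respect to $y$ is $y$ itself); all names and values are hypothetical.

```python
def cross_correlate2d(x, w):
    """Valid cross-correlation: y[i][j] = sum_uv w[u][v] * x[i+u][j+v]."""
    k_h, k_w = len(w), len(w[0])
    out_h, out_w = len(x) - k_h + 1, len(x[0]) - k_w + 1
    return [[sum(w[u][v] * x[i + u][j + v]
                 for u in range(k_h) for v in range(k_w))
             for j in range(out_w)]
            for i in range(out_h)]


def loss(x, w):
    """Illustrative loss (an assumption): L = 0.5 * sum(y_ij ** 2)."""
    y = cross_correlate2d(x, w)
    return 0.5 * sum(value * value for row in y for value in row)


x = [[1.0, 2.0, 0.0, 1.0],
     [0.0, 1.0, 3.0, 2.0],
     [2.0, 1.0, 0.0, 1.0],
     [1.0, 0.0, 2.0, 3.0]]
w = [[1.0, -1.0],
     [0.5, 2.0]]

# For this loss, dL/dy equals y itself.
grad_y = cross_correlate2d(x, w)

# Analytic gradient: cross-correlate the input with dL/dy (result is 2x2, like w).
analytic = cross_correlate2d(x, grad_y)

# Numerical gradient by central differences, for comparison.
eps = 1e-4
numeric = [[0.0, 0.0], [0.0, 0.0]]
for u in range(2):
    for v in range(2):
        w_plus = [row[:] for row in w]
        w_minus = [row[:] for row in w]
        w_plus[u][v] += eps
        w_minus[u][v] -= eps
        numeric[u][v] = (loss(x, w_plus) - loss(x, w_minus)) / (2 * eps)

max_error = max(abs(analytic[u][v] - numeric[u][v])
                for u in range(2) for v in range(2))
print(max_error)  # should be close to zero
```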

3.2 Back propagation algorithm

- Forward propagation: input layer, convolutional layer, activation function, pooling layer

- Error backpropagation runs in the opposite order: first through the pooling layer, then through the activation function and the convolution operation

- Backpropagation algorithm

- Because the pooling layer performs downsampling, the error has to be propagated back through an upsampling operation

- Max pooling layer: in the forward pass only one element of each window (the maximum) is passed on, so during backpropagation the error term is routed to the neuron in the previous layer that produced the maximum, and the error for all other elements is set to 0

- Average pooling layer: in the forward pass every element of the window affects the output, so the error term is distributed evenly across the neurons in the window.

- Layer $l$ is a convolutional layer:

$$\frac{\partial L(y,\hat y)}{\partial w_{uv}} = \sum_{i=1}^{n_h-k_h+1}\sum_{j=1}^{n_w-k_w+1}\frac{\partial L(y,\hat y)}{\partial y_{ij}}\frac{\partial y_{ij}}{\partial w_{uv}} = \sum_{i=1}^{n_h-k_h+1}\sum_{j=1}^{n_w-k_w+1}\frac{\partial L(y,\hat y)}{\partial y_{ij}}\,x_{u+i-1,\,v+j-1}$$

- That is, the gradient is the convolution of the input data with the error term of the layer, where $(u, v)$ indexes an entry of the kernel.

- Layer $l+1$ is a pooling layer:

$$\delta^{l,p}=\frac{\partial L(y,\hat y)}{\partial y^{l,p}} = \frac{\partial L(y,\hat y)}{\partial y^{l+1,p}}\frac{\partial y^{l+1,p}}{\partial h^{l,p}}\frac{\partial h^{l,p}}{\partial y^{l,p}} = \delta^{l+1,p}\,up(\cdot)\,\frac{\partial h^{l,p}}{\partial y^{l,p}}$$

- $up(\cdot) = \dfrac{\partial X^{l+1,p}}{\partial h^{l,p}}$ represents the upsampling operation from the pooling layer back to the hidden layer.

- Layer $l+1$ is a convolutional layer:

$$\delta^{l}=\frac{\partial L(y,\hat y)}{\partial y^{l}} = \frac{\partial L(y,\hat y)}{\partial h^{l}}\frac{\partial h^{l}}{\partial y^{l}} = \frac{\partial L(y,\hat y)}{\partial x^{l+1}}\frac{\partial h^{l}}{\partial y^{l}}$$

- By the symmetry between $W$ and $X$, $\dfrac{\partial L(y,\hat y)}{\partial x^{l+1}}$ is the convolution of the layer $l+1$ error term with the layer $l+1$ convolution kernel. This lets us recursively compute $\dfrac{\partial L(y,\hat y)}{\partial y^{l}}$ and then $\dfrac{\partial L(y,\hat y)}{\partial w_{uv}}$, where $(u, v)$ indexes an entry of the kernel.
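The upsampling rules for the two pooling types in this section can be sketched as follows. This is a minimal illustration that assumes non-overlapping windows (stride equal to the window size); the function names and example values are hypothetical.

```python
def max_pool_backward(x, grad_out, size):
    """Route each output error to the position of the window's maximum."""
    grad_in = [[0.0] * len(x[0]) for _ in x]
    for i, row in enumerate(grad_out):
        for j, g in enumerate(row):
            cells = [(i * size + a, j * size + b)
                     for a in range(size) for b in range(size)]
            r, c = max(cells, key=lambda rc: x[rc[0]][rc[1]])
            grad_in[r][c] += g  # all other cells in the window stay 0
    return grad_in


def avg_pool_backward(x, grad_out, size):
    """Spread each output error evenly over its window."""
    grad_in = [[0.0] * len(x[0]) for _ in x]
    for i, row in enumerate(grad_out):
        for j, g in enumerate(row):
            for a in range(size):
                for b in range(size):
                    grad_in[i * size + a][j * size + b] += g / (size * size)
    return grad_in


x = [[1, 3, 2, 4],
     [5, 7, 6, 8],
     [9, 2, 1, 0],
     [3, 4, 5, 6]]
grad_out = [[1.0, 2.0],
            [3.0, 4.0]]  # error terms of the 2x2 pooled output

print(max_pool_backward(x, grad_out, size=2))
# [[0.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 2.0], [3.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 4.0]]
print(avg_pool_backward(x, grad_out, size=2))
# [[0.25, 0.25, 0.5, 0.5], [0.25, 0.25, 0.5, 0.5], [0.75, 0.75, 1.0, 1.0], [0.75, 0.75, 1.0, 1.0]]
```

In both cases the total error is preserved: the entries of the upsampled gradient sum to the same value as the entries of `grad_out`.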

4 Other convolutions

- Transposed convolution: used to increase dimensionality; the input is padded with extra zeros at both ends

- Fractionally-strided convolution: used to increase dimensionality; the stride is less than 1

- Dilated (atrous) convolution: enlarges the receptive field by inserting holes into the convolution kernel, which effectively expands the kernel

- Factors that affect the receptive field: number of layers, kernel size, and pooling
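How layers, kernel size, pooling, and dilation combine into a receptive field can be sketched with the usual recursion: each layer enlarges the field by (effective kernel size − 1) times the product of all earlier strides. The function names and layer configurations below are illustrative assumptions.

```python
def effective_kernel(k, dilation=1):
    """Kernel extent after inserting (dilation - 1) holes between entries."""
    return k + (k - 1) * (dilation - 1)


def receptive_field(layers):
    """layers: list of (kernel, stride, dilation) tuples, from input to output."""
    rf, jump = 1, 1  # jump = distance in input pixels between adjacent outputs
    for k, s, d in layers:
        rf += (effective_kernel(k, d) - 1) * jump
        jump *= s
    return rf


# Two stacked 3x3 convolutions see a 5x5 region.
print(receptive_field([(3, 1, 1), (3, 1, 1)]))             # 5
# 3x3 conv, 2x2 pooling with stride 2, then another 3x3 conv.
print(receptive_field([(3, 1, 1), (2, 2, 1), (3, 1, 1)]))  # 8
# Two 3x3 convolutions with dilation 2 see a 9x9 region.
print(receptive_field([(3, 1, 2), (3, 1, 2)]))             # 9
```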

边栏推荐

猜你喜欢

20220623 getting started with Adobe Illustrator

phpcms v9手机访问电脑站一对一跳转对应手机站页面插件

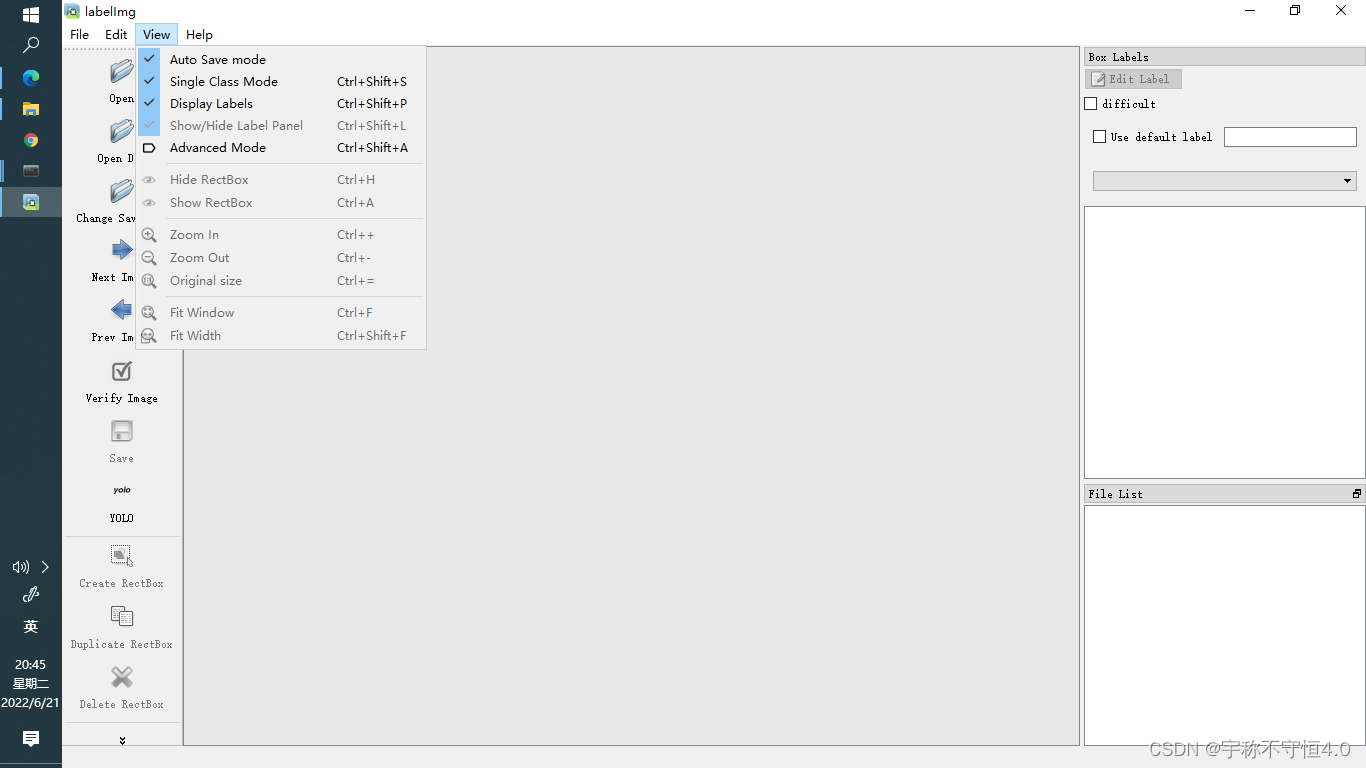

Yolov5 advanced level 2 installation of labelimg

Phpcms applet plug-in version 4.0 was officially launched

Phpcms V9 mobile phone access computer station one-to-one jump to the corresponding mobile phone station page plug-in

Slider verification - personal test (JD)

【MATLAB GUI】 键盘回调中按键识别符查找表

力扣399【除法求值】【并查集】

编辑类型信息

PD快充磁吸移动电源方案

随机推荐

编辑类型信息

phpcms小程序插件api接口升级到4.3(新增批量获取接口、搜索接口等)

Yolov5 advanced III training environment

力扣399【除法求值】【并查集】

外部排序和大小堆相关知识

Notes on setting qccheckbox style

基于SSM的电脑商城

Section IV HQL execution process

Uniapp uses uparse to parse the content of the background rich text editor and modify the uparse style

Isinstance() function usage

Slider verification - personal test (JD)

Docker install redis

phpcms v9后台增加阅读量字段,可任意修改阅读量

读书笔记:SQL 查询中的SQL*Plus 替换变量(DEFINE变量)和参数

Chargement à chaud du fichier XML de l'arbre de comportement

【C】青蛙跳台阶和汉诺塔问题(递归)

Unity WebGL发布无法运行问题

Phpcms V9 adds the reading amount field in the background, and the reading amount can be modified at will

运行时端常用类的介绍

Lagrange multiplier method