当前位置:网站首页>[paper notes] supersizing self supervision: learning to grasp from 50K tries and 700 robot hours

[paper notes] supersizing self supervision: learning to grasp from 50K tries and 700 robot hours

2022-06-26 03:35:00 【See deer (Python version)】

Catalog

- Abstract

- 1. INTRODUCTION

- 2. RELATED WORK

- 3. APPROACH

- 4. RESULTS

- 5. CONCLUSION

Abstract

At present, the robot grasping method based on model free learning uses the manually marked data set to train the model . This will bring some disadvantages .

- Because every object can be caught in many ways , So manually marking the grab position is not a simple task ;

- Human labels are subject to semantics Influence .( As mentioned below : A cup can be taken out from many places , But the manual label is set on the handle by default .)

- Although attempts have been made to use trial and error experiments to train robots , But the amount of data used in such experiments is still very low , So it makes it easy for learners Excessive fitting .

To address these issues , The author puts forward the following methods :

- We added the available training data to the previous work 40 times , So we can get the result of 700 Hours of robot grabbing attempts 50K The data set size of the data point .

- Train a convolutional neural network (CNN), It can predict the grab position without serious over fitting .

- The regression problem is redefined as a problem on the image patch 18 A binary classification .

- A multi-stage learning method is proposed , Make use of what you train at one stage CNN To collect hard negative information in subsequent stages .

1. INTRODUCTION

How to predict the grasping position of this object ?

- Fit these objects 3D Graphics or use 3D Depth sensor , perform 3D Inferentially predict the grab position . However , This method has two disadvantages :(a) fitting 3D The model itself is an extremely difficult problem ;(b) A method based on geometry , The density and mass distribution of the object are neglected , This may be critical for predicting the grab position .

- Use visual recognition to predict the location and configuration of the grab , Because it does not require explicit object modeling . You can create a grab position training data set for hundreds of objects , And use standard machine learning algorithms , Such as CNNs Or an automatic encoder to predict the grab position in the test data . But machine learning of artificial tags , Will be affected by the above two shortcomings .

- Even the largest visual - based data set (2016 year ), Only about 1K An isolated object image ( Only one object is visible , No clutter ).

The author is subject to reinforcement learning (reinforcement learning) And human experience (imitation learning) Inspired by the , We propose a self - monitoring algorithm , Predict the grab position by trial and error .

We think , This method , The training data is much less than the number of model parameters , There must be over fitting , It cannot be extended to new Invisible objects . therefore , What we need is a way to collect hundreds of data points ( Maybe by having robots interact with objects 7 Day by day 24 Hours of interaction ) To learn the meaningful representation of this task .

We propose a large-scale experimental study , It not only greatly increases the amount of data to be mastered in learning , It also provides complete information about whether an object can be grasped at a specific position and angle .

We use this data set on ImageNet I've been pre trained on AlexNet-CNN Fine tune the model , Learn in the full connectivity layer 1800 New parameters of million , Used to predict the grab position .

We don't use regression loss , Instead, the crawl problem is represented as a more than 18 From two angles 18 Binary classification . Inspired by the reinforcement learning paradigm , We also propose a method based on Stage - Course Learning algorithm , There we learn how to master , And use recently learned models to collect more data .

2. RELATED WORK

| Using analytical methods and three-dimensional reasoning to predict the position and configuration of the grab . | You need to model a given object in three dimensions , And the surface friction characteristics and mass distribution of the object . | Perceive and infer 3D models and other attributes , Tathagata comes from RGB or RGBD Camera friction / Quality is an extremely difficult problem . | To solve these problems , People build data sets for crawling . Sample and sort the captured values according to the similarity , To grab instances from existing datasets . |

|---|---|---|---|

| Predictive crawling involves using simulators , Such as Graspit | Sample the capture candidate actions , And sort them by analysis . | However , Questions often arise about how simulated environments reflect the real world . | Some papers have analyzed why a simulated environment cannot be parallel to the highly unstructured real world . |

| Use visual learning to learn directly from RGB or RGB-d Predict the capture position in the image . | Using visual based features ( Edge and texture filter response ), And learn the logistic regression on the synthetic data . Capture data using human annotations in RGB-D Training grab synthesis model on data . | However , As mentioned above , It is not easy to collect training data on a large scale to capture prediction tasks , And there are a few problems . | therefore , Neither of the above methods can be extended to use big data .(2016 year ) |

| Use the robot's own trial and error experience . | Previous work only used hundreds of trial and error runs to train high-capacity deep networks . However, the over fitting and generalization ability are poor . | Use reinforcement learning to learn the mastery attributes on the depth image of a cluttered scene . | The crawling attribute is based on supervoxel segmentation and facet detection . This creates a crawl synthesis process , This method may not be suitable for complex objects . |

We propose an end-to-end self-monitoring phase - learning system , It uses thousands of trial and error runs to learn about deep networks . then , The learned depth network is used to collect more positive and negative and hard negative ( The model is considered to be available , But usually not ) data , This helps the web learn faster .

3. APPROACH

Robot Grasping System

The experiment is in Rethink Robot company Baxter On a robot , Use ROS As our development system .

For retainers , We use a two finger parallel gripper , Maximum width ( open ) by 75 m m 75mm 75mm, Minimum width ( Closed state ) by 37 m m 37mm 37mm.

One KinectV2 Connected to the head of the robot , Can provide 1920 × 1280 1920 \times 1280 1920×1280 Resolution workspace image ( Dark white tabletop ). Besides , One 1280 × 720 1280 \times 720 1280×720 Resolution cameras are connected to each Baxter On the end actuator of , It provides with Baxter Rich images of interactive objects .

For trajectory planning , Extended space tree is used (Expansive Space Tree) Planner . Use these two robot arms to collect data faster .

During the experiment , Human participation is limited to turning on robots , And put objects on the table in any way . In addition to initialization , We have no human involvement in the data collection process .

Gripper Configuration Space and Parametrization

In this paper , We only focus on the gripping force on the plane .

Plane grabbing means Crawl configuration along and perpendicular to the workspace . therefore , The crawl configuration is 3D , ( x , y ) (x,y) (x,y): The position of the grab point on the surface of the table , as well as θ \theta θ: The angle of the grab point .

A. Trial and Error Experiments

The workspace first sets up several objects with different degrees of difficulty , Randomly placed on a table with a dark white background . Then, multiple randomized trials were performed consecutively .

Region of Interest Sampling

Head on Kinect Query desktop images through off the shelf Gaussian mixture (MOG) Background subtraction algorithm Recognition the algorithm recognizes the region of interest in the image .

This is only done to reduce the number of random tests conducted in the open space without objects nearby .

Then, a random region in the image is selected as the region of interest of a specific test example .

Grasp Configuration Sampling

Given a particular region of interest , The robot arm moves over the object 25 centimeter .

Now? , A random point is uniformly sampled from the space of the region of interest . This will be the grasping point of the robot .

To complete the grip configuration , Now in scope ( 0 , π ) (0,\pi) (0,π) Select an angle randomly in the , Because the two finger grips are symmetrical .

Grasp Execution and Annotation

Now given the crawl configuration , The robot arm grabs the object .

Then lift the object 20 centimeter , And mark it as success or failure according to the force sensor reading of the grip .

During the execution of these randomized trials , Images from all cameras 、 Robot arm trajectory and grip history are recorded on disk .

B. Problem Formulation

The grasp synthesis problem is expressed as a problem in a given object I I I When finding a successful crawl configuration ( x S , y S , θ S ) (x_{S},y_{S},\theta_{S}) (xS,yS,θS).

CNN’s INPUT

our CNN The input of is an image patch extracted around the capture point .

In our experiment , The patch we use is projected on the image by the fingertip 1.5 1.5 1.5 times , Also includes context .

The patch size used in the experiment is 380 × 380 380 \times 380 380×380. The size of this patch has been adjusted to 227 × 227 227 \times 227 227×227, This is the training of image network AlexNet Input image size of .

CNN’s OUTPUT

First, learn a binary classifier model , The model divides objects into two categories: grabble or not , Then select the object's grasping angle .

However , The graspability of the image patch is a function of the gripper angle , Therefore, image patches can be marked as graspable and non graspable .

contrary , In our case , Given an image patch , We estimate one 18 Dimensional likelihood vector , Each dimension indicates whether the center of the patch is 0 ° , 10 ° , ⋯ 170 ° 0°, 10°, \cdots170° 0°,10°,⋯170°. therefore , Our problem can be seen as a 18 Binary classification problem .

Testing

Given an image patch , our CNN Output 18 The center of a grasping angle .

During the robot's test time , Given an image , We Sample the grab position , Extract patch input CNN.

For each patch , The output is 18 It's worth , They describe 18 The accessibility score for each of the angles .

C. Training Approach

Data preparation

Given a test data point ( x i , y i , θ i ) (x_{i},y_{i},\theta_{i}) (xi,yi,θi), We use ( x i , y i ) (x_{i},y_{i}) (xi,yi) Extract for the center 380 × 380 380 \times 380 380×380 Patch .

To increase the amount of data the network sees , We used a rotation transform : adopt θ r a n d \theta_{rand} θrand Rotate dataset patch , And mark the corresponding grasping direction as θ i + θ r a n d θ_{i}+θ_{rand} θi+θrand. Some of these patches can be found in Figure 4 see .

Network Design

our CNN, Is a standard network architecture .

Our first five convolution layers are taken from pre trained AlexNet. We also use two fully connected layers , There were 4096 And 1024 Neurons .

These two fully connected layers ,fc6 and fc7, Use Gaussian initialization for training .

Loss Function

L B = ∑ i = 1 B ∑ j = 1 N = 18 δ ( j , θ i ) ⋅ s o f t m a x ( A j i , l i ) L_{B} = \sum_{i=1}^{B} \sum_{j=1}^{N=18} \delta(j, θ_{i})·softmax(A_{ji}, l_{i}) LB=i=1∑Bj=1∑N=18δ(j,θi)⋅softmax(Aji,li)

among , δ ( j , θ i ) = 1 \delta(j,θ_{i})=1 δ(j,θi)=1 when , θ i θ_{i} θi Corresponding to the first j j j Classes .

Please note that , The last layer of the network contains 18 A binary layer , Instead of a multi-layer classifier to predict the final crawling score .

therefore , For a single patch , Only the loss of the test corresponding class can be back propagated .

D. Multi-Staged Learning

At this stage of data collection , We use both previously seen objects and new objects . This ensures that in the next iteration , The robot corrects the incorrect grasp mode , At the same time, strengthen the correct grasp mode .

It should be noted that , In each object grab test at this stage , Will be randomly selected 800 Patches , And evaluate the depth network learned from the previous iteration .

This creates a 800 × 18 800\times18 800×18 A priori matrix of grasping ability , Where input ( i , j ) (i,j) (i,j) Corresponding to the first i i i Patch No j j j Network activation on classes . Crawl execution is now determined by the importance sampling of the crawl capability prior matrix .

suffer Data aggregation technology Inspired by the , In the k k k In the training process of the second iteration , Data sets D k D_{k} Dk from { D k } = { D k − 1 , Γ d k } \{D_{k}\}=\{D_{k−1},\Gamma d_{k}\} { Dk}={ Dk−1,Γdk} give , among d k d_{k} dk Is to use the k − 1 k−1 k−1 The data collected by the second iteration model .

Be careful , D 0 D_{0} D0 Is to grab data sets randomly , The first 0 0 0 This iteration is simply in D 0 D_{0} D0 Training on .

As a design option , Important factors Γ \Gamma Γ Keep for 3.

4. RESULTS

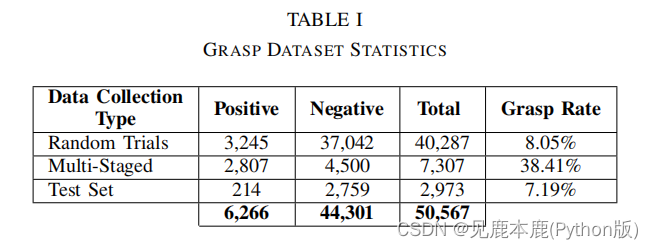

A. Training dataset

B. Testing and evaluation setting

adopt 3k This physical robot interaction collects 15 A novel 、 Multiple locations of different test objects .

This test set is balanced by random sampling from collected robot interactions .

The precision measure used for evaluation is binary classification , That is, a patch is given in the test set and the grab angle is executed , To predict whether the object is captured .

The evaluation of this method preserves two important aspects of crawling :(a) It ensures the exact same comparison of test data , This is not possible with real robot experiments ;(b) The data comes from a real robot , This means that methods that work well on this test set should work well on real robots .

Our approach based on deep learning , Then multi-stage reinforcement , On this test set 79.5 % 79.5\% 79.5% The accuracy of .

C. Comparison with heuristic baselines

by RGB A heuristic algorithm for image input task modification , Encodes obvious crawl rules .

- Grab the center of the patch . This rule is implicit in our patch based crawling formula .

- Master the smallest object width . This is achieved by target segmentation and eigenvector analysis .

- Don't catch too thin objects , Because the gripper is not completely closed .

The maximum accuracy obtained is 62.11 % 62.11\% 62.11%, It is obviously lower than the accuracy of this method .

D. Comparison with learning based baselines

We are in the following two baseline Used in HoG features , Because it preserves the rotation variance , This is important for grabbing .

- k Nearest neighbor (kNN): For each element in the test set , The elements belonging to the same angle class in the training set are based on kNN The classification of . Different k The maximum accuracy in the case of value is 69.4 % 69.4\% 69.4%.

- linear SVM: by 18 Each of the angle boxes learns 18 A binary support vector machine . After selecting the regularization parameters through verification , The maximum accuracy obtained is 73.3 % 73.3\% 73.3%.

E. Ablative analysis

Effects of data

Adding more data will certainly help improve accuracy . This growth is even more remarkable , Until about 20K Data points , After that, the growth rate was very small .

Effects of pretraining

Our experiments show that , This improvement is remarkable : The accuracy rate from the network is 64.6 % 64.6\% 64.6% To the pre training network 76.9 % 76.9\% 76.9%. This means that the visual features learned from the image classification task assist the task of capturing objects

Effects of multi-staged learning

After a stage of reinforcement , The test accuracy ranges from 76.9 % 76.9\% 76.9% Up to 79.3 % 79.3\% 79.3%. This shows the effect of hard negativity in training , Only 2K Grab ratio 20K Random grab improves more . However , stay 3 After two stages , The accuracy is improved to 79.5 % 79.5\% 79.5%.

Effects of data aggregation

We noticed that , Without aggregating data , And only use the data of the current stage to train the capture model , The accuracy is from 76.9 % 76.9\% 76.9% Down to 72.3 % 72.3\% 72.3%.

F. Robot testing results

Re-ranking Grasps

therefore , In order to consider inaccuracy , We're right 10 Grab for sampling , And reorder them based on neighborhood analysis : Given an instance of the top patch ( P t o p K i , θ t o p K i ) (P^{i}_{topK},\theta^{i}_{topK}) (PtopKi,θtopKi), We go further in P t o p K i P^{i}_{topK} PtopKi Neighborhood sampling of 10 A patch . The average value of the best angle score of the neighborhood patch is assigned as ( P t o p K i , θ t o p K i ) (P^{i}_{topK},\theta^{i}_{topK}) (PtopKi,θtopKi) The new patch score of the defined crawl configuration R t o p K i R^{i}_{topK} RtopKi. Then execute with maximum R t o p K i R^{i}_{topK} RtopKi Associated crawl configuration .

Grasp Result

Please note that , Some gripping , For example, the red guns in the second row are reasonable , But still not successful , Because the size of the grip is incompatible with the width of the object .

Clutter Removal

We tried 5 Attempts to , In order to remove the extracted from the novel and previously seen objects 10 An object . On average, ,Baxter stay 26 This interaction successfully cleared the confusion .

5. CONCLUSION

A future extension will be in RGB-D Training capture model in data domain , This brings challenges to the proper use of depth in deep networks .

Besides , The three-dimensional information of the object can be extended to predict non planar grasping .

边栏推荐

- Click event

- jupyter notebook的插件安装以及快捷键

- Google recommends using kotlin flow in MVVM architecture

- redux-thunk 简单案例,优缺点和思考

- [reading papers] fbnetv3: joint architecture recipe search using predictor training network structure and super parameters are all trained by training parameters

- P2483-[template]k short circuit /[sdoi2010] Magic pig college [chairman tree, pile]

- Inkscape如何将png图片转换为svg图片并且不失真

- 【论文笔记】Supersizing Self-supervision: Learning to Grasp from 50K Tries and 700 Robot Hours

- Request object, send request

- Business process diagram design

猜你喜欢

QT compilation error: unknown module (s) in qt: script

分割、柱子、list

Problems encountered in project deployment - production environment

Lumen Analysis and Optimization of ue5 global Lighting System

UE5全局光照系统Lumen解析与优化

经典模型——NiN&GoogLeNet

Inkscape如何将png图片转换为svg图片并且不失真

redux-thunk 简单案例,优缺点和思考

Request object, send request

Utonmos: digital collections help the inheritance of Chinese culture and the development of digital technology

随机推荐

Double carbon bonus + great year of infrastructure construction 𞓜 deep ploughing into the field of green intelligent equipment for water conservancy and hydropower

经典模型——ResNet

todolist未完成,已完成

MySQL增删查改(进阶)

P2483-[模板]k短路/[SDOI2010]魔法猪学院【主席树,堆】

Vulhub replicate an ActiveMQ

Notes on the 3rd harmonyos training in the studio

Lumen Analysis and Optimization of ue5 global Lighting System

Scratch returns 400

ArrayList#subList这四个坑,一不小心就中招

360 秒了解 SmartX 超融合基础设施

js array数组json去重

The role of children's programming in promoting traditional disciplines in China

The "eye" of industrial robot -- machine vision

MySQL开发环境

Worm copy construction operator overload

进度条

工作室第3次HarmonyOS培训笔记

解析创客空间机制建设的多样化

虫子 拷贝构造 运算符重载