当前位置:网站首页>Detr3d multi 2D picture 3D detection framework

Detr3d multi 2D picture 3D detection framework

2022-06-26 03:51:00 【AI vision netqi】

Recently, there has been a wave in the circle of automatic driving BEV(Bird's Eye View, Aerial view ) Under the trend of target detection for cameras , And one of the jobs that set off this trend is that we MARS Lab And MIT, TRI There are also ideal automobile cooperation CORL2021 The paper DETR3D.

Now let's introduce our paper by Mr. Chen Kuo :DETR3D: 3D Object Detection from Multi-view Images via 3D-to-2D Queries[1].

Do... In the look around camera image of automatic driving 3D Target detection is a thorny problem , For example, how to get from a monocular camera 2D Forecast in information 3D The object 、 The shape and size of the object change with the distance from the camera 、 How to fuse information between different cameras 、 How to deal with objects truncated by adjacent cameras, etc .

take Perspective View Turn into BEV Characterization is a good solution , Mainly reflected in the following aspects :

- BEV It is a unified and complete representation of the global scene , The size and orientation of an object can be expressed directly ;

- BEV It is easier to do sequential multi frame fusion and multi-sensor fusion ;

- BEV More conducive to target tracking 、 Trajectory prediction and other downstream tasks .

DETR3D programme

DETR3D The design of the model mainly includes three parts :Encoder,Decoder and Loss.

Encoder

stay nuScenes Data set , Each sample contains 6 A ring view camera picture . We use it ResNet Go to each picture encode To extract features , And then one more FPN Output 4 layer multi-scale features.

Decoder

Detection head CO contained 6 layer transformer decoder layer. Be similar to DETR, We preset 300/600/900 individual object query, Every query yes 256 Dimensional embedding. be-all object query Predicted by a fully connected network at BEV In the space 3D reference point coordinate (x, y, z), The coordinates go through sigmoid The normalized function represents the relative position in space .

On each floor layer In , be-all object query Between doing self-attention To interact with each other to obtain global information and avoid multiple query Converge to the same object .object query And then do with the image features cross-attention: Each one query Corresponding 3D reference point Project to the picture coordinates through the camera's internal and external parameters , Use linear interpolation to sample the corresponding multi-scale image features, If the projection coordinate falls outside the range of the picture, fill in zero , After that sampled image features To update object queries.

after attention Updated object query Through two MLP Network to predict the corresponding objects class and bounding box Parameters of . In order to make the network learn better , We always predict bounding box The central coordinate of is relative to reference points Of offset(△x,△y,△z) To update reference points Coordinates of .

Updated per layer object queries and reference points As the next layer decoder layer The input of , Calculate and update again , Total iterations 6 Time .

Loss

The design of loss function is mainly affected by DETR Inspired by the , We are in all object queries The predicted detection frame and all ground-truth bounding box The Hungarian algorithm is used for bipartite graph matching , Find out what makes loss The smallest optimal match , And calculate classification focal loss and L1 regression loss.

experimental result

We are based on FCOS3D In the process of the training backbone Training , Before use NMS and test-time augmentation In the case of FCOS3D Result .

We are based on DD3D In the process of the training backbone Training , stay nuScenes test set Got the best results on .

It is always a difficult problem to detect the truncated objects in the overlapped parts of adjacent look around cameras ,DETR3D By directly in BEV This method avoids the post-processing between cameras , It has effectively alleviated this problem . We are in the overlap mAP exceed FCOS3D about 4 A little bit .

Recent related work

DETR3D In the last year 10 Month in nuScenes I have reached the first place in the . In recent months nuScenes There are many on the list of BEV Next do visual 3D The work of target detection , It seems that our work has inspired colleagues in many fields , Everyone is trying to explore in this direction .

Let's compare DETR3D And the recent work and think about the following questions , I hope to give you some inspiration .

- How to convert a look around image into BEV?

stay DETR3D、BEVFormer[2] in , It's through reference points And the physical meaning of camera parameters features, This has the advantage of less computation , adopt FPN Of mutli-scale The structure and deformable detr Of learned offset, Even if there is only one or several reference points You can also get enough receptive field information . The disadvantage is BEV Same as polar ray Upper reference point The image features sampled by projection are the same , The image lacks depth information , The network needs to distinguish between the sampled information and the current location in the subsequent feature aggregation reference points whether match.

stay BEVDet[3] in , The transformation process follow 了 lift-splat-shoot[4] Methods , That is to say image feature map One for each position of the depth distribution, then feature Multiplied by the depth probability lift To BEV Next . This requires a lot of computation and video memory , Because there is no real depth label , So what is actually predicted is a probability that has no exact physical meaning . And a considerable part of the content in the picture does not contain objects , Will all feature Participation in the calculation may be slightly redundant .

- How to choose BEV Form of expression ?

stay DETR3D in , We don't explicitly express the whole BEV, And by sparse Of object query To represent . The most significant benefit is that it saves memory and computation . And in the BEVDet and BEVFormer in , They created a dense Of BEV feature, Although video memory has been added , But it's easier to do BEV space Under the data augmentation, Binary image BEVDet The same can be added to BEV features Of encoding, Three to adapt to a variety of 3D detection head(BEVDet It was used centerpoint,BEVFormer It was used deformable detr).

边栏推荐

- Is the compass app regular? Is it safe or not

- "Renegotiation" agreement

- What does virtualization mean? What technologies are included? What is the difference with private cloud?

- WebRTC系列-网络传输之7-ICE补充之偏好(preference)与优先级(priority)

- ABP framework Practice Series (III) - domain layer in depth

- 等保备案是等保测评吗?两者是什么关系?

- JS array array JSON de duplication

- User control custom DependencyProperty

- 169. most elements

- 2022.6.25-----leetcode.剑指offer.091

猜你喜欢

Open camera anomaly analysis (I)

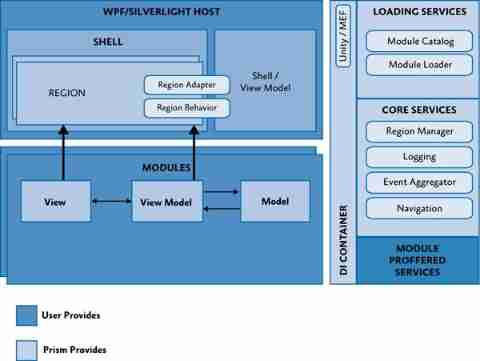

Prism framework

Machine learning notes - trend components of time series

DETR3D 多2d图片3D检测框架

Classic model – RESNET

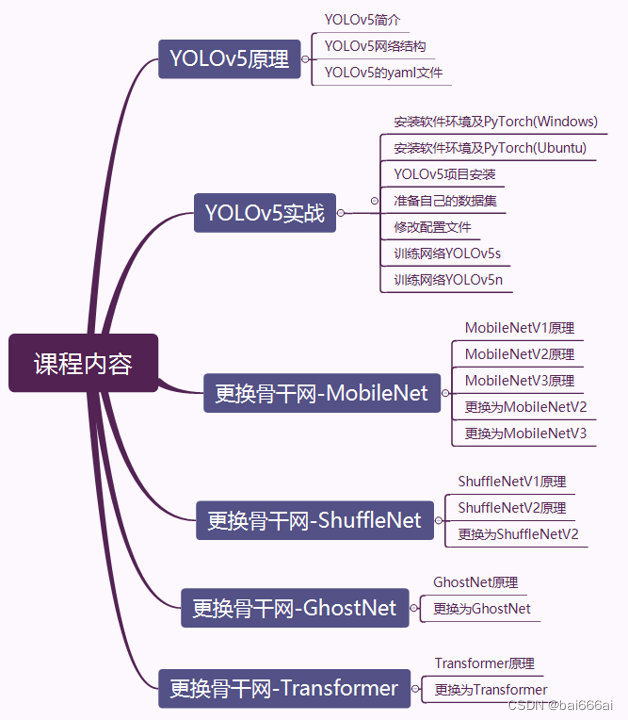

YOLOv5改进:更换骨干网(Backbone)

【LOJ#6718】九个太阳「弱」化版(循环卷积,任意模数NTT)

Solve the problem that the uniapp plug-in Robin editor reports an error when setting the font color and background color

阿里云函数计算服务一键搭建Z-Blog个人博客

Uni app custom selection date 1 (September 16, 2021)

随机推荐

[Flink] Flink batch mode map side data aggregation normalizedkeysorter

Dynamic segment tree leetcode seven hundred and fifteen

Three level menu applet

MySQL高級篇第一章(linux下安裝MySQL)【下】

Concept and implementation of QPS

mysql存儲過程

在出海获客这件事上,数字广告投放之外,广告主还能怎么玩儿?

Prism framework project application - Navigation

Uni app QR code scanning and identification function

【好书集锦】从技术到产品

C # knowledge structure

优化——多目标规划

ABP framework Practice Series (III) - domain layer in depth

"Renegotiation" agreement

bubble sort

面了个字节拿25k出来的测试,算是真正见识到了基础的天花板

刷题记录Day01

外包干了四年,人直接废了。。。

[Flink] Flink source code analysis - creation of jobgraph in batch mode

Uni app custom navigation bar component