(Note) Setting the learning rate for the Adam optimizer

2022-07-23 21:06:00 【[email protected]】

A note on a problem I came across: setting the learning rate for Adam.

The adaptive learning rate of Adam, a commonly used neural network optimizer, is not truly adaptive.

From a statistical point of view, Adam's adaptation works by rescaling the gradient according to running statistics, but the result still depends on the learning rate set beforehand. If the learning rate is set too high, the model may diverge, converge slowly, or get stuck at a poor local minimum, because steps that are too large jump over the optimal or near-optimal solutions during optimization. As a rule of thumb, do not start with a large learning rate; choose it according to the task. The usual default is 1e-3.
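For reference, the standard Adam update can be written as below. The notation is the usual one from the Adam paper and is not defined in this note: $g_t$ is the gradient at step $t$, $\alpha$ is the learning rate set in advance, $\beta_1$ and $\beta_2$ are the decay rates of the two running averages, and $\epsilon$ is a small constant for numerical stability.

$$
\begin{aligned}
m_t &= \beta_1 m_{t-1} + (1 - \beta_1)\, g_t \\
v_t &= \beta_2 v_{t-1} + (1 - \beta_2)\, g_t^2 \\
\hat{m}_t &= \frac{m_t}{1 - \beta_1^t}, \qquad \hat{v}_t = \frac{v_t}{1 - \beta_2^t} \\
\theta_t &= \theta_{t-1} - \alpha\, \frac{\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon}
\end{aligned}
$$

The statistics $\hat{m}_t$ and $\hat{v}_t$ only normalize the gradient; every update is still multiplied by $\alpha$, so the overall step scale is fixed by the learning rate chosen up front.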

In addition, the loss functions of neural networks are generally non-convex, whereas gradient descent and related optimization methods were designed mainly with convex functions in mind. Learning rates in deep learning are therefore usually much smaller than in traditional machine learning. If the learning rate is set too high, Adam only rescales the gradient; it does not change the base learning rate. The loss then fluctuates widely, and the model cannot converge.
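The effect of an oversized learning rate can be seen even on a toy problem. The sketch below is only an illustration under stated assumptions: the 1-D loss `f(x) = x**2`, the starting point, the helper name `adam_losses`, and the two learning rates are all made up for the example, and a real network's loss is of course not this simple.

```python
import math


def adam_losses(lr, steps=50, x0=1.0, beta1=0.9, beta2=0.999, eps=1e-8):
    """Run Adam on the toy loss f(x) = x**2 and return the loss history.

    The toy loss and all default values here are illustrative assumptions.
    """
    x, m, v = x0, 0.0, 0.0
    losses = [x * x]  # loss before any update
    for t in range(1, steps + 1):
        g = 2.0 * x  # gradient of x**2
        m = beta1 * m + (1 - beta1) * g      # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g  # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)         # bias correction
        v_hat = v / (1 - beta2 ** t)
        # The normalized gradient is still scaled by the fixed lr.
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
        losses.append(x * x)
    return losses


small = adam_losses(lr=1e-3)  # the common default
large = adam_losses(lr=5.0)   # deliberately far too large

print("lr=1e-3, first losses:", small[:4])
print("lr=5.0,  first losses:", large[:4])
```

On the first step Adam's normalized gradient has magnitude close to 1, so the parameter moves by roughly `lr`. With `lr=5.0` the first update takes `x` from 1 to about -4 and the loss jumps from 1 to roughly 16, while with `lr=1e-3` the loss decreases slowly and steadily.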

- Compared with SGD, Adam's lr is more uniform across tasks.

That is, different tasks can be tuned with the same lr, which makes it more general-purpose;

- Learning rate decay is very important.

Even though, according to the principle in the paper, the lr adapts automatically and needs no tuning, performance still improves substantially after one decay;

- The impact of lr decay is far smaller than with SGD.

Typically, SGD on CV problems uses two lr drops, and each one gives a considerable gain, whereas for Adam the drops after the first have minimal effect. In this respect Adam even falls behind SGD in long multi-scale training runs;
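A step-decay schedule of the kind described above can be sketched in a few lines. This is a minimal illustration, not a recipe from the original discussion: the function name `step_decay_lr`, the milestone epochs 30 and 60, and the decay factor 0.1 are assumptions chosen only to show two lr drops.

```python
def step_decay_lr(base_lr, epoch, milestones=(30, 60), gamma=0.1):
    """Return the learning rate for `epoch` under a step-decay schedule.

    The lr is multiplied by `gamma` once for every milestone already reached.
    Milestones and gamma are illustrative assumptions.
    """
    drops = sum(1 for m in milestones if epoch >= m)
    return base_lr * gamma ** drops


# Two drops over training, starting from the common default of 1e-3.
for epoch in (0, 29, 30, 59, 60):
    print(epoch, step_decay_lr(1e-3, epoch))
```

In PyTorch, `torch.optim.lr_scheduler.MultiStepLR` applies the same kind of schedule to an optimizer's learning rate.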

Reference:

https://www.zhihu.com/question/387050717

Copyright notice

This article was written by [[email protected]]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/204/202207232025565679.html

边栏推荐

- Himawari-8 数据介绍及下载方法

- Major upgrade of openim - group chat reading diffusion model release group management function upgrade

- 信号的理解

- 确定括号序列中的一些位置

- If the order is not paid within 30 minutes, it will be automatically cancelled

- MySQL(3)

- [Yunxiang book club No. 13] Chapter IV packaging format and coding format of audio files

- Green-Tao 定理的证明 (1): 准备, 记号和 Gowers 范数

- Ssm+mysql to realize snack mall system (e-commerce shopping)

- 1062 Talent and Virtue

猜你喜欢

Himawari-8 data introduction and download method

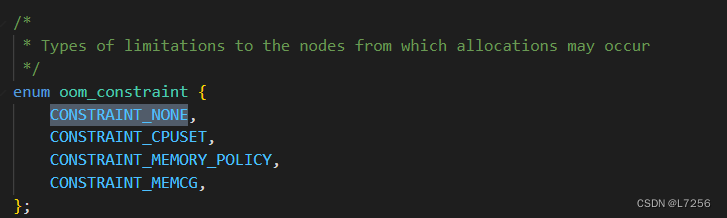

Oom mechanism

网络学习型红外模块,8路发射独立控制

High numbers | calculation of double integral 4 | high numbers | handwritten notes

STM32C8t6 驱动激光雷达实战(二)

jsp+ssm+mysql实现的租车车辆管理系统汽车租赁

One of QT desktop whiteboard tools (to solve the problem of unsmooth curve -- Bezier curve)

STM32c8t6驱动激光雷达(一)

1309_STM32F103上增加GPIO的翻转并用FreeRTOS调度测试

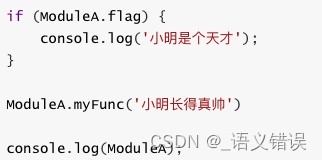

模块化开发

随机推荐

高数下|二重积分的计算2|高数叔|手写笔记

High numbers | calculation of double integral 4 | high numbers | handwritten notes

Understanding of signals

Install under win7-vs2012 Net framework work

一道golang中关于for range常见坑的面试题

Ssm+mysql to realize snack mall system (e-commerce shopping)

微服务架构 VS 单体服务架构【华为云服务在微服务模式里可以做什么】

STM32c8t6驱动激光雷达(一)

1061 Dating

确定括号序列中的一些位置

1063 Set Similarity

如何在面試中介紹自己的項目經驗

Network learning infrared module, 8-way emission independent control

Oom mechanism

Chapter 3 business function development (creating clues)

2022.7.11mySQL作业

[cloud co creation] what magical features have you encountered when writing SQL every day?

TCP half connection queue and full connection queue (the most complete in History)

Green Tao theorem (4): energy increment method

WinDbg实践--入门篇