
R language: classifying the iris data set with logistic regression, ANOVA, outlier analysis and visualization

2022-07-25 02:01:00 【Extension Research Office】

Full text link: http://tecdat.cn/?p=27650

Original source: the Tribe WeChat official account.

Abstract

This article explores the relationships among three variables in Fisher and Anderson's iris dataset, in particular a logistic regression of the dependent variable species, restricted to the levels virginica and versicolor, on the predictors petal length and petal width. One-way ANOVA and data visualization both show that one level of the dependent variable, I. setosa, is easily linearly separated from the other two because its mean and variance are clearly distinct, so it is not of interest for the logistic regression.
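A minimal sketch of the one-way ANOVA mentioned above, written against R's built-in `iris` data frame. Using `Petal.Length` as the response is an illustrative assumption; the article does not show which variables it tested. The sketch prints the overall F test, the per-species means and standard deviations, and Tukey's pairwise comparisons, then checks whether setosa's petal lengths overlap with those of the other two species.

```r
# One-way ANOVA of petal length on species, using R's built-in iris data
fit.aov <- aov(Petal.Length ~ Species, data = iris)
print(summary(fit.aov))  # overall F test: do the three species means differ?

# Species means and standard deviations of petal length
species.means <- tapply(iris$Petal.Length, iris$Species, mean)
species.sds <- tapply(iris$Petal.Length, iris$Species, sd)
print(round(rbind(mean = species.means, sd = species.sds), 3))

# Tukey HSD: pairwise differences in means with family-wise 95% intervals
print(TukeyHSD(fit.aov))

# Compare the petal-length range of setosa with that of the other two species
setosa.max <- max(iris$Petal.Length[iris$Species == "setosa"])
others.min <- min(iris$Petal.Length[iris$Species != "setosa"])
cat("largest setosa petal length:", setosa.max,
    "| smallest versicolor/virginica petal length:", others.min, "\n")
```

If the largest setosa value is below the smallest value of the other two species, a single threshold on petal length already separates setosa perfectly, which is why it adds nothing to the logistic regression.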

Introduction

A first look at the iris data raises immediate questions about the nature of the dataset itself: why collect such simple data? In fact, one of our first intuitions was to ask whether, given the information in the dataset, we could carry out the relevant analysis and diagnostics and build a model that classifies new observations.

We were surprised and pleased to learn that the dataset is commonly analyzed for exactly this purpose. Its most common use is in machine learning, in particular classification and pattern-recognition applications. We began examining the data with the tools we have learned so far: namely, a logistic regression on two of the iris species, I. virginica and I. versicolor (coded Y = 1 and Y = 0, respectively). The third species, I. setosa, is excluded because it is highly separated from the other two species in all dimensions.
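One way to build the two-species data set used in the rest of the article. The object name `irises` matches the code shown later, but the article does not show how the author created it, so this construction is an assumption. `droplevels()` removes the empty setosa level, which matters because `glm()` with `family = binomial` treats the first factor level as 0 and every other level as 1.

```r
# Drop setosa and its now-empty factor level, keeping versicolor and virginica
irises <- droplevels(subset(iris, Species != "setosa"))
print(levels(irises$Species))  # "versicolor" "virginica"

# With a factor response, glm(family = binomial) models the probability of the
# second level: versicolor is the failure (0) and virginica the success (1)
y <- as.integer(irises$Species == "virginica")
print(table(Species = irises$Species, Y = y))
```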

Method

In this situation, logistic regression is more appropriate than a chi-square test or Fisher's exact test, because we have a binary dependent variable and multiple predictors. It also lets us explicitly quantify the strength of each effect while controlling for the other variables (through the odds ratio for each parameter).
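A minimal sketch of the model fit, assuming the formula `Species ~ Petal.Length + Petal.Width` on the two-species subset; the article names these predictors but does not show its fitting call. `drop1(..., test = "Chisq")` runs a likelihood-ratio test for each predictor with the other one kept in the model, which is the "controlling for other variables" property described above.

```r
# Two-species subset (setosa removed), as described in the introduction
irises <- droplevels(subset(iris, Species != "setosa"))

# Logistic regression: log(p / (1 - p)) = b0 + b1 * Petal.Length + b2 * Petal.Width,
# where p = P(virginica)
logit.fit <- glm(Species ~ Petal.Length + Petal.Width, data = irises, family = binomial)
print(summary(logit.fit))

# Likelihood-ratio test for each predictor, adjusting for the other one
print(drop1(logit.fit, test = "Chisq"))
```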

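library(splines)  # provides bs(), the B-spline basis used below

# Residuals against the linear predictor of the fitted logistic model
plot(predict(logit.fit), residuals(logit.fit), xlab = "Linear predictor", ylab = "Residuals")

# Smooth the residuals with a regression on an 8-df B-spline basis
rl = lm(residuals(logit.fit) ~ bs(predict(logit.fit), 8))
# rl = loess(residuals(logit.fit) ~ predict(logit.fit))  # alternative smoother

# Smoothed values with approximate +/- 2 standard-error bars at each point
y = predict(rl, se.fit = TRUE)
segments(predict(logit.fit), y$fit + 2 * y$se.fit, predict(logit.fit), y$fit - 2 * y$se.fit)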

Results

We fitted a logistic model; the overall model and parameter estimates are as follows:

Looking at the odds ratios is an effective way to summarize what the parameter estimates mean. The intercept term is clearly not particularly interesting, because the data point (0, 0) is theoretically impossible and lies far outside the range of the data we collected.

β1 and β2 are more interesting than the intercept β0: each gives the factor by which the odds that a plant belongs to the species I. virginica are multiplied for a one-unit increase in the corresponding variable, with the other variable held constant. Here, increasing petal width clearly has a huge effect on the odds of classifying a plant as I. virginica: its odds ratio is about 110 times that of petal length. Moreover, neither odds ratio's 95% confidence interval includes 1, so we can conclude that both effects are statistically significant.
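The odds ratios and their 95% confidence intervals come from exponentiating the coefficients and the interval endpoints. This sketch refits the same assumed model so it runs on its own and uses Wald intervals from `confint.default()`; the article does not say which interval type it used, and profile-likelihood intervals from `confint()` would differ slightly. The ratio of the two odds ratios appears to be the basis of the "about 110 times" comparison.

```r
irises <- droplevels(subset(iris, Species != "setosa"))
logit.fit <- glm(Species ~ Petal.Length + Petal.Width, data = irises, family = binomial)

# Odds ratios with 95% Wald intervals: exp(estimate) and exp(estimate +/- 1.96 * SE)
or.table <- exp(cbind(OR = coef(logit.fit), confint.default(logit.fit, level = 0.95)))
print(round(or.table, 3))

# How many times larger the petal-width odds ratio is than the petal-length one
or.ratio <- or.table["Petal.Width", "OR"] / or.table["Petal.Length", "OR"]
print(or.ratio)

# TRUE where the 95% interval lies entirely above or entirely below 1
excludes.one <- or.table[-1, 2] > 1 | or.table[-1, 3] < 1
print(excludes.one)
```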


library(ggplot2)

# Plot petal width against petal length, coloured by species
ggplot(irises, aes(x = Petal.Width, y = Petal.Length, colour = Species)) +
  geom_point() +
  ggtitle("Iris classification")

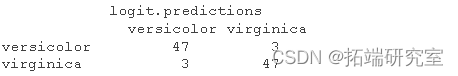

Using the coefficient estimates from the model, we can set a criterion, a linear discriminant, that best separates the data. The accuracy of the linear discriminant is given in the following confusion matrix:

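# Predicted class: a positive linear predictor (log-odds) means P(virginica) > 0.5
logit.predictions <- ifelse(predict(logit.fit) > 0, 'virginica', 'versicolor')

# Confusion matrix: actual species (rows) against predicted species (columns)
table(irises$Species, logit.predictions)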
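The linear discriminant described above is the set of points where the fitted log-odds equal 0 (fitted probability 0.5), which is a straight line in the petal-width/petal-length plane. Here is a base-graphics sketch that draws it over the data, refitting the same assumed model; the colours and the use of base graphics instead of ggplot2 are illustrative choices.

```r
irises <- droplevels(subset(iris, Species != "setosa"))
logit.fit <- glm(Species ~ Petal.Length + Petal.Width, data = irises, family = binomial)
b <- coef(logit.fit)

# The fitted probability is 0.5 exactly where b0 + b1*Length + b2*Width = 0.
# Solving for Length gives the line Length = -b0/b1 - (b2/b1) * Width.
boundary.intercept <- -b[["(Intercept)"]] / b[["Petal.Length"]]
boundary.slope <- -b[["Petal.Width"]] / b[["Petal.Length"]]

cols <- c("blue", "red")[as.integer(irises$Species)]  # versicolor blue, virginica red
plot(irises$Petal.Width, irises$Petal.Length, col = cols, pch = 19,
     xlab = "Petal.Width", ylab = "Petal.Length", main = "Iris classification")
abline(a = boundary.intercept, b = boundary.slope, lty = 2)
legend("topleft", legend = levels(irises$Species), col = c("blue", "red"), pch = 19)
```

Assuming the petal-length coefficient is positive, points above the dashed line are classified as virginica; the points on the wrong side of the line are the off-diagonal entries of the confusion matrix.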

Diagnostics

By examining the residuals and the influence of individual data points, we identified several potentially unusual observations:

Among all the potentially problematic observations, we note that observation 57 may be an outlier. The diagnostic plots show the characteristic pattern of logistic regression, including the two distinct curves in the plot of residuals against predicted values. Observation 57 appears in every diagnostic plot, but fortunately it does not lie beyond the Cook's distance contours.
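A sketch of the influence diagnostics described above, using standard functions from the `stats` package. The cut-offs used to flag observations (leverage above 2p/n, absolute standardized residual above 2, Cook's distance above 0.5) are common rules of thumb chosen here for illustration, not values taken from the article. `subset()` keeps the original row names (51 to 150), so flagged points are labelled by their row in the full `iris` data, which may not match the article's numbering of "observation 57".

```r
irises <- droplevels(subset(iris, Species != "setosa"))
logit.fit <- glm(Species ~ Petal.Length + Petal.Width, data = irises, family = binomial)

cook <- cooks.distance(logit.fit)  # influence of each point on the fitted coefficients
lev <- hatvalues(logit.fit)        # leverage (diagonal of the weighted hat matrix)
rstd <- rstandard(logit.fit)       # standardized deviance residuals

# Flag points with illustrative rule-of-thumb cut-offs (assumptions, not the article's)
p <- length(coef(logit.fit))
n <- nrow(irises)
flagged <- which(lev > 2 * p / n | abs(rstd) > 2 | cook > 0.5)
print(round(cbind(cook = cook, leverage = lev, std.resid = rstd)[flagged, , drop = FALSE], 3))

# Standard diagnostic plots; the residuals-vs-leverage panel draws
# Cook's distance contours at 0.5 and 1 by default
op <- par(mfrow = c(2, 2))
plot(logit.fit)
par(op)
```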

Conclusion and discussion

In this case, the logistic model is instructive, because it shows how effectively data can be classified into a binary dependent variable on the basis of several predictors. As expected, the model is most uncertain where the values in a given dimension are close to the mean, that is, at the boundary between the data of one species and the data of the other. It would be interesting to consider whether the model can be improved, or whether a different model suits the data better; perhaps a k-nearest-neighbours method is needed for this classification problem. In any case, a misclassification rate of 6% is actually quite good, and more data would likely improve this figure.
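The 6% figure can be checked, and the k-nearest-neighbours idea tried, with a short sketch. It compares the logistic rule's in-sample and leave-one-out error with leave-one-out k-NN from the `class` package, a recommended package distributed with R. The choice of k = 5 and the standardization of the two predictors are assumptions for illustration; an error rate computed on the training data alone tends to be optimistic, which is why the leave-one-out versions are included.

```r
library(class)  # provides knn.cv() for leave-one-out k-NN

irises <- droplevels(subset(iris, Species != "setosa"))
model.formula <- Species ~ Petal.Length + Petal.Width

# In-sample error of the logistic rule (predict virginica when the log-odds > 0)
logit.fit <- glm(model.formula, data = irises, family = binomial)
in.sample.pred <- ifelse(predict(logit.fit) > 0, "virginica", "versicolor")
in.sample.error <- mean(in.sample.pred != irises$Species)

# Leave-one-out error of the same rule: refit without row i, then predict row i
loo.pred <- sapply(seq_len(nrow(irises)), function(i) {
  fit.i <- glm(model.formula, data = irises[-i, ], family = binomial)
  if (predict(fit.i, newdata = irises[i, ]) > 0) "virginica" else "versicolor"
})
loo.error <- mean(loo.pred != irises$Species)

# Leave-one-out 5-NN on the standardized petal measurements
set.seed(1)  # knn.cv() breaks ties at random
X <- scale(irises[, c("Petal.Length", "Petal.Width")])
knn.pred <- knn.cv(train = X, cl = irises$Species, k = 5)
knn.error <- mean(knn.pred != irises$Species)

cat("logistic, in-sample error:    ", in.sample.error, "\n")
cat("logistic, leave-one-out error:", loo.error, "\n")
cat("5-NN, leave-one-out error:    ", knn.error, "\n")
```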

Self-test questions

Diagnosis of Depression in Primary Care

To study factors related to the diagnosis of depression in primary care, 400 patients were randomly selected and the following variables were recorded:

DAV: Diagnosis of depression in any visit during one year of care.

0 = Not diagnosed

1 = Diagnosed

PCS: Physical component of SF-36 measuring health status of the patient.

MCS: Mental component of SF-36 measuring health status of the patient.

BECK: The Beck depression score.

PGEND: Patient gender

0 = Female

1 = Male

AGE: Patient’s age in years.

EDUCAT: Number of years of formal schooling.

The response variable is DAV (0 = not diagnosed, 1 = diagnosed), and it is recorded in the first column of the data. The data are stored in the file final.dat and are available from the course web site. Perform a multiple logistic regression analysis of these data using SAS or any other statistical package. This includes estimation, hypothesis testing, model selection, residual analysis and diagnostics. Explain your findings in a 3- to 4-page report. Your report may include the following sections:

• Introduction: Statement of the problem.

• Material and Methods: Description of the data and methods that you used for the analysis.

• Results: Explain the results of your analysis in detail. You may cut and paste some of your computer outputs and refer to them in the explanation of your results.

• Conclusion and Discussion: Highlight the main findings and discuss.

Please cut and paste the computer outputs into your report, and do not include any direct computer output as an attachment.

Please note that you also have the option of using a similar data set in your own field of interest.
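A possible starting point in R for the exercise, covering estimation, likelihood-ratio testing, AIC-based model selection and basic diagnostics. Both helper functions are hypothetical. Only the position of DAV in the first column is stated above; reading `final.dat` as whitespace-delimited without a header, and the order of the remaining columns (taken from the variable list), are assumptions to check against the actual file.

```r
# Hypothetical reader: assumes no header and that the columns after DAV
# follow the order of the variable list above
read_depression_data <- function(path) {
  dat <- read.table(path, header = FALSE,
                    col.names = c("DAV", "PCS", "MCS", "BECK", "PGEND", "AGE", "EDUCAT"))
  dat$PGEND <- factor(dat$PGEND, levels = c(0, 1), labels = c("Female", "Male"))
  dat
}

# Hypothetical analysis helper: estimation, testing, selection and diagnostics
analyze_dav <- function(dat) {
  # Full main-effects model for P(DAV = 1)
  full <- glm(DAV ~ PCS + MCS + BECK + PGEND + AGE + EDUCAT,
              data = dat, family = binomial)
  # Likelihood-ratio test for each term, adjusting for all the others
  lrt <- drop1(full, test = "Chisq")
  # Backward elimination by AIC: one possible model-selection strategy
  reduced <- step(full, direction = "backward", trace = 0)
  list(full = full,
       lrt = lrt,
       reduced = reduced,
       odds.ratios = exp(coef(reduced)),
       std.resid = rstandard(reduced),
       cook = cooks.distance(reduced))
}

# Usage, once final.dat has been downloaded:
# res <- analyze_dav(read_depression_data("final.dat"))
# print(res$lrt)
# print(summary(res$reduced))
```

AIC-based backward elimination is only one of several reasonable selection strategies; likelihood-ratio tests on nested models, or subject-matter choices of covariates, are equally valid ways to structure the model-selection section of the report.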

