
StyleGAN1: A Style-Based Generator Architecture for Generative Adversarial Networks

2022-06-23 16:16:00 【Kun Li】

The StyleGAN paper is very insightful and does a great deal of exploration and analysis. In practical terms, though, it makes two main contributions: first, the intermediate latent space W; second, feeding w into every layer of the generator. Real data is not Gaussian-distributed while the input is, so the two are hard to match. W is a learned latent space that can take any shape, so it can match the real data better. Feeding w into every AdaIN operation is what gives it control over the generator.

1. Introduction

The properties of the latent space are still poorly understood, and there is no quantitative way to compare latent-space interpolations across different generators. This paper redesigns the generator architecture and proposes a new way to control image synthesis. The generator starts from a learned constant input and adjusts the "style" of the image at each convolution layer based on the latent code, which makes it possible to directly control the strength of image features at different scales. The discriminator and the loss function are left unchanged, so the new generator fits easily into existing GAN frameworks.

Our generator embeds the input latent code into an intermediate latent space. The input latent space must follow the probability density of the training data, which inevitably leads to some degree of entanglement; the intermediate latent space is free of this restriction and can therefore be disentangled. The paper also proposes two new metrics for quantifying this: perceptual path length and linear separability.

This intermediate latent space is one of StyleGAN's core contributions. Noise is usually sampled from a Gaussian or uniform distribution, but real data does not follow a standard Gaussian. If the noise is Gaussian while the data is not, the two are hard to match. W is a learned latent space that can take any shape, so it can match the real data better.

A simple way to understand the latent code: to classify or generate data well, we need to represent the data's features. But data has many features that are correlated and highly coupled, which makes it hard for a model to work out their relationships and makes learning inefficient. We therefore look for the deeper factors hidden beneath these surface features and decouple them; the resulting hidden features are the latent code. The space formed by latent codes is the latent space, i.e., the sample space of the latent variable z.
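For reference, the two metrics can be written roughly as follows (recalled from the StyleGAN paper; worth checking against the original). Here G = g ∘ f is the full generator (mapping network f, synthesis network g), d is a perceptual image distance (the paper uses an LPIPS-style distance on VGG16 features), t ~ U(0, 1), and ε is a small step (10^-4 in the paper). Path length in Z uses spherical interpolation (slerp), and in W it uses linear interpolation (lerp). For linear separability, a linear SVM is fit per attribute on latent points, X_i is the SVM's predicted class, Y_i is the label from a pretrained attribute classifier, and H is conditional entropy summed over the attributes.

```latex
l_{\mathcal{Z}} = \mathbb{E}\left[\frac{1}{\epsilon^{2}}\, d\Big(G(\mathrm{slerp}(z_1, z_2; t)),\; G(\mathrm{slerp}(z_1, z_2; t+\epsilon))\Big)\right]

l_{\mathcal{W}} = \mathbb{E}\left[\frac{1}{\epsilon^{2}}\, d\Big(g(\mathrm{lerp}(f(z_1), f(z_2); t)),\; g(\mathrm{lerp}(f(z_1), f(z_2); t+\epsilon))\Big)\right]

\text{separability} = \exp\Big(\sum_{i} H(Y_i \mid X_i)\Big)
```

Lower values are better for both: a short path length means the image changes smoothly along the interpolation, and low conditional entropy means each attribute is linearly separable in the latent space.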

2. Style-based generator

The figure above shows StyleGAN's two core ideas. On the left is a traditional GAN generator, which takes a sampled z directly as input. On the right is the style-based generator. First, given a latent code z in the input latent space Z, a nonlinear mapping network maps z to w in the intermediate latent space W; the mapping network consists of 8 fully connected layers. Second, a traditional GAN generator is a single feed-forward chain that receives z only at its first layer, whereas in the style-based generator every convolution layer receives w. "A" denotes a learned affine transformation that turns w into styles y, which then control adaptive instance normalization (AdaIN) after each convolution layer of the synthesis network. In other words, the intermediate latent code w controls the generator through the AdaIN operation at every layer.
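A minimal pure-Python sketch of the mapping network and the affine transform "A" described above. Assumptions, not taken from the text: tiny dimensions (the paper uses 512), random weights standing in for trained ones, leaky ReLU with slope 0.2 as the activation, and a normalization of z before the first layer. All function names are hypothetical.

```python
import math
import random

def pixel_norm(v, eps=1e-8):
    """Rescale z so its mean squared component is about 1 (assumed input normalization)."""
    scale = 1.0 / math.sqrt(sum(x * x for x in v) / len(v) + eps)
    return [x * scale for x in v]

def leaky_relu(x, slope=0.2):
    # Slope 0.2 is an assumption for this sketch.
    return x if x >= 0 else slope * x

def dense(v, weights, bias):
    """Fully connected layer: one weight row per output unit."""
    return [sum(w * x for w, x in zip(row, v)) + b for row, b in zip(weights, bias)]

def random_dense(n_in, n_out, rng):
    """Random weights standing in for trained parameters (hypothetical helper)."""
    weights = [[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out

def mapping_network(z, layers):
    """f: Z -> W, a stack of fully connected layers with leaky ReLU."""
    h = pixel_norm(z)
    for weights, bias in layers:
        h = [leaky_relu(x) for x in dense(h, weights, bias)]
    return h

def affine_style(w, weights, bias):
    """Learned affine transform "A": w -> y = (y_s, y_b), two values per feature map."""
    y = dense(w, weights, bias)
    half = len(y) // 2
    return y[:half], y[half:]

# Usage with tiny dimensions for illustration.
rng = random.Random(0)
dim, n_channels = 16, 4
layers = [random_dense(dim, dim, rng) for _ in range(8)]  # 8 FC layers
z = [rng.gauss(0.0, 1.0) for _ in range(dim)]
w = mapping_network(z, layers)
A = random_dense(dim, 2 * n_channels, rng)  # one "A" per synthesis layer
y_s, y_b = affine_style(w, *A)
```

Each synthesis layer would get its own "A", all reading the same w, which is how a single intermediate code reaches every layer.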

Each feature map x_i is normalized separately and then scaled and biased using the corresponding scalar components of style y: AdaIN(x_i, y) = y_s,i · (x_i − μ(x_i)) / σ(x_i) + y_b,i. The dimensionality of y is therefore twice the number of feature maps in that layer. For the noise inputs, a single-channel image of Gaussian noise is broadcast to all feature maps; "B" applies learned per-channel scaling factors to it, and the result is added to the output of the corresponding convolution. The synthesis network has 18 layers in total (two for each resolution from 4×4 to 1024×1024), and the output of the last layer is converted to RGB with a separate 1×1 convolution.
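The AdaIN formula and the noise block "B" above can be sketched in pure Python as follows. Assumptions: feature maps are represented as a list of channels, each a flat list of pixel values; the convolution itself is omitted; the per-layer order "convolution, then add noise, then AdaIN" is assumed here. Function names are hypothetical.

```python
import math
import random

def adain(x, y_s, y_b, eps=1e-8):
    """AdaIN(x_i, y) = y_s[i] * (x_i - mean(x_i)) / std(x_i) + y_b[i], per channel i."""
    out = []
    for channel, scale, bias in zip(x, y_s, y_b):
        mu = sum(channel) / len(channel)
        sigma = math.sqrt(sum((v - mu) ** 2 for v in channel) / len(channel) + eps)
        out.append([scale * (v - mu) / sigma + bias for v in channel])
    return out

def add_noise(x, per_channel_scale, rng):
    """Block "B": draw ONE single-channel Gaussian noise image, broadcast it to
    every feature map, and multiply it by a learned per-channel factor."""
    noise = [rng.gauss(0.0, 1.0) for _ in range(len(x[0]))]
    return [[v + s * n for v, n in zip(channel, noise)]
            for channel, s in zip(x, per_channel_scale)]

# Usage: a 3-channel 4x4 feature map (16 pixels per channel) standing in
# for a convolution output.
rng = random.Random(0)
x = [[rng.gauss(0.0, 1.0) for _ in range(16)] for _ in range(3)]
x = add_noise(x, per_channel_scale=[0.1, 0.0, 0.5], rng=rng)
out = adain(x, y_s=[2.0, 1.0, 0.5], y_b=[0.0, 1.0, -1.0])
```

After AdaIN, channel i has mean y_b[i] and standard deviation close to y_s[i], which is why y needs exactly two numbers per feature map.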

2.1 Quality of generated images

The table above lists the FID of different generator architectures on the CelebA-HQ and FFHQ datasets; lower FID is better. The baseline (A) is the Progressive GAN generator architecture, and unless otherwise stated, the network and all hyperparameters are inherited from it.

1. First, the baseline is improved to (B) by using bilinear up/downsampling, longer training, and tuned hyperparameters.

2. Adding the mapping network and AdaIN operations gives (C); at this point the network no longer benefits from feeding the latent code into the first convolution layer.

3. The architecture is then simplified by removing the traditional input layer and starting image synthesis from a learned 4×4×512 constant tensor (D).

4. Adding noise inputs further improves the results (E).

5. Finally, mixing regularization (F) decorrelates neighboring styles and enables more fine-grained control over the generated images.
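A minimal sketch of the mixing regularization idea in step 5: two latent codes are mapped to w1 and w2, and at a randomly chosen crossover layer the synthesis network switches from w1 to w2. Assumptions: the 0.9 mixing probability is an illustrative default, and `layer_resolutions` and `mixed_styles` are hypothetical names; the 18-layer layout (two per resolution from 4×4 to 1024×1024) follows the architecture description.

```python
import random

NUM_LAYERS = 18  # two synthesis layers per resolution, 4x4 up to 1024x1024

def layer_resolutions():
    """Resolution handled by each synthesis layer."""
    return [4 * 2 ** (i // 2) for i in range(NUM_LAYERS)]

def mixed_styles(w1, w2, rng, mixing_prob=0.9):
    """Return one w per synthesis layer.

    With probability mixing_prob, use w1 before a random crossover layer and
    w2 from that layer on; otherwise every layer uses w1.
    """
    if rng.random() < mixing_prob:
        crossover = rng.randrange(1, NUM_LAYERS)  # both parts are non-empty
    else:
        crossover = NUM_LAYERS
    return [w1 if i < crossover else w2 for i in range(NUM_LAYERS)]

# Usage: placeholder vectors standing in for two mapped latent codes.
rng = random.Random(0)
w1, w2 = [0.0] * 4, [1.0] * 4
styles = mixed_styles(w1, w2, rng)
```

Because the network cannot assume that adjacent layers share the same w, it learns not to rely on correlations between neighboring styles.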

The figure above shows a set of uncurated images produced by the paper's generator trained on FFHQ. As the FID results suggest, the average quality is high, and even accessories such as glasses and hats are synthesized successfully. For this figure, the so-called truncation trick was used to avoid sampling from the extreme regions of W. The generator allows truncation to be applied selectively to the low resolutions only, so high-resolution details are not affected.
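The truncation trick in W can be sketched as pulling each w toward the average w by a factor ψ: w' = w_avg + ψ(w − w_avg), applied only to the low-resolution layers. Assumptions: ψ = 0.7 and a cutoff of 8 layers are illustrative defaults, w_avg is estimated from samples, and all function names are hypothetical.

```python
import random

def estimate_w_avg(ws):
    """Average of many mapped latent codes, i.e. an estimate of the center of W."""
    n = len(ws)
    return [sum(col) / n for col in zip(*ws)]

def truncate(w, w_avg, psi=0.7):
    """w' = w_avg + psi * (w - w_avg): move w toward the center of W."""
    return [a + psi * (x - a) for x, a in zip(w, w_avg)]

def truncate_per_layer(styles, w_avg, psi=0.7, cutoff=8):
    """Truncate only the first `cutoff` (low-resolution) layers' styles."""
    return [truncate(w, w_avg, psi) if i < cutoff else w
            for i, w in enumerate(styles)]

# Usage: random vectors stand in for f(z) samples.
rng = random.Random(0)
ws = [[rng.gauss(0.0, 1.0) for _ in range(4)] for _ in range(1000)]
w_avg = estimate_w_avg(ws)
styles = [ws[0]] * 18  # the same w fed to all 18 synthesis layers
truncated = truncate_per_layer(styles, w_avg)
```

ψ = 1 disables truncation and ψ = 0 collapses every image to the "average" one; leaving the high-resolution layers untouched keeps fine details intact.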

2.2 Prior art

This section reviews earlier work on improving GANs.

3. Properties of the style-based generator

This section explains the second core idea. The generator architecture makes it possible to control image synthesis by modifying the styles at specific scales. The mapping network and affine transformations can be seen as a way to draw samples for each style from a learned distribution, and the synthesis network as a way to generate an image from a collection of styles. This is why the paper speaks of "styles": a style is an attribute that controls the synthesis. The effect of each style is localized in the network, meaning that modifying a specific subset of the styles can be expected to affect only certain aspects of the image. Why does this happen? The AdaIN operation first normalizes each channel to zero mean and unit variance and only then applies the scale and bias given by the style. The new per-channel statistics dictated by the style change the relative importance of features for the subsequent convolution, but because of the normalization they do not depend on the original statistics. Each style therefore controls only one convolution layer before being overridden by the next AdaIN operation.
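A small pure-Python check of the argument above: instance normalization erases a channel's previous mean and scale, so whatever statistics an earlier style imposed do not survive the next AdaIN. The single-channel representation and function names are assumptions for this sketch.

```python
import math

def instance_norm(channel, eps=1e-8):
    """Normalize one channel to zero mean and (almost exactly) unit variance."""
    mu = sum(channel) / len(channel)
    sigma = math.sqrt(sum((v - mu) ** 2 for v in channel) / len(channel) + eps)
    return [(v - mu) / sigma for v in channel]

def adain_channel(channel, y_s, y_b):
    """AdaIN for a single channel with style scale y_s and bias y_b."""
    return [y_s * v + y_b for v in instance_norm(channel)]

feature = [0.5, -1.0, 2.0, 3.5]
# Same spatial pattern, but with the scale and offset an earlier style might impose.
rescaled = [10.0 * v - 7.0 for v in feature]

a = adain_channel(feature, y_s=1.5, y_b=0.2)
b = adain_channel(rescaled, y_s=1.5, y_b=0.2)
print(max(abs(p - q) for p, q in zip(a, b)))  # close to zero; only eps effects remain
```

The spatial pattern still flows through the network, but its per-channel statistics are reset by each AdaIN, which is what keeps each style's influence local to one layer.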

For the remaining content, see the first CSDN post, which covers it in great detail. The core of StyleGAN comes down to the two points above; the rest of the paper mostly explains and measures them.

边栏推荐

- CA认证和颁发吊销证书

- XML

- Can the hbuilderx light theme be annotated?

- ADB key name, key code number and key description comparison table

- 【TcaplusDB知识库】Tmonitor单机安装指引介绍(二)

- [tcapulusdb knowledge base] Introduction to tmonitor background one click installation (II)

- Sleuth + Zipkin

- A tour of gRPC:01 - 基础理论

- Uniapp sends picture messages to Tencent instant messaging Tim

- 多年亿级流量下的高并发经验总结,都毫无保留地写在了这本书中

猜你喜欢

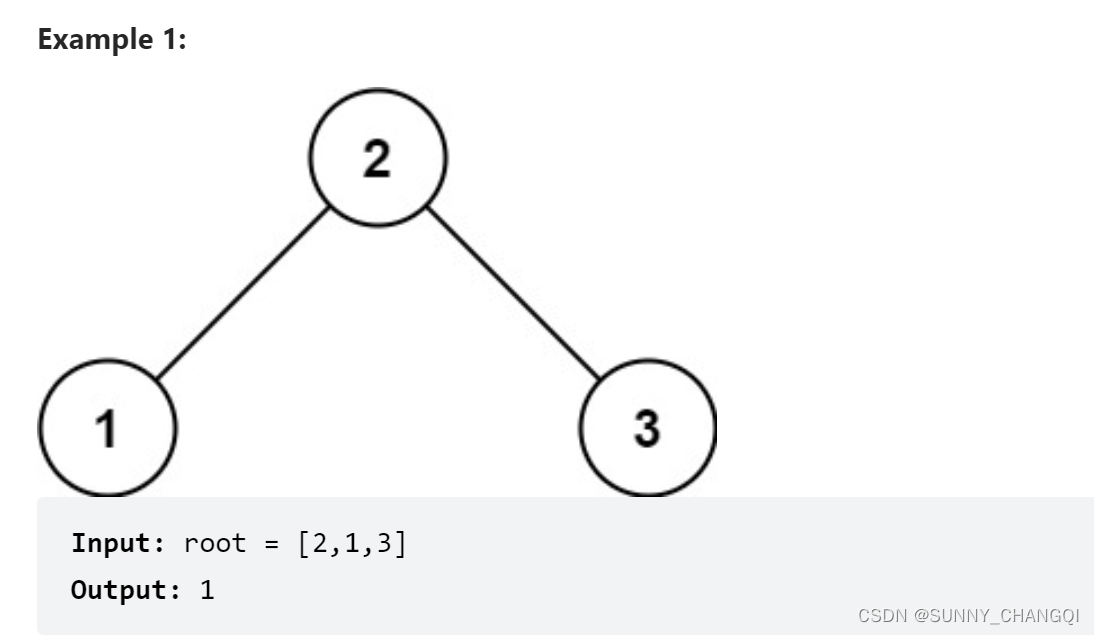

513. Find Bottom Left Tree Value

Innovation strength is recognized again! Tencent security MSS was the pioneer of cloud native security guard in 2022

![[tcapulusdb knowledge base] Introduction to tmonitor stand-alone installation guidelines (II)](/img/6d/8b1ac734cd95fb29e576aa3eee1b33.png)

[tcapulusdb knowledge base] Introduction to tmonitor stand-alone installation guidelines (II)

Thread pool

《ThreadLocal》

golang二分查找法代码实现

Quartz

多年亿级流量下的高并发经验总结,都毫无保留地写在了这本书中

安全舒适,全新一代奇骏用心诠释老父亲的爱

How did Tencent's technology bulls complete the overall cloud launch?

随机推荐

[tcapulusdb knowledge base] Introduction to tmonitor stand-alone installation guidelines (II)

【TcaplusDB知识库】TcaplusDB Tmonitor模块架构介绍

Golang对JSON文件的读写操作

机器人方向与高考选专业的一些误区

Object

Web篇_01 了解web开发

Do you understand Mipi c-phy protocol? One of the high-speed interfaces for mobile phones

Apache commons tool class

ADB 按鍵名、按鍵代碼數字、按鍵說明對照錶

OutputDebugString使用说明以及异常处理

R语言使用magick包的image_scale函数对图像进行缩放(resize)、可以自定义从宽度或者高度角度进行缩放

Apple iPhone, Samsung mobile phone and other electronic products began to enter Russia through parallel import channels

Web容器是怎样给第三方插件做初始化工作的

Example of if directly judging data type in JS

MySQL中json_extract函数说明

阻塞、非阻塞、多路复用、同步、异步、BIO、NIO、AIO 一文搞定

企业想上MES系统?还得满足这些条件

Web篇_01 了解web開發

创建好后的模型,对Con2d, ConvTranspose2d ,以及归一化BatchNorm2d函数中的变量进行初始化

解决:在验证阶段,第一个batch不会报错,第二个batch报cuda超出的错误

https://zhuanlan.zhihu.com/p/263554045